When we want to use our familiar tools and workflows from software development for data science and machine learning projects, we quickly run into problems. Data science and machine learning model building follow a different process than the classic software development process, which is fairly linear.

When I create a branch in software development, I have a clear goal in mind of what the outcome of that branch will be: I want to fix a bug, develop a user story, or revise a component. I start working on this defined task. Then, once I upload my code to the version control system, automated tests run and one or more team members perform a code review. I usually do another round to incorporate the review comments. When all issues are fixed, my branch is merged into the main branch and the CI/CD pipeline starts running; a normal development process. In summary, the majority of the branches I create are eventually merged and deployed to a production environment.

In the area of machine learning and data science, things are different. Instead of a linear and almost “mechanical” development process, the process here is very much driven by experiments. Experiments can fail; that is the nature of an experiment. I also often start an experiment precisely with the goal of disproving a thesis. Now, any training of a machine learning model is an experiment and an attempt to achieve certain results with a specific model and algorithm configuration and data set. If we imagine that for a better overview we manage each of these experiments in a separate branch, we will get very many branches very quickly. Since the majority of my experiments will not produce the desired result, I will discard many branches. Only a few of my experiments will ever make it into a production environment. But still, I want to have an overview of what experiments I have already done and what the results were so that I can reproduce and reuse them in the future.

But that’s not the only difference between traditional software development and machine learning model development. Another difference is behavior over time.

ML models deteriorate over time

Classic software works just as well after a month as it did on day one. Of course, memory and compute requirements may change, and of course bugs will occur, but the basic behavioral characteristics of the production software do not change. With machine learning models, it’s different: their quality decreases over time. A model that operates in a production environment and is not retrained will degrade and will eventually no longer reach the predictive accuracy it had on day one.

Concept drift is to blame [1]. The world outside our machine learning system changes and so does the data that our model receives as input values. Different types of concept drift occur: data can change gradually, for example, when a sensor becomes less accurate over a long period of time due to wear and tear and shows an ever-increasing deviation from the actual measured value. Cyclical events such as seasons or holidays can also have an effect if we want to predict sales figures with our model.

But concept drift can also occur very abruptly: If global air traffic is brought to a standstill by COVID-19, then our carefully trained model for predicting daily passenger traffic will deliver poor results. Or if the sales department launches an Instagram promotion without notice that leads to a doubling of buyers of our vitamin supplement, that’s a good result, but not something our model is good at predicting.

There are two ways to counteract this deterioration in prediction quality: either we enable our model to actively retrain itself in the production environment, or we update our model frequently, ideally as often as we possibly can. We may also have made a necessary adjustment to an algorithm or introduced a new model that needs to be rolled out as quickly as possible.

So in our machine learning workflow, our goal is not just to deliver models to the user. Instead, our goal must be to build infrastructure that quickly informs our team when a model is providing incorrect predictions and enables the team to lift a new, better model into production environments as quickly as possible.

MLOps as DevOps for Machine Learning

We have seen that data science and machine learning model building require a different process than traditional, “linear” software development. It is also necessary that we achieve a high iteration speed in the development of machine learning models, in order to counteract concept drift. For this reason, it is necessary that we create a machine learning workflow and a machine learning platform to help us with these two requirements. This is a set of tools and processes that are to our machine learning workflow what DevOps is to software development: A process that enables rapid but controlled iteration in development supported by continuous integration, continuous delivery, and continuous deployment. This allows us to quickly and continuously bring high-quality machine learning systems into production, monitor their performance, and respond to changes. We call this process MLOps [2] or CD4ML (Continuous Delivery for Machine Learning) [3].

MLOps also provides us with other benefits: Through reproducible pipelines and versioned data, we create consistency and repeatability in the training process as well as in production environments. These are necessary prerequisites to implement business-critical ML use cases and to establish trust in the new technology among all stakeholders.

In the enterprise environment, we have a whole set of requirements that need to be implemented and adhered to in addition to the actual use case. There are privacy, data security, reproducibility, explainability, non-discrimination, and various compliance policies that may differ from company to company. If we leave these additional challenges for each team member to solve individually, we will create redundant, inconsistent and simply unnecessary processes. A unified machine learning workflow can provide a structure that addresses all of these issues, making each team member’s job easier.

Due to the experimental and iterative nature of machine learning, each step in the process that can be automated has a significant positive impact on the overall run time of the process from data to productive model. A machine learning platform allows data scientists and software engineers to focus on the critical aspects of the workflow and delegate the routine tasks to the automated workflows. But what sub-steps and tools can a platform for MLOps be built from?

Components of an MLOps pipeline

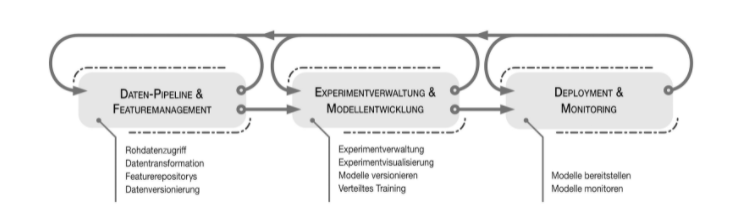

An MLOps workflow can be roughly divided into three areas:

- Data pipeline and feature management

- Experiment management and model development

- Deployment and monitoring

In the following, I describe the individual areas and present a selection of tools that are suitable for implementing the workflow. Of course, this selection is neither exhaustive nor representative: the landscape is developing so rapidly that any overview can only be a snapshot.

Data pipeline and feature management

As hackneyed as slogans like “data is the new oil” may seem, they have a kernel of truth: The first step in any machine learning and data science workflow is to collect and prepare data.

Centralized access to raw data

Companies with modern data warehouses or data lakes have a distinct advantage when developing machine learning products. Without a centralized point to collect and store raw data, finding appropriate data sources and ensuring access to that data is one of the most difficult steps in the lifecycle of a machine learning project in larger organizations.

Centralized access can be implemented here in the form of a Hadoop-based platform. However, for smaller data volumes, a relational database such as Postgres [4] or MySQL [5], or a document store based on the Elastic (ELK) stack [6], is also perfectly adequate. The major cloud providers also offer their own products for centralized raw data management: Amazon Redshift [7], Google BigQuery [8] or Microsoft Azure Cosmos DB [9].

In any case, it is necessary that we first archive a canonical form of our original data before applying any transformation to it. This gives us an unmodified dataset of original data that we can use as a starting point for processing.

Even at this point in the workflow, it is important to rely on good documentation and to document the sources of the data, its meaning, and where it is stored. Even though this step seems simple, it is still of utmost importance. Invalid data, the wrong naming of a column of data, or a misconfigured scraping job can lead to a lot of frustration and wasted time.

Data Transformation

Rarely will we train our machine learning model directly on raw data. Instead, we generate features from the raw data. In the context of machine learning, a feature is one or more processed data attributes that an algorithm uses to make predictions. This could be a temperature value, for example, but in the case of deep learning applications also highly abstract features in images. To extract features from raw data, we will apply various transformations. We will typically define these transformations in code, although some ETL tools also allow us to define them using a graphical interface. Our transformations will either be run as batch jobs on larger sets of data, or we will define them as long-running streaming applications that continuously transform data.
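To make the difference between batch and streaming transformations concrete, here is a minimal sketch in plain Python. The field names (`temp_f`, `weekday`) and the two derived features are assumptions chosen purely for illustration. The point is that the same transformation function can be applied to a complete dataset at once or to records as they arrive.

```python
from typing import Iterable, Iterator


def to_features(record: dict) -> dict:
    """Turn one raw reading into model features (hypothetical fields)."""
    celsius = (record["temp_f"] - 32.0) * 5.0 / 9.0
    return {"temp_c": round(celsius, 2), "is_weekend": record["weekday"] >= 5}


def transform_batch(records: list) -> list:
    """Batch job: transform a complete dataset in one go."""
    return [to_features(record) for record in records]


def transform_stream(records: Iterable[dict]) -> Iterator[dict]:
    """Streaming job: transform records lazily, one at a time."""
    for record in records:
        yield to_features(record)


raw = [{"temp_f": 68.0, "weekday": 2}, {"temp_f": 50.0, "weekday": 6}]
batch_result = transform_batch(raw)
stream_result = list(transform_stream(iter(raw)))
```

Keeping the per-record logic in one function means that batch and streaming jobs cannot silently diverge in how they compute a feature.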

We also need to split our dataset into training and test datasets. To train a machine learning model, we need a set of training data. To test how our model performs on previously unseen data, we need another, structurally identical set of test data. Therefore, we split our transformed dataset into two datasets. These must not overlap, meaning that no data point may appear in both. A common split is to use 70 percent of the dataset for training and 30 percent for testing the model.

The exact split of the data sets depends on the context. For time-series data, sequential slices from the series should be chosen, while for image processing, random images from the data set should be chosen since they have no sequential relation to each other.
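Both split strategies can be sketched in a few lines of plain Python. This is only an illustration under simple assumptions: the data fits into memory as a list, the 70/30 ratio from above is used as the default, and the function names are made up for this example. A fixed seed keeps the random split reproducible.

```python
import random


def random_split(rows, test_fraction=0.3, seed=42):
    """Shuffle a copy of the rows reproducibly and return (train, test)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def sequential_split(rows, test_fraction=0.3):
    """Keep the order: the earliest rows train, the latest rows test."""
    n_test = int(round(len(rows) * test_fraction))
    cut = len(rows) - n_test
    return list(rows[:cut]), list(rows[cut:])


data = list(range(10))
train, test = random_split(data)               # e.g. for images
ts_train, ts_test = sequential_split(data)     # e.g. for time series
```

In both cases every data point ends up in exactly one of the two sets, so training and test data do not overlap.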

For non-sequential data, the individual data points can also be placed in a (pseudo-)random order. We also want to perform this process in a reproducible and automated manner rather than manually. A convenient tool for managing and coordinating these steps is Apache Airflow [10]. Following the “pipeline as code” principle, we can define pipelines in the form of a data flow graph, connect a wide variety of systems, and thus perform the desired transformations.
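The idea behind “pipeline as code” can be illustrated without any orchestration tool. The following sketch deliberately does not use the Airflow API; it only uses `graphlib` from the Python standard library (Python 3.9 or newer) to run tasks in dependency order. The task names and the sample values are invented for this example.

```python
from graphlib import TopologicalSorter


def extract(ctx):
    # Stand-in for reading from a raw data source.
    ctx["raw"] = [" 21.5", "19.0 ", "bad", "23.1"]


def clean(ctx):
    # Drop values that cannot be parsed as numbers.
    values = []
    for item in ctx["raw"]:
        try:
            values.append(float(item))
        except ValueError:
            continue
    ctx["clean"] = values


def build_features(ctx):
    values = ctx["clean"]
    ctx["features"] = {"mean_temp": sum(values) / len(values), "n": len(values)}


TASKS = {"extract": extract, "clean": clean, "build_features": build_features}
# Each task maps to the set of tasks that must finish before it starts.
DEPENDENCIES = {"clean": {"extract"}, "build_features": {"clean"}}


def run_pipeline(tasks, dependencies):
    """Run all tasks in an order that respects the dependency graph."""
    ctx = {}
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        tasks[name](ctx)
    return order, ctx


order, ctx = run_pipeline(TASKS, DEPENDENCIES)
```

Because the graph is ordinary code, it can be versioned, reviewed, and tested like any other part of the project. A real orchestrator adds scheduling, retries, and monitoring on top of this basic idea.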

Feature repositories

Many machine learning models and systems within a company use the same or at least similar features. Once we have extracted a feature from our raw data, there is a high probability that this feature can be useful for other applications as well. Therefore, it can be useful not to have to implement feature extraction again for each application. For this, we can store known features in a feature store. This can be done either in a dedicated component such as Feast [11], or in well-documented database tables populated by appropriate transformations. These transformations can in turn be automated with Apache Airflow.
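The reuse idea behind a feature store can be sketched with a tiny in-memory class. This is not the Feast API, just a hypothetical stand-in: features are registered once with a name and a description, computed once per entity, and then read by several applications. The customer data and feature names are made up.

```python
class FeatureStore:
    """Tiny in-memory stand-in for a feature store (not the Feast API)."""

    def __init__(self):
        self._definitions = {}  # feature name -> (description, function)
        self._values = {}       # (feature name, entity id) -> value

    def register(self, name, description, fn):
        """Add a documented feature definition; names must be unique."""
        if name in self._definitions:
            raise ValueError(f"feature {name!r} is already registered")
        self._definitions[name] = (description, fn)

    def materialize(self, entity_id, raw_record):
        """Compute every registered feature for one entity and store it."""
        for name, (_, fn) in self._definitions.items():
            self._values[(name, entity_id)] = fn(raw_record)

    def get(self, entity_id, names):
        """Read stored feature values without recomputing them."""
        return {name: self._values[(name, entity_id)] for name in names}


store = FeatureStore()
store.register("order_count", "Number of orders placed", lambda r: len(r["orders"]))
store.register("total_spend", "Sum of all order values", lambda r: sum(r["orders"]))
store.materialize("customer-1", {"orders": [20.0, 35.0, 5.0]})

# Two different (hypothetical) applications read the same stored features.
churn_features = store.get("customer-1", ["order_count", "total_spend"])
upsell_features = store.get("customer-1", ["total_spend"])
```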

Data versioning

In addition to code versioning, data versioning is useful in a machine learning context. This allows us to increase the reproducibility of our experiments and to validate our models and their predictions by retracing the exact state of a training dataset that was used at a given time. Tools such as DVC [12] or Pachyderm [13] can be used for this purpose.
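The core idea of data versioning can be shown with a content hash. The sketch below is an assumption-laden simplification and not how DVC or Pachyderm work internally: it serializes a small in-memory dataset in a canonical form and derives a short version identifier from it, which can then be stored alongside an experiment.

```python
import hashlib
import json


def dataset_version(rows):
    """Return a short, content-based version id for a list of records.

    Key order inside a record does not matter, row order does.
    """
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


v1 = dataset_version([{"id": 1, "temp_c": 20.0}, {"id": 2, "temp_c": 10.0}])
v1_again = dataset_version([{"temp_c": 20.0, "id": 1}, {"temp_c": 10.0, "id": 2}])
v2 = dataset_version([{"id": 1, "temp_c": 20.0}, {"id": 2, "temp_c": 10.5}])

# Storing the version with the experiment lets us retrace the exact data later.
experiment_record = {"model": "baseline", "data_version": v1}
```

Any change to a single value yields a different identifier, so two experiments with the same identifier are known to have used the same data.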

Experiment management and model development

In order to deploy an optimal model into production, we need to create a process that enables the development of that optimal model. To do this, we need to capture and visualize information that enables the decision of what the optimal model is, since in most cases this decision is made by a human and not automated.

Since the data science process is very experiment-driven, multiple experiments are run in parallel, often by different people at the same time. And most will not be deployed in a production environment. The experimental approach in this phase of research is very different from the “traditional” software development process, as we can expect that the code for these experiments will be discarded in the majority of cases, and only some experiments will reach a production status.

Experiment management and visualizations

Running hundreds or even thousands of iterations on the way to an optimally trained ML model is not uncommon. In the process, a large number of parameters defining each experiment, along with the results of that experiment, accumulate. Often, this metadata is stored in Excel spreadsheets or, in the worst case, in the heads of team members. However, to establish reproducibility, avoid time-consuming duplicate experiments, and enable good collaboration, this data should be captured automatically. Possible tools here are MLflow Tracking [14] or Sacred [15]. To visualize the output metrics, either classical dashboards like Grafana [16] or specialized tools like TensorBoard [17] can be used. TensorBoard can also be used independently of TensorFlow; for example, PyTorch provides a compatible logging library [18]. However, there is still much room for optimization and experimentation here. For example, combining these with other tools from the DevOps environment, such as Jenkins [19] and Terraform [20], would also be conceivable.
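What automatic capture means in practice can be sketched with the standard library alone. The following example is not the MLflow or Sacred API; it is a hypothetical minimal tracker that appends one JSON record per run to a log file and later picks the best run by a metric. The parameters and accuracy values are invented.

```python
import json
import tempfile
import time
import uuid
from pathlib import Path


def log_run(log_path, params, metrics):
    """Append one experiment record as a JSON line and return its run id."""
    record = {
        "run_id": uuid.uuid4().hex,
        "timestamp": time.time(),
        "params": params,
        "metrics": metrics,
    }
    with Path(log_path).open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(record) + "\n")
    return record["run_id"]


def load_runs(log_path):
    """Read all recorded runs back from the log file."""
    with Path(log_path).open(encoding="utf-8") as fh:
        return [json.loads(line) for line in fh if line.strip()]


def best_run(runs, metric, higher_is_better=True):
    """Pick the run with the best value for the given metric."""
    pick = max if higher_is_better else min
    return pick(runs, key=lambda run: run["metrics"][metric])


# Usage with made-up values, written to a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    log_file = Path(tmp) / "runs.jsonl"
    log_run(log_file, {"learning_rate": 0.1, "depth": 3}, {"accuracy": 0.81})
    log_run(log_file, {"learning_rate": 0.01, "depth": 5}, {"accuracy": 0.86})
    runs = load_runs(log_file)
    winner = best_run(runs, "accuracy")
```

Dedicated tracking tools add a user interface, artifact storage, and comparison views, but the recorded information is essentially the same: parameters in, metrics out, one record per run.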

Version control for models

In addition to the results of our experiments, the trained models themselves can also be captured and versioned. This allows us to more easily roll back to a previous model in a production environment. Models can be versioned in several ways: In the simplest variant, we export our trained model in serialized form, for example as a Python pickle (.pkl) file. We then store this file in a suitable version control system (Git or DVC), depending on its size.
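The simplest variant can be sketched as follows. The “model” here is just a dictionary of made-up weights standing in for a trained model object, and naming the file after a hash of its content is an assumption of this example, not a convention of Git or DVC.

```python
import hashlib
import pickle
import tempfile
from pathlib import Path


def save_model(model, directory):
    """Serialize the model and name the file after a hash of its bytes."""
    payload = pickle.dumps(model, protocol=4)
    version = hashlib.sha256(payload).hexdigest()[:12]
    path = Path(directory) / f"model-{version}.pkl"
    path.write_bytes(payload)
    return version, path


def load_model(path):
    """Load a model file. Only unpickle files from a trusted source."""
    return pickle.loads(Path(path).read_bytes())


model = {"weights": [0.5, -1.2], "bias": 0.1}  # placeholder for a trained model
with tempfile.TemporaryDirectory() as tmp:
    version, path = save_model(model, tmp)
    restored = load_model(path)
    same_version, _ = save_model(model, tmp)
```

Rolling back then amounts to loading the file with the previous version identifier. Note that pickle files can execute arbitrary code when loaded, so they should only be exchanged within a trusted environment.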

Another option is to provide a central model registry. For example, the MLflow Model Registry [21] or the model registry of one of the major cloud providers can be used here. Also, the model can be packaged in a Docker container and managed in a private Docker Registry [22].

Infrastructure for distributed training

Smaller ML models can usually still be trained on one’s own laptop with reasonable effort. However, as soon as the models become larger and more complex, this is no longer possible and a central on-premise or cloud server becomes necessary for training. For automated training in such an environment, it makes sense to build a model training pipeline.

This pipeline is executed with training data at specific times or on demand. It receives the configuration that defines a training cycle, for example the model type, the hyperparameters, and the features to use. The pipeline can obtain the training dataset automatically from the feature store and distribute it to all model variants to be trained in parallel. Once training is complete, the model files, original configuration, learned parameters, metadata, and timings are captured in the experiment and model tracking tools. One possible tool for building such a pipeline is Kubeflow [23]. Kubeflow provides a number of useful features for automated training and for (cost-)efficient resource management based on Kubernetes.
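A heavily simplified version of such a training pipeline might look like the sketch below. It does not use Kubeflow; it trains several variants of a toy one-parameter ridge regression in parallel threads and records the configuration, the learned parameter, a metric, and the timing for each. The configuration keys and the data are assumptions for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def train_one(config, data):
    """Fit y = w * x with L2 regularization and report what was learned."""
    start = time.perf_counter()
    l2 = config["l2"]
    weight = sum(x * y for x, y in data) / (sum(x * x for x, _ in data) + l2)
    mse = sum((y - weight * x) ** 2 for x, y in data) / len(data)
    return {
        "config": config,
        "weight": weight,
        "mse": mse,
        "seconds": time.perf_counter() - start,
    }


def run_training_pipeline(configs, data, max_workers=4):
    """Train all configured variants in parallel, keeping the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(lambda config: train_one(config, data), configs))


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
configs = [{"model": "ridge", "l2": 0.0}, {"model": "ridge", "l2": 14.0}]
results = run_training_pipeline(configs, data)
```

In a real setup, each result record would be handed to the experiment tracking and model versioning tools rather than kept in a list.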

Deployment and monitoring

Unless our machine learning project is purely a proof of concept or an academic project, we will eventually need to lift our model into a production environment. And that’s not all: once it gets there, we’ll need to monitor it and deploy a new version as needed. In many cases, we will also have not just one model, but a whole set of models in our production environments.

Deploy models

On a technical level, any model training pipeline must produce an artifact that can be deployed into a production environment. The prediction results may be bad, but the model itself must be in a packaged state that allows it to be deployed directly into a production environment. This is a familiar idea from software development: continuous delivery. This packaging can be done in two different ways.

Either our model is deployed as a separate service and accessed by the rest of our systems via an interface. Here, the deployment can be done, for example, with TensorFlow Serving [24] or in a Docker container with a matching (Python) web server.
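As a rough sketch of the second option, a model can be wrapped in a small HTTP service using only the Python standard library. The linear model with fixed weights, the JSON payload format, and the port are assumptions for this example; a production service would add input validation, error handling, and authentication.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

MODEL = {"weights": [0.5, -0.25], "bias": 1.0}  # hypothetical linear model


def predict(features):
    """Compute bias + sum(weight * feature) for one input vector."""
    return MODEL["bias"] + sum(w * x for w, x in zip(MODEL["weights"], features))


def handle_request(body: bytes) -> bytes:
    """Translate a JSON request body into a JSON response body."""
    payload = json.loads(body)
    result = {"prediction": predict(payload["features"])}
    return json.dumps(result).encode("utf-8")


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        response = handle_request(self.rfile.read(length))
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.end_headers()
        self.wfile.write(response)


def serve(port=8000):
    """Start the service; blocks until the process is stopped."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

Calling `serve()` starts the service. Other systems then only need to know the interface, here a POST request with a body such as `{"features": [2.0, 4.0]}`, and not the model's internals.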

An alternative is to embed the model in our existing system. Here, the model files are loaded and input and output data are routed within the existing system. The problem is that the model must be in a compatible format, or a conversion must be performed before deployment. If such a conversion is performed, automated tests are essential: they must ensure that the original and the converted model deliver identical prediction results.
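Such an equivalence test could be sketched as follows. The three model functions are placeholders: one original, one converted model whose weight has been stored with reduced precision, and one broken conversion that lost its bias term. Since conversions often change numeric precision slightly, the sketch compares within a small absolute tolerance; setting `abs_tol=0.0` would demand exact equality.

```python
import math


def find_mismatches(original, converted, samples, abs_tol=1e-6):
    """Return (input, original output, converted output) for every deviation."""
    mismatches = []
    for x in samples:
        a, b = original(x), converted(x)
        if not math.isclose(a, b, rel_tol=0.0, abs_tol=abs_tol):
            mismatches.append((x, a, b))
    return mismatches


def original_model(x):
    return 0.123456789 * x + 0.5


def converted_model(x):
    # Same model, weight stored with reduced precision.
    return 0.123457 * x + 0.5


def broken_model(x):
    # A faulty conversion that dropped the bias term.
    return 0.123457 * x


samples = [0.0, 1.0, 2.0]
ok = find_mismatches(original_model, converted_model, samples)
bad = find_mismatches(original_model, broken_model, samples)
```

Run as part of the deployment pipeline, a non-empty result would stop the converted model from being released.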

Monitoring

A data science project does not end with the deployment of a model into production. Even a production model has to face many challenges. The distribution of our input values in the real world may differ from the one represented in the training data. Value distributions can also change slowly over time or abruptly due to singular events. This then requires retraining with the changed data.
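One simple way to notice such a shift is to compare a recent window of input values against the training data. The sketch below uses a deliberately basic heuristic, the distance between the two means measured in standard errors, with an assumed threshold of 3. It is meant as an illustration, not as a recommended drift detector, and the sample values are invented.

```python
import math
import statistics


def mean_shift_score(training_values, live_values):
    """Distance between live mean and training mean, in standard errors."""
    mu = statistics.fmean(training_values)
    sigma = statistics.stdev(training_values)
    standard_error = sigma / math.sqrt(len(live_values))
    return abs(statistics.fmean(live_values) - mu) / standard_error


def needs_retraining(training_values, live_values, threshold=3.0):
    """Flag a window whose mean deviates strongly from the training data."""
    return mean_shift_score(training_values, live_values) > threshold


training = [10, 11, 9, 10, 12, 8, 10, 11, 9, 10]
stable_window = [10, 9, 11, 10]
shifted_window = [15, 16, 14, 15]

stable_flag = needs_retraining(training, stable_window)
shifted_flag = needs_retraining(training, shifted_window)
```

A check like this only looks at one feature and one statistic. It can still serve as a cheap first signal that retraining should be considered.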

Also, despite intensive testing, errors may have crept in during the previous steps. For this reason, infrastructure should be provided to continuously collect data on model performance. The input values and the resulting predictions of the model should also be recorded, as far as this is compatible with the applicable data protection regulations. On the other hand, if privacy considerations are only introduced at this point, one has to ask how a sufficient amount of training data could be collected without questionable privacy practices.

Here’s a sampling of basic information we should be collecting about our machine learning system in production:

- How many times did the model make a prediction?

- How long does it take the model to perform a prediction?

- What is the distribution of the input data?

- What features were used to make the prediction?

- What results were predicted and what real results were observed later in the system?
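The questions above can be answered by wrapping the model in a thin layer that records what it sees. The following sketch keeps everything in memory and uses made-up feature names and a toy prediction function. In a real system, the recorded values would be shipped to a monitoring backend instead.

```python
import time
from collections import defaultdict


class MonitoredModel:
    """Wraps a prediction function and records basic monitoring data."""

    def __init__(self, predict_fn, feature_names):
        self._predict_fn = predict_fn
        self.feature_names = feature_names
        self.count = 0                   # how many predictions were made
        self.latencies = []              # seconds per prediction
        self.inputs = defaultdict(list)  # feature name -> observed values
        self.predictions = {}            # request id -> predicted value
        self.actuals = {}                # request id -> value observed later

    def predict(self, request_id, features):
        start = time.perf_counter()
        result = self._predict_fn(features)
        self.latencies.append(time.perf_counter() - start)
        self.count += 1
        for name, value in zip(self.feature_names, features):
            self.inputs[name].append(value)
        self.predictions[request_id] = result
        return result

    def record_actual(self, request_id, value):
        """Store the real outcome once it becomes known."""
        self.actuals[request_id] = value

    def mean_absolute_error(self):
        """Compare predictions with the outcomes recorded so far."""
        pairs = [
            (self.predictions[key], actual)
            for key, actual in self.actuals.items()
            if key in self.predictions
        ]
        if not pairs:
            return None
        return sum(abs(p - a) for p, a in pairs) / len(pairs)


model = MonitoredModel(lambda f: 2.0 * f[0] + f[1], ["temperature", "humidity"])
model.predict("req-1", [1.0, 0.5])
model.predict("req-2", [2.0, 1.0])
model.record_actual("req-1", 3.0)
model.record_actual("req-2", 5.0)
```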

Tools such as Logstash [25] or Prometheus [26] can be used to collect our monitoring data. To get a quick overview of the performance of the model, it is recommended to set up a dashboard that visualizes the most important metrics and to set up an automatic notification system that alerts the team in case of strong deviations so that appropriate countermeasures can be taken if needed.

Challenges on the road to MLOps

On the road to a successful machine learning strategy, companies face numerous challenges, both in staffing and within their teams. There is also the financial challenge of attracting experienced software engineers and data scientists. But even if we manage to assemble a good team, we need to enable them to work together in the best possible way to bring out the strengths of each team member. Generally speaking, data scientists feel very comfortable using various statistical tools, machine learning algorithms, and Jupyter notebooks. However, they are often less familiar with version control software and testing tools that are widely used in software engineering. While software engineers are familiar with these tools, they often lack the expertise to choose the right algorithm for a problem or to extract the last five percent of predictive accuracy from a model through skillful optimizations. Our workflows and processes must be designed to support both groups as well as possible and enable smooth collaboration.

In terms of technological challenges, we face a broad and dynamic technology landscape that is constantly evolving. In light of this confusing situation, we are often faced with the question of how to get started with new machine learning initiatives.

How do I get started with MLOps?

Building MLOps workflows must always be an evolutionary process and cannot be done in a one-time “big bang” approach. Each company has its own unique set of challenges and needs when developing its machine learning strategies. In addition, there are different users of machine learning systems, different organizational structures, and existing application landscapes. One possible approach here may be to create an initial prototype without ML components. Then, one should start building the infrastructure and simplest models.

From this starting point, the infrastructure created can then be used to move forward in small and incremental steps to more complicated models and lift them into production environments until the desired level of predictive accuracy and performance has been achieved. Short development cycles for machine learning models, in the range of days rather than weeks or even months, enable faster response to changing circumstances and data. However, such short iteration cycles can only be achieved with a high degree of automation.

Developing machine learning models, putting them into production, and keeping them running there is itself a complex and iterative process with many inherent challenges. Even on a small or experimental scale, many companies find it difficult to implement these processes cleanly and without failures. The data science and machine learning development process is particularly challenging in that it requires careful balancing of the iterative, exploratory components and the more linear engineering components.

The tools and processes for MLOps presented in this article are an attempt to provide structure to these development processes.

Although there are no proven and standardized processes for MLOps yet, we can take many lessons learned from “traditional” software engineering. In many teams there is a division between data scientists and software engineers. This often leads to a pragmatic approach: data scientists develop a model in a Jupyter notebook and throw this notebook over the fence to software engineering, which then brings the model into production with a DevOps approach.

If we think back a few years, “throwing it over the fence” is exactly the problem that gave rise to DevOps. This is exactly how Werner Vogels (CTO at Amazon) described the separation between development and operations in his famous interview in 2006 [27]: “The traditional model is that you take your software to the wall that separates development and operations, and throw it over and then forget about it. Not at Amazon.” Then came the phrase that looks good on DevOps conference T-shirts, coffee mugs and motivational posters: “You build it, you run it.” As naturally as development and operations belong together today, we must also make the collaboration between data science and DevOps a matter of course.

Links & Literature

[1] Tsymbal, Alexey: “The problem of concept drift. Definitions and related work. Technical Report”: https://www.scss.tcd.ie/publications/tech-reports/reports.04/TCD-CS-2004-15.pdf

[3] https://martinfowler.com/articles/cd4ml.html

[4] https://www.postgresql.org

[6] https://www.elastic.co/de/elasticsearch/

[7] https://aws.amazon.com/redshift/

[8] https://cloud.google.com/bigquery

[9] https://docs.microsoft.com/de-de/azure/cosmos-db/

[10] https://airflow.apache.org

[11] https://feast.dev

[12] https://dvc.org

[13] https://www.pachyderm.com

[14] https://www.mlflow.org/docs/latest/tracking.html

[15] https://github.com/IDSIA/sacred

[16] https://grafana.com

[17] https://www.tensorflow.org/tensorboard

[18] https://pytorch.org/docs/stable/tensorboard.html

[21] https://www.mlflow.org/docs/latest/model-registry.html

[22] https://docs.docker.com/registry/deploying/

[24] https://www.tensorflow.org/tfx/guide/serving

[25] https://www.elastic.co/de/logstash

[27] https://queue.acm.org/detail.cfm?id=1142065