In principle, Tribuo is a machine learning library intended to help close, at least to some extent, the feature gap between Python and Java in the field of artificial intelligence.

According to the announcement, the library, which is licensed under the (very liberal) Apache 2.0 license, looks back on several years of use within Oracle. This shows in the breadth of its functionality: in addition to training its “own” models, it also offers interfaces to various other libraries, including TensorFlow.

The author and editors are aware that machine learning cannot be covered exhaustively in a single article. Given the importance of the topic, however, we want to show you a little of how you can experiment with Tribuo.


Modular library structure

Machine learning applications are not usually among the tasks you run on resource-constrained systems; IoT edge devices that run ML workloads usually have a hardware accelerator, such as the Sipeed MAix boards. Nevertheless, Oracle’s Tribuo library is modular, so in theory developers can include only those parts of the project that their solutions really need. An overview of the functions provided in the individual packages can be found at https://tribuo.org/learn/4.0/docs/packageoverview.html.

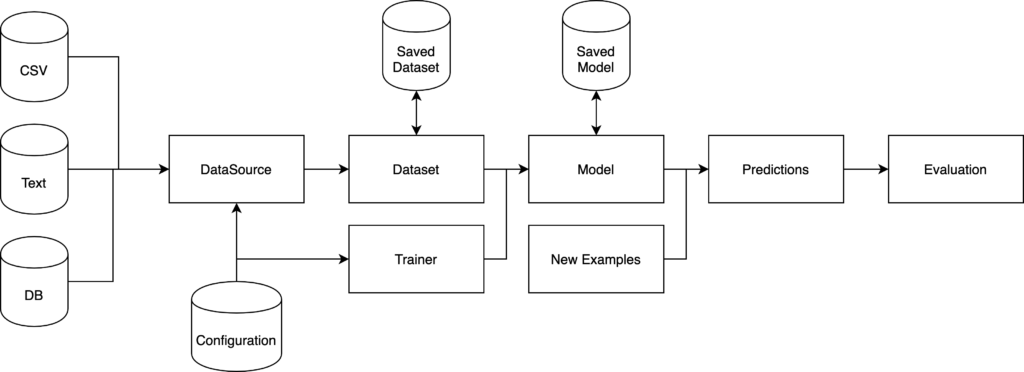

In this introductory article, we do not want to delve further into modularization, but instead, program a little on the fly. A Tribuo-based artificial intelligence application generally has the structure shown in Figure 1.

The figure shows that Tribuo aims to handle the whole process from A to Z itself. On the far left is a DataSource object that collects the information to be processed and converts it into Tribuo’s own storage format, the Example. These Example objects are held in a Dataset instance, from which a Trainer builds a Model that delivers predictions. Finally, an Evaluator compares these predictions, which are often probability-based, against the known correct answers and summarizes how well the model performs.
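To make these roles concrete, here is a deliberately tiny sketch in plain Java. All types in it (MiniPipeline, Example, Model, Trainer, MajorityTrainer, evaluate) are hypothetical stand-ins invented for illustration; they are not Tribuo classes and only mirror the flow from dataset to trainer, model, and evaluator. The "trainer" simply learns the most frequent label, and the "evaluator" reports accuracy.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical miniature of the Dataset -> Trainer -> Model -> Evaluator flow.
// None of these types belong to Tribuo; they only mirror the roles shown in Figure 1.
final class MiniPipeline {

    // One example: a feature vector plus its known output (the label).
    static final class Example {
        final double[] features;
        final String label;

        Example(double[] features, String label) {
            this.features = features;
            this.label = label;
        }
    }

    // A trained model turns features into a prediction.
    interface Model {
        String predict(double[] features);
    }

    // A trainer turns a dataset into a model.
    interface Trainer {
        Model train(List<Example> dataset);
    }

    // Simplest possible trainer: always predicts the most frequent training label.
    static final class MajorityTrainer implements Trainer {
        @Override
        public Model train(List<Example> dataset) {
            Map<String, Integer> counts = new HashMap<>();
            for (Example e : dataset) {
                counts.merge(e.label, 1, Integer::sum);
            }
            String majority = null;
            int best = -1;
            for (Map.Entry<String, Integer> entry : counts.entrySet()) {
                if (entry.getValue() > best) {
                    best = entry.getValue();
                    majority = entry.getKey();
                }
            }
            final String answer = majority;
            return features -> answer;
        }
    }

    // The "evaluator": compares predictions with the known labels and returns the accuracy.
    static double evaluate(Model model, List<Example> testSet) {
        int correct = 0;
        for (Example e : testSet) {
            if (model.predict(e.features).equals(e.label)) {
                correct++;
            }
        }
        return (double) correct / testSet.size();
    }
}
```

Swapping MajorityTrainer for a smarter Trainer would leave the rest of the flow untouched, which is the point of a shared interface.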

An interesting aspect of the framework is that many Tribuo classes come with a fairly generic system for configuration settings: an annotation is placed on each field whose value should be configurable:

```java
// Excerpt from Tribuo's LinearSGDTrainer
public class LinearSGDTrainer implements Trainer<Label>, WeightedExamples {

    // @Config marks the field as settable by the OLCUT configuration system
    @Config(description="The classification objective function to use.")
    private LabelObjective objective = new LogMulticlass();

    // ... remaining fields and methods omitted
}
```

The Oracle Labs Configuration and Utilities Toolkit (OLCUT), described in detail at https://github.com/oracle/OLCUT, can read these settings from XML files. For the field just shown, the configuration could look like this:

```xml
<config>
    <component name="logistic"
               type="org.tribuo.classification.sgd.linear.LinearSGDTrainer">
        <!-- "log" is the name of another component that supplies the objective -->
        <property name="objective" value="log"/>
    </component>
    <component name="log" type="org.tribuo.classification.sgd.objectives.LogMulticlass"/>
</config>
```

The point of this approach, which sounds academic at first glance, is that the behavior of ML systems depends strongly on the parameters of their various components. By building on OLCUT, the developer gives users the ability to dump these settings, or to return a system to a defined state with little effort.
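The mechanism behind such an annotation can be illustrated with plain Java reflection. The following is a minimal sketch under stated assumptions, not OLCUT’s implementation: the annotation @Option, the classes ToyTrainer and ToyConfigurator, and the property map are all hypothetical, and only int, long, double and String fields are converted. It shows how annotated fields can be discovered at run time and overwritten from string values such as those read from an XML or JSON file.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Field;
import java.util.Map;

// Hypothetical marker annotation standing in for OLCUT's @Config (not the real one).
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.FIELD)
@interface Option {
    String description() default "";
}

// Toy class with two configurable fields and their defaults.
class ToyTrainer {
    @Option(description = "Number of passes over the training data.")
    int epochs = 5;

    @Option(description = "Seed for the random number generator.")
    long seed = 1L;
}

final class ToyConfigurator {
    // Overwrites every @Option field whose name appears in the map; other fields keep their defaults.
    static <T> T configure(T target, Map<String, String> properties) {
        try {
            for (Field field : target.getClass().getDeclaredFields()) {
                String raw = properties.get(field.getName());
                if (!field.isAnnotationPresent(Option.class) || raw == null) {
                    continue;
                }
                field.setAccessible(true);
                Class<?> type = field.getType();
                if (type == int.class) {
                    field.setInt(target, Integer.parseInt(raw));
                } else if (type == long.class) {
                    field.setLong(target, Long.parseLong(raw));
                } else if (type == double.class) {
                    field.setDouble(target, Double.parseDouble(raw));
                } else if (type == String.class) {
                    field.set(target, raw);
                } else {
                    throw new IllegalArgumentException("Unsupported field type: " + type);
                }
            }
            return target;
        } catch (IllegalAccessException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

A real configuration system additionally handles nested components, lists, inherited fields and validation; this sketch skips all of that.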

Tribuo integration

After these introductory considerations, it is time for your first experiments with Tribuo. Although the library’s source code is available on GitHub for building it yourself, a ready-made package is the better choice for first attempts.

Oracle supports both Maven and Gradle and even offers (partial) support for Kotlin in the Gradle case. However, we want to work with classic tools in the following steps, so we open Eclipse and have it create a new Maven-based project skeleton by clicking New | New Maven project.

In the first step of the wizard, the IDE asks whether you want to use templates called archetypes. Select the Create a simple project (skip archetype selection) checkbox so that the IDE creates a bare project skeleton. Next, open the file pom.xml and add the library as shown in Listing 1.

```xml
<!-- ... project header generated by the wizard (modelVersion, groupId, artifactId, version) ... -->
<name>tribuotest1</name>
<dependencies>
    <dependency>
        <groupId>org.tribuo</groupId>
        <!-- tribuo-all is an aggregator POM that pulls in all Tribuo modules -->
        <artifactId>tribuo-all</artifactId>
        <version>4.0.1</version>
        <type>pom</type>
    </dependency>
</dependencies>
</project>
```

To verify the integration, trigger a rebuild of the project. If you are connected to the Internet, Maven then downloads the required components to your workstation.

Given its proximity to the classic ML ecosystem, it should not surprise anyone that the Tribuo examples mostly come as Jupyter notebooks, a form of presentation that is widespread, especially in research, but in the author’s opinion not suited to production use.

Therefore, we rely on classic Java programs in the following steps. The first problem we want to address is classification. In ML, this means assigning each input to one of a fixed set of categories, called classes. Over the years, a number of standard sample data sets have become established in machine learning: fixed, publicly available collections that can be used to compare different models and training procedures. In the following steps, we use the Iris data set available at https://archive.ics.uci.edu/ml/datasets/iris. Funnily enough, the name iris does not refer to a part of the eye, but to a genus of flowering plants; the data set describes 150 flowers from three of its species.

Fortunately, the data set is available in a form that Tribuo can use directly. The author, working under Linux, therefore opens a terminal window, creates a new working directory, and downloads the file via wget:

```console
t@T18:~$ mkdir tribuospace
t@T18:~$ cd tribuospace/
t@T18:~/tribuospace$ wget https://archive.ics.uci.edu/ml/machine-learning-databases/iris/bezdekIris.data
```

Next, add a class with a main method to the Eclipse project skeleton from the first step, and place the code from Listing 2 in it.

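```java
import java.io.IOException;
import java.nio.file.Paths;

import org.tribuo.DataSource;
import org.tribuo.classification.Label;
import org.tribuo.classification.LabelFactory;
import org.tribuo.data.csv.CSVLoader;

public class ClassifyWorker {

    public static void main(String[] args) throws IOException {
        // Column names for the header-less Iris file; the last column holds the class
        var irisHeaders = new String[]{"sepalLength", "sepalWidth", "petalLength", "petalWidth", "species"};

        DataSource<Label> irisData =
                new CSVLoader<>(new LabelFactory()).loadDataSource(Paths.get("bezdekIris.data"),
                        irisHeaders[4],   // response (target) column
                        irisHeaders);     // names of all columns
    }
}
```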

This routine looks simple at first glance, but it is tricky in several respects. First, we are dealing with a class called Label: depending on the configuration of your Eclipse workspace, the IDE may offer dozens of candidate classes with this name. Make sure to choose the org.tribuo.classification.Label import shown here; in Tribuo, a Label is the output type of classification, that is, the category an example belongs to.

The var keyword also forces the project onto a current Java version. Tribuo itself runs on JDK 8, but local-variable type inference with var was only introduced in Java 10, and it appears in just about every Tribuo code sample. You should therefore use at least JDK 10.

Depending on your system configuration, you may have to make some adjustments at this point. For example, the author, working under Ubuntu 18.04, first had to install a compatible JDK:

```console
tamhan@TAMHAN18:~/tribuospace$ sudo apt-get install openjdk-11-jdk
```

Note that Eclipse does not always find the new installation by itself; on the author’s system, the Java executable was located at /usr/lib/jvm/java-11-openjdk-amd64/bin/java.

After adjusting the Java run configuration, the application compiles. If it does not, check the java.nio.file imports: Tribuo expects Path objects from this part of the JDK throughout. Since java.nio.file has been part of the standard library since Java 7, no additional Maven dependency is needed.

Now that the program skeleton is up and running, let’s walk through it step by step to learn more about the inner workings of Tribuo applications. The first thing to deal with (think of the far left of Figure 1) is data loading (Listing 3).

```java
public static void main(String[] args) {
    try {
        var irisHeaders = new String[]{"sepalLength", "sepalWidth", "petalLength", "petalWidth", "species"};
        DataSource<Label> irisData;
        // An absolute path avoids surprises with the IDE's working directory
        irisData = new CSVLoader<>(new LabelFactory()).loadDataSource(
                Paths.get("/home/tamhan/tribuospace/bezdekIris.data"),
                irisHeaders[4],
                irisHeaders);
        // ... the following steps continue inside this try block
    } catch (IOException e) {
        e.printStackTrace();
    }
}
```

The data file contains only raw values; there is no header row. We therefore pass CSVLoader an array with the column names. The second argument, irisHeaders[4] (species), tells the loader which column is the target variable that the model is supposed to predict; all remaining columns become features.
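What the header array and the response name are used for can be sketched in a few lines of plain Java. The class HeaderlessCsvRow is hypothetical and only illustrates the idea; it is not how CSVLoader is implemented. One comma-separated line is matched against the column names, the response column becomes the target, and every other column becomes a numeric feature.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper; not CSVLoader's implementation.
final class HeaderlessCsvRow {
    final Map<String, Double> features = new LinkedHashMap<>();
    final String response;

    HeaderlessCsvRow(String line, String[] headers, String responseName) {
        String[] values = line.split(",");
        if (values.length != headers.length) {
            throw new IllegalArgumentException("Expected " + headers.length + " columns, got " + values.length);
        }
        String responseValue = null;
        for (int i = 0; i < headers.length; i++) {
            String value = values[i].trim();
            if (headers[i].equals(responseName)) {
                responseValue = value;                               // the target the model should predict
            } else {
                features.put(headers[i], Double.parseDouble(value)); // every other column is a feature
            }
        }
        if (responseValue == null) {
            throw new IllegalArgumentException("No column named " + responseName);
        }
        this.response = responseValue;
    }
}
```

For the first line of the Iris file, 5.1,3.5,1.4,0.2,Iris-setosa, this yields four features and the response Iris-setosa.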

Next, we split the data set into a training set and a test set, a very common procedure in machine learning. The training data is used to fit the model’s parameters, while the test data, which the model never sees during training, is used to check the trained model. Our program uses a 70/30 split between training and test data:

```java
// needs: import org.tribuo.MutableDataset; import org.tribuo.evaluation.TrainTestSplitter;
// 70 % of the examples go to the training set; 1L is the seed of the random generator
var splitIrisData = new TrainTestSplitter<>(irisData, 0.7, 1L);
var trainData = new MutableDataset<>(splitIrisData.getTrain());
var testData = new MutableDataset<>(splitIrisData.getTest());
```

Attentive readers may wonder why the additional parameter 1L is passed. TrainTestSplitter assigns the examples to the two sets randomly, using a pseudo-random number generator. Like virtually all pseudo-random generators, it produces the same sequence every time it starts from the same seed value. The constructor of TrainTestSplitter exposes this seed; passing the constant value one makes the split reproducible from run to run.
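The principle can be demonstrated with the JDK alone. The following minimal sketch (the class SeededSplit is hypothetical and not Tribuo’s TrainTestSplitter code; rounding the training size with Math.round is an assumption) shuffles with a seeded java.util.Random and cuts the list at 70 percent. Two calls with the same seed return identical splits.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

// Minimal sketch of a seeded train/test split; not Tribuo's TrainTestSplitter code.
final class SeededSplit {

    // Returns a list with two elements: the training part and the test part.
    static <T> List<List<T>> split(List<T> data, double trainProportion, long seed) {
        List<T> shuffled = new ArrayList<>(data);
        // Same seed -> same permutation -> same split on every run.
        Collections.shuffle(shuffled, new Random(seed));
        int trainSize = (int) Math.round(data.size() * trainProportion);
        List<List<T>> result = new ArrayList<>();
        result.add(new ArrayList<>(shuffled.subList(0, trainSize)));
        result.add(new ArrayList<>(shuffled.subList(trainSize, shuffled.size())));
        return result;
    }
}
```

For the 150 Iris examples, this sketch produces 105 training and 45 test examples; changing the seed changes which examples land where, but not the sizes.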

We are now ready for our first training run. Many different algorithms exist for training machine learning models. The quickest way to a working classifier is the LogisticRegressionTrainer class, which comes preconfigured with a set of default settings:

```java
// needs: import org.tribuo.Model; import org.tribuo.classification.sgd.linear.LogisticRegressionTrainer;
var linearTrainer = new LogisticRegressionTrainer();
Model<Label> linear = linearTrainer.train(trainData);
```

Calling train() starts the training process and returns a model that can issue predictions. Next, we request a prediction for the first test example, pass the model and the test data to a LabelEvaluator, and finally print the results on the command line:

```java
// needs: import org.tribuo.Prediction;
//        import org.tribuo.classification.evaluation.LabelEvaluation;
//        import org.tribuo.classification.evaluation.LabelEvaluator;
Prediction<Label> prediction = linear.predict(testData.getExample(0));
System.out.println("First test example: " + prediction.getOutput().getLabel());

LabelEvaluation evaluation = new LabelEvaluator().evaluate(linear, testData);
double acc = evaluation.accuracy();
System.out.printf("Accuracy: %.3f%n", acc);
System.out.println(evaluation.toString());
```
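The evaluator’s headline figure, accuracy, is simply the share of correctly predicted examples, and a confusion matrix breaks the errors down by class. The following stdlib sketch shows both; the class ClassificationMetrics is hypothetical and not LabelEvaluator’s implementation, which reports further metrics such as precision and recall.

```java
import java.util.HashMap;
import java.util.Map;

// Sketch of two classic classification metrics; not LabelEvaluator's implementation.
final class ClassificationMetrics {

    // Fraction of examples whose predicted label equals the true label.
    static double accuracy(String[] predicted, String[] actual) {
        if (predicted.length != actual.length || actual.length == 0) {
            throw new IllegalArgumentException("Need two non-empty arrays of equal length");
        }
        int correct = 0;
        for (int i = 0; i < actual.length; i++) {
            if (predicted[i].equals(actual[i])) {
                correct++;
            }
        }
        return (double) correct / actual.length;
    }

    // Confusion counts: outer key = true label, inner key = predicted label.
    static Map<String, Map<String, Integer>> confusion(String[] predicted, String[] actual) {
        Map<String, Map<String, Integer>> counts = new HashMap<>();
        for (int i = 0; i < actual.length; i++) {
            counts.computeIfAbsent(actual[i], k -> new HashMap<>()).merge(predicted[i], 1, Integer::sum);
        }
        return counts;
    }
}
```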

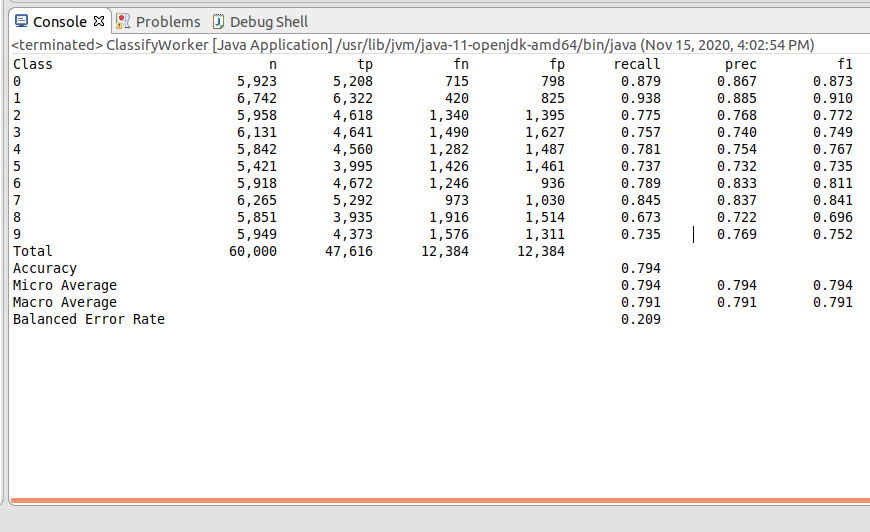

Our program is now ready for a first test run. Figure 2 shows the results for the Iris data set on the author’s workstation.

Learning more about elements

Now that our little Tribuo classifier works on the Iris data set, let’s look at some advanced features of the classes used.

The first interesting feature lets us check the work of the TrainTestSplitter class. To do so, place the following code anywhere in the main method after the datasets have been created:

```java
// Prints the number of examples, features and classes in the training set
System.out.println(String.format("data size = %d, num features = %d, num classes = %d",
        trainData.size(), trainData.getFeatureMap().size(), trainData.getOutputInfo().size()));
```

The dataset exposes member functions that report information about the examples it contains. Here, the code prints how many examples the training set holds, how many features they have, and how many classes they are divided into.

LogisticRegressionTrainer is only one of several algorithms you can use to train the model. To use the CART (Classification and Regression Trees) algorithm instead, adapt the code as follows:

```java
// needs: import org.tribuo.classification.dtree.CARTClassificationTrainer;
// These lines replace the LogisticRegressionTrainer version above
var cartTrainer = new CARTClassificationTrainer();
Model<Label> tree = cartTrainer.train(trainData);
Prediction<Label> prediction = tree.predict(testData.getExample(0));
LabelEvaluation evaluation = new LabelEvaluator().evaluate(tree, testData);
```

If you run the program again with the modified classifier, the console shows the corresponding evaluation, which illustrates that Tribuo is also well suited to exploring different machine learning algorithms. Incidentally, given the comparatively simple and unambiguous structure of the data set, both classifiers produced the same result on the author’s system.
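A CART tree is grown by repeatedly choosing the split that leaves the two resulting groups as pure as possible. One common purity measure is the Gini impurity, 1 minus the sum of the squared class proportions; it is 0 for a group containing a single class. The class Gini below is a hypothetical stdlib sketch of this formula, not Tribuo’s implementation.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Gini impurity: 1 - sum over classes of p_k^2. Sketch only; not Tribuo's code.
final class Gini {

    static double impurity(List<String> labels) {
        if (labels.isEmpty()) {
            return 0.0;
        }
        Map<String, Integer> counts = new HashMap<>();
        for (String label : labels) {
            counts.merge(label, 1, Integer::sum);
        }
        double sumOfSquares = 0.0;
        for (int c : counts.values()) {
            double p = (double) c / labels.size();
            sumOfSquares += p * p;
        }
        return 1.0 - sumOfSquares;
    }

    // Impurity of a candidate split: size-weighted average of both sides.
    // A CART-style tree prefers the split with the lowest value.
    static double splitImpurity(List<String> left, List<String> right) {
        double n = left.size() + right.size();
        return left.size() / n * impurity(left) + right.size() / n * impurity(right);
    }
}
```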

Number Recognition

Another data set widely used in ML is MNIST (Modified National Institute of Standards and Technology), a collection of handwritten digits: 28×28-pixel grayscale images, 60,000 for training and 10,000 for testing, each labeled with the correct digit. A model trained on it can be used, for example, to recognize postal codes, which saves valuable working hours and money when sorting letters.

Since we have just dealt with basic classification using a small and very clean data set, we now turn to this more realistic data. First, the data must be transferred to the workstation, which under Linux is again done with wget:

```console
tamhan@TAMHAN18:~/tribuospace$ wget http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz \
    http://yann.lecun.com/exdb/mnist/train-labels-idx1-ubyte.gz
tamhan@TAMHAN18:~/tribuospace$ wget http://yann.lecun.com/exdb/mnist/t10k-images-idx3-ubyte.gz \
    http://yann.lecun.com/exdb/mnist/t10k-labels-idx1-ubyte.gz
```

Note that each wget command downloads two files: both the training data and the test data are split into one archive with the images and one with the labels.

The MNIST files use a simple binary format called IDX and are distributed gzip-compressed. The Tribuo documentation at https://tribuo.org/learn/4.0/javadoc/org/tribuo/datasource/IDXDataSource.html shows that the IDXDataSource class is a loader intended for exactly this purpose. It even decompresses the data on the fly if required.
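To see what IDX means in practice, here is a minimal header reader using only the JDK. The layout follows the published IDX description: two zero bytes, a type code (0x08 for unsigned bytes), the number of dimensions, then one big-endian 32-bit size per dimension. The MNIST image files, for example, start with 0x00000803 followed by the image count, 28 and 28. The class IdxHeader is hypothetical and unrelated to the internals of Tribuo’s IDXDataSource.

```java
import java.io.ByteArrayInputStream;
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.util.zip.GZIPInputStream;

// Reads only the header of an IDX file; a sketch, not IDXDataSource's code.
final class IdxHeader {
    final int typeCode;
    final int[] dimensions;

    private IdxHeader(int typeCode, int[] dimensions) {
        this.typeCode = typeCode;
        this.dimensions = dimensions;
    }

    // The caller is responsible for closing the stream.
    static IdxHeader read(InputStream raw, boolean gzipped) throws IOException {
        InputStream in = gzipped ? new GZIPInputStream(raw) : raw;
        DataInputStream data = new DataInputStream(in);
        if (data.readUnsignedByte() != 0 || data.readUnsignedByte() != 0) {
            throw new IOException("Not an IDX file: magic number must start with two zero bytes");
        }
        int typeCode = data.readUnsignedByte();
        int numDimensions = data.readUnsignedByte();
        int[] dims = new int[numDimensions];
        for (int i = 0; i < numDimensions; i++) {
            dims[i] = data.readInt(); // DataInputStream reads big-endian, as IDX requires
        }
        return new IdxHeader(typeCode, dims);
    }

    // Convenience wrapper for in-memory data.
    static IdxHeader fromBytes(byte[] bytes, boolean gzipped) {
        try {
            return read(new ByteArrayInputStream(bytes), gzipped);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

For the downloaded archives, you would open the file with Files.newInputStream(...) and pass gzipped = true.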

Our next task is therefore to integrate the IDXDataSource class into our program (Listing 4).

```java
// needs: import org.tribuo.datasource.IDXDataSource;
public static void main(String[] args) {
    try {
        LabelFactory myLF = new LabelFactory();
        // Test split: t10k images (features) and t10k labels (outputs)
        DataSource<Label> ids1;
        ids1 = new IDXDataSource<Label>(Paths.get("/home/tamhan/tribuospace/t10k-images-idx3-ubyte.gz"),
                Paths.get("/home/tamhan/tribuospace/t10k-labels-idx1-ubyte.gz"), myLF);
        // ... continued in Listing 5; the try block ends with catch (IOException e)
```

Technically, IDXDataSource does not differ much from the CSV-based data source used above. Note, however, that we pass two file paths here, because the MNIST images (the features) and their labels (the outputs) are stored in separate files.

By the way, developers used to other I/O libraries should watch out for a small beginner’s trap in the constructor: Tribuo classes do not expect a string but a Path instance. Such an instance is easily created by calling Paths.get().

Next, the program creates a second DataSource, which handles the training data. Its constructor differs, logically, only in the file names (Listing 5).

```java
// Training split: train images (features) and train labels (outputs)
DataSource<Label> ids2;
ids2 = new IDXDataSource<Label>(Paths.get("/home/tamhan/tribuospace/train-images-idx3-ubyte.gz"),
        Paths.get("/home/tamhan/tribuospace/train-labels-idx1-ubyte.gz"), myLF);

// ids2 holds the 60,000 training images, ids1 the 10,000 test images
var trainData = new MutableDataset<>(ids2);
var testData = new MutableDataset<>(ids1);

var evaluator = new LabelEvaluator();
```

Most data classes in Tribuo are generic with respect to the output type, at least to some degree. For us, this means that we always have to pass a LabelFactory, which tells the constructor the desired output type and supplies the factory used to create the outputs.

Next, we create a CARTClassificationTrainer and train it on the training dataset:

```java
var cartTrainer = new CARTClassificationTrainer();
Model<Label> tree = cartTrainer.train(trainData);
```
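The role of such a factory can be sketched with a generic interface. Everything below (ToyOutputFactory, ToyOutputs, LABELS, NUMBERS, convert) is hypothetical and only mirrors the idea behind LabelFactory and RegressionFactory: the loading code is written once, and the factory passed in decides which output type the raw column values turn into.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for the role of an output factory; not Tribuo's OutputFactory interface.
interface ToyOutputFactory<T> {
    T create(String rawValue);
}

final class ToyOutputs {
    // Classification: the raw column value is the class name.
    static final ToyOutputFactory<String> LABELS = raw -> raw.trim();

    // Regression: the raw column value is a number.
    static final ToyOutputFactory<Double> NUMBERS = raw -> Double.parseDouble(raw.trim());

    // Generic loading code: identical for both cases, only the factory differs.
    static <T> List<T> convert(List<String> rawValues, ToyOutputFactory<T> factory) {
        List<T> outputs = new ArrayList<>();
        for (String raw : rawValues) {
            outputs.add(factory.create(raw));
        }
        return outputs;
    }
}
```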

The rest of the code, not printed here for space reasons, is a one-to-one adaptation of the model processing used above. If you run the program on the workstation, you will see the result shown in Figure 3. Don’t be surprised if Tribuo takes some time here: the MNIST data set is considerably larger than the Iris data set used above.

Since the accuracy achieved is not particularly impressive, we want to try another training algorithm. The structure of Tribuo shown in Figure 1, based on standard interfaces, lets us make such a change with comparatively little code.

This time we use the LinearSGDTrainer class, which we bring into the program with import org.tribuo.classification.sgd.linear.LinearSGDTrainer;. Spelling out the full name is not pedantry on the author’s part: besides the classification version used here, Tribuo also contains a LinearSGDTrainer for regression tasks (org.tribuo.regression.sgd.linear.LinearSGDTrainer), which cannot help us here.

Since LinearSGDTrainer has no convenience constructor that initializes the trainer with a set of (more or less well-working) default values, we have to pass the parameters explicitly:

```java
// needs: import org.tribuo.classification.sgd.linear.LinearSGDTrainer;
//        import org.tribuo.classification.sgd.objectives.LogMulticlass;
//        import org.tribuo.math.optimisers.SGD;
// Arguments: objective, optimiser (SGD with linearly decaying learning rate, starting at 0.2),
// 10 epochs, logging interval 200, minibatch size 1, seed 1
var sgdTrainer = new LinearSGDTrainer(new LogMulticlass(), SGD.getLinearDecaySGD(0.2), 10, 200, 1, 1L);
// The model variable keeps its old name so the rest of the code can stay unchanged
Model<Label> tree = sgdTrainer.train(trainData);
```

This version of the program is now also ready to run. Since linear SGD requires more computing power, processing takes a little longer.
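The LogMulticlass objective passed to the constructor is, as far as we understand it, the standard multinomial logistic loss: the class scores are turned into probabilities with the softmax function, and the loss is the negative logarithm of the probability assigned to the true class. The class SoftmaxLoss below is a hypothetical sketch of that formula for a single example, not Tribuo’s code; SGD then nudges the weights to reduce this loss one minibatch at a time.

```java
// Softmax cross-entropy (multinomial logistic) loss for a single example.
// A sketch of the standard formula, not Tribuo's LogMulticlass code.
final class SoftmaxLoss {

    // Turns raw class scores into probabilities that sum to 1.
    static double[] softmax(double[] scores) {
        double max = Double.NEGATIVE_INFINITY;
        for (double s : scores) {
            max = Math.max(max, s);
        }
        double[] probs = new double[scores.length];
        double sum = 0.0;
        for (int i = 0; i < scores.length; i++) {
            probs[i] = Math.exp(scores[i] - max); // subtracting the max avoids overflow
            sum += probs[i];
        }
        for (int i = 0; i < probs.length; i++) {
            probs[i] /= sum;
        }
        return probs;
    }

    // Loss = -log(probability of the true class); small when the model is confident and right.
    static double loss(double[] scores, int trueClass) {
        return -Math.log(softmax(scores)[trueClass]);
    }
}
```

With equal scores for three classes, every class gets probability 1/3 and the loss is ln 3.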

Excursus: Automatic configuration

LinearSGD may be a powerful machine learning algorithm, but its constructor is long and hard to read because of the number of parameters, especially without code completion in the IDE.

Since ML projects often involve data scientists with limited Java skills, it would be nice to be able to supply the model parameters as a JSON or XML file.

This is where the OLCUT configuration system mentioned above comes in. If we include it in our solution, we can parameterize a trainer in JSON as shown in Listing 6.

```json
{
  "name" : "cart",
  "type" : "org.tribuo.classification.dtree.CARTClassificationTrainer",
  "export" : "false",
  "import" : "false",
  "properties" : {
    "maxDepth" : "6",
    "impurity" : "gini",
    "seed" : "12345",
    "fractionFeaturesInSplit" : "0.5"
  }
}
```

Even at first glance, parameters such as the seed for the random generator are directly addressable: the line “seed” : “12345” should be easy to find even for someone with little Java experience.

By the way, OLCUT is not limited to creating flat object instances; it can also create nested object structures. In the markup just printed, the entry “impurity” : “gini” is a good example: gini is not a literal value but the name of another component, which can be defined as follows to create an instance of the GiniIndex class:

```json
{
  "name" : "gini",
  "type" : "org.tribuo.classification.dtree.impurity.GiniIndex"
}
```

Once we have such a configuration file, we register the file format with the ConfigurationManager class as follows; the last line merely prints the file’s content for checking:

```java
// needs: import com.oracle.labs.mlrg.olcut.config.ConfigurationManager;
//        import com.oracle.labs.mlrg.olcut.config.json.JsonConfigFactory;
//        import java.nio.file.Files;
ConfigurationManager.addFileFormatFactory(new JsonConfigFactory());
String configFile = "example-config.json";
// Optional check; Files.readAllLines throws IOException
System.out.println(String.join("\n", Files.readAllLines(Paths.get(configFile))));
```

OLCUT is agnostic about file formats: the logic for parsing a configuration file is supplied by a pluggable factory class. Since our code uses JSON, we register an instance of JsonConfigFactory via the addFileFormatFactory method.

Next, we can use ConfigurationManager to create the configured elements of our machine learning application:

```java
var cm = new ConfigurationManager(configFile);
// "mnist-train" must match the name of a component defined in the configuration file
DataSource<Label> mnistTrain = (DataSource<Label>) cm.lookup("mnist-train");
```

The lookup method takes a string, which is compared against the name attributes of the components in the configuration. If the configuration system finds a match, it automatically instantiates the object structure described in the file, including nested components.

ConfigurationManager is extremely powerful in this respect. Oracle offers a comprehensive example at https://tribuo.org/learn/4.0/tutorials/configuration-tribuo-v4.html that uses the configuration system in a Jupyter notebook to build a complex machine learning toolchain.
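The core idea of a name-based lookup can be sketched with a map and reflection. ToyRegistry is hypothetical and drastically simplified compared with OLCUT’s ConfigurationManager: it only maps component names to class names and instantiates them through their no-argument constructor, ignoring properties and nesting, and it returns null for unknown names, which is a choice of this sketch only.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, heavily simplified name -> component lookup; not OLCUT's ConfigurationManager.
final class ToyRegistry {
    private final Map<String, String> typesByName = new HashMap<>();

    // Corresponds to a "name" / "type" pair in the configuration file.
    void register(String name, String className) {
        typesByName.put(name, className);
    }

    // Creates a fresh instance of the class registered under the given name.
    Object lookup(String name) {
        String className = typesByName.get(name);
        if (className == null) {
            return null; // unknown component name in this sketch
        }
        try {
            return Class.forName(className).getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot instantiate " + className, e);
        }
    }
}
```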

This measure, which seems pointless at first glance, is actually quite sensible. Machine learning systems live and die with the quality of the training data as well as with the parameterization — if these parameters can be conveniently addressed externally, it is easier to adapt the system to the present needs.

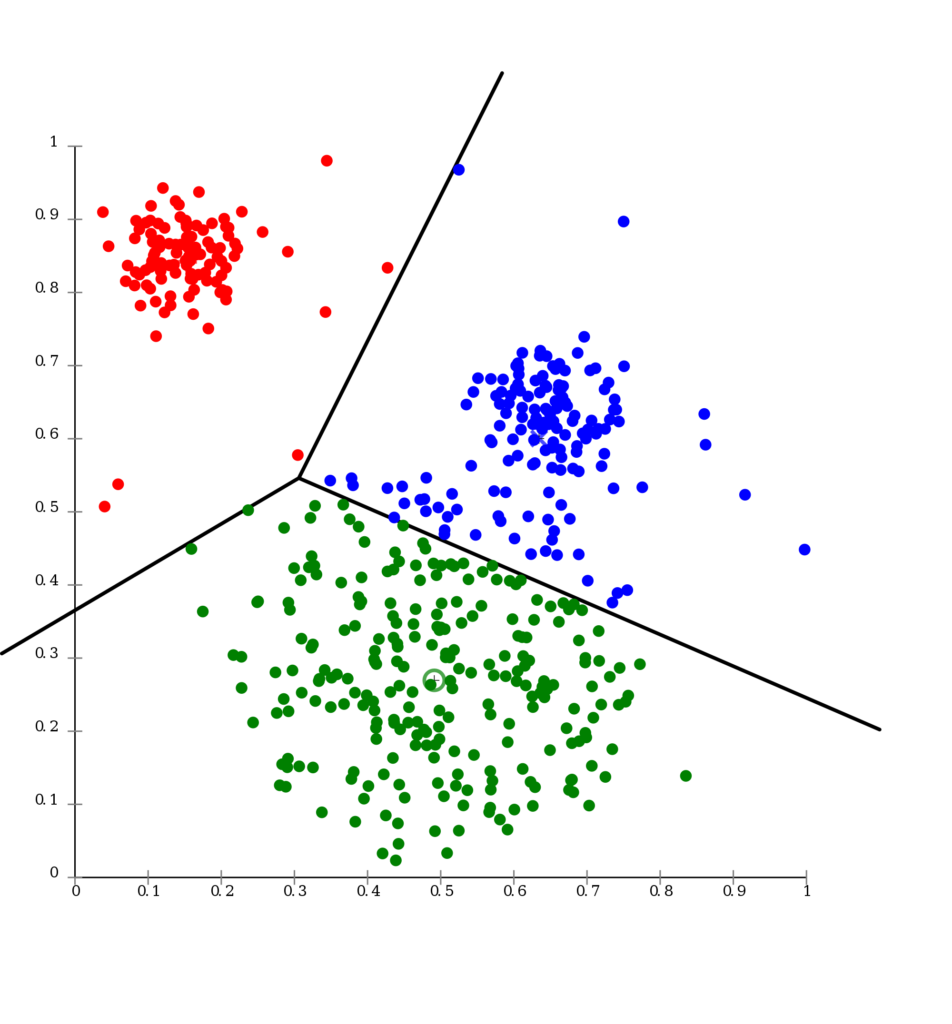

Clustering with synthetic data sets

Machine learning also offers a number of general-purpose techniques that can be applied to many different problems. Having dealt with classification so far, we now turn to clustering. As Figure 4 shows, the idea here is to find groups in an unstructured mass of data so that trends or associations become easier to recognize.

For clustering experiments, we also need data. Tribuo helps here with the ClusteringDataGenerator class, which uses Gaussian (normal) distributions to generate test data sets. For now, we generate two data sets, one for training and one for testing:

```java
// needs: import org.tribuo.clustering.example.ClusteringDataGenerator;
public static void main(String[] args) {
    // 500 points each; the second argument is the seed of the random generator
    var data = ClusteringDataGenerator.gaussianClusters(500, 1L);
    var test = ClusteringDataGenerator.gaussianClusters(500, 2L);
    // ... training and evaluation follow below
```

The second parameter is the seed for the random number generator. Because we pass two different seeds, the generator produces two different sets of points, both drawn from the same Gaussian distributions.

Among clustering methods, the k-means algorithm has become well established, so we use it in our Tribuo example as well. Looking at the code, you will notice that it follows the same standardized trainer and model structure as before:

```java
// needs: import org.tribuo.clustering.kmeans.KMeansTrainer;
//        import org.tribuo.clustering.kmeans.KMeansTrainer.Distance;
// 5 centroids, 10 iterations, Euclidean distance, 1 thread, seed 1
var trainer = new KMeansTrainer(5, 10, Distance.EUCLIDEAN, 1, 1);
var model = trainer.train(data);
var centroids = model.getCentroidVectors();
for (var centroid : centroids) {
    System.out.println(centroid);
}
```
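How a seeded generator yields reproducible Gaussian clusters can be sketched with the JDK. GaussianSampler is hypothetical, not ClusteringDataGenerator’s code: it draws 2-D points from N(mean, cov) by multiplying standard normal samples with the Cholesky factor of the covariance matrix, which also handles correlated distributions such as one with covariance [[1.0, 0.5], [0.5, 1.0]].

```java
import java.util.Random;

// Draws 2-D points from N(mean, cov) with a seeded generator; not ClusteringDataGenerator's code.
final class GaussianSampler {

    static double[][] sample(int n, double[] mean, double[][] cov, long seed) {
        // Cholesky factor L of the 2x2 covariance matrix (cov = L * L^T); cov must be positive definite.
        double l11 = Math.sqrt(cov[0][0]);
        double l21 = cov[1][0] / l11;
        double l22 = Math.sqrt(cov[1][1] - l21 * l21);
        Random rng = new Random(seed); // same seed -> same sequence -> same points
        double[][] points = new double[n][2];
        for (int i = 0; i < n; i++) {
            double z1 = rng.nextGaussian();
            double z2 = rng.nextGaussian();
            points[i][0] = mean[0] + l11 * z1;
            points[i][1] = mean[1] + l21 * z1 + l22 * z2;
        }
        return points;
    }
}
```

Calling sample twice with seed 1L gives identical arrays, while seed 2L gives different points from the same distribution, just like the two calls above.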

Of particular interest is the Distance value passed in, which determines how the distance between points is measured. Be careful with the import: Tribuo contains several enums named Distance, and the one needed here is the nested enum org.tribuo.clustering.kmeans.KMeansTrainer.Distance.
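What k-means does with this distance can be shown in a compact stdlib sketch of Lloyd’s algorithm. KMeansSketch is hypothetical, not KMeansTrainer’s implementation; it uses plain random initialization and squared Euclidean distance, which selects the same nearest centroid as the Euclidean distance itself. Each iteration assigns every point to its nearest centroid and then moves each centroid to the mean of its points.

```java
import java.util.Random;

// Plain Lloyd's k-means with squared Euclidean distance; a sketch, not KMeansTrainer's implementation.
final class KMeansSketch {

    static double squaredDistance(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return sum;
    }

    static int nearest(double[] point, double[][] centroids) {
        int best = 0;
        for (int c = 1; c < centroids.length; c++) {
            if (squaredDistance(point, centroids[c]) < squaredDistance(point, centroids[best])) {
                best = c;
            }
        }
        return best;
    }

    // Requires k <= points.length.
    static double[][] fit(double[][] points, int k, int iterations, long seed) {
        Random rng = new Random(seed);
        int[] idx = new int[points.length];
        for (int i = 0; i < idx.length; i++) {
            idx[i] = i;
        }
        // Initialise with k distinct random points (partial Fisher-Yates shuffle of the indices).
        double[][] centroids = new double[k][];
        for (int c = 0; c < k; c++) {
            int j = c + rng.nextInt(points.length - c);
            int tmp = idx[c];
            idx[c] = idx[j];
            idx[j] = tmp;
            centroids[c] = points[idx[c]].clone();
        }
        int dims = points[0].length;
        for (int it = 0; it < iterations; it++) {
            double[][] sums = new double[k][dims];
            int[] counts = new int[k];
            // Assignment step: every point joins its nearest centroid.
            for (double[] p : points) {
                int c = nearest(p, centroids);
                counts[c]++;
                for (int d = 0; d < dims; d++) {
                    sums[c][d] += p[d];
                }
            }
            // Update step: move each centroid to the mean of its points; empty clusters stay put.
            for (int c = 0; c < k; c++) {
                if (counts[c] > 0) {
                    for (int d = 0; d < dims; d++) {
                        centroids[c][d] = sums[c][d] / counts[c];
                    }
                }
            }
        }
        return centroids;
    }
}
```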

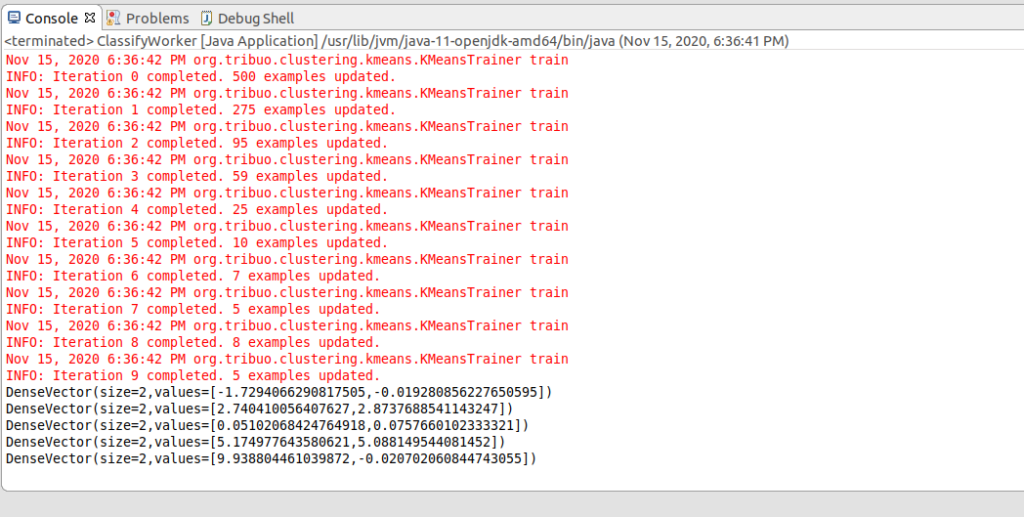

Now the program can be executed again; Figure 5 shows the computed centroid vectors.

The red messages are progress reports: most Tribuo classes contain logic that reports the progress of long-running operations on the console. Since this output also costs processing time, many constructors let you control how often it is produced.

To evaluate the results, it helps to know the parameters of the Gaussian distributions used by ClusteringDataGenerator. Oddly enough, Oracle only reveals them in the Tribuo tutorial; the five distributions (mean vector, covariance matrix) are:

```
N([ 0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
N([ 5.0, 5.0], [[1.0, 0.0], [0.0, 1.0]])
N([ 2.5, 2.5], [[1.0, 0.5], [0.5, 1.0]])
N([10.0, 0.0], [[0.1, 0.0], [0.0, 0.1]])
N([-1.0, 0.0], [[1.0, 0.0], [0.0, 0.1]])
```

Since a detailed discussion of the underlying mathematics would go beyond the scope of this article, we leave the evaluation of the results to Tribuo. Once again, the tool of choice is an evaluator; since we are dealing with clustering this time, the code looks like this:

```java
// needs: import org.tribuo.clustering.evaluation.ClusteringEvaluator;
ClusteringEvaluator eval = new ClusteringEvaluator();
var mtTestEvaluation = eval.evaluate(model, test);
System.out.println(mtTestEvaluation.toString());
```

When you run the program, you get a human-readable result, since Tribuo formats the metrics contained in the ClusteringEvaluation for convenient output in the terminal:

```text
Clustering Evaluation
Normalized MI = 0.8154291916732408
Adjusted MI = 0.8139169342020222
```
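Both figures are based on the mutual information (MI) between the clusters found and the clusters that actually generated the points: 1 means perfect agreement up to renaming of the clusters, values near 0 mean no agreement, and the adjusted variant additionally corrects for agreement by chance. The stdlib sketch below (class NmiSketch, hypothetical) computes a normalized MI using the arithmetic mean of the two entropies, one common normalization; whether Tribuo normalizes in exactly this way is an assumption, and the chance-adjusted variant is not shown.

```java
import java.util.HashMap;
import java.util.Map;

// Normalized mutual information between two clusterings (arithmetic-mean normalization).
// A sketch; Tribuo's exact normalization may differ.
final class NmiSketch {

    static double entropy(int[] labels) {
        Map<Integer, Integer> counts = new HashMap<>();
        for (int label : labels) {
            counts.merge(label, 1, Integer::sum);
        }
        double h = 0.0;
        for (int c : counts.values()) {
            double p = (double) c / labels.length;
            h -= p * Math.log(p);
        }
        return h;
    }

    static double mutualInformation(int[] u, int[] v) {
        int n = u.length;
        Map<Integer, Integer> uCounts = new HashMap<>();
        Map<Integer, Integer> vCounts = new HashMap<>();
        Map<Integer, Map<Integer, Integer>> joint = new HashMap<>();
        for (int i = 0; i < n; i++) {
            uCounts.merge(u[i], 1, Integer::sum);
            vCounts.merge(v[i], 1, Integer::sum);
            joint.computeIfAbsent(u[i], k -> new HashMap<>()).merge(v[i], 1, Integer::sum);
        }
        double mi = 0.0;
        for (Map.Entry<Integer, Map<Integer, Integer>> row : joint.entrySet()) {
            double a = uCounts.get(row.getKey());
            for (Map.Entry<Integer, Integer> cell : row.getValue().entrySet()) {
                double nij = cell.getValue();
                double b = vCounts.get(cell.getKey());
                // p(i,j) * log(p(i,j) / (p(i) * p(j))), written with raw counts
                mi += (nij / n) * Math.log(n * nij / (a * b));
            }
        }
        return mi;
    }

    static double nmi(int[] u, int[] v) {
        double hu = entropy(u);
        double hv = entropy(v);
        if (hu + hv == 0.0) {
            return 1.0; // both clusterings put everything into a single cluster
        }
        return mutualInformation(u, v) / ((hu + hv) / 2.0);
    }
}
```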

Excursus: Faster when parallelized

Artificial intelligence tasks tend to consume immense amounts of computing power; if you don’t parallelize them, you leave much of your hardware idle.

Some parts of Tribuo come out of the box with the tooling needed to distribute the work automatically across multiple cores of a workstation.

The KMeansTrainer presented here is a good example. First, we use the following code to make both the training and the test data much larger:

```java
var data = ClusteringDataGenerator.gaussianClusters(50000, 1L);
var test = ClusteringDataGenerator.gaussianClusters(50000, 2L);
```

Next, it is sufficient to change one value in the constructor of KMeansTrainer: the fourth argument sets the number of threads, so passing eight tells the trainer to keep up to eight processor cores busy at the same time:

```java
// 5 centroids, 10 iterations, Euclidean distance, 8 threads, seed 1
var trainer = new KMeansTrainer(5, 10, Distance.EUCLIDEAN, 8, 1);
```

Now run the program again. If you monitor CPU utilization with a tool such as top, you should briefly see all cores under load.
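Why k-means lends itself to parallelization can be seen in its assignment step: finding the nearest centroid for one point is independent of all other points. The sketch below (class ParallelAssign, hypothetical) spreads this step across cores with a Java parallel stream, which runs on the common ForkJoinPool; KMeansTrainer’s own thread handling is not shown here and may work differently.

```java
import java.util.stream.IntStream;

// Parallel nearest-centroid assignment; a sketch, not KMeansTrainer's threading code.
final class ParallelAssign {

    static int nearest(double[] p, double[][] centroids) {
        int best = 0;
        double bestDist = Double.POSITIVE_INFINITY;
        for (int c = 0; c < centroids.length; c++) {
            double dist = 0.0;
            for (int d = 0; d < p.length; d++) {
                double diff = p[d] - centroids[c][d];
                dist += diff * diff;
            }
            if (dist < bestDist) {
                bestDist = dist;
                best = c;
            }
        }
        return best;
    }

    static int[] assign(double[][] points, double[][] centroids, boolean parallel) {
        IntStream indices = IntStream.range(0, points.length);
        if (parallel) {
            indices = indices.parallel();
        }
        // toArray() keeps the original order even when the work runs on several threads.
        return indices.map(i -> nearest(points[i], centroids)).toArray();
    }
}
```

Because each point is handled independently, the parallel and sequential versions return the same assignments; only the wall-clock time differs.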

Regression with Tribuo

By now, it should be obvious that one of the most important strengths of Tribuo is its ability to express quite different machine learning procedures through the common scheme of thought, process, and implementation shown in Figure 1. As a final task, let’s turn to regression, which in machine learning means analyzing a data set to determine the relationship between input variables and a continuous output variable. This is the kind of task early neural networks handled in game AI, and probably what developers new to the field expect most from AI.

For this task we use a wine quality data set: the data available at https://archive.ics.uci.edu/ml/datasets/Wine+Quality relates wine ratings to various chemical analysis values. The resulting system should therefore give an accurate estimate of a wine’s quality rating when fed with this chemical information.

As always, the first task is to download the data, which we (of course) do again via wget:

```console
tamhan@TAMHAN18:~/tribuospace$ wget https://archive.ics.uci.edu/ml/machine-learning-databases/wine-quality/winequality-red.csv
```

Our first order of business is to load the data we just downloaded, which is again done with the CSVLoader class:

```java
// needs: import org.tribuo.regression.RegressionFactory;
try {
    var regressionFactory = new RegressionFactory();
    // ';' is the field separator used by the wine quality file
    var csvLoader = new CSVLoader<>(';', regressionFactory);
    // The file has a header row, so only the name of the response column is passed
    var wineSource = csvLoader.loadDataSource(Paths.get("/home/tamhan/tribuospace/winequality-red.csv"), "quality");
```

Two things are new compared to the previous code. First, CSVLoader now additionally receives a semicolon character as a parameter, which tells it that the file separates its fields with semicolons rather than commas. Since this file has a header row, we only need to name the response column, quality. Second, we now use a RegressionFactory as the factory instance, because the outputs are numeric values; the previously used LabelFactory is meant for classification, not regression.

The wine data set is not divided into training and test data, so the TrainTestSplitter class comes in handy again; we use 70 percent of the data for training and 30 percent for testing:

```java
// needs: import org.tribuo.Dataset; import org.tribuo.regression.Regressor;
// 70 % training data, 30 % test data, seed 0
var splitter = new TrainTestSplitter<>(wineSource, 0.7, 0L);
Dataset<Regressor> trainData = new MutableDataset<>(splitter.getTrain());
Dataset<Regressor> evalData = new MutableDataset<>(splitter.getTest());
```
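Why the separator matters can be seen in a small stdlib sketch: a loader splits each line on the separator, so splitting a semicolon-separated line on commas yields a single, useless field. SeparatedLine is hypothetical and naive (it strips surrounding quotes but does not handle separators inside quoted fields); the sample strings merely imitate the style of the wine file, with quoted column names in the header.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

// Naive field splitter; not CSVLoader's parser (no support for separators inside quotes).
final class SeparatedLine {

    static List<String> split(String line, char separator) {
        List<String> fields = new ArrayList<>();
        // Pattern.quote treats the separator literally; limit -1 keeps empty trailing fields.
        for (String raw : line.split(Pattern.quote(String.valueOf(separator)), -1)) {
            String field = raw.trim();
            if (field.length() >= 2 && field.startsWith("\"") && field.endsWith("\"")) {
                field = field.substring(1, field.length() - 1); // strip surrounding quotes
            }
            fields.add(field);
        }
        return fields;
    }
}
```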

In the next step we need a trainer and an evaluator (Listing 7).

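```java
// needs: import org.tribuo.regression.rtree.CARTRegressionTrainer;
//        import org.tribuo.regression.evaluation.RegressionEvaluator;
var trainer = new CARTRegressionTrainer(6);   // maximum tree depth 6
Model<Regressor> model = trainer.train(trainData);
RegressionEvaluator eval = new RegressionEvaluator();
// First, evaluate against the training data
var evaluation = eval.evaluate(model, trainData);
// Selects the output dimension whose metrics are reported
var dimension = new Regressor("DIM-0", Double.NaN);
System.out.printf("Evaluation (train):%n RMSE %f%n MAE %f%n R^2 %f%n",
        evaluation.rmse(dimension), evaluation.mae(dimension), evaluation.r2(dimension));
```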

Two things are new about this code. First, we create a Regressor object that selects the output dimension for which the metrics are reported; since there is only one output here, it is DIM-0, the default name of the first dimension. Second, we evaluate the model against the same data that was used for training. Such a result is overly optimistic and cannot reveal overtraining on its own, but compared with the test results it shows how well the model generalizes.

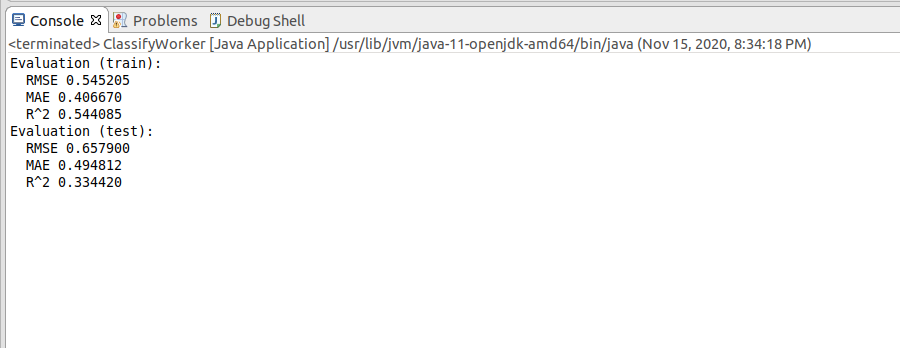

If you run our program as-is, you will see the following output on the command line:

```text
Evaluation (train):
 RMSE 0.545205
 MAE 0.406670
 R^2 0.544085
```

RMSE (root mean squared error) and MAE (mean absolute error) both describe the model’s typical prediction error in units of the target, here rating points; for both, a lower value indicates a more accurate model. R² indicates the share of the variance in the ratings that the model explains; 1 would be a perfect fit.

As a final task, we perform another evaluation, this time against the held-out test data instead of the training data. To do this, we simply change the data set passed to the evaluate method:

```java
evaluation = eval.evaluate(model, evalData);
dimension = new Regressor("DIM-0", Double.NaN);
System.out.printf("Evaluation (test):%n RMSE %f%n MAE %f%n R^2 %f%n",
        evaluation.rmse(dimension), evaluation.mae(dimension), evaluation.r2(dimension));
```
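For reference, here is how the three printed metrics are usually defined, as a small stdlib sketch. The class RegressionMetrics is hypothetical; RegressionEvaluator computes these values internally and may differ in details such as handling several output dimensions.

```java
// Sketch of the three regression metrics; not RegressionEvaluator's code.
final class RegressionMetrics {

    // Root mean squared error: large errors weigh more heavily.
    static double rmse(double[] actual, double[] predicted) {
        double sum = 0.0;
        for (int i = 0; i < actual.length; i++) {
            double e = actual[i] - predicted[i];
            sum += e * e;
        }
        return Math.sqrt(sum / actual.length);
    }

    // Mean absolute error: average size of the errors.
    static double mae(double[] actual, double[] predicted) {
        double sum = 0.0;
        for (int i = 0; i < actual.length; i++) {
            sum += Math.abs(actual[i] - predicted[i]);
        }
        return sum / actual.length;
    }

    // R^2 = 1 - SS_res / SS_tot: share of the target's variance explained by the model.
    static double r2(double[] actual, double[] predicted) {
        double mean = 0.0;
        for (double a : actual) {
            mean += a;
        }
        mean /= actual.length;
        double ssRes = 0.0;
        double ssTot = 0.0;
        for (int i = 0; i < actual.length; i++) {
            ssRes += (actual[i] - predicted[i]) * (actual[i] - predicted[i]);
            ssTot += (actual[i] - mean) * (actual[i] - mean);
        }
        return 1.0 - ssRes / ssTot;
    }
}
```

For true values 1, 2, 3, 4 and predictions 1, 2, 3, 5, the single error of 1 gives RMSE 0.5, MAE 0.25 and R² 0.8.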

The reward for our effort is the output shown in Figure 6: since we are no longer evaluating against the training data, the accuracy of the system has deteriorated.

This behavior makes sense: a model evaluated on data it has never seen naturally performs worse than on the data it was trained on. Note, however, that overtraining is a classic antipattern, especially in finance, and has cost more than one algorithmic trader a lot of money.

This brings us to the end of our journey through machine learning with Tribuo. The library also supports anomaly detection as a fourth type of task, which we will not discuss here. A small tutorial is available at https://tribuo.org/learn/4.0/tutorials/anomaly-tribuo-v4.html; the main difference from the three methods presented so far is that anomaly detection uses its own set of classes.

Conclusion

Hand on heart: if you know Java well, you can learn Python, perhaps while swearing, but without real problems. So it is more a question of not wanting to than of not being able to.

On the other hand, there is no question that Java payloads are much easier to integrate into enterprise processes and toolchains than their Python-based counterparts. Tribuo provides a remedy here, since you no longer have to shuttle values manually between the Python and the Java parts of an application.

If Oracle improved the documentation a bit and offered even more examples, there would be absolutely nothing against the system. As things stand, the entry hurdle is somewhat higher: those who have never worked with ML before will learn the basics faster with Python, because they will find more turnkey examples. On the other hand, working with Tribuo is faster in many cases thanks to its various convenience functions. All in all, Oracle has done a great job with Tribuo, which should give Java a good chance in the field of machine learning, at least from an ecosystem point of view.