Are you developing an application that stores time-based data? Orders, ratings, comments, appointments, time bookings, repairs or customer contacts? Do you have detailed log files about the number and duration of visits? Hand on heart: How quickly would you notice if your systems (or your users) were behaving differently than you thought? Maybe one of your clients is trying to flood the software with way too much data, or a product in your webshop is “going through the roof”? Maybe there are performance issues in certain browsers or unnatural CPU spikes that deserve a closer look? Metrics Advisor from Azure Cognitive Services provides an AI-powered service that monitors your data and alerts you when anomalies are suspected.

What is normal?

The big challenge here is defining what constitutes an anomaly in the first place. Imagine a whole shelf full of developer magazines, with only one sports magazine among them. You could rightly say that the sports magazine is an anomaly. But perhaps, by chance, all the magazines are in A4 format, and only two are in A5 – another anomaly. Thus, for automated anomaly detection, it is important to learn from experience and understand which anomalies are actually relevant – and where there is a false alarm that should be avoided in the future.

In the case of time-based data, which is what Metrics Advisor is all about, there are several approaches to anomaly detection. The simplest way is to define hard limits: Anything below or above a certain threshold is considered an anomaly. This doesn’t require machine learning or artificial intelligence; the rules are quickly implemented and clearly understood. For monitoring data, that might be enough: If 70 percent of the storage space is occupied, you want to react.

But the (data) world often does not run along rigid paths; sometimes the relative change is more decisive than the absolute value: if there has been an increase or decrease of more than 10 percent within the last three hours, an anomaly should be detected. Take an example from finance. If your private account balance changes from €20,000 to €30,000, this should probably be considered an anomaly. If a company account changes from €200,000 to €210,000, this is not worth mentioning. As this example shows, the classification of what constitutes an anomaly may change over time. When founding a startup, €100,000 is a lot of money; for a large corporation, it is a marginal note.

But what if your data is subject to seasonal fluctuations, or individual days such as weekends or holidays behave significantly differently? Here, too, the classification is not trivial. Is a wave of influenza in the winter months to be expected and only an anomaly in the summer, or should every increase in infection numbers be flagged? As you can see, the question of what counts as an anomaly is to some extent subjective, regardless of the tooling, and not every decision can be handed over to the technology. Machine learning, however, can help to learn from historical data and to distinguish normal fluctuations from anomalies.
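The two simple rules described above, a hard limit and a relative change, can be sketched in a few lines of Python. This is only an illustration of the idea, not how Metrics Advisor implements it; the function names and parameters are made up for this example.

```python
from typing import Optional, Sequence


def exceeds_hard_limit(value: float, lower: Optional[float] = None,
                       upper: Optional[float] = None) -> bool:
    """Flag a value that falls below `lower` or above `upper`."""
    if lower is not None and value < lower:
        return True
    if upper is not None and value > upper:
        return True
    return False


def exceeds_relative_change(series: Sequence[float], lookback: int,
                            max_change: float) -> bool:
    """Flag the latest point if it differs from the point `lookback`
    steps earlier by more than `max_change` (0.10 means 10 percent)."""
    if len(series) <= lookback:
        return False  # not enough history to compare against
    previous, current = series[-1 - lookback], series[-1]
    if previous == 0:
        return current != 0  # any move away from zero counts as a change
    return abs(current - previous) / abs(previous) > max_change


# Storage usage in percent: anything above 70 is flagged.
print(exceeds_hard_limit(72.0, upper=70.0))  # True

# The account balances from the text, with a 10 percent change threshold.
print(exceeds_relative_change([20_000, 30_000], lookback=1, max_change=0.10))    # True
print(exceeds_relative_change([200_000, 210_000], lookback=1, max_change=0.10))  # False
```

The same absolute jump of €10,000 is flagged for the private account (50 percent) but not for the company account (5 percent), which is exactly why a relative rule can be more useful than a fixed limit.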

Metrics Advisor

Metrics Advisor is a new service in the Azure Cognitive Services lineup and is only available in a preview version for now. Internally, another service is used, namely the Anomaly Detector (also part of the Cognitive Services). Metrics Advisor complements this with numerous API methods, a web frontend for managing and viewing data feeds, root-cause analysis and alert configurations. To experiment, you need an Azure subscription. There you can create a Metrics Advisor resource; it’s completely free to use in the preview phase.

The example I use to demonstrate the basic procedure is based on data from Google Trends [1]. I downloaded the weekly Google Trends scores for two search terms (“vaccine” and “influenza”) over the last five years for four countries (USA, China, Germany, Austria) and want to identify anomalies in this data. Metrics Advisor can be administered entirely via the provided REST API [2]; a faster way to get started is the web frontend, the so-called workspace [3].

Data Feeds

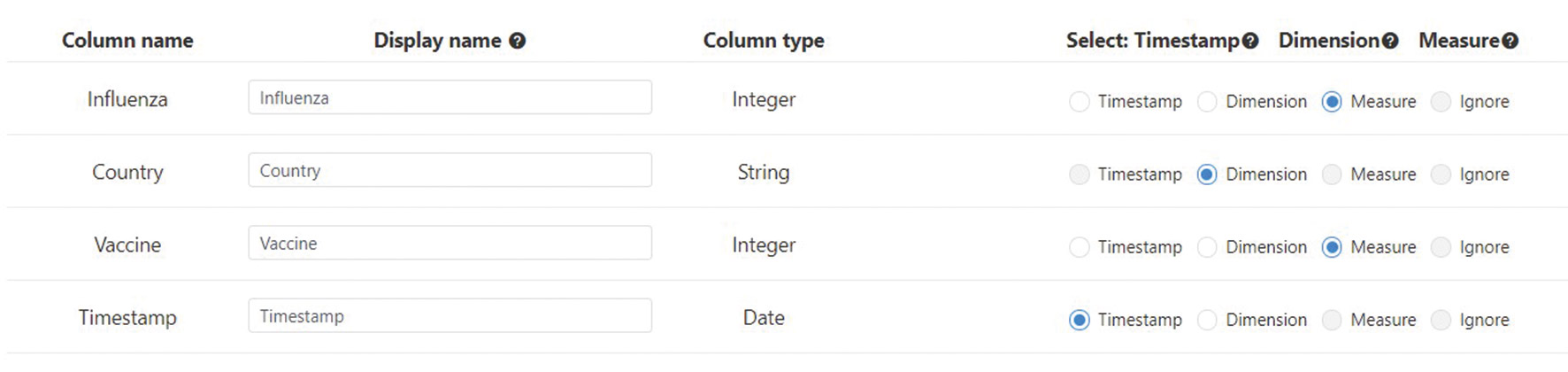

To start with, we create a new data feed that provides the basic data for the analysis. Various Azure services and databases are available out of the box as data sources: Application Insights, Blob Storage, Cosmos DB, Data Lake, Table Storage, SQL Database, Elasticsearch, MongoDB, PostgreSQL, and a few more. In our example, I loaded the Google Trends data into a SQL Server database. In addition to the primary key, the table has four other columns: the date, the country, and the scores for vaccine and influenza. In Metrics Advisor, we now have to specify (in addition to the connection string) an SQL statement that queries all values for a given date. This is because the service will keep visiting our database periodically to retrieve and analyze new data. How often this happens is set via the granularity: the data can be analyzed annually, monthly, weekly, daily, hourly or at even shorter intervals (the smallest unit is 300 seconds). Depending on the selected granularity, Microsoft also recommends how much historical data to provide. If we choose a 5-minute interval, data from the last four days will suffice. In our case, with a weekly analysis, four years are recommended. After clicking on Verify and get schema, the SQL statement is executed and the structure of our data source is determined. We see the columns shown in Figure 1 and need to assign a meaning to each: Which column contains the timestamp? Which columns should be analyzed as metrics, and which hold additional facts (dimensions) that could be possible causes of anomalies?
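To make the idea of "one query per timestamp" more concrete, here is a minimal sketch that uses an in-memory SQLite database as a stand-in for the SQL Server table. The table name `trends`, the column names and the `:start_time` parameter are assumptions for this illustration; the placeholder that Metrics Advisor itself expects in the query is described in the service documentation [2].

```python
import sqlite3

# Hypothetical table and column names; the article only says the table holds
# a primary key, the date, the country and the two scores.
QUERY = """
    SELECT week, country, vaccine, influenza
    FROM trends
    WHERE week = :start_time
    ORDER BY country
"""


def fetch_slice(conn: sqlite3.Connection, start_time: str) -> list:
    """Return all rows for one timestamp, as a periodic ingestion run would."""
    return conn.execute(QUERY, {"start_time": start_time}).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE trends (id INTEGER PRIMARY KEY, week TEXT, country TEXT, "
    "vaccine INTEGER, influenza INTEGER)"
)
# Made-up sample scores, not real Google Trends values.
conn.executemany(
    "INSERT INTO trends (week, country, vaccine, influenza) VALUES (?, ?, ?, ?)",
    [
        ("2021-01-03", "US", 80, 12),
        ("2021-01-03", "AT", 55, 9),
        ("2021-01-10", "US", 85, 11),
    ],
)

for row in fetch_slice(conn, "2021-01-03"):
    print(row)
```

Each call returns one row per country for the requested week: the timestamp column, the dimension (country) and the two metrics.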

Now, before the data is actually imported, there is one more thing to consider: the roll-up settings. For a later root cause analysis, it is necessary to build a multidimensional cube that calculates aggregated values per dimension (in our case, an aggregation over all countries for each week). This way, when an anomaly occurs, we can later investigate which dimensions or characteristics seem to be responsible for the change in value. If the aggregations are not already in our data source, Metrics Advisor can be asked to calculate them. The only decision we have to make here is the type of aggregation (sum, average, min, max, count). This is where our example falls short: we select average, but the value for the USA then carries the same weight as the value for the much smaller Austria. As you can see, data quality is often the stumbling block, and you have to be careful that conclusions are not based on misleading calculations.
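The weighting problem can be made visible with a small sketch. The first function mimics an "average" roll-up across the country dimension; the second shows a weighted alternative that would have to be computed in the data source itself, since it is not among the aggregation types listed above. All scores and weights are made up for illustration.

```python
from collections import defaultdict
from statistics import mean

# Made-up weekly scores per country, not real Google Trends values.
rows = [
    ("2021-01-03", "US", 80),
    ("2021-01-03", "AT", 40),
    ("2021-01-10", "US", 90),
    ("2021-01-10", "AT", 30),
]


def roll_up_average(rows):
    """Compute one aggregated value per week across all countries."""
    per_week = defaultdict(list)
    for week, _country, score in rows:
        per_week[week].append(score)
    return {week: mean(scores) for week, scores in per_week.items()}


def weighted_average(rows, weights):
    """Alternative roll-up that weights each country, e.g. by population."""
    totals = defaultdict(float)
    weight_sums = defaultdict(float)
    for week, country, score in rows:
        totals[week] += score * weights[country]
        weight_sums[week] += weights[country]
    return {week: totals[week] / weight_sums[week] for week in totals}


print(roll_up_average(rows))
# Rough, illustrative weights (population in millions).
print(weighted_average(rows, {"US": 330, "AT": 9}))
```

With the plain average both weeks look identical, although the score of the far larger country rose; the weighted variant follows the larger country much more closely.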

Finally, we start the import, which can take several hours depending on the amount of data. The status of the import can also be tracked in the workspace, and individual time periods can be reloaded at any time.

Analysis and fine tuning

Once the data import is complete, we can take a first look at our results. The main goal of Metrics Advisor is to analyze and detect new anomalies – that is, to investigate whether the most recent data point is an anomaly or not. Nevertheless, historical data is also taken into consideration. Depending on the granularity, the service looks several hours to years into the past and tries to flag anomalies there as well. In our case (five years of data, weekly aggregation), the so-called Smart Detection provides results for the past six months and marks individual points in time as an anomaly (Fig. 2).

Now it is time to take a look at the suggestions: Are the identified anomalies actually relevant? Is the detection too sensitive or too tolerant? There are some ways to improve the detection rate. Let’s recall the beginning of this article: The big challenge is to define what constitutes an anomaly in the first place.

You will probably notice a prominently placed slider in the workspace very quickly. With it we can control the sensitivity. The higher the value, the smaller the area containing normal points. We also get these limits visualized within the charts as a light blue area. Sometimes it is useful not to warn at the first occurrence of an anomaly, but only when several anomalies have been detected over a period of time. We can configure Metrics Advisor to look at a certain number of points retrospectively and not consider an anomaly until a certain percentage of those points have been detected as an anomaly. For example, a brief performance problem should be tolerated, but if 70 percent of the readings have been detected as an anomaly in the last 15 minutes, it should be considered a problem overall.
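The "only report if enough recent points are anomalous" rule can be sketched as follows. This is a simplified illustration with hypothetical names, not the service's actual implementation.

```python
from typing import Sequence


def should_report(flags: Sequence[bool], window: int, min_ratio: float) -> bool:
    """Report only if at least `min_ratio` of the last `window` points
    were individually flagged as anomalies."""
    recent = list(flags[-window:])
    if len(recent) < window:
        return False  # wait until the window is full
    return sum(recent) / window >= min_ratio


# 15 one-minute readings; True marks a point flagged as an anomaly.
brief_glitch = [False] * 13 + [True, True]
sustained = [False] * 4 + [True] * 11

print(should_report(brief_glitch, window=15, min_ratio=0.70))  # False
print(should_report(sustained, window=15, min_ratio=0.70))     # True
```

Two flagged readings out of 15 are tolerated, while 11 out of 15 (about 73 percent) cross the 70 percent mark and are reported.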

Depending on the use case, it may make sense to supplement Smart Detection with manual rules. A Hard Threshold defines a lower limit, an upper limit or a range of values that should be considered an anomaly. The Change Threshold covers the case mentioned earlier: a percentage change relative to one or more preceding points is evaluated as an anomaly. How the individual rules are linked (AND/OR) also influences the detection: for example, a point should only count as an anomaly if Smart Detection flags it and the value is above 30. We can compose and name several of these configurations. In addition, it is possible to store special rules for individual dimensions.
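Linking rules with AND or OR boils down to simple boolean logic. In the following sketch, `smart_flag` is a stand-in for the result of Smart Detection, and the function name and parameters are hypothetical.

```python
def is_anomaly(value: float, smart_flag: bool, threshold: float = 30.0,
               operator: str = "and") -> bool:
    """Combine a Smart Detection result with a hard upper threshold."""
    above_threshold = value > threshold
    if operator == "and":
        return smart_flag and above_threshold
    if operator == "or":
        return smart_flag or above_threshold
    raise ValueError(f"unknown operator: {operator!r}")


print(is_anomaly(45.0, smart_flag=True))                   # True: both rules agree
print(is_anomaly(12.0, smart_flag=True))                   # False: value too low
print(is_anomaly(45.0, smart_flag=False, operator="or"))   # True: one rule is enough
```

An AND link makes the detection stricter (fewer anomalies), while an OR link makes it more sensitive.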

Depending on our settings, more or fewer anomalies are detected in the data, and Metrics Advisor then tries to group them into so-called incidents. An incident can consist of a single anomaly, but it is often made up of several related anomalies, so that entire time periods are listed under a common incident. Tools are available in the Incident Hub for closer examination: We can filter the incidents found (by time, criticality, and dimension), start an initial automatic root cause analysis (see “Root cause” in Fig. 3), and drill down through multiple dimensions to gather insights.
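How the service decides which anomalies belong together is not covered here. As a rough mental model only, one can think of neighbouring flagged points being merged into one time range. The following sketch does just that, with a hypothetical `max_gap` parameter that is an assumption of this example and not a Metrics Advisor setting.

```python
from typing import List, Sequence, Tuple


def group_into_incidents(flags: Sequence[bool],
                         max_gap: int = 0) -> List[Tuple[int, int]]:
    """Merge flagged indices into (start, end) ranges. Flagged points that
    are separated by at most `max_gap` normal points share one range."""
    incidents: List[Tuple[int, int]] = []
    for index, flagged in enumerate(flags):
        if not flagged:
            continue
        if incidents and index - incidents[-1][1] <= max_gap + 1:
            # Close enough to the previous range: extend it.
            incidents[-1] = (incidents[-1][0], index)
        else:
            incidents.append((index, index))
    return incidents


weekly_flags = [False, True, True, False, False, True, False, True]
print(group_into_incidents(weekly_flags))             # [(1, 2), (5, 5), (7, 7)]
print(group_into_incidents(weekly_flags, max_gap=1))  # [(1, 2), (5, 7)]
```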

Feedback

Perhaps the greatest benefit of using artificial intelligence for anomaly detection is the ability to learn through feedback. Even if sensitivity and thresholds have been set well, the service will sometimes get it wrong. For exactly these data points, feedback can be provided via the API or the portal: Where was an anomaly incorrectly detected? Where was an anomaly missed? The service accepts this feedback and tries to classify similar cases more accurately in the future. The service also tries to recognize recurring time periods on its own, and we can correct it by marking a time range and reporting it as a period.

For predictable anomalies that have temporal reasons (holidays, weekends, cyclically recurring events), there are separate options for configuration. These should therefore not be reported subsequently as feedback, but stored as so-called preset events.

Alerts

We should now be at a point where data is imported regularly and anomaly detection hopefully works reliably. However, the best detection is of no use if we learn about it too late. Therefore, Alert Configurations should be set up to actively notify about anomalies. Currently, there are three channels to choose from: via email, as a WebHook, or as a ticket in Azure DevOps. The WebHook variant in particular offers exciting possibilities for integration: we can display the detected anomalies in our own application or trigger a workflow using Azure Logic Apps. Perhaps we simply restart the affected web app as a first automated action.

Snooze settings also seem handy; an alert can automatically ensure that no more alerts are sent for a configurable period of time afterwards. This avoids waking up in the morning with 500 emails in your inbox, all with the same content.
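The effect of a snooze setting can be illustrated with a few lines of Python. This is a sketch of the general idea with hypothetical names; it says nothing about how the service implements snoozing internally.

```python
from datetime import datetime, timedelta
from typing import Optional


class SnoozingAlerter:
    """Suppress further alerts for a fixed period after one was sent."""

    def __init__(self, snooze: timedelta) -> None:
        self.snooze = snooze
        self.last_sent: Optional[datetime] = None

    def notify(self, now: datetime) -> bool:
        """Return True if an alert is sent, False if it is suppressed."""
        if self.last_sent is not None and now - self.last_sent < self.snooze:
            return False
        self.last_sent = now
        return True


alerter = SnoozingAlerter(snooze=timedelta(hours=4))
start = datetime(2021, 1, 1, 2, 0)

print(alerter.notify(start))                          # True: first alert goes out
print(alerter.notify(start + timedelta(minutes=30)))  # False: still snoozed
print(alerter.notify(start + timedelta(hours=4)))     # True: snooze period is over
```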

Summary

Metrics Advisor provides an exciting and easy entry into the world of anomaly detection for time-based data. Long-time data scientists may prefer other ways and means (and may be interested in the paper at [4]), but for application developers who want to run their first experiments with suitable data, this service is a potent gateway drug. The preview status currently shows mainly in the web portal and in the still patchy documentation of the REST API; however, good conceptual documentation is already available.

Have fun trying it out and experimenting with your own data sources.

Links & Literature

[2] https://docs.microsoft.com/en-us/azure/cognitive-services/metrics-advisor/

[3] https://metricsadvisor.azurewebsites.net

[4] https://arxiv.org/abs/1906.03821