We’ve all been there: we’ve spent weeks or even months working on our ML model. We collected and processed data, tested different model architectures, and spent a lot of time fine-tuning our model’s hyperparameters. Our model is ready! Maybe we need a few more tweaks to improve performance more, but it’s ready for the real world. Finally, we put our model into production, and sit back and relax. Three weeks later, we get an angry call from our customer because our model makes predictions that don’t have anything to do with reality. A look at the log reveals no errors. In fact, everything still looks good.

However, since we haven’t established continuous monitoring for our model, we don’t know if and when our model’s predictions change. We have to hope that they’ll always be just as good as on day one. But if our infrastructure is made out of only duct tape and hope, then we’ll find lots of errors only in production.

What is MLOps, anyway?

We want to create infrastructure and processes that combine the development of machine learning systems with the system’s operation. That’s the goal that MLOps is pursuing. This is closely interwoven with DevOps’ goals and definition. But the goal here isn’t just developing and operating software systems, but developing and operating machine learning systems.

As a data scientist, I have to ask myself why I should worry about the operational part of ML systems at all. Technically, my goal is just to train the best possible ML model. While this is a worthy goal, I have to keep in mind the system’s overall context and the business of the company I’m working in. About 90% of all ML models are never deployed in a production environment [1], [2]. This means they never reach a user or customer. Provocatively speaking: 90% of machine learning projects are useless for our business. In the end, a model is only useful if it generates added value for my users or processes.

Besides, when developing a machine learning system, I always keep the following quote from Andrew Ng in mind: “You deployed your model in production? Congratulations. You’re halfway done with your project.” [3]

Challenges in production

Our machine learning project isn’t over when we bring our first model into a production environment. After all, even a model that delivered excellent results in training and testing faces many challenges once it arrives in production. Perhaps the best-known challenge is a change in the distribution of the data that our model receives as input. A whole range of events can trigger this, but they’re often grouped under the term “concept drift”. The data set used to train our model is the only part of reality our model can perceive. This is one of the reasons why collecting as much data as we can is so critical for a well-functioning, robust model. When more data is available to the model, it can represent a bigger part of the real world with higher fidelity. But the training data is always just a static snapshot of reality, while the world around it is constantly changing. If our model isn’t trained with new data, it has no way to update its outdated basic assumptions about reality. This leads to a decline in the model’s performance.

How does concept drift happen? Data can change gradually. For example, sensors get less accurate over a long time due to wear and tear and show increasing deviations from the actual value. Another example of slow changes is customer behavior and customer preferences. Imagine a model that makes recommendations for products in a fashion shop. If it isn’t updated and keeps recommending winter clothes to customers in the summer, then customer satisfaction in our shop system will decrease significantly. Recurring events such as seasons or holidays can also have an impact if we want to use our model to predict sales figures. But concept drift can also happen abruptly: If COVID-19 brings global air traffic to a screeching halt, then our carefully trained models for predicting daily passenger traffic will produce poor results. Or if the sales department launches an Instagram promotion without prior notice and doubles the sales of our vitamin supplement, that’s a great result, but it’s not something our model is good at predicting.

Another challenge is both technical and organizational. In many companies and projects, there is one team developing a machine learning model (data scientists) and another team bringing the models into production and supporting them (software engineers/DevOps engineers). The data science team spends a lot of time conceptualizing and selecting model architectures, feature engineering, and training the model. When the fully trained model is handed over to the software development team, it’s often implemented again. This implementation might differ from the actual, intended implementation. Even small differences can lead to significant and unexpected effects. The separation of data science teams and software engineering teams can also lead to entirely different problems. Even if the data science team takes the model all the way to production, it’s often still used by different applications developed by other teams. These applications send input data to the model and receive predictions from it. If the data these applications send changes, whether through deliberate modifications or through errors, then the input data will no longer meet the model’s expectations.

But challenges or threats to the model can even arise outside the organization itself. While actual security problems related to ML models have been rare up until now, it’s still possible for third parties to actively try and find vulnerabilities in the model as part of adversarial attacks. This danger shouldn’t be ignored, especially in fraud detection models.

Monitoring: DevOps vs. MLOps

To address the challenges a production model faces, we must first be aware of them. For this, we need monitoring. There are two approaches for monitoring traditional production software. First, we monitor business metrics (KPIs, key performance indicators). These can be metrics such as customer satisfaction, revenue, or how long customers stay on our site. Second, we monitor service and infrastructure metrics to gain comprehensive insights into the state of our system. We also collect these metrics when we monitor a machine learning system. But this still isn’t sufficient. Unlike conventional software systems, ML systems behave partly non-deterministically, and their performance depends significantly on the distribution of input data and the time of the most recent training. We need to collect further data. Because model hyperparameters, input feature selection, and architecture are not determined during deployment, but already in the training pipeline, the smallest error can lead to radically different system behavior that traditional software testing wouldn’t catch. This is especially true in systems where models are constantly iterated and improved upon.

Monitoring for ML systems

First, we need to gather the traditional metrics that we’d monitor for any other software system. There are well-established best practices here. Google’s site reliability engineering manual [4] can serve as a reference. I’d like to mention the following as examples of DevOps metrics:

- Latency: How long does our model take to predict a new value? How long does our preprocessing pipeline take?

- Resource consumption: What are the CPU/GPU and RAM utilization of our model server? Do we need more servers?

- Events: When does the model server receive an HTTP request? When is a specific function called? When is an exception thrown?

- Calls: How often is a model requested?

For these metrics, it is worth looking at both the individual values and their trend over time. A change in either is a reason to take a closer look at our model and the whole system. Let’s imagine that we’re looking at the number of predictions per hour. If it deviates considerably in a daily or weekly comparison, by 40% for instance, then it would certainly make sense to send an e-mail to the team members involved.

In addition to these classic metrics, we also need to keep an eye on metrics specific to machine learning. In any case, we need to collect and store the predictions of the model. Both the individual predicted values and their distribution are of interest. If our system predicts numerical values, we have to check their numerical stability. If it predicts “NaN” (not a number) or “infinity”, then this might warrant alerting our data scientists. Does your model that should predict tomorrow’s user number return “-15” or “cat”? Then something is very wrong.
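Both checks described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the 40% default threshold, and the valid range for predictions are hypothetical choices, and a real system would route the results to an alerting channel instead of returning them.

```python
import math


def call_volume_deviates(current: int, baseline: int, threshold: float = 0.4) -> bool:
    """Return True if the call count differs from the baseline by more than `threshold`.

    `baseline` is assumed to be the count from the same hour of the previous
    day or week; the 40% default is only an example value.
    """
    if baseline == 0:
        return current > 0
    return abs(current - baseline) / baseline > threshold


def find_invalid_predictions(predictions, lower=0.0, upper=math.inf):
    """Return (index, value) pairs for predictions that should trigger an alert.

    A prediction is flagged if it is not a number, is NaN or infinite,
    or lies outside the assumed valid range [lower, upper].
    """
    invalid = []
    for i, value in enumerate(predictions):
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            invalid.append((i, value))
        elif math.isnan(value) or math.isinf(value):
            invalid.append((i, value))
        elif not lower <= value <= upper:
            invalid.append((i, value))
    return invalid


if __name__ == "__main__":
    print(call_volume_deviates(current=550, baseline=1000))
    print(find_invalid_predictions([120.0, float("nan"), -15, "cat"]))
```

With a lower bound of 0, a predicted user number of -15 is flagged in the same way as a NaN or the string “cat”.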

But the distribution of predictions is also of interest. Does the distribution match our expected values? We should also check what the minima and maxima of our predicted values are. Are they within an expected, reasonable range? What do the median, mean, and standard deviation look like over an hour or a day? If we find a large discrepancy between the distribution of predicted classes and the distribution of observed classes, then we have a prediction bias. In that case, the label distribution of our training data (the values to be predicted) may differ from the distribution in the real world. This is a clear sign that we need to re-train our model.
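As a minimal sketch of these checks, the following Python snippet computes summary statistics for one time window of predictions and a simple prediction bias for a classifier. The function names are hypothetical, and the bias is defined here as the difference between the predicted and the observed share of one class, which is only one of several possible definitions.

```python
import statistics


def summarize_predictions(values):
    """Summary statistics for one time window (e.g. an hour) of numeric predictions."""
    return {
        "min": min(values),
        "max": max(values),
        "mean": statistics.fmean(values),
        "median": statistics.median(values),
        # The sample standard deviation needs at least two values.
        "stdev": statistics.stdev(values) if len(values) > 1 else 0.0,
    }


def prediction_bias(predicted_labels, observed_labels, positive=1):
    """Predicted share of the positive class minus its observed share."""
    predicted_rate = sum(1 for y in predicted_labels if y == positive) / len(predicted_labels)
    observed_rate = sum(1 for y in observed_labels if y == positive) / len(observed_labels)
    return predicted_rate - observed_rate


if __name__ == "__main__":
    print(summarize_predictions([10.0, 12.0, 14.0]))
    print(prediction_bias([1, 1, 1, 0], [1, 0, 0, 0]))
```

A bias close to zero means the model predicts the class about as often as it actually occurs; a large positive or negative value is the discrepancy described above.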

When we monitor our model’s outputs, it’s only logical that we monitor the inputs too. What data does our model receive? We can determine the distributions and statistical key figures. Naturally, this is easier for structured data. But we can also determine various characteristic values for texts or images. For example, we can look at the length of texts or even word distributions within them. For images, we can capture their size or maybe even their brightness values. This can also help us to find errors in our pipeline and data pre-processing. Monitoring input also makes it possible to infer problems in data sources. For example, some columns in the database might no longer be populated with data due to a bug. The data’s definition or format in some columns might change, or the columns could be renamed. If we don’t notice these changes, then our models will still assume the original definitions and formats. To avoid this, we need to monitor the distribution of values of each feature extracted from a database table for a significant shift. You can detect a shift in the distribution of input data and predictions by applying statistical tests like the chi-square test or the Kolmogorov-Smirnov test. If the tests detect a significant deviation in the distribution of the data, this may be an indicator of a change in the data structures. In that case, the model needs to be re-trained.
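To make the Kolmogorov-Smirnov test more concrete, here is a minimal pure-Python sketch of the two-sample variant for a single numeric feature. It compares the statistic against the usual large-sample critical value; the function names and the significance level of 0.05 are assumptions made for this example. In practice, a library routine such as `scipy.stats.ks_2samp` would be the more common choice.

```python
import math
from bisect import bisect_right


def ks_statistic(baseline, production):
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    a, b = sorted(baseline), sorted(production)
    gap = 0.0
    # The empirical CDFs only change at observed values, so it is
    # enough to evaluate both of them at every data point.
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


def drift_detected(baseline, production, alpha=0.05):
    """Flag drift if the statistic exceeds the large-sample critical value for `alpha`."""
    n, m = len(baseline), len(production)
    critical = math.sqrt(-math.log(alpha / 2) / 2) * math.sqrt((n + m) / (n * m))
    return ks_statistic(baseline, production) > critical


if __name__ == "__main__":
    training = [float(x) for x in range(100)]
    shifted = [x + 50.0 for x in training]
    print(ks_statistic(training, shifted), drift_detected(training, shifted))
```

The critical value is an approximation that works best for larger samples, so for small windows of production data the result should be read with caution.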

There are a few things to keep in mind when monitoring input data. If you’re working with particularly large data, it isn’t feasible to record it completely. In this case, it might be more practical to process the input independently of the model first using deterministic code, and then log a preprocessed and compressed version of the input data. Of course, you must take special care to create and test the preprocessing. We also need to use identical preprocessing during training and in the production environment. Otherwise, there could be major discrepancies between the training data and real data. Caution is also necessary when processing particularly sensitive business data or personal data. Naturally, we want to collect as little sensitive data as we can. But we also need to collect data to improve and debug the models. This opens up a large range of interesting problems. There are some exciting approaches for solving these challenges, such as Differential Privacy [5] for Machine Learning.
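One way to log a compact version of the input is to reduce each input to a few deterministic characteristic values. The following sketch does this for text input; the function names and the selected fields are hypothetical and only illustrate the idea. Note that a hash is not an anonymization technique: short or predictable inputs can be recovered by guessing, so it is used here only to recognize identical inputs.

```python
import hashlib
import json


def summarize_text_input(text: str) -> dict:
    """Deterministic, compact summary of a text input that avoids storing the raw text."""
    tokens = text.split()
    mean_length = round(sum(len(t) for t in tokens) / len(tokens), 2) if tokens else 0.0
    return {
        "sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
        "n_chars": len(text),
        "n_tokens": len(tokens),
        "mean_token_length": mean_length,
    }


def to_log_line(text: str) -> str:
    """Serialize the summary as one JSON line with a stable key order."""
    return json.dumps(summarize_text_input(text), sort_keys=True)


if __name__ == "__main__":
    print(to_log_line("winter coat sale"))
```

Because the function is deterministic and independent of the model, the same code can be run over the training data to obtain comparable values.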

Finally, I’d like to mention one area that’s very easy to monitor, but still gets neglected often: tracking model versions. In our machine learning system, we must be able to always know which model is currently active in the production environment. Not only do we need to version our models, but in the best case, we also should be able to trace the complete experimental history that led to our model’s creation. We can use a tool like MLflow [6] for this. Even though there are some approaches that help debug machine learning models, right now deep learning models should be considered black boxes when it comes to the explainability and traceability of predictions. To shed some light on this, we need to be able to understand which features and data were used to train the model. Was there a bias introduced during training? Did someone mislabel data? Is there a bug in our data cleaning pipeline? These are all things that, in the worst case, we only discover in production. To have any chance of debugging in these cases, we need to version not just the model itself, but everything that led to its creation, monitor the currently active versions and make them transparent to all stakeholders. We use tools like DVC [7] or Feast [8] to track our data or features. But it’s also important that we version and test code in the data pipeline that we use to collect and process data.
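A simple way to make the active version visible is to attach version information to every logged prediction. The sketch below is an illustration only: the class, its fields, and the example values are hypothetical and do not correspond to the API of MLflow, DVC, or Feast.

```python
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ModelVersion:
    """Everything needed to trace a prediction back to how the model was built."""
    model_name: str
    model_version: str
    training_data_version: str
    pipeline_commit: str


def prediction_record(version: ModelVersion, prediction: float) -> dict:
    """Build a log record that carries the version information with the prediction."""
    record = asdict(version)
    record["prediction"] = prediction
    record["logged_at"] = datetime.now(timezone.utc).isoformat()
    return record


if __name__ == "__main__":
    active = ModelVersion("passenger-forecast", "1.4.0", "2021-03-01", "abc1234")
    print(prediction_record(active, 1250.0))
```

If every record carries these fields, a suspicious prediction can be traced to a specific model, data set, and pipeline state without guessing what was deployed at the time.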

The toolbox

There are many specialized tools in the field of MLOps, like Sacred [9] for managing experiments or Kubeflow [10] for training models and managing data pipelines. But for monitoring machine learning systems, we can use the DevOps toolbox. We’ll use Elasticsearch [11], a classic tool from the Elastic Stack, to capture, collect, and evaluate logs and input data. Elasticsearch is a document-oriented search and analytics engine that is commonly used to store logs from applications or containers. These logs can be visualized with Kibana [12] and used for error diagnosis.

For MLOps, we use Elasticsearch not just for logs, but to also store our model’s pre-processed inputs for further processing. We can also store transaction data and contexts for our users’ actions in Elasticsearch. In addition to basic data like timestamps and accessed pages, this can also be explicit actions from users. Both the interactions of our users performed before the model delivered them a prediction and their reactions to the predictions are of interest. We can use the time series store Prometheus [13] together with the visualization tool Grafana [14] to capture operational metrics and model predictions.

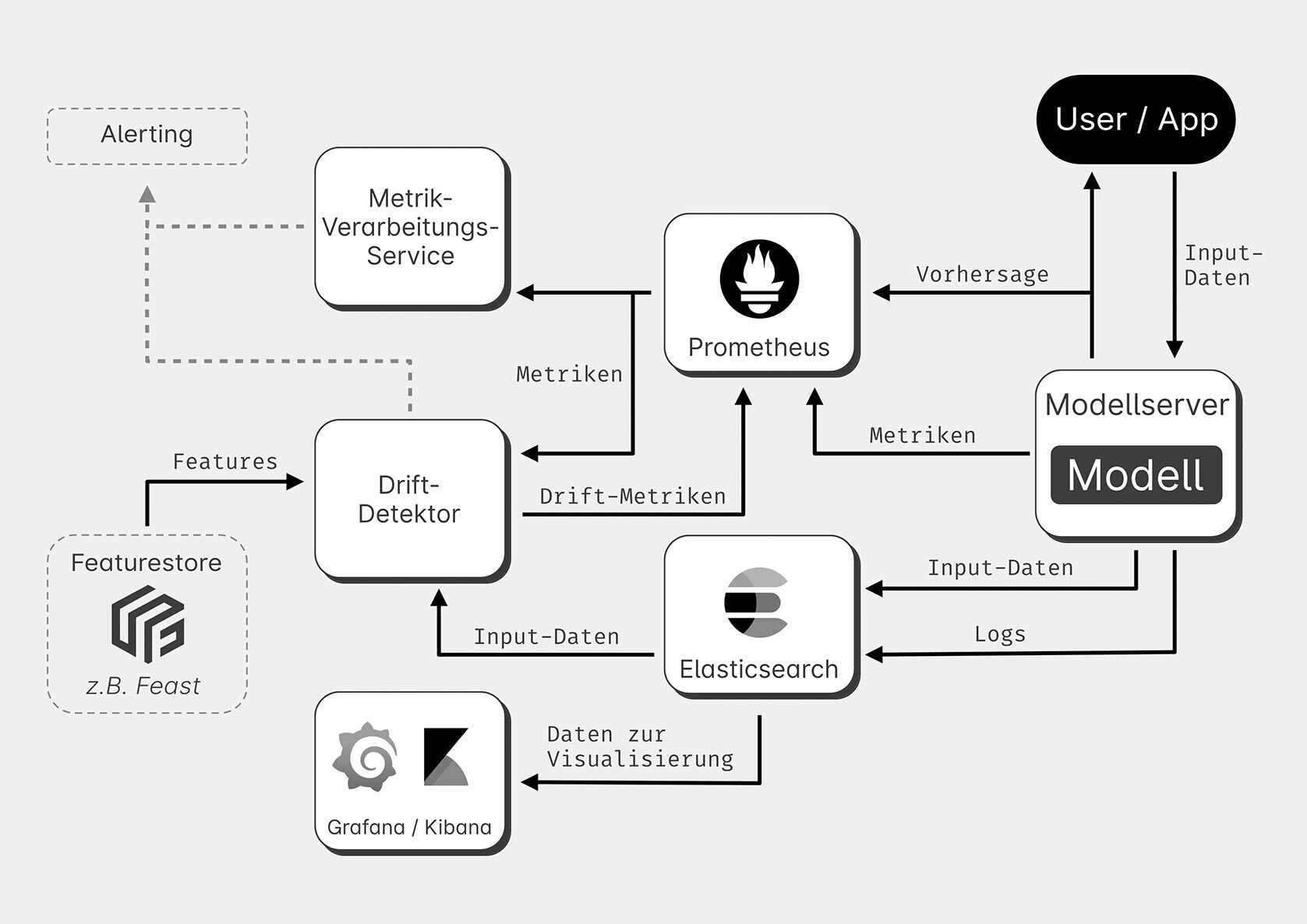

Fig. 1: Example architecture for monitoring ML systems

What can an architecture for monitoring ML systems look like? (Fig. 1) Our model is made available via a model server. This can be either a custom software solution or a standard tool like Seldon Core [15]. Input data is delivered to the model server as usual. But at the same time, it is also logged in Elasticsearch and visualized with Grafana or Kibana if required. Application logs and error messages from our model server are also stored in Elasticsearch. Now our model performs inferences and calculates predictions. These are returned to the requesting applications or users. Predictions are also stored in Prometheus. Standard metrics such as utilization, inference duration, and confidence are stored there too. These can also be visualized with Grafana or Kibana.

We’ll provide our own microservice for statistical analysis to collect statistical data such as distributions and standard deviations. It pulls data from Elasticsearch and Prometheus, performs analysis, and returns the data to Prometheus. If there are major deviations, then our microservice can send notifications to our team. In parallel, we can provide a drift detector as a second microservice, which handles detecting data drift and concept drift. For this, it obtains the model’s input and output data from Elasticsearch and Prometheus. To compare the production data to a baseline, we need to make the model’s training data available to the microservice. Ideally, we’ll provide this via a feature store like Feast. Metrics on drift are also stored in Prometheus. In this example, the drift detector and the service for statistically evaluating metrics are custom developments, since the tool selection in this category is currently very sparse.

An evolutionary approach

Of course, this is just an example architecture, because building machine learning monitoring infrastructures and MLOps pipelines in general always needs to be an evolutionary process. Deployment cannot happen with a one-off, big-bang approach. Each company has its own unique set of challenges and needs when developing machine learning strategies. Furthermore, there are different users in machine learning systems, different organizational structures, and existing application landscapes.

One possible approach here could be to implement an initial prototype without ML components. After that, you should start building the infrastructure and the simplest models, which you continuously monitor. The big advantage of this strategy is that you can start collecting input data early, and especially ground truth labels, i.e., the real, correct results. It is fairly common for ground truth labels to become available a long time after the prediction was made. For example, if we want to predict a company’s quarterly results, then they will only be available after three months. Therefore, you should start collecting data and labels early.
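Since labels arrive late, predictions have to be stored with a key that allows them to be matched to the ground truth later. The following sketch assumes that predictions and labels are kept in dictionaries keyed by a shared identifier, here a quarter; the function names and the data are made up for illustration.

```python
def join_with_ground_truth(predictions: dict, labels: dict):
    """Pair each stored prediction with its label once the label has arrived.

    Returns the matched (prediction, label) pairs and the keys still waiting for a label.
    """
    matched = {key: (predictions[key], labels[key]) for key in predictions if key in labels}
    pending = sorted(key for key in predictions if key not in labels)
    return matched, pending


def mean_absolute_error(matched: dict) -> float:
    """Average absolute difference between predictions and their labels."""
    return sum(abs(p - y) for p, y in matched.values()) / len(matched)


if __name__ == "__main__":
    predictions = {"2021-Q1": 10.0, "2021-Q2": 12.0, "2021-Q3": 11.0}
    labels = {"2021-Q1": 9.0, "2021-Q2": 14.0}  # the Q3 result is not known yet
    matched, pending = join_with_ground_truth(predictions, labels)
    print(mean_absolute_error(matched), pending)
```

The list of pending keys shows how much of the recent traffic cannot be evaluated yet, which is useful to know before trusting a quality metric.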

With the help of your infrastructure, collected data, and simple models, you can begin moving towards more complicated models in small, incremental steps. After that, you can lift these into a production environment until you have achieved your desired level of predictive accuracy and performance. By doing this, your monitoring infrastructure gives you a constant overview of how your model is performing, and when it’s time to roll out a new model. And you can also determine when you have to roll back your model to its previous state after finding a bug in your feature pipeline that slipped into production.

Links & Literature

[1] https://www.redapt.com/blog/why-90-of-machine-learning-models-never-make-it-to-production

[2] https://venturebeat.com/2019/07/19/why-do-87-of-data-science-projects-never-make-it-into-production/

[3] A Chat with Andrew on MLOps: https://www.youtube.com/watch?v=06-AZXmwHjo

[4] https://landing.google.com/sre/book.html

[5] Deep Learning with Differential Privacy: https://arxiv.org/abs/1607.00133

[7] https://dvc.org

[9] https://github.com/IDSIA/sacred

[10] https://www.kubeflow.org/

[11] https://www.elastic.co/de/elasticsearch/

[12] https://www.elastic.co/de/kibana/

[14] https://grafana.com