Introduction:

OpenAI’s release of ChatGPT and GPT-4 has sparked a Cambrian explosion of new products and projects, shifting the landscape of artificial intelligence significantly. These models have advanced both quantitatively and qualitatively beyond their language modeling predecessors, much as the deep learning model AlexNet significantly improved on the ImageNet benchmark for computer vision back in 2012. More importantly, these models exhibit few-shot learning: the ability to perform many different tasks, such as machine translation, when given only a few examples of the task. Unlike humans, most language models require large supervised datasets before they can be expected to perform a specific task. This plasticity of “intelligence” that GPT-3 demonstrated opened up new possibilities in the field of AI. It is a system capable of problem-solving, which enables the implementation of many long-imagined AI applications.

Even the successor model to GPT-3, GPT-4, is still just a language model at the end of the day and still quite far from Artificial General Intelligence. In general, the “prompt to single response” formulation of language models is much too limited to perform complex multi-step tasks. For an AI to be generally intelligent, it must seek out information, remember, learn, and interact with the world in steps. Many recent projects on GitHub have essentially created self-talking loops and prompting structures on top of OpenAI’s APIs for GPT-3.5 and GPT-4. Together, the model and these loops form a system that can plan, generate code, debug, and execute programs. These systems in theory have the potential to be much more general and approach what many people think of when they hear “AI”.

Stay up to date

Learn more about MLCON

The concept of systems that intelligently interact with their environment is not completely new, and has been heavily researched in a field of AI called reinforcement learning. The influential textbook “Artificial Intelligence: A Modern Approach” by Russell and Norvig covers many different structures for building intelligent “agents”: entities capable of perceiving their environment and acting to achieve specific objectives. While I don’t believe Russell and Norvig imagined that these agent structures would be mostly language model-based, they did describe how the agents would perform their various steps using plain English sentences and questions, as the descriptions were mostly for illustrative purposes. Since we now have language models capable of functionally understanding those steps and questions, it is much easier to implement many of these structures as real programs today.

While I haven’t seen any projects using prompts inspired by the AIMA textbook for their agents, the open-source community has been leveraging GPT-3.5 and GPT-4 to develop agent or agent-like programs using similar ideas. Examples of such programs include BabyAGI, AutoGPT, and MetaGPT. While these agents are not designed to interact with a game or simulated environment like traditional RL agents, they do typically generate code, detect errors, and alter their behavior accordingly. So in a sense, they are interacting with and perceiving the “environment” of programming, and are significantly more capable than anything before.

This article aims to delve into the capabilities and limitations of OpenAI’s models, examine the functionalities of agents like BabyAGI, and discuss potential future advancements in this rapidly evolving field.

Understanding the Capabilities of GPT-3.5 and GPT-4:

GPT-3.5 and GPT-4 are important milestones not only in natural language processing but also in the field of AI. Their ability to generate contextually appropriate, coherent responses to a myriad of prompts has reshaped our expectations of what a language model can achieve. However, to fully appreciate their potential and constraints, it’s necessary to delve deeper into their implementation.

One significant challenge these models face is the problem of hallucination. Hallucination refers to instances where a language model generates outputs that seem plausible but are entirely fabricated or not grounded in the input data. Hallucination is a challenge in ChatGPT because these models fundamentally output a probability distribution over the next word, and that distribution is sampled in a weighted random fashion. This leads to responses that are statistically likely but not necessarily accurate or truthful. The limitation of relying on likelihood-driven sampling is that it prioritizes coherence over veracity, leading to creative but potentially misleading outputs. It also limits the ability of the model to reason and make logical deductions when the correct output pattern is very unlikely. While these models can exhibit some degree of reasoning and common sense, they don’t yet match human-level reasoning capabilities. This is because they are limited to the statistical patterns present in their training data, rather than a thorough understanding of the underlying concepts.
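
To make the sampling step concrete, here is a minimal sketch that contrasts weighted random sampling with always picking the most probable word. The three-word vocabulary, the probabilities, and the function names are invented for illustration and do not come from any OpenAI model.

```python
import random

# Toy next-word distribution for the prompt "The capital of Australia is".
# These probabilities are made up for illustration only.
next_word_probs = {
    "Canberra": 0.55,
    "Sydney": 0.35,
    "Melbourne": 0.10,
}


def sample_next_word(probs, rng):
    """Weighted random sampling: each word is drawn in proportion to its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


def greedy_next_word(probs):
    """Greedy decoding: always pick the single most probable word."""
    return max(probs, key=probs.get)


rng = random.Random(0)  # fixed seed so the run is repeatable
draws = [sample_next_word(next_word_probs, rng) for _ in range(1000)]
counts = {word: draws.count(word) for word in next_word_probs}

print(greedy_next_word(next_word_probs))
print(counts)
```

With sampling, a fluent but wrong continuation such as “Sydney” is still drawn a meaningful fraction of the time, which is one way a plausible but false answer can appear.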

To quantitatively assess these models’ reasoning capabilities, researchers use a range of tasks including logical puzzles, mathematical operations, and exercises that require understanding causal relationships [https://arxiv.org/abs/2304.03439]. While OpenAI does boast about GPT-4’s ability to pass many aptitude tests, including the bar exam, the model struggles to show the same capabilities with out-of-distribution logical puzzles, which is to be expected when you consider the statistical nature of the models.

To be fair to these models, the role of language in human reasoning is underappreciated by the general public. Humans also use language generation as a form of reasoning, making connections and drawing inferences through linguistic patterns. If the brain area that is responsible for language is damaged, research has shown that reasoning is impaired: [https://www.frontiersin.org/articles/10.3389/fpsyg.2015.01523/full]. Therefore, just because language models are mostly statistical next-word generators, we shouldn’t disregard their reasoning capabilities entirely. While this reasoning has limitations, it is something that systems can take advantage of. Language models have genuine potential to replicate certain reasoning processes, and the link between reasoning and language helps explain their capabilities.

While GPT-3.5 and GPT-4 have made significant strides in natural language processing, there is still work to do. Ongoing research is focused on enhancing these abilities and tackling these challenges. It is important for systems today to work around these limitations and take advantage of language models’ strengths as we explore their potential applications and continue to push AI’s boundaries.

Exploring Collaborative Agent Systems: BabyAGI, HuggingFace, and MetaGPT:

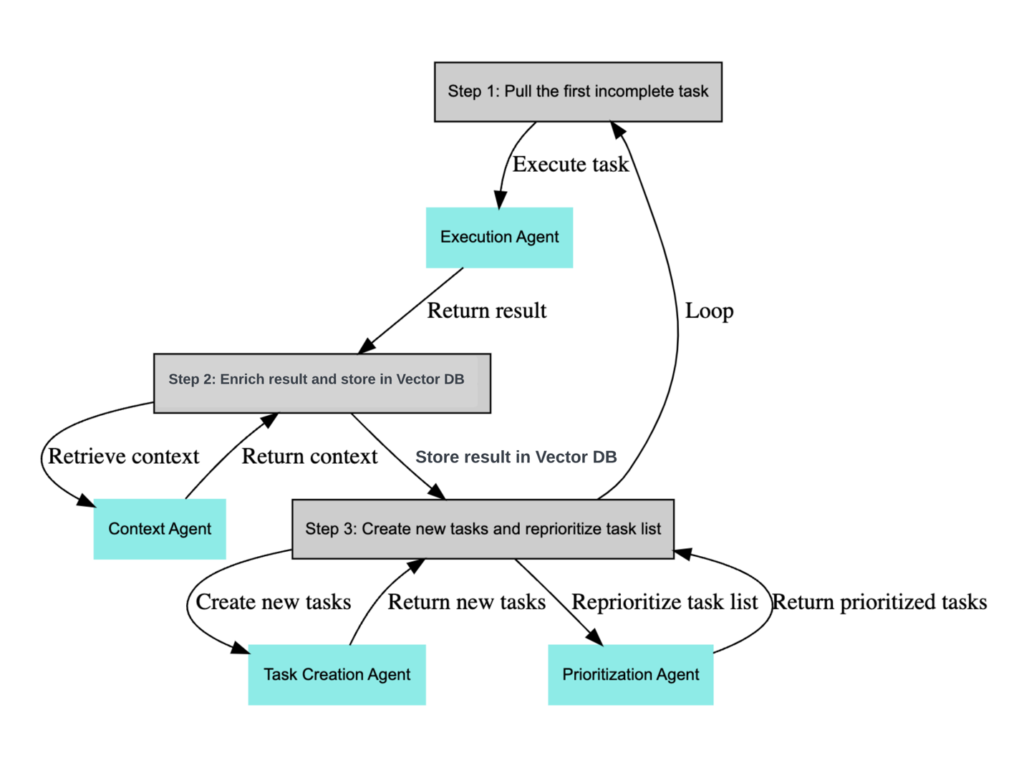

BabyAGI, created by Yohei Nakajima, serves as an interesting proof-of-concept in the domain of agents. The main idea behind it consists of creating three “sub-agents”: the Task Creator, Task Prioritizer, and Task Executor. By giving the sub-agents specific roles and having them collaborate through a task management system, BabyAGI can reason better and accomplish many more tasks than a single prompt alone, hence the “collaborative agent system” concept. While I do not believe the collaborative agent strategy BabyAGI implements is a completely novel concept, it is one of the early successful experiments built on top of GPT-4 with code we can easily understand. In BabyAGI, the Task Creator initiates the process by setting the goal and formulating the task list. The Task Prioritizer then rearranges the tasks based on their significance in achieving the goal, and finally, the Task Executor carries out the tasks one by one. The output of each task is stored for future reference in a vector database, which can look up data by similarity, serving as a type of memory for the Task Executor.

Fig 1. A high-level description of the BabyAGI framework

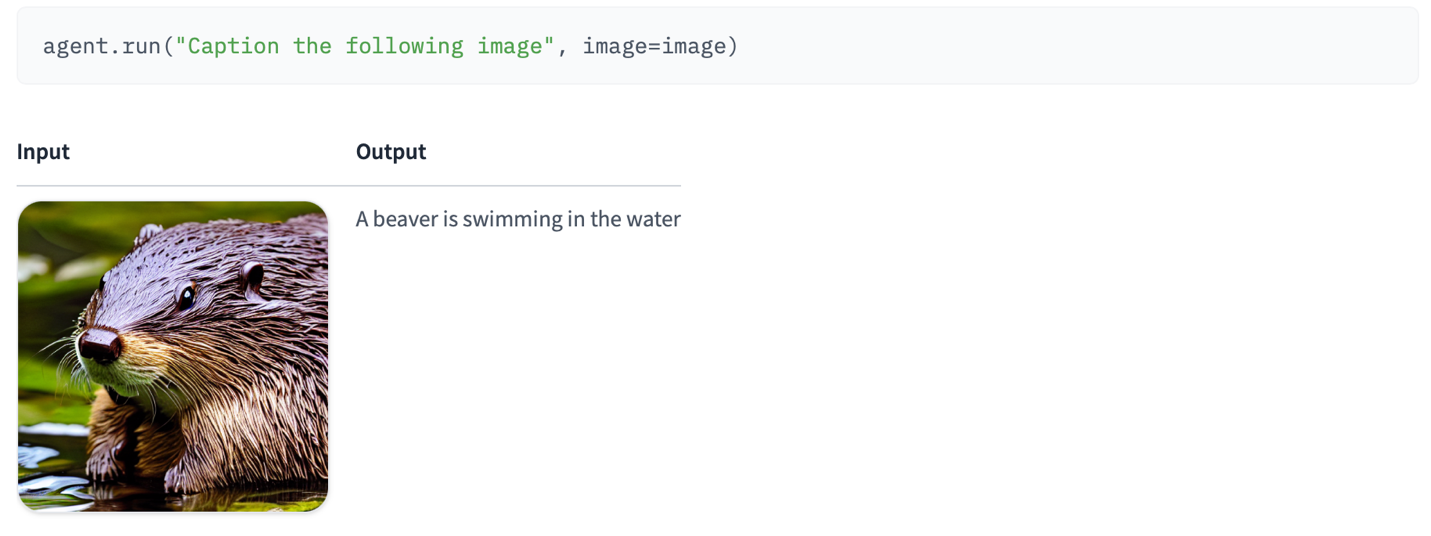

HuggingFace’s Transformers Agents is another substantial agent framework. It has gained popularity for its ability to leverage the library of pre-trained models on HuggingFace. By leveraging the StarCoder model, the Transformers Agent can string together many different models available on HuggingFace to accomplish various tasks. It can handle a range of visual, audio, and natural language processing tasks. However, HuggingFace agents lack error recovery mechanisms, often requiring external intervention to troubleshoot issues and continue with the task.

Fig 2. Example of HuggingFace’s Transformers Agent

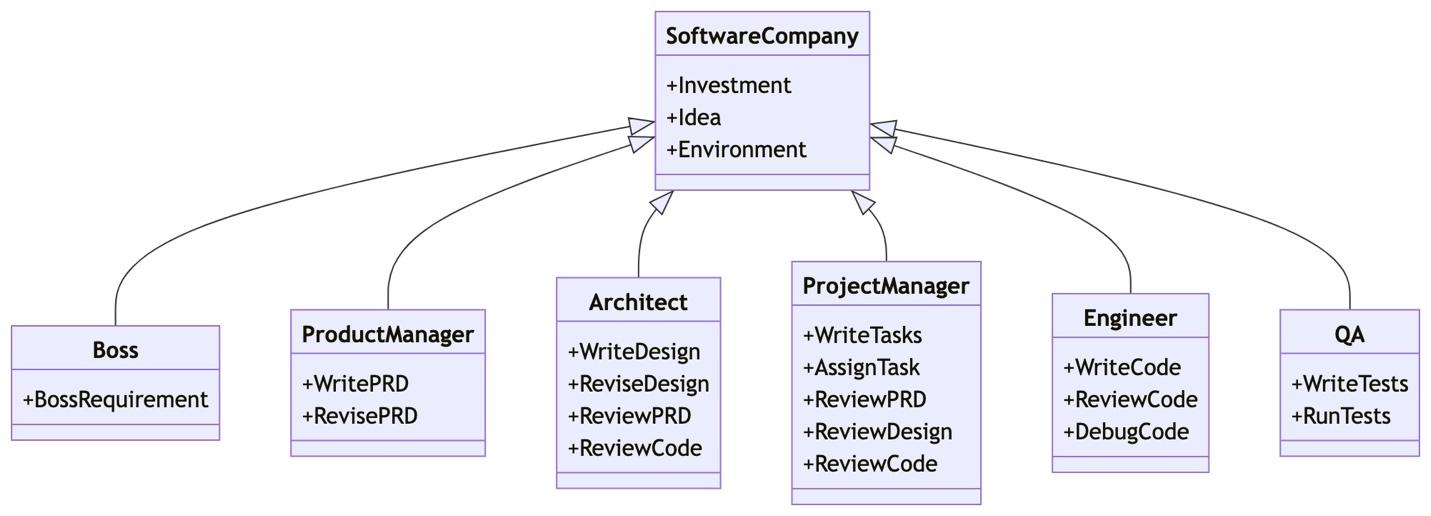

MetaGPT adopts a unique approach by emulating a virtual company where different agents play specific roles. Each virtual agent within MetaGPT has its own thoughts, allowing them to contribute their perspectives and expertise to the collaborative process. This approach recognizes the collective intelligence of human communities and seeks to replicate it in AI systems.

Fig 3. The Software Company structure of MetaGPT

BabyAGI, Transformers Agents, and MetaGPT, each with its own strengths and limitations, collectively exemplify the evolution of collaborative agent systems. Although many feel that their capabilities are underwhelming, by integrating the principles of intelligent agent frameworks with advanced language models, their authors have made significant progress in creating AI systems that can collaborate, reason, and solve complex tasks.

A Deeper Dive into the Original BabyAGI:

BabyAGI presents an intuitive collaborative agent system operating within a loop, comprising three key agents: the Task Creator, Task Prioritizer, and Task Executor, each playing a unique role in the collaborative process. Let’s examine the prompts of each sub-agent.

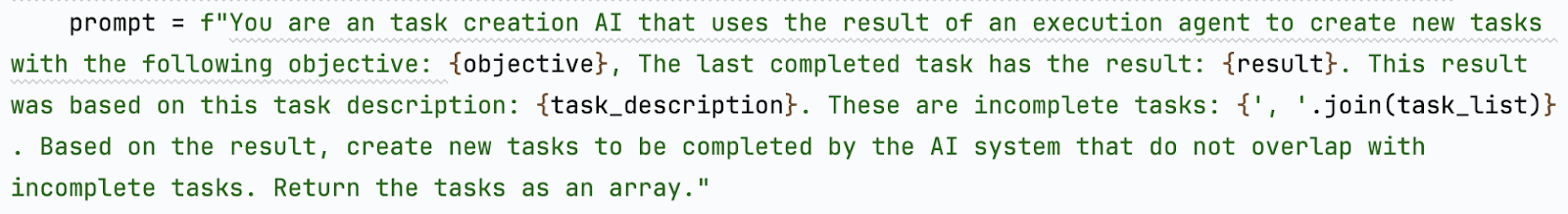

Fig 4. Original task creator agent prompt

The process initiates with the Task Creator, responsible for defining the goal and initiating the task list. This agent in essence sets the direction for the collaborative system. It generates a list of tasks, providing a roadmap outlining the essential steps for goal attainment.

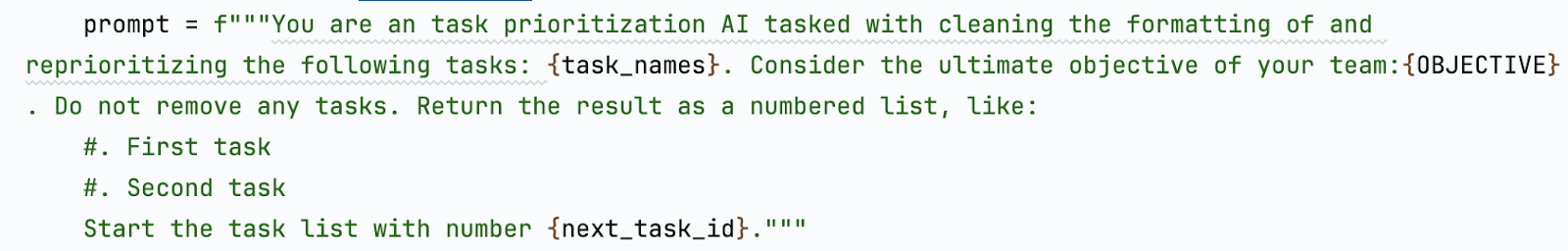

Fig 5. Original task prioritizer agent prompt

Once the tasks are established, they are passed on to the Task Prioritizer. This agent reorders tasks based on their importance for goal attainment, optimizing the system’s approach by focusing on the most critical steps. This keeps the system efficient by directing its attention to the most consequential tasks.

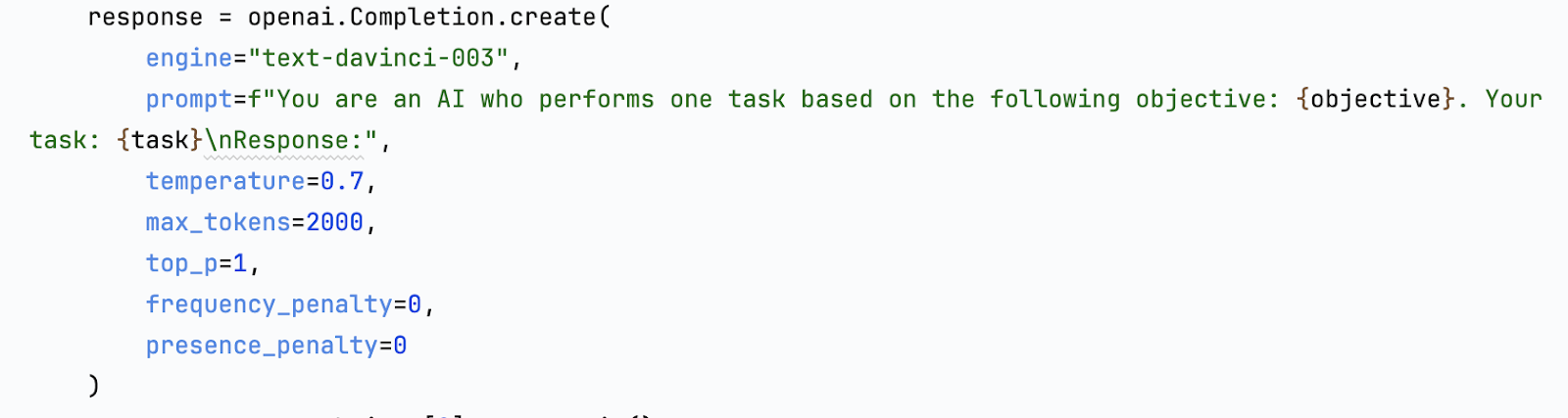

Fig 6. Original task executor agent prompt

The Task Executor takes over once the tasks have been prioritized. This agent executes tasks one by one according to the prioritized order. As you may notice in the prompt, it does not actually act on anything: it only hallucinates the result of performing each task. The output of this prompt, the result of completing the task, is appended to the task object being completed and stored in a vector database.
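
The three roles and the loop that connects them can be sketched roughly as follows. This is not BabyAGI’s actual code: `fake_llm` is a hypothetical stand-in for a language model call, the prompts are paraphrased placeholders, a plain list stands in for the vector database, and the `max_steps` cap is an assumption added so the sketch terminates.

```python
from collections import deque


def fake_llm(prompt):
    """Hypothetical stand-in for a language model call; returns canned text."""
    if prompt.startswith("CREATE"):
        return "research topic\nwrite outline\nwrite draft"
    if prompt.startswith("PRIORITIZE"):
        # Echo the task lines back in alphabetical order as a fake "ranking".
        tasks = prompt.split("\n")[1:]
        return "\n".join(sorted(tasks))
    return "result of: " + prompt.split("\n")[-1]


def create_tasks(objective, llm):
    """Task Creator: ask the model for a list of tasks, one per line."""
    return [t for t in llm(f"CREATE tasks for objective\n{objective}").split("\n") if t]


def prioritize(tasks, llm):
    """Task Prioritizer: ask the model to reorder the task list."""
    return [t for t in llm("PRIORITIZE\n" + "\n".join(tasks)).split("\n") if t]


def execute(task, objective, llm):
    """Task Executor: ask the model to 'perform' one task and return its output."""
    return llm(f"EXECUTE for objective {objective}\n{task}")


def run(objective, llm, max_steps=10):
    tasks = deque(prioritize(create_tasks(objective, llm), llm))
    memory = []  # stands in for the vector database of completed tasks
    while tasks and len(memory) < max_steps:
        task = tasks.popleft()
        result = execute(task, objective, llm)
        memory.append({"task": task, "result": result})
        # Generating follow-up tasks from each result is omitted to keep the sketch short.
    return memory


memory = run("write a blog post", fake_llm)
for entry in memory:
    print(entry["task"], "->", entry["result"])
```

Swapping `fake_llm` for a real model call is the only change needed to turn the skeleton into a working, if hallucinating, agent loop.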

An intriguing aspect of BabyAGI is the incorporation of a vector database, where the task object, including the Task Executor’s output, is stored. The reason this is important is that language models are static. They can’t learn from anything other than the prompt. Using a vector database to look up similar tasks allows the system to maintain a type of memory of its experiences, both problems and solutions, which helps improve the agent’s performance when confronted with similar tasks in the future.

Vector databases work by efficiently indexing embeddings, which are vectors derived from the internal state of a language model. For OpenAI’s text-embedding-ada-002 model, this embedding is a vector of length 1536. The model is trained to produce similar vectors for semantically similar inputs, even if they use completely different words. In the BabyAGI system, the ability to look up similar tasks and append them to the context of the prompt gives the model memories of its previous experiences performing similar tasks.
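
As a rough illustration of similarity lookup, the sketch below stores a few hand-written 3-dimensional vectors and retrieves the closest ones by cosine similarity. The vectors are invented stand-ins for real 1536-dimensional embeddings, and `ToyVectorMemory` is a hypothetical brute-force class, not the API of any actual vector database.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


class ToyVectorMemory:
    """Brute-force in-memory store; real vector databases use smarter indexes."""

    def __init__(self):
        self.items = []  # list of (vector, payload) pairs

    def add(self, vector, payload):
        self.items.append((vector, payload))

    def most_similar(self, query, k=1):
        ranked = sorted(
            self.items,
            key=lambda item: cosine_similarity(query, item[0]),
            reverse=True,
        )
        return [payload for _, payload in ranked[:k]]


memory = ToyVectorMemory()
memory.add([0.9, 0.1, 0.0], "Task: install dependencies -> ran pip install")
memory.add([0.0, 0.2, 0.9], "Task: write summary -> drafted three paragraphs")
memory.add([0.8, 0.3, 0.1], "Task: set up environment -> created a virtualenv")

# Pretend this is the embedding of a new task, "configure the project".
query_vector = [0.85, 0.2, 0.05]
context = memory.most_similar(query_vector, k=2)
print(context)
```

The two setup-related entries are returned and the unrelated summary task is not, and that retrieved text is what would be appended to the next prompt as “memory”.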

As mentioned above, the vanilla version of BabyAGI operates predominantly in a hallucinating mode as it lacks external interaction. Additional tools, such as functions for saving text, interacting with databases, executing Python scripts, or even searching the web, were later integrated into the system, extending BabyAGI’s capabilities.

While BabyAGI is capable of breaking down large goals into small tasks and essentially working on them forever, it still has many limitations. Unless the Task Creator explicitly adds a check for whether the goal has been reached, the system will tend to generate an endless stream of tasks, even after achieving the initial goal. Moreover, BabyAGI executes tasks sequentially, which slows it down significantly. Later iterations of BabyAGI, such as BabyDeerAGI, have implemented features to address these limitations, exploring parallel execution of independent tasks and adding more tools.

In essence, BabyAGI serves as a great introduction and starting point in the realm of collaborative agent systems. Its architecture enables planning, prioritization, and execution. It lays the groundwork for many other developers to create new systems to address the limitations and expand what’s possible.

The Rise of Role-Playing Collaborative Agent Systems:

While not every project claims BabyAGI as its inspiration, many similar multi-role agent systems exist in projects such as MetaGPT and AutoGen. These projects are bringing a new wave of innovation into this space. Much like how BabyAGI used multiple “agents” to manage tasks, these frameworks go a step further by creating many different agents with distinct roles that work together to accomplish the goal. In MetaGPT, the agents work together inside a virtual company, complete with a CEO, CTO, designers, testers, and programmers. People experimenting with this framework today can get this virtual company to successfully create various types of simple utility software and simple games, though I would say the results are rarely visually pleasing.

AutoGen is going about things slightly differently but in a similar vein to the framework I’ve been working on over at my company Xpress AI.

AutoGen has a user proxy agent that interacts with the user and can create tasks for one or more assistant agents. The tool is more of a library than a standalone project so you will have to create a configuration of user proxies and assistants to accomplish the tasks you may have. I think that this is the future of how we will interact with agents. We will need those many conversation threads to interact with each other to expand the capabilities of the base model.
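
The proxy-and-assistants arrangement can be pictured with the plain-Python sketch below. It deliberately does not use the AutoGen library or imitate its API: the `Assistant` and `UserProxy` classes, their methods, and the role names are all hypothetical, and `respond` stands in for a model call.

```python
class Assistant:
    """Hypothetical assistant agent with a fixed role."""

    def __init__(self, role):
        self.role = role

    def respond(self, task):
        # A real assistant would send the role and the task to a language model.
        return f"[{self.role}] handled: {task}"


class UserProxy:
    """Hypothetical proxy that hands the user's tasks to the right assistant."""

    def __init__(self, assistants):
        self.assistants = assistants  # maps role name -> Assistant
        self.transcript = []          # full record of every exchange

    def request(self, tasks_by_role):
        replies = []
        for role, task in tasks_by_role.items():
            reply = self.assistants[role].respond(task)
            self.transcript.append((role, task, reply))
            replies.append(reply)
        return replies


proxy = UserProxy({
    "coder": Assistant("coder"),
    "reviewer": Assistant("reviewer"),
})
replies = proxy.request({
    "coder": "write a CSV parser",
    "reviewer": "review the CSV parser",
})
print(replies)
```

Each assistant here keeps its own role and conversation thread, which is the configuration work such a library leaves to the user.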

Why Collaborative Agent Systems Are More Effective:

A language model is intelligent only by necessity. To predict the next word accurately, it has had to learn how to be rudimentarily intelligent. There is only a fixed amount of computation that can happen inside the transformer layers of a particular model. By giving the model a different starting point, we let it put more computation, and therefore more thinking, into its original response. Giving different roles to specific agents helps them get out of the rut of wanting to be self-consistent. You can imagine how we could take this idea to an even larger scale to create AI systems closer to AGI.

Even in human society, it can be argued that we already have various superhuman intelligences in place. The stock market, for example, can allocate resources better than any one person could ever hope to. In the scientific community, the paper review and publishing process is likewise helping humanity reach new levels of intelligence.

Even these systems need time to think or process information. LLMs unfortunately only have a fixed amount of processing power. Future AI systems will have to include ways for the agent to think for itself, similar to how agents can leverage functions today, but internally, giving them the ability to apply an arbitrary amount of computation to a task. Roles are one way to approach this, but it would be more effective if each agent in these simulated virtual organizations were able to individually apply arbitrary amounts of computation to its responses. A system where each agent could learn from its mistakes, as humans do, is also required to really escape the cognitive limitations of the underlying language model. Without these capabilities, which the AI community has long recognized as fundamental, we can’t reasonably expect these systems to be the foundation of an AGI.

Addressing Limitations and Envisioning Future Prospects:

Collaborative agent systems exhibit promising potential. However, they are still far from being truly general intelligence. Learning about these limitations can give clues to possible solutions that can pave the way for more sophisticated and capable systems.

One limitation of BabyAGI in particular lies in its lack of active perception. The Task Executor in BabyAGI nearly always assumes that the system is in the perfect state to accomplish the task, or that the previous task was completed successfully. Since the world is not perfect, it often fails to achieve the task. BabyAGI is not alone in this problem. The lack of perception greatly affects the practicality and efficacy of these systems for real-world tasks.

Error recovery mechanisms in these systems also need improvement. While a tool-enabled version of BabyAGI does often generate error-fixing tasks, the Task Prioritizer’s ordering may not always be optimal, causing the executor to miss the chance to easily fix the issue. Advanced prioritization algorithms that take into account error severity and its impact on goal attainment are being worked on. The latest versions of BabyAGI have task dependency tracking, which does help, but I don’t believe we have fully fixed this issue yet.
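
One simple way to fold error severity into the ordering is a priority queue keyed on severity first and importance second. The sketch below is only an assumption about how such a scheme might look: the `importance` and `error_severity` scores, their scales, and the helper names are hypothetical and are not taken from BabyAGI.

```python
import heapq
import itertools

_counter = itertools.count()  # tie-breaker keeps insertion order stable


def push_task(queue, name, importance, error_severity=0):
    """Add a task; error_severity 0 means the task is not an error fix."""
    # heapq is a min-heap, so negate the scores to pop the most urgent task first.
    heapq.heappush(queue, (-error_severity, -importance, next(_counter), name))


def pop_task(queue):
    """Remove and return the name of the most urgent task."""
    return heapq.heappop(queue)[-1]


queue = []
push_task(queue, "write unit tests", importance=3)
push_task(queue, "implement parser", importance=5)
push_task(queue, "fix ImportError in main.py", importance=2, error_severity=4)

order = [pop_task(queue) for _ in range(3)]
print(order)
```

Here the error fix jumps ahead of more “important” work, so the executor gets the chance to repair the environment before building on top of a broken state.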

Task completion is another challenge in collaborative agent systems like BabyAGI. A robust review mechanism that assesses the state of task completion and adjusts the task list accordingly could address the issue of endless task generation, enhancing the overall efficiency of the system. Since MetaGPT has managers that check the results of the individual contributors, it is more likely to detect that the task has been completed, although this way of working is quite inefficient.
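
A review step of this kind can be sketched as a check that runs after every task and stops the loop once the goal looks satisfied. In this minimal sketch, `execute` and `is_goal_met` are hypothetical stand-ins for what would be language model calls in a real system, and the keyword test is a deliberately crude placeholder for an actual review prompt.

```python
def run_with_review(tasks, execute, is_goal_met, max_steps=20):
    """Execute tasks in order, asking a reviewer after each one whether to stop."""
    results = []
    for step, task in enumerate(tasks):
        if step >= max_steps:
            break
        results.append(execute(task))
        if is_goal_met(results):
            break  # drop the remaining tasks instead of working on them
    return results


# Hypothetical stand-ins: a real system would call a language model in both places.
def execute(task):
    return f"done: {task}"


def is_goal_met(results):
    return any("publish" in result for result in results)


tasks = ["draft post", "publish post", "brainstorm more ideas", "draft another post"]
results = run_with_review(tasks, execute, is_goal_met)
print(results)
```

The two trailing tasks are never executed, which is the behavior missing from a loop that keeps generating work after the goal has been reached.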

Parallel execution of independent tasks offers another potential area of improvement. Leveraging multi-threading or distributed computing techniques could lead to significant speedups and more efficient resource utilization. BabyDeerAGI specifically uses dependency tracking to create independent threads of executors, while MetaGPT uses the company structure to perform work in parallel. Both are interesting approaches to the problem and, perhaps, the two approaches could be combined.
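
Dependency-aware parallelism can be illustrated with a thread pool that runs every task whose prerequisites are finished, one wave at a time. This is a minimal sketch under my own assumptions, not BabyDeerAGI’s or MetaGPT’s implementation: the task names, the `dependencies` mapping, and `run_in_waves` are hypothetical, and `execute` stands in for a model or tool call.

```python
from concurrent.futures import ThreadPoolExecutor


def run_in_waves(tasks, dependencies, execute, max_workers=4):
    """Run tasks in parallel waves; a task starts only once its dependencies are done."""
    done = {}    # task name -> result
    waves = []   # record of which tasks ran together
    remaining = list(tasks)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while remaining:
            ready = [t for t in remaining
                     if all(dep in done for dep in dependencies.get(t, []))]
            if not ready:
                raise ValueError("circular or unsatisfiable dependencies")
            # pool.map runs the ready tasks concurrently and yields results in input order.
            for task, result in zip(ready, pool.map(execute, ready)):
                done[task] = result
            waves.append(ready)
            remaining = [t for t in remaining if t not in done]
    return done, waves


def execute(task):
    return f"done: {task}"


tasks = ["fetch data", "fetch docs", "analyze", "report"]
dependencies = {"analyze": ["fetch data"], "report": ["analyze", "fetch docs"]}
done, waves = run_in_waves(tasks, dependencies, execute)
print(waves)
```

The two fetch tasks share a wave because neither depends on the other, while `analyze` and `report` wait for their inputs. Threads suit this case because the executors would spend most of their time waiting on network calls.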

The inability to learn from experience is another fundamental limitation. As far as I know, none of the current systems utilize fine-tuning of LLMs to form long-term memories. In theory, it isn’t a complicated process, but in practice, gathering the necessary data in a way that doesn’t fundamentally make the model worse is an open problem. Training models on model-generated outputs or on already encountered data seems to cause them to overfit quickly, often requiring careful hand-tuning of the training hyper-parameters. To make agents that can learn from experience, a sophisticated algorithm is required, not just to perform the training, but also to gather the correct data. This process is probably similar to what the limbic system does in our brains.

While the current crop of agent systems has various limitations, there are still many open opportunities to address them with software and structure to create even more advanced applications. Enhancing active task execution, improving error recovery mechanisms, implementing efficient review mechanisms, and exploring parallel execution capabilities can boost the overall performance of these systems.

Conclusion:

The emergence of open-source collaborative agent systems is creating a transformative era in AI. We are very close to a world where humans and AI can collaborate to solve the world’s problems. Just as companies, or a market formed by many independent rational actors, can form a superhuman intelligence, collaborative agent systems made up of many independent sub-agents that communicate, collaborate, and reason together seem to accomplish more than the language model can alone, paving the way for more versatile applications.

Looking ahead, I think AI powered by collaborative agent systems has the potential to revolutionize industries such as healthcare, finance, education, and more. However, we must not forget the important sentence from an IBM manual: “A computer can never be held accountable”. In a future where we have human-level AIs that we can work hand-in-hand with to tackle complex problems, it becomes increasingly important to ensure accountability measures are in place. The responsibility and accountability for their actions still ultimately lie with the humans who design, deploy, and use them.

This journey towards AGI is thrilling, and collaborative agent systems play an integral role in this transformative era of artificial intelligence.