The team at Three.ie recognized that customers were having difficulty finding answers to basic questions on our website. To improve the user experience, we decided to use AI to build a chatbot. Building it posed several challenges, such as managing the growing context of each chat session and maintaining high-quality data. This article details our journey from concept to implementation and how we tackled these challenges. Anyone interested in AI, data management, and customer experience improvements should find valuable insights here.

While the chatbot project is still in progress, this article outlines the steps taken and key takeaways from the journey thus far. Stay tuned for subsequent installments and the project’s resolution.

Stay up to date

Learn more about MLCON

Identifying the Problem

Hi there, I’m a Senior PHP Developer at Three.ie, a company in the telecom industry. Today, I’d like to address a problem our customers face: locating answers to basic questions on our website. Information such as how to read a bill or how to top up is available, but it isn’t easy to find because it’s tucked away within our forums.

Community Page {.caption}

The AI Solution

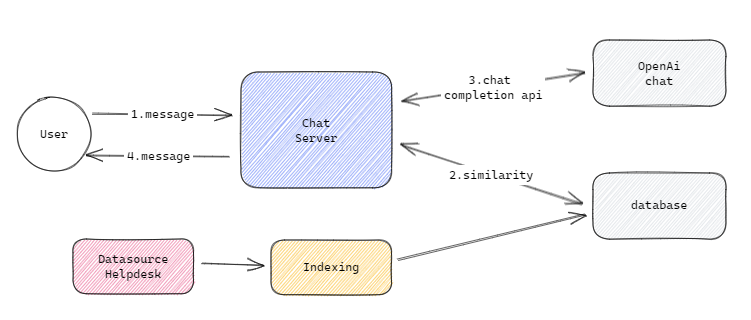

The rise of AI chatbots and the impressive capabilities of GPT-3 presented us with an opportunity to tackle this issue head-on. The idea was simple: why not leverage AI to create a more user-friendly way for customers to find the information they need? Our tool of choice for this task was OpenAI’s API, which we planned to integrate into a chat interface.

To make this chatbot truly useful, it needed access to the right data, and that’s where Pinecone came in. We generated embeddings with the OpenAI API and stored them in this vector database, which gave our chatbot an efficient search system.

This laid the groundwork for our proof of concept: a simple yet effective solution for a problem faced by many businesses. Let’s dive deeper into how we brought this concept to life.

{.figure}

First POC {.caption}

Challenges and AI’s Role

With our proof of concept in place, the next step was to ensure the chatbot was interacting with the right data and providing the most accurate search results possible. While Pinecone served as an excellent solution for storing data and enabling efficient search during the early stages, we realized that in the long term it might not be the most cost-effective choice for a full-fledged product.

Pinecone is easy to integrate and straightforward to use, but the free tier only allows a single pod with a single project. We needed several small indexes, separated by product. The starting plan costs around $70/month/pod. Keeping the project within budget was a priority, and we knew that continuing with Pinecone would soon become difficult, since we wanted to split our data.

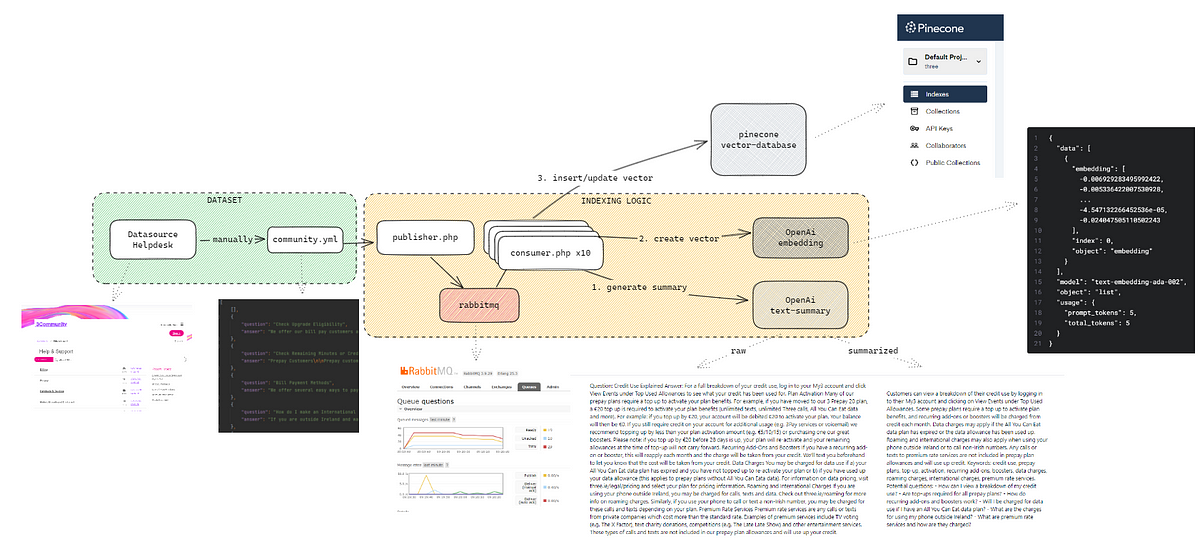

The initial data used in the chatbot was extracted directly from our website and stored in separate files. This setup allowed us to create embeddings and feed them to our chatbot. To streamline this process, we developed a ‘data import’ script. The script takes a file, adds it to the database, creates an embedding from the content, and finally stores the embedding in Pinecone, using the database ID as a reference.
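The import steps described above could be sketched roughly as follows. This is only an illustration, not our actual script: `ImportStore`, `import_file`, and `toy_embed` are hypothetical names, the dictionaries stand in for the real database and for Pinecone, and the embedding function is a placeholder for a call to an embeddings API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ImportStore:
    """In-memory stand-ins for the relational database and the vector index."""
    documents: Dict[int, str] = field(default_factory=dict)
    vectors: Dict[int, List[float]] = field(default_factory=dict)
    _next_id: int = 1

    def add_document(self, content: str) -> int:
        # Save the raw content and hand back its database ID.
        doc_id = self._next_id
        self.documents[doc_id] = content
        self._next_id += 1
        return doc_id

    def upsert_vector(self, doc_id: int, vector: List[float]) -> None:
        # Store the embedding under the same ID as the database row.
        self.vectors[doc_id] = vector


def import_file(content: str, store: ImportStore,
                embed: Callable[[str], List[float]]) -> int:
    """Add the content to the database, embed it, and store the vector."""
    doc_id = store.add_document(content)
    vector = embed(content)
    store.upsert_vector(doc_id, vector)
    return doc_id


def toy_embed(text: str) -> List[float]:
    """Placeholder embedding (vowel counts). NOT a real model."""
    lowered = text.lower()
    return [float(lowered.count(ch)) for ch in "aeiou"]
```

Passing the embedding function in as a parameter keeps the sketch runnable offline; in a real setup it would be swapped for the API call, and the vector store for the hosted index.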

Unfortunately, we faced a hurdle with the structure and quality of our data. Some of the extracted data was not well-structured, which led to issues with the chatbot’s responses. To address this challenge, we once again turned to AI, this time to enhance our data quality. Employing the GPT-3.5 model, we optimized the content of each file before generating the vector. By doing so, we were able to harness the power of AI not only for answering customer queries but also for improving the quality of our data.

As the process grew more complex, the need for more efficient automation became evident. To reduce the time taken by the data import script, we incorporated queues and utilized parallel processing. This allowed us to manage the increasingly complex data import process more effectively and keep the system efficient.
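As a rough illustration of the queue-plus-workers idea, here is a minimal sketch using Python’s standard library. It is an assumption about the general shape only: our real setup is not shown in this article, and `run_import_queue` and its parameters are hypothetical names.

```python
import queue
import threading
from typing import Callable, Dict


def run_import_queue(files: Dict[str, str],
                     process: Callable[[str], object],
                     workers: int = 4) -> Dict[str, object]:
    """Put every file on a queue and let several worker threads drain it."""
    jobs: queue.Queue = queue.Queue()
    for name, content in files.items():
        jobs.put((name, content))

    results: Dict[str, object] = {}
    lock = threading.Lock()

    def worker() -> None:
        while True:
            try:
                name, content = jobs.get_nowait()
            except queue.Empty:
                return  # nothing left to do
            try:
                outcome = process(content)
            except Exception as exc:
                # Record the failure so one bad file does not stop the rest.
                outcome = exc
            with lock:
                results[name] = outcome

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results
```

Threads suit this kind of job because most of the time is spent waiting on network calls (clean-up and embedding requests) rather than on local computation.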

{.figure}

Data Ingress Flow {.caption}

Data Integration

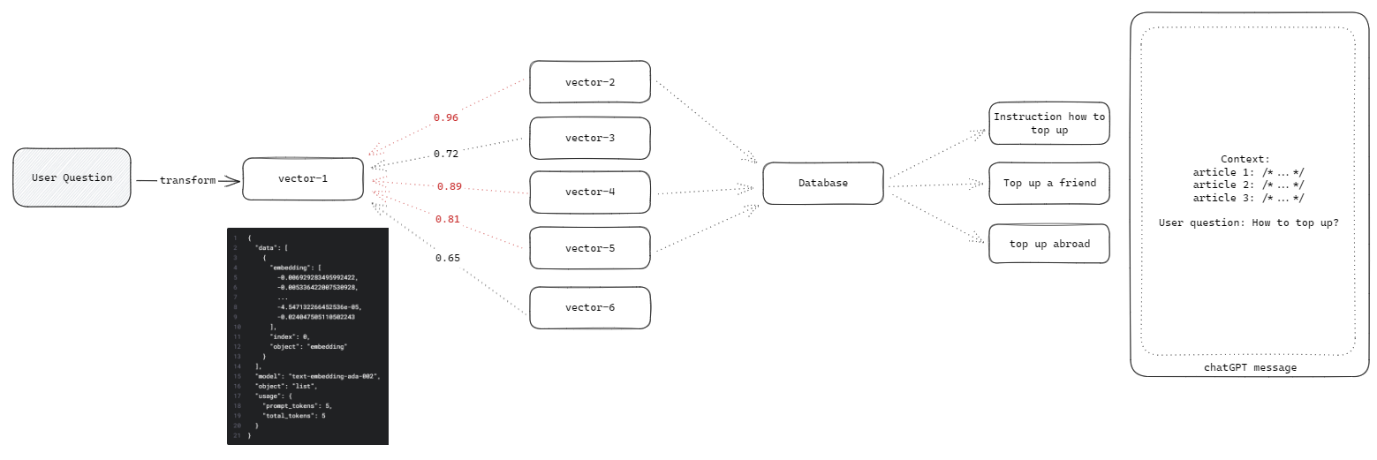

With our data stored and the API ready to handle chats, the next step was to bring everything together. The initial plan was to use Pinecone to retrieve the top three results matching the customer’s query. For instance, if a user inquired, “How can I top up by text message?”, we would generate an embedding for this question and then use Pinecone to fetch the three most relevant records. These matches were determined based on cosine similarity, ensuring the retrieved information was highly pertinent to the user’s query.

Cosine similarity is a key part of our search algorithm. Think of it like this: imagine each question and answer is a point in space. Cosine similarity measures how close these points are to each other. For example, if a user asks, “How do I top up my account?”, and we have a database entry that says, “Top up your account by going to Settings”, these two are closely related and would have a high cosine similarity score, close to 1. On the other hand, if the database entry says something about “changing profile picture”, the score would be low, closer to 0, indicating they’re not related.

This way, we can quickly find the best matches to a customer’s query, making the chatbot’s answers more relevant and useful.

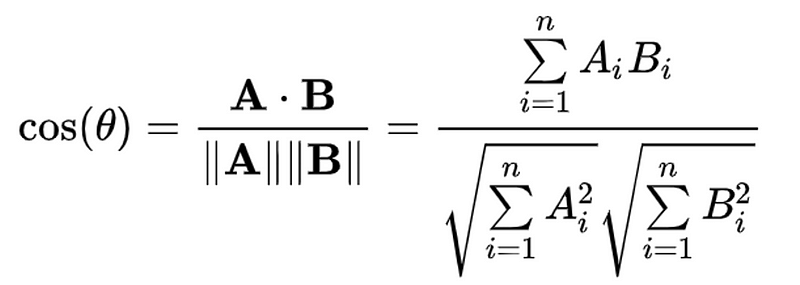

For those who understand a bit of math, this is how cosine similarity works. You represent each sentence as a vector in multi-dimensional space. The cosine similarity is calculated as the dot product of two vectors divided by the product of their magnitudes. Mathematically, it looks like this:

{.figure}

Cosine Similarity {.caption}

This formula gives us a value between -1 and 1. A value close to 1 means the sentences are very similar, a value close to 0 means they are unrelated, and a value close to -1 means the two vectors point in opposite directions.
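In plain notation, the calculation is cos(θ) = (A · B) / (‖A‖ ‖B‖). The sketch below implements that formula and a simple top-three lookup over a dictionary of vectors. It is a hand-rolled illustration with hypothetical function names; a vector database performs the equivalent search for you, and far more efficiently.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product of a and b divided by the product of their magnitudes."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as unrelated to everything
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float],
          vectors: Dict[int, Sequence[float]],
          k: int = 3) -> List[Tuple[int, float]]:
    """Return the k (id, score) pairs with the highest cosine similarity."""
    scored = [(doc_id, cosine_similarity(query, vec))
              for doc_id, vec in vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```

For example, `top_k(question_vector, stored_vectors)` returns the IDs of the three stored records closest to the question, which can then be looked up in the database.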

{.figure}

Simplified Workflow {.caption}

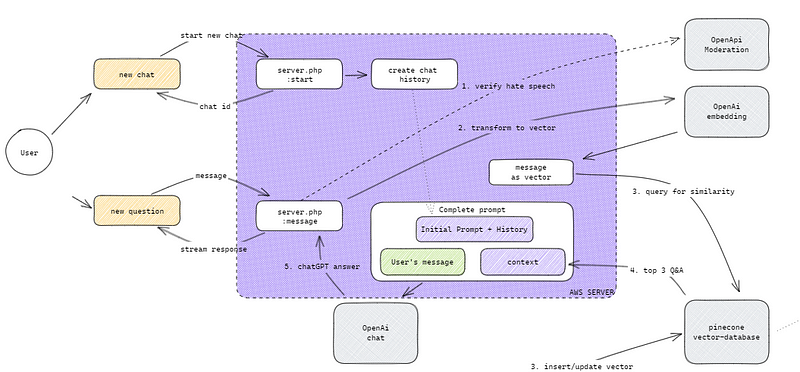

Next, we used these top three records as a context in the OpenAI chat API. We merged everything together: the chat history, Three’s base prompt instructions, the current question, and the top three contexts.
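A minimal sketch of that merge step is shown below. The `role`/`content` message shape follows the chat API’s message format; the function name, the prompt wording, and the way contexts are numbered are assumptions made for illustration.

```python
from typing import Dict, List

Message = Dict[str, str]


def build_messages(base_prompt: str, contexts: List[str],
                   history: List[Message], question: str) -> List[Message]:
    """Combine base instructions, retrieved contexts, history and question."""
    context_block = "\n\n".join(
        f"[{i}] {text}" for i, text in enumerate(contexts, start=1)
    )
    system = f"{base_prompt}\n\nUse only the following context:\n{context_block}"
    return [
        {"role": "system", "content": system},
        *history,  # earlier user and assistant turns, oldest first
        {"role": "user", "content": question},
    ]
```

The resulting list is what would be sent as the `messages` payload of a chat completion request.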

{.figure}

Vector Comparison Logic {.caption}

Initially, this approach worked very well and provided accurate and informative answers. However, there was a looming issue: we were using OpenAI’s first 4k model, and the entire context was sent with every request. Furthermore, the context was treated as “history” for the following message, meaning that each new message added the boilerplate text plus three more contexts. As you can imagine, this led to rapid growth of the context.

To manage this complexity, we decided to keep track of the context. We started storing each message from the user (along with the chatbot’s responses) and the selected contexts. As a result, each chat session now had two separate artifacts: messages and contexts. This ensured that if a user’s next message related to the same context, it wouldn’t be duplicated and we could keep track of what had been used before.
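The two-artifact idea can be sketched like this. It is a simplified, hypothetical model (the `ChatSession` class and its method names are not from our codebase) that keys contexts by their database ID so a record retrieved twice is only stored once.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ChatSession:
    """Keeps messages and contexts as two separate artifacts."""
    messages: List[Dict[str, str]] = field(default_factory=list)
    contexts: Dict[int, str] = field(default_factory=dict)

    def add_message(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})

    def add_contexts(self, retrieved: Dict[int, str]) -> List[int]:
        """Store only contexts not seen before; return the newly added IDs."""
        new_ids = [doc_id for doc_id in retrieved if doc_id not in self.contexts]
        for doc_id in new_ids:
            self.contexts[doc_id] = retrieved[doc_id]
        return new_ids
```

If a follow-up question retrieves records 2, 3 and 4 after records 1, 2 and 3 were already used, only record 4 is added to the session.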

Progress so Far

To put it simply, our system starts with manually entered questions and answers (Q&A), which are then enhanced by our AI. To handle data efficiently, we use queues to process imports quickly. In the chat, when a user asks a question, we add a “context group” that includes all the data we got from Pinecone. To keep the context manageable, older messages are removed from longer chats.
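One simple way to drop older messages is to keep only the most recent ones that fit a token budget. The sketch below is an assumption about how such trimming could work, not a description of our exact rule; the four-characters-per-token estimate is a rough heuristic, and a real tokenizer would be more accurate.

```python
from typing import Dict, List


def estimate_tokens(text: str) -> int:
    """Very rough estimate (about four characters per token); an assumption."""
    return max(1, len(text) // 4)


def trim_history(messages: List[Dict[str, str]],
                 budget: int) -> List[Dict[str, str]]:
    """Keep the newest messages whose combined estimate fits the budget."""
    kept: List[Dict[str, str]] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

Walking backwards from the newest message means the most recent exchange is always the last thing to be dropped.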

{.figure}

Chat Workflow {.caption}

Automating Data Collection

Acknowledging the manual input as a bottleneck, we set out to streamline the process through automation. I started by trying out scrapers written in different languages, such as PHP and Python. However, to be honest, none of them were good enough, and we faced issues with both speed and accuracy. While this component of the system is still in its formative stages, we’re committed to overcoming this challenge. We are currently evaluating an external service to manage this task and simplify the overall process.

While working towards data automation, I dedicated my efforts to improving our existing system. I developed a backend admin page, replacing the manual data input process with a streamlined interface. This admin panel provides additional control over the chatbot, enabling adjustments to parameters like the ‘temperature’ setting and initial prompt, further optimizing the customer experience. So, although we have challenges ahead, we’re making improvements every step of the way.

A Week of Intense Progress

The week was a whirlwind of AI-fueled excitement, and we eagerly jumped in. After sending an email to my department, the feedback came flooding in. Our team was truly collaborative: a skilled designer supplied Figma templates and a copywriter crafted the app’s text. We even had volunteers who stress-tested our tool with unconventional prompts. It felt like everything was coming together quickly.

However, this initial enthusiasm came to a screeching halt when security concerns became the new focus. A recent data breach at OpenAI, unrelated to our project, shifted our priorities. Though frustrating, it necessitated a comprehensive security check of all projects, which temporarily halted our progress.

The breach occurred during a nine-hour window on March 20, between 1 a.m. and 10 a.m. Pacific Time. OpenAI confirmed that around 1.2% of the ChatGPT Plus subscribers active during this period may have had their data exposed. OpenAI used Redis to cache user information so that it didn’t need to query the main database for every request, and the Redis client library (redis-py) maintained a pool of connections between their Python server and Redis. A bug in that library became the point of vulnerability.

In the end, it’s good to put security at the forefront and not treat it as an afterthought, especially in the wake of a data breach. While the delay is frustrating, we all agree that making sure our project is secure is worth the wait. Now, our primary focus is to meet all security guidelines before progressing further.

The Move to Microsoft Azure

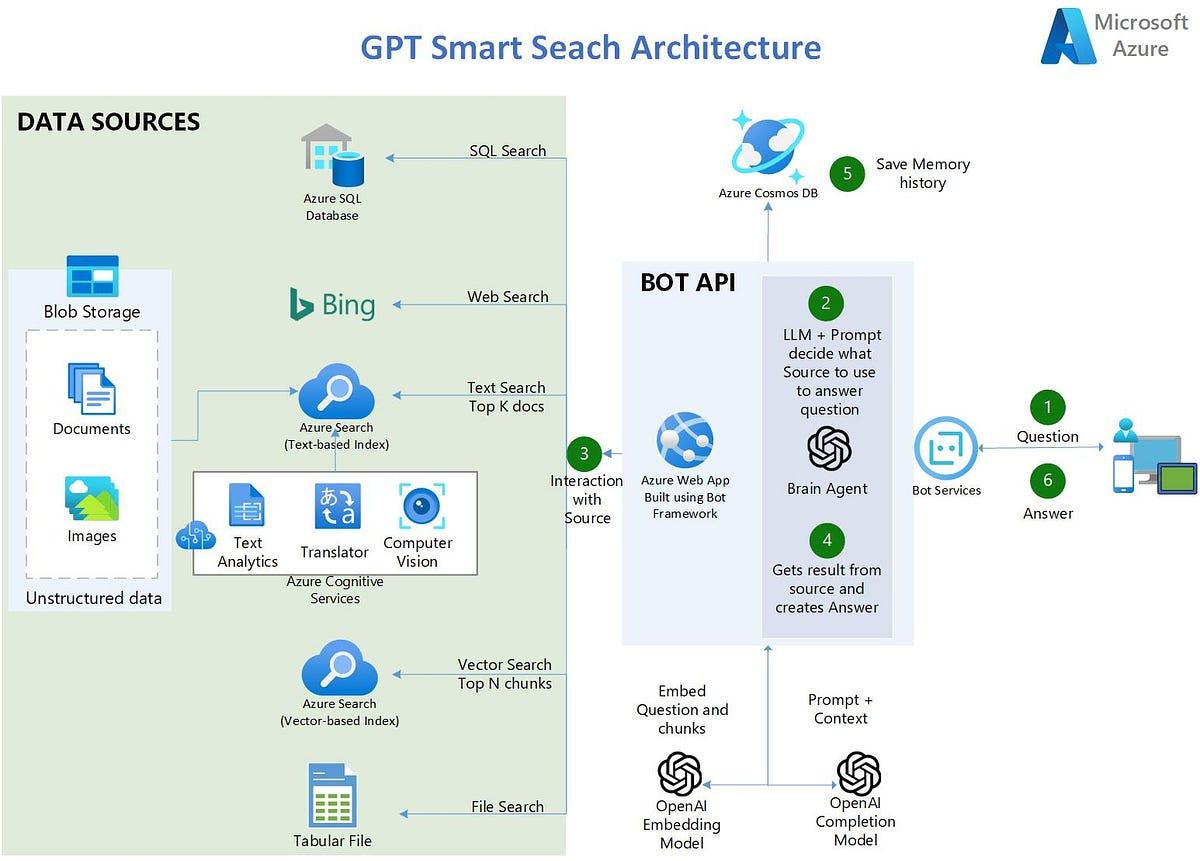

In just one week, the board made a big decision to move from OpenAI and Pinecone to Microsoft Azure. At first glance, it looks like a smart choice, as Azure is known for solid security, but it is far from plug-and-play.

What stood out in Azure was having our own dedicated GPT-3.5 Turbo model. Unlike OpenAI, where the general GPT-3.5 model is shared, Azure gives you a model exclusive to your company. You can train it, fine-tune it, all in a very secure environment, a big plus for us.

The hard part? Setting up the data storage was not an easy feat. Everything in Azure is different from what we were used to. So, we are now investing time to understand these new services, a learning curve we’re currently climbing.

Azure Cognitive Search

In our move to Microsoft Azure, security was a key focus. We looked into using Azure Cognitive Search for our data management. Azure offers advanced security features like end-to-end encryption and multi-factor authentication. This aligns well with our company’s heightened focus on safeguarding customer data.

The idea was simple: you upload your data into Azure, create an index, and then you can search it just like a database. You define “fields” for indexing, and Azure Cognitive Search organizes the data for quick searching. In practice, setting it up wasn’t easy because creating the indexes was more complex than we expected, so we didn’t end up using it in our project. It’s a powerful tool, but difficult to implement. This was the idea:

{.figure}

Azure Structure {.caption}

The Long Road of Discovery

So, what did we really learn from this whole experience? First, improving the customer journey isn’t a walk in the park; it’s a full-on challenge. AI brings a lot of potential to the table, but it’s not a magic fix. We’re still deep in the process of getting this application ready for the public.

One of the most crucial points for me has been the importance of clear objectives. Knowing exactly what you aim to achieve can steer the project in the right direction from the start. Don’t wait around — get a proof of concept (POC) out as fast as you can. Test the raw idea before diving into complexities.

Also, don’t try to solve issues that haven’t cropped up yet. This is something we learned the hard way. Transitioning to Azure seemed like a move towards a more robust infrastructure, but it ended up complicating things and setting us back significantly. The added layers of complexity postponed our timeline for future releases. Sometimes, ‘better’ solutions can end up being obstacles if they divert you from your main goal.

In summary, this project has been a rollercoaster of both challenges and valuable lessons learned. We’re optimistic about the future, but caution has become our new mantra. We’ve come to understand that a straightforward approach is often the most effective, and introducing unnecessary complexities can lead to unforeseen problems. With these lessons in hand, we are in the process of recalibrating our strategies and setting our sights on the next development phase.

Although we have encountered setbacks, particularly in the area of security, these experiences have better equipped us for the journey ahead. The ultimate goal remains unchanged: to provide an exceptional experience for our customers. We are fully committed to achieving this goal, one carefully considered step at a time.

Stay tuned for further updates as we continue to make progress. This project is far from complete, and we are excited to share the next chapters of our story with you.