OpenSearch brings users the same enterprise-grade core features and advanced add-ons as its predecessor. Key benefits include a horizontally scalable distributed architecture that can handle thousands of nodes and petabytes of data, high availability, fast and powerful text search, and analytics with faceting and aggregations. OpenSearch also has a rich ecosystem, with clients for languages such as Python, Node.js, and Java, and support for data shippers such as Logstash, Beats, and Fluentd.

Migrating from Elasticsearch to OpenSearch lets you keep the capabilities your organization already relies on, while protecting your technology against future lock-in and the limitations of a proprietary license that is no longer truly open source. It also positions your organization to take advantage of new features introduced by the open source community as the technology evolves.

To successfully migrate from Elasticsearch to OpenSearch, on cloud or on-prem systems or through a managed platform, follow these eight steps:

Make sure you’re running Elasticsearch 7.10.2, and upgrade if necessary

Enterprises should be running Elasticsearch 7.10.2 for maximum compatibility before migrating to OpenSearch. Upgrade to client libraries compatible with Elasticsearch 7.10.2, and use the OpenSearch versions of libraries when available (all of which also work with Elasticsearch clusters). If the existing cluster is on a newer version than 7.12.0, downgrade to 7.10.2 via reindex. Also watch for breaking changes, or the need to reindex (between v5.6 and v6.8), when upgrading between Elasticsearch versions.
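As a rough illustration of the version check described above, the following sketch compares a cluster's reported version with 7.10.2. It assumes the version string is read from the `version.number` field of the cluster's root endpoint response; the function names are hypothetical, and what to do with a "newer" result is left to the guidance in the paragraph above.

```python
import re

TARGET = (7, 10, 2)  # the version the article recommends migrating from


def parse_version(text):
    """Turn '7.10.2' or '7.10.2-SNAPSHOT' into the tuple (7, 10, 2)."""
    match = re.match(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    return tuple(int(part) for part in match.groups())


def migration_action(version):
    """Return 'ready', 'upgrade', or 'newer' relative to 7.10.2."""
    current = parse_version(version)
    if current == TARGET:
        return "ready"
    # Tuples compare element by element, so (7, 9, 3) < (7, 10, 2).
    return "upgrade" if current < TARGET else "newer"
```

Comparing integer tuples rather than raw strings matters here: as strings, "7.9.3" would sort after "7.10.2".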

Build a migration testing environment

Create an Elasticsearch test cluster that emulates your production environment as closely as possible. Run the same Elasticsearch version client libraries, and any other data shippers such as Logstash or Fluentd. Benchmark the test environment’s search and indexing performance based on realistic data. Next, create a test OpenSearch cluster with an equivalent number and types of nodes, for a fair and simple comparison.
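One possible way to collect comparable numbers from both test clusters is a small timing harness like the sketch below. It is not tied to any client library: `operation` is a hypothetical callable that you would replace with a real search or indexing request, and the choice of statistics is an assumption rather than a prescribed benchmark method.

```python
import statistics
import time


def benchmark(operation, runs=20):
    """Call `operation` `runs` times and summarize wall-clock latency in ms."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    # Nearest-rank 95th percentile, computed with integer arithmetic.
    rank = -(-95 * len(samples) // 100)  # ceil(0.95 * n)
    return {
        "runs": len(samples),
        "mean_ms": statistics.mean(samples),
        "median_ms": statistics.median(samples),
        "p95_ms": samples[rank - 1],
        "max_ms": samples[-1],
    }
```

Running the same callable against the Elasticsearch and OpenSearch test clusters with identical data gives two summaries that can be compared side by side.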

Check your tool and client libraries for OpenSearch compatibility

It’s crucial to verify the interoperability of all tools and libraries prior to upgrading. For example, recent builds of tools such as Logstash include version checks that make them incompatible with OpenSearch. The community is rapidly developing open source versions of popular tools and clients for use with OpenSearch, and many are already available and production-ready, but it still pays to implement a deliberate compatibility strategy.

The tenets of such a strategy: first, use clients and tools provided by OpenSearch whenever possible. Where OpenSearch-specific options aren’t available, use tool or client versions compatible with Elasticsearch OSS 7.10.2. As a last alternative, use the OpenSearch compatibility setting to override version issues, using either the opensearch.yml file or cluster-wide settings like this:

In opensearch.yml (changes to this file require restarting the OpenSearch cluster):

compatibility.override_main_response_version: true

In the cluster settings:

PUT _cluster/settings
{
  "persistent": {
    "compatibility": {
      "override_main_response_version": true
    }
  }
}

Compatibility verification example: Filebeat

Here we’ll check that the Filebeat module we have running on an Apache HTTP server is compatible with our OpenSearch cluster. First, we’ll point the Filebeat configuration to the OpenSearch endpoint:

# ---------------------------- Elasticsearch Output ----------------------------
output.elasticsearch:
  # Array of hosts to connect to.
  hosts: ["search.cxxxxxxxxxxx.cnodes.io:9200"]
  # Protocol - either `http` (default) or `https`.
  protocol: "https"
  # Authentication credentials - either API key or username/password.
  #api_key: "id:api_key"
  username: "icopensearch"
  password: "***************************"

Make sure that the OpenSearch cluster can receive logs. Unfortunately, in this example the non-OSS version of Filebeat cannot connect to OpenSearch and fails to send logs to the cluster, because its compatibility check fails:

2021-11-30T23:28:32.514Z ERROR [publisher_pipeline_output] pipeline/output.go:154 Failed to connect to backoff(elasticsearch(https://search.xxxxxxxxxxxxxxxxxxxxxx.cnodes.io:9200)): Connection marked as failed because the onConnect callback failed: could not connect to a compatible version of Elasticsearch: 400 Bad Request: {"error":{"root_cause":[{"type":"invalid_index_name_exception","reason":"Invalid index name [_license], must not start with '_'.","index":"_license","index_uuid":"_na_"}],"type":"invalid_index_name_exception","reason":"Invalid index name [_license], must not start with '_'.","index":"_license","index_uuid":"_na_"},"status":400}

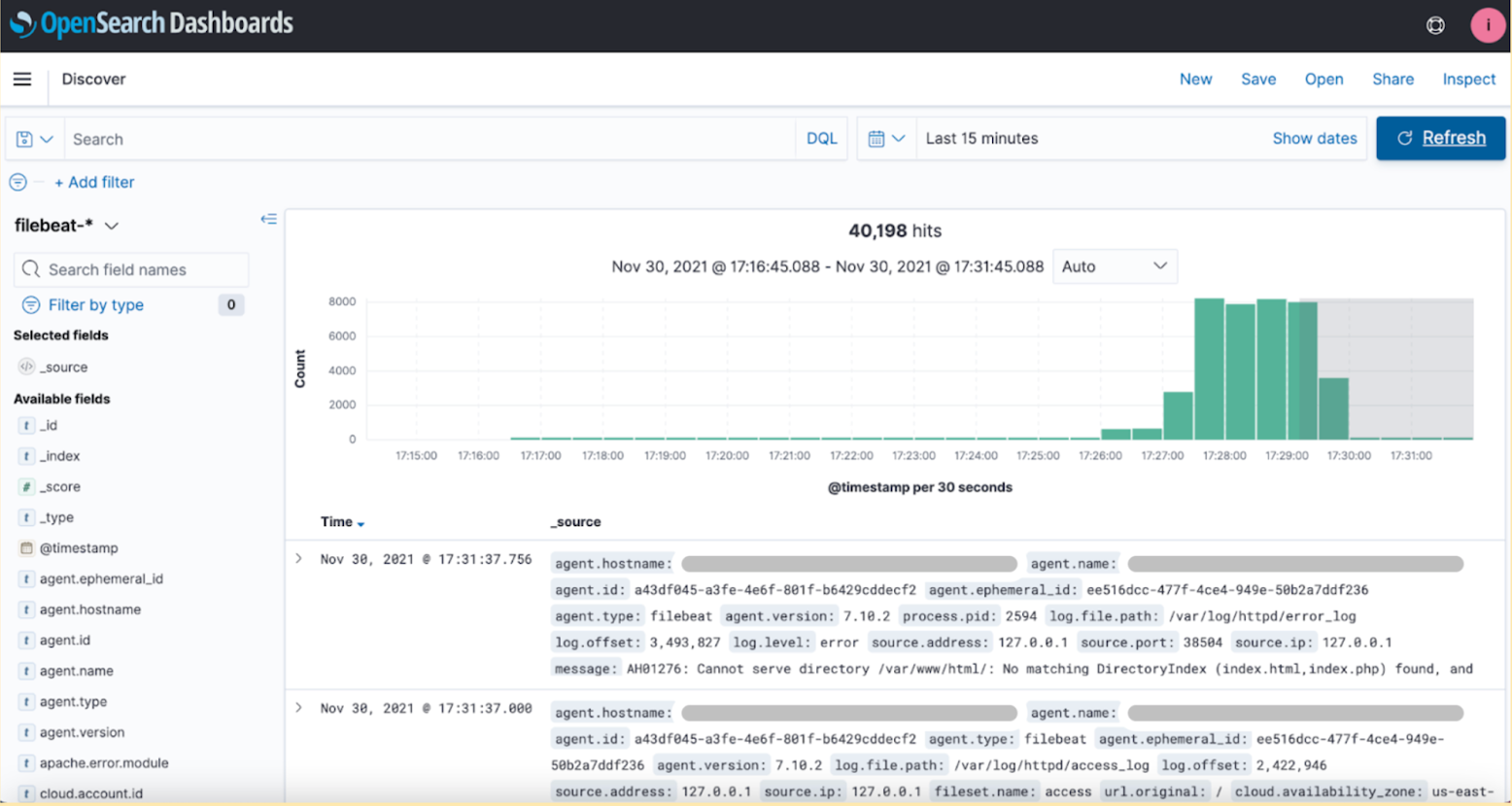

Replacing non-OSS Filebeat with the open source version 7.10.2 solves these compatibility issues. You can verify that you are receiving the Filebeat logs by checking for documents with agent.type: filebeat.
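The `agent.type: filebeat` check can also be scripted. The sketch below only builds a search body and interprets a response, so it works with whichever HTTP client you use. It assumes `agent.type` is mapped as a keyword (as in the default Filebeat template) and uses a hypothetical 15-minute window on `@timestamp`; both function names are illustrative.

```python
def filebeat_check_query(agent_type="filebeat", window="now-15m"):
    """Body for a search that counts recent documents from one agent type."""
    return {
        "size": 0,  # only the hit count is needed, not the documents
        "track_total_hits": True,
        "query": {
            "bool": {
                "filter": [
                    {"term": {"agent.type": agent_type}},
                    {"range": {"@timestamp": {"gte": window}}},
                ]
            }
        },
    }


def documents_received(search_response):
    """True when the search response reports at least one matching hit."""
    total = search_response["hits"]["total"]
    # 7.x-style responses report the total as {"value": N, "relation": ...}.
    count = total["value"] if isinstance(total, dict) else total
    return count > 0
```

Send the body to the `_search` endpoint of the index that Filebeat writes to and pass the parsed JSON response to `documents_received`.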

In addition to ensuring that all tools and clients function with OpenSearch, monitor tools and clients to see that performance in the OpenSearch cluster is similar to Elasticsearch.

Back up your data

Before going ahead with the bulk of the migration, be sure to back up all important data. While the migration to OpenSearch shouldn’t cause data loss, it never hurts to play it safe. Backups are especially crucial when performing rolling, restart, or other in-place upgrades. With Elasticsearch snapshots, you can back up your data to a filesystem repository or to cloud repositories such as S3, GCS, or Microsoft Azure.
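As a sketch of what a filesystem snapshot involves, the helpers below build the two snapshot API requests: one to register a repository and one to take a snapshot. The repository name, snapshot name, and location are hypothetical placeholders, and the functions only return the method, path, and body rather than sending anything. For a filesystem repository, the location also has to be listed under `path.repo` on every node.

```python
def register_fs_repository(repository, location):
    """Request that registers a shared-filesystem snapshot repository."""
    body = {"type": "fs", "settings": {"location": location}}
    return ("PUT", f"_snapshot/{repository}", body)


def create_snapshot(repository, snapshot, indices):
    """Request that snapshots the named indices and waits until it finishes."""
    body = {
        "indices": ",".join(indices),
        "ignore_unavailable": True,
        # Cluster-wide state is left out; only the listed indices are saved.
        "include_global_state": False,
    }
    path = f"_snapshot/{repository}/{snapshot}?wait_for_completion=true"
    return ("PUT", path, body)
```

For example, `create_snapshot("migration_backup", "pre_migration_1", ["sample_http_responses"])` yields a request that can be pasted into a dev console or sent with any HTTP client.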

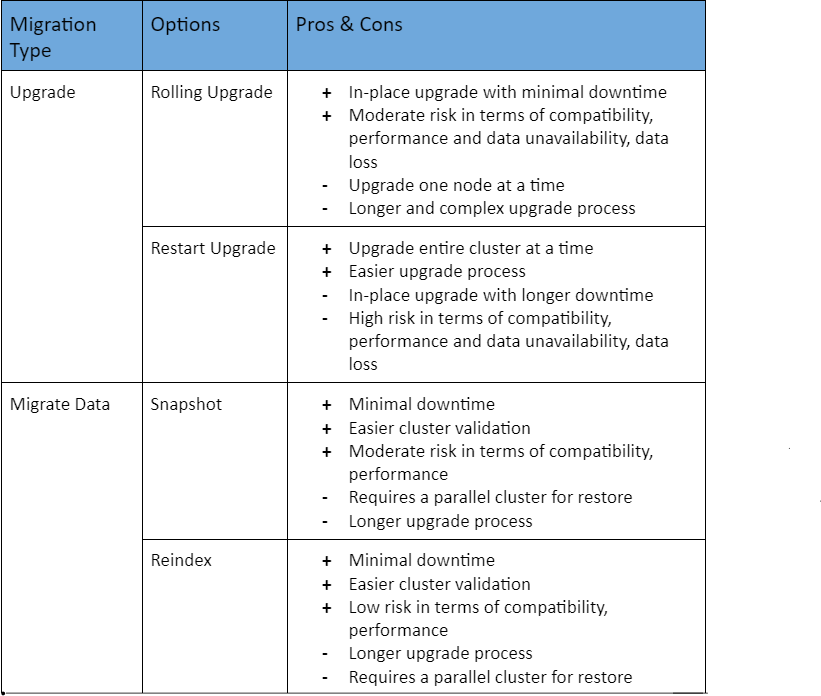

Migrate data

Migrating from Elasticsearch to OpenSearch can be done in several ways, which vary in ease of migration, required downtime, and level of compatibility.

Migrating with reindex provides the highest level of compatibility, so the rest of this document focuses on reindex migration.

Migrate data via reindex

To begin, identify all indices you’ll migrate to OpenSearch (don’t migrate system indices). Then copy all needed index mappings, settings, and templates, and apply them to your OpenSearch cluster. Make as few changes as possible in the interest of a seamless migration.
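The selection and copying steps above can be sketched as two small helpers. The first filters out system indices by their conventional leading dot; the second turns one entry of a `GET <index>` response into a create-index body. The set of cluster-generated settings that gets stripped is an assumption based on typical 7.x responses, so check it against your own output.

```python
def user_indices(index_names):
    """Drop system indices, which conventionally start with a dot."""
    return sorted(name for name in index_names if not name.startswith("."))


# Settings the source cluster generates itself; they cannot be supplied
# when creating an index (assumed list, verify against your cluster).
GENERATED_SETTINGS = {"uuid", "creation_date", "provided_name", "version"}


def portable_index_body(index_definition):
    """Build a create-index body from one entry of a `GET <index>` response."""
    index_settings = index_definition.get("settings", {}).get("index", {})
    kept = {
        key: value
        for key, value in index_settings.items()
        if key not in GENERATED_SETTINGS
    }
    return {
        "mappings": index_definition.get("mappings", {}),
        "settings": {"index": kept},
    }
```

Keeping the mappings untouched and removing only generated settings follows the advice to make as few changes as possible.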

For example, the following request creates the index sample_http_responses in OpenSearch with the mappings and settings copied from the source cluster:

PUT sample_http_responses
{
  "mappings": {
    "properties": {
      "@timestamp": {"type": "date"},
      "http_1xx": {"type": "long"},
      "http_2xx": {"type": "long"},
      "http_3xx": {"type": "long"},
      "http_4xx": {"type": "long"},
      "http_5xx": {"type": "long"},
      "status_code": {"type": "long"}
    }
  },
  "settings": {
    "index": {
      "number_of_shards": "3",
      "number_of_replicas": "1"
    }
  }
}

Prior to reindexing, you’ll ideally want to stop any new indexing to the source index. Not possible for your use case? Then perform incremental reindexing to handle newer documents, if you have a timestamp or incremental id available to facilitate that strategy.

You’ll also need to whitelist your remote cluster endpoint in OpenSearch’s settings before beginning a reindex. Edit the opensearch.yml file and add the remote endpoint to the whitelist setting as an <ip-addr>:<port> entry. You can list several such entries if you need to whitelist more than one endpoint.

reindex.remote.whitelist: "xxx.xxx.xxx.xxx:9200"

Next, submit the reindex request, specifying remote endpoint details such as SSL parameters and remote credentials. The following submits the reindexing operation as an async request, a useful technique since moving a lot of data can lengthen completion time.

To avoid overloading the remote cluster, it’s also possible to throttle the number of requests per second with the requests_per_second parameter. The value -1 used here disables throttling.

POST _reindex?wait_for_completion=false&requests_per_second=-1
{
  "source": {
    "remote": {
      "host": "http://xxx.xxx.xxx.xxx:9200",
      "socket_timeout": "2m",
      "connect_timeout": "60s"
    },
    "index": "sample_http_responses"
  },
  "dest": {
    "index": "sample_http_responses"
  }
}
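To show how throttling and incremental reindexing fit into the same request, here is a minimal sketch that builds the path and body for an asynchronous reindex from a remote cluster. The function name, the example rate, and the timestamp cutoff are hypothetical; the incremental pass assumes your documents carry a timestamp field you can filter on with a range query.

```python
from urllib.parse import urlencode


def reindex_request(remote_host, index, requests_per_second=-1,
                    timestamp_field=None, newer_than=None):
    """Return (path, body) for an asynchronous reindex-from-remote call."""
    params = {
        "wait_for_completion": "false",
        "requests_per_second": requests_per_second,  # -1 disables throttling
    }
    source = {"remote": {"host": remote_host}, "index": index}
    if timestamp_field and newer_than:
        # Incremental pass: copy only documents newer than the last run.
        source["query"] = {"range": {timestamp_field: {"gt": newer_than}}}
    body = {"source": source, "dest": {"index": index}}
    return "_reindex?" + urlencode(params), body
```

A first full pass might use the defaults, followed by one or more incremental passes with `timestamp_field="@timestamp"` and the time of the previous run as `newer_than`.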

The _tasks/<task-id> endpoint lets you check the reindex operation’s progress.

{
  "completed" : true,
  "task" : {
    "node" : "o-qCCzE-RZOK1_nDS3ItmA",
    "id" : 1858,
    "type" : "transport",
    "action" : "indices:data/write/reindex",
    "status" : {
      "total" : 1000,
      "updated" : 0,
      "created" : 1000,
      "deleted" : 0,
      "batches" : 1,
      "version_conflicts" : 0,
      "noops" : 0,
      "retries" : {
        "bulk" : 0,
        "search" : 0
      },
      "throttled_millis" : 0,
      "requests_per_second" : -1.0,
      "throttled_until_millis" : 0
    },
    "description" : """reindex from [host=xxx.xxx.xxx.xxx port=9200 query={ "match_all" : { "boost" : 1.0 }
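A task response like the one above can be reduced to a simple progress figure. The sketch below reads the `status` counters and reports how much of `total` has been handled. Counting created, updated, deleted, and no-op documents as "processed" is an assumption of this example, and the function name is hypothetical.

```python
def reindex_progress(task_response):
    """Summarize a reindex task response as processed/total and a percentage."""
    status = task_response["task"]["status"]
    processed = (
        status.get("created", 0)
        + status.get("updated", 0)
        + status.get("deleted", 0)
        + status.get("noops", 0)
    )
    total = status.get("total", 0)
    # total can be 0 before the first batch has been read from the source.
    percent = 0.0 if total == 0 else round(100.0 * processed / total, 1)
    return {
        "completed": task_response["completed"],
        "processed": processed,
        "total": total,
        "percent": percent,
    }
```

Polling the task endpoint at intervals and printing this summary gives a rough progress indicator for long-running reindex operations.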

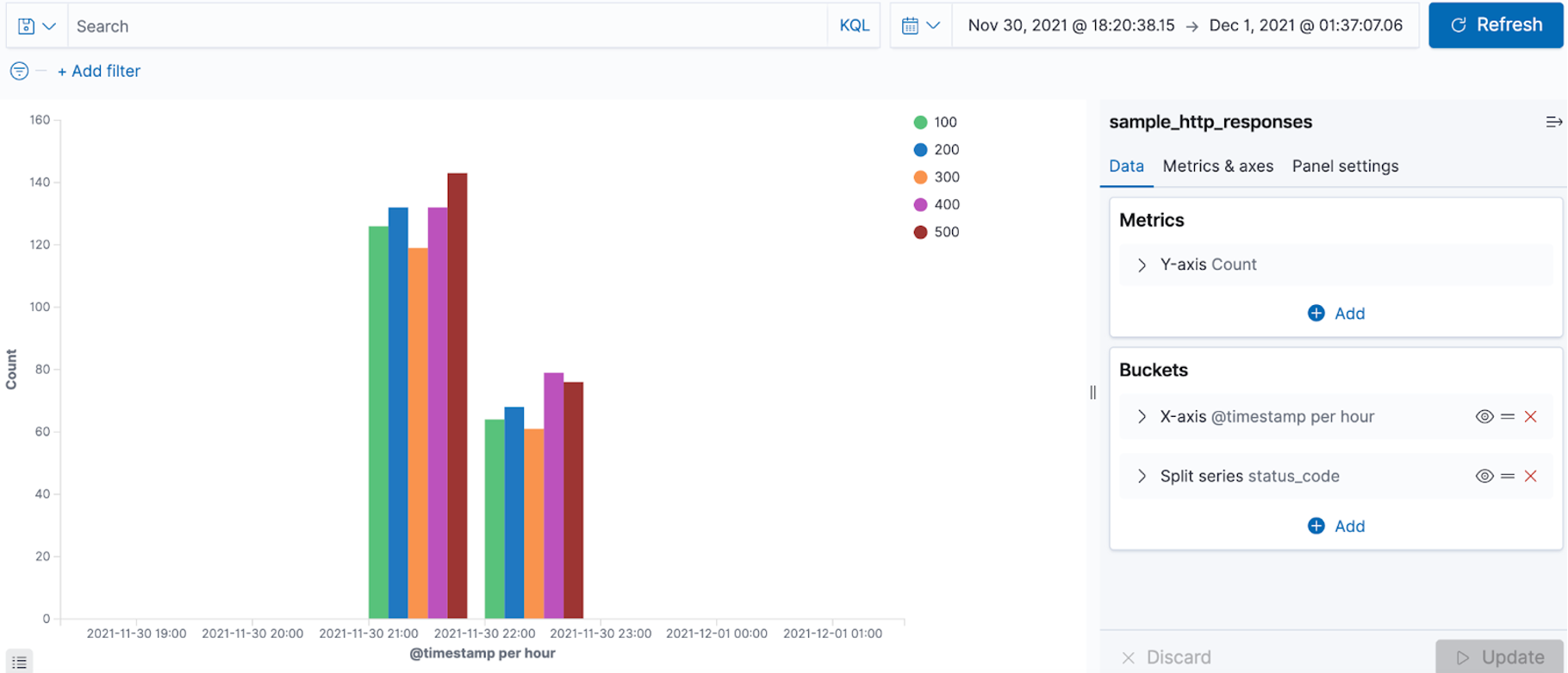

Migrate dashboards and visualizations

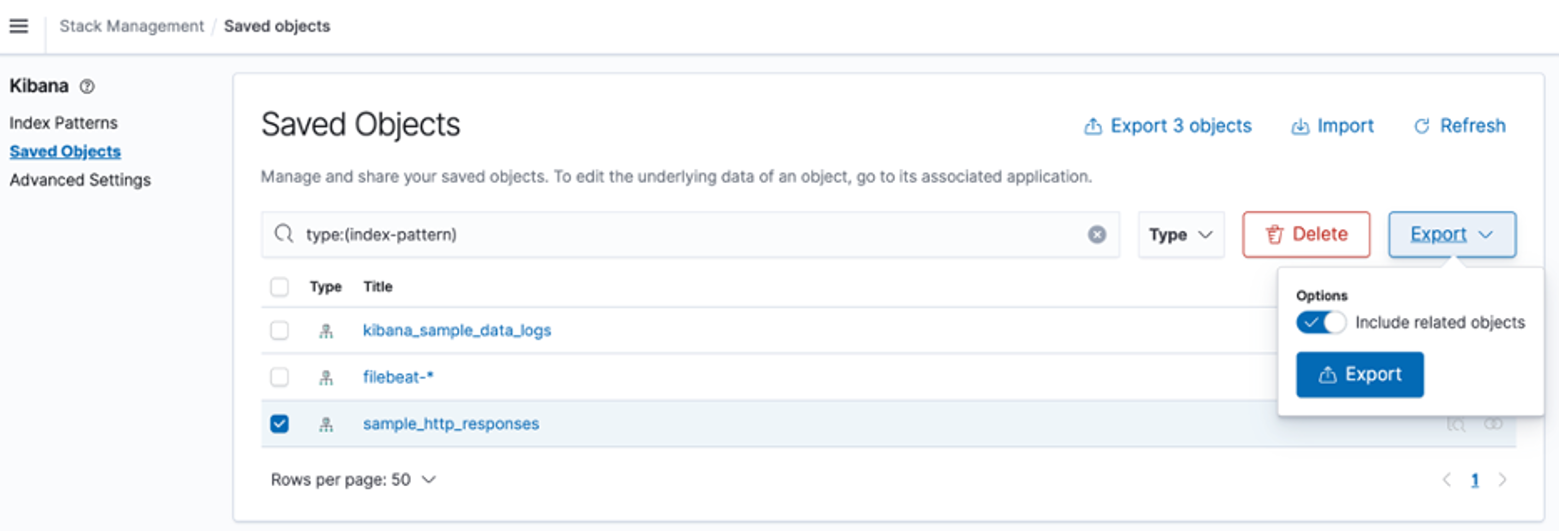

Exporting dashboards and visualizations from Kibana as saved objects, and reimporting them into OpenSearch Dashboards, offers the simplest approach for migrating these items. Maintaining the same index names across reindexed data will allow dashboards to work seamlessly post-migration.

First, migrate index patterns by selecting them under Kibana > Stack Management > Saved Objects, and exporting them as ndjson objects.

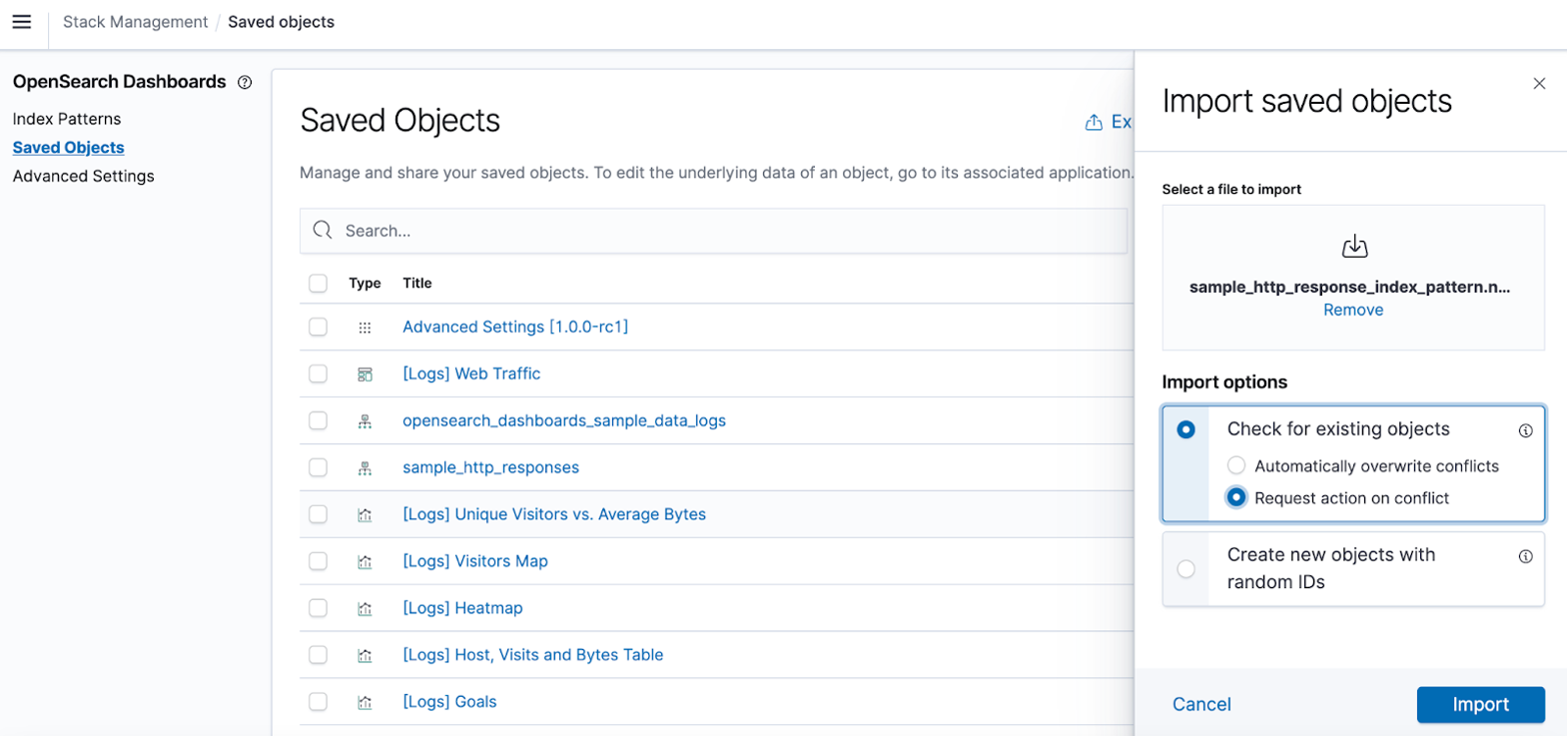

Next, import the index patterns into OpenSearch Dashboards under Stack Management > Saved Objects.

Now migrate dashboards and visualizations using the same process, and check that they function correctly.
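Before importing, it can help to confirm what an export file actually contains. The following sketch counts the saved objects in an ndjson export by type. It assumes each line is one JSON object with a `type` field, and that the export may end with a summary record that has no `type` (as Kibana 7.x exports typically do); the function name is hypothetical.

```python
import json
from collections import Counter


def summarize_saved_objects(ndjson_text):
    """Count saved objects per type in the text of an ndjson export."""
    counts = Counter()
    for line in ndjson_text.splitlines():
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        # Skip the trailing export summary, which carries no "type".
        if "type" in record:
            counts[record["type"]] += 1
    return dict(counts)
```

Comparing these counts with what appears in the saved objects list after import is a quick way to spot objects that failed to migrate.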

Validate the functionality and performance of your new OpenSearch cluster

With the migration to OpenSearch complete, check that everything is working as it should. Verify the functionality of search queries and aggregations your applications depend upon, and check performance versus the previous Elasticsearch cluster.
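One way to make this validation repeatable is to run the same query against both clusters and compare the parsed responses. The sketch below compares hit totals, the order of the returned document IDs, and the reported `took` times. It is a simplified check under stated assumptions: reindexed documents keep their `_id`, and top hits may legitimately differ when scores tie, so a mismatch is a prompt to investigate rather than proof of a problem.

```python
def _total_hits(response):
    """Read the hit total from either dict-style or integer-style responses."""
    total = response["hits"]["total"]
    return total["value"] if isinstance(total, dict) else total


def compare_search_responses(old_response, new_response):
    """Compare one query's results from the old and the new cluster."""
    old_ids = [hit["_id"] for hit in old_response["hits"]["hits"]]
    new_ids = [hit["_id"] for hit in new_response["hits"]["hits"]]
    return {
        "same_total": _total_hits(old_response) == _total_hits(new_response),
        "same_top_ids": old_ids == new_ids,
        # "took" is reported in milliseconds; guard against division by zero.
        "took_ratio": new_response["took"] / max(old_response["took"], 1),
    }
```

A `took_ratio` well above 1 across many representative queries suggests the OpenSearch cluster is slower for that workload and deserves a closer look.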

Do it all again in production

With your migration strategy proven in test environments, repeat the steps above to migrate your production Elasticsearch environment and realize the benefits of fully open source OpenSearch.