Data has always played a central role in the development of software solutions. One of the biggest challenges in this area is the processing and interpretation of unstructured data such as text, images, or audio files. This is where embedding vectors (called embeddings for short) come into play – a technology that is becoming increasingly important in the development of software solutions with the integration of AI functions.

Embeddings are essentially a technique for converting unstructured data into a structure that can be easily processed by software. They are used to transform complex data such as words, sentences, or even entire documents into a vector space, with similar elements close to each other. These vector representations allow machines to recognize and exploit nuances and relationships in the data, which is essential for a variety of applications such as natural language processing (NLP), image recognition, and recommendation systems.

OpenAI, the company behind ChatGPT, offers models for creating embeddings for texts, among other things. At the end of January 2024, OpenAI presented new versions of these embeddings models, which are more powerful and cost-effective than their predecessors. In this article, after a brief introduction to embeddings, we’ll take a closer look at the OpenAI embeddings and the recently introduced innovations, discuss how they work, and examine how they can be used in various software development projects.

Embeddings briefly explained

Imagine you’re in a room full of people and your task is to group these people based on their personality. To do this, you could start asking questions about different personality traits. For example, you could ask how open someone is to new experiences and rate the answer on a scale from 0 to 1. Each person is then assigned a number that represents their openness.

Next, you could ask about another personality trait, such as the level of sense of duty, and again give a score between 0 and 1. Now each person has two numbers that together form a vector in a two-dimensional space. By asking more questions about different personality traits and rating them in a similar way, you can create a multidimensional vector for each person. In this vector space, people who have similar vectors can then be considered similar in terms of their personality.
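The analogy can be sketched in a few lines of Python. The names and scores below are made up purely for illustration; the only point is that people with similar trait scores end up close to each other in the vector space.

```python
import math

# Hypothetical scores (openness, sense of duty), each between 0 and 1
people = {
    "Alice": (0.9, 0.2),
    "Bob": (0.8, 0.3),
    "Carol": (0.1, 0.9),
}

def distance(a, b):
    """Euclidean distance between two trait vectors (smaller means more similar)."""
    return math.dist(a, b)

print(distance(people["Alice"], people["Bob"]))    # small: similar personalities
print(distance(people["Alice"], people["Carol"]))  # larger: different personalities
```

Each additional question simply adds one more number to every tuple, which is what turns the two-dimensional example into a multidimensional one.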

In the world of artificial intelligence, we use embeddings to transform unstructured data into an n-dimensional vector space. Similar to how a person’s personality traits are represented in the vector space, each point in this vector space represents an element of the original data (such as a word or phrase) in a way that is understandable and processable by computers.

OpenAI Embeddings

OpenAI embeddings extend this basic concept. Instead of using simple features like personality traits, OpenAI models use advanced algorithms and big data to achieve a much deeper and more nuanced representation of the data. The model not only analyzes individual words, but also looks at the context in which those words are used, resulting in more accurate and meaningful vector representations.

Another important difference is that OpenAI embeddings are based on sophisticated machine learning models that can learn from a huge amount of data. This means that they can recognize subtle patterns and relationships in the data that go far beyond what could be achieved by simple scaling and dimensioning, as in the initial analogy. This leads to a significantly improved ability to recognize and exploit similarities and differences in the data.

Individual values are not meaningful

While in the personality trait analogy, each individual value of a vector can be directly related to a specific characteristic – for example openness to new experiences or a sense of duty – this direct relationship no longer exists with OpenAI embeddings. In these embeddings, you cannot simply look at a single value of the vector in isolation and draw conclusions about specific properties of the input data. For example, a specific value in the embedding vector of a sentence cannot be used to directly deduce how friendly or not this sentence is.

The reason for this lies in the way machine learning models, especially those used to create embeddings, encode information. These models work with complex, multi-dimensional representations where the meaning of a single element (such as a word in a sentence) is determined by the interaction of many dimensions in vector space. Each aspect of the original data – be it the tone of a text, the mood of an image, or the intent behind a spoken utterance – is captured by the entire spectrum of the vector rather than by individual values within that vector.

Therefore, when working with OpenAI embeddings, it’s important to understand that the interpretation of these vectors is not intuitive or direct. You need algorithms and analysis to draw meaningful conclusions from these high-dimensional and densely coded vectors.

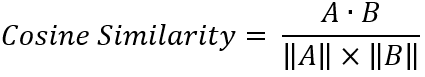

Comparison of vectors with cosine similarity

A central element in dealing with embeddings is measuring the similarity between different vectors. One of the most common methods for this is cosine similarity. This measure is used to determine how similar two vectors are and therefore the data they represent.

To illustrate the concept, let’s start with a simple example in two dimensions. Imagine two vectors in a plane, each represented by a point in the coordinate system. The cosine similarity between these two vectors is determined by the cosine of the angle between them. If the vectors point in the same direction, the angle between them is 0 degrees and the cosine of this angle is 1, indicating maximum similarity. If the vectors are orthogonal (i.e. the angle is 90 degrees), the cosine is 0, indicating no similarity. If they are opposite (180 degrees), the cosine is -1, indicating maximum dissimilarity.

Figure 1 – Cosine similarity
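The three cases described above (same direction, orthogonal, opposite) can be checked with a small sketch. The helper name `cosine_similarity_2d` and the sample vectors are chosen here for illustration only.

```python
import math

def cosine_similarity_2d(a, b):
    """Cosine of the angle between two 2D vectors given as (x, y) tuples."""
    dot = a[0] * b[0] + a[1] * b[1]
    return dot / (math.hypot(*a) * math.hypot(*b))

right = (1.0, 0.0)
print(cosine_similarity_2d(right, (2.0, 0.0)))   # same direction  -> 1.0
print(cosine_similarity_2d(right, (0.0, 3.0)))   # orthogonal      -> 0.0
print(cosine_similarity_2d(right, (-1.0, 0.0)))  # opposite        -> -1.0
```

Note that the length of the vectors does not matter: `(2.0, 0.0)` is twice as long as `(1.0, 0.0)`, yet the similarity is still 1 because only the angle counts.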

A Python Notebook to try out

Accompanying this article is a Google Colab Python Notebook which you can use to try out many of the examples shown here. Colab, short for Colaboratory, is a free cloud service offered by Google. Colab makes it possible to write and execute Python code in the browser. It’s based on Jupyter Notebooks, a popular open-source web application that makes it possible to combine code, equations, visualizations, and text in a single document-like format. The Colab service is well suited for exploring and experimenting with the OpenAI API using Python.

In practice, especially when working with embeddings, we are dealing with n-dimensional vectors. The calculation of the cosine similarity remains conceptually the same, even if the calculation is more complex in higher dimensions. Formally, the cosine similarity of two vectors A and B in an n-dimensional space is calculated by the scalar product (dot product) of these vectors divided by the product of their lengths:

Figure 2 – Calculation of cosine similarity
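Written out in LaTeX notation, the calculation described in the text reads as follows, where $A_i$ and $B_i$ denote the components of the vectors $A$ and $B$ and $\theta$ is the angle between them:

```latex
\[
\cos(\theta)
  = \frac{A \cdot B}{\lVert A \rVert \, \lVert B \rVert}
  = \frac{\sum_{i=1}^{n} A_i B_i}
         {\sqrt{\sum_{i=1}^{n} A_i^2} \; \sqrt{\sum_{i=1}^{n} B_i^2}}
\]
```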

The normalization of vectors plays an important role in the calculation of cosine similarity. If a vector is normalized, this means that its length (norm) is set to 1. For normalized vectors, the scalar product of two vectors is directly equal to the cosine similarity since the denominators in the formula from Figure 2 are both 1. OpenAI embeddings are normalized, which means that to calculate the similarity between two embeddings, only their scalar product needs to be calculated. This not only simplifies the calculation, but also increases efficiency when processing large quantities of embeddings.
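The following sketch illustrates this relationship with two made-up three-dimensional vectors. The helper functions are written out in plain Python for clarity; they are not part of any OpenAI library.

```python
import math

def dot(a, b):
    """Scalar product (dot product) of two vectors of equal length."""
    return sum(x * y for x, y in zip(a, b))

def norm(a):
    """Length (Euclidean norm) of a vector."""
    return math.sqrt(dot(a, a))

def cosine_similarity(a, b):
    """Dot product divided by the product of the lengths."""
    return dot(a, b) / (norm(a) * norm(b))

def normalize(a):
    """Scale a vector so that its length becomes 1."""
    length = norm(a)
    return [x / length for x in a]

a = [3.0, 4.0, 0.0]
b = [1.0, 2.0, 2.0]
a_unit, b_unit = normalize(a), normalize(b)

print(norm(a_unit), norm(b_unit))  # both approximately 1
print(cosine_similarity(a, b))     # full formula
print(dot(a_unit, b_unit))         # dot product only, approximately the same value
```

For vectors that are already normalized, the two divisions and square roots in `cosine_similarity` can be skipped entirely, which adds up when millions of embeddings have to be compared.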

OpenAI Embeddings API

OpenAI offers a web API for creating embeddings. The exact structure of this API, including code examples for curl, Python and Node.js, can be found in the OpenAI reference documentation.

OpenAI does not use the LLM from ChatGPT to create embeddings, but rather specialized models. They were developed specifically for the creation of embeddings and are optimized for this task. Their development was geared towards generating high-dimensional vectors that represent the input data as well as possible. In contrast, ChatGPT is primarily optimized for generating and processing text in a conversational form. The embedding models are also more efficient in terms of memory and computing requirements than more extensive language models such as ChatGPT. As a result, they are not only faster but much more cost-effective.

New embedding models from OpenAI

Until recently, OpenAI recommended the use of the text-embedding-ada-002 model for creating embeddings. This model converts text into a sequence of floating point numbers (vectors) that represent the concepts within the content. The ada v2 model generated embeddings with a size of 1536 dimensions and delivered solid performance in benchmarks such as MIRACL and MTEB, which are used to evaluate model performance in different languages and tasks.

At the end of January 2024, OpenAI presented new, improved models for embeddings:

text-embedding-3-small: A smaller, more efficient model with improved performance compared to its predecessor. It performs better in benchmarks and is significantly cheaper.

text-embedding-3-large: A larger model that is more powerful and creates embeddings with up to 3072 dimensions. It shows the best performance in the benchmarks but is slightly more expensive than ada v2.

A new feature of the two new models allows developers to adjust the size of the embeddings when generating them without significantly losing their concept-representing properties. This enables flexible adaptation, especially for applications that are limited in terms of available memory and computing power.
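The most direct way to get smaller embeddings is the `dimensions` parameter of the API call, which appears as a comment in Listing 1. If a vector has already been generated, a client-side alternative is to keep only the leading values and normalize the result again. The sketch below assumes that this kind of truncation is acceptable for the model in use; the function name `shorten_embedding` and the sample vector are hypothetical.

```python
import math

def shorten_embedding(vec, dimensions):
    """Keep the first `dimensions` values and rescale the result to length 1."""
    truncated = vec[:dimensions]
    length = math.sqrt(sum(x * x for x in truncated))
    return [x / length for x in truncated]

# Made-up 6-dimensional vector standing in for a real embedding
full = [0.5, 0.5, 0.5, 0.3, 0.3, 0.2646]
short = shorten_embedding(full, 3)

print(len(short))                            # 3
print(math.sqrt(sum(x * x for x in short)))  # approximately 1 again
```

The renormalization step matters: without it, the shortened vector would no longer have length 1, and the dot product would no longer equal the cosine similarity.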

Readers who are interested in the details of the new models can find them in the announcement on the OpenAI blog. The exact costs of the various embedding models are published in OpenAI’s pricing information.

New embeddings models

At the end of January 2024, OpenAI introduced new models for creating embeddings. All code examples and result values contained in this article already refer to the new text-embedding-3-large model.

Create embeddings with Python

In the following section, the use of embeddings is demonstrated using a few code examples with Python. The code examples are designed so that they can be tried out in Python Notebooks. They are also available in a similar form in the accompanying Google Colab notebook mentioned above.

Listing 1 shows how to create embeddings with the Python SDK from OpenAI. In addition, numpy is used to show that the embeddings generated by OpenAI are normalized.

Listing 1

```python
from openai import OpenAI
from google.colab import userdata
import numpy as np

# Create OpenAI client
client = OpenAI(
    api_key=userdata.get('openaiKey'),
)

# Define a helper function to calculate embeddings
def get_embedding_vec(input):
    """Returns the embeddings vector for a given input"""
    return client.embeddings.create(
        input=input,
        model="text-embedding-3-large",  # We use the new embeddings model here (announced end of Jan 2024)
        # dimensions=...  # You could limit the number of output dimensions with the new embeddings models
    ).data[0].embedding

# Calculate the embedding vector for a sample sentence
vec = get_embedding_vec("King")
print(vec[:10])

# Calculate the magnitude of the vector. It should be 1 as
# embedding vectors from OpenAI are always normalized.
magnitude = np.linalg.norm(vec)
magnitude
```

Similarity analysis with embeddings

In practice, OpenAI embeddings are often used for similarity analysis of texts (e.g. searching for duplicates, finding relevant text sections in relation to a customer query, and grouping text). Embeddings are very well suited for this, as they work in a fundamentally different way to comparison methods based on characters, such as Levenshtein distance. While the latter measures the similarity between texts by counting the minimum number of single-character operations (insert, delete, replace) required to transform one text into another, embeddings capture the meaning and context of words or sentences. They consider the semantic and contextual relationships between words, going far beyond a simple character-based level of comparison.
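To make the contrast concrete, here is a minimal sketch of the Levenshtein distance using the classic dynamic-programming approach. It is written for readability rather than speed and is only meant to show what a character-based comparison actually measures.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character inserts, deletes, and replacements to turn a into b."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete char_a
                current[j - 1] + 1,      # insert char_b
                previous[j - 1] + cost,  # replace (or keep if equal)
            ))
        previous = current
    return previous[-1]

print(levenshtein("kitten", "sitting"))   # 3
print(levenshtein("soccer", "football"))  # many edits, although both words name the same sport
```

A character-based measure sees "soccer" and "football" as almost entirely different strings, which is exactly the kind of case where a comparison based on meaning is needed.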

As a first example, let’s look at the following three sentences (the following examples are in English, but embeddings work analogously for other languages and cross-language comparisons are also possible without any problems):

I enjoy playing soccer on weekends.

Football is my favorite sport. Playing it on weekends with friends helps me to relax.

In Austria, people often watch soccer on TV on weekends.

In the first and second sentence, two different words are used for the same topic: soccer and football. The third sentence contains the word soccer, but it has a fundamentally different meaning from the first two sentences. If you calculate the similarity of sentence 1 to 2, you get 0.75. The similarity of sentence 1 to 3 is only 0.51. The embeddings have therefore reflected the meaning of the sentences and not the choice of words.
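A comparison like this one could be scripted roughly as follows. The sketch takes the embedding function as a parameter (called `embed` here, a name chosen for illustration) so that it does not depend on a particular client; in a notebook where Listing 1 has been run, `get_embedding_vec` can be passed in. It assumes that the returned vectors are normalized, so the dot product is the cosine similarity.

```python
def similarity(text_a, text_b, embed):
    """Cosine similarity of two texts, assuming `embed` returns normalized vectors."""
    vec_a, vec_b = embed(text_a), embed(text_b)
    return sum(x * y for x, y in zip(vec_a, vec_b))

def compare_to_first(sentences, embed):
    """Compare the first sentence with each of the remaining ones."""
    first, *others = sentences
    return [(other, similarity(first, other, embed)) for other in others]

sentences = [
    "I enjoy playing soccer on weekends.",
    "Football is my favorite sport. Playing it on weekends with friends helps me to relax.",
    "In Austria, people often watch soccer on TV on weekends.",
]

# In a notebook where Listing 1 has been executed:
# for sentence, score in compare_to_first(sentences, get_embedding_vec):
#     print(f"{score:.2f}  {sentence}")
```

Note that this version requests the embedding of the first sentence once per comparison; for larger experiments it would make sense to compute each embedding only once and reuse it.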

Here is another example that requires an understanding of the context in which words are used:

He is interested in Java programming.

He visited Java last summer.

He recently started learning Python programming.

In sentence 2, Java refers to a place, while sentences 1 and 3 have something to do with software development. The similarity of sentence 1 to 2 is 0.536, but that of 1 to 3 is 0.587. As expected, the different meaning of the word Java has an effect on the similarity.

The next example deals with the treatment of negations:

I like going to the gym.

I don’t like going to the gym.

I don’t dislike going to the gym.

Sentences 1 and 2 say the opposite, while sentence 3 expresses something similar to sentence 1. This content is reflected in the similarities of the embeddings. Sentence 1 to sentence 2 yields a cosine similarity of 0.714 while sentence 1 compared to sentence 3 yields 0.773. It is perhaps surprising that there is no major difference between the embeddings. However, it’s important to remember that all three sentences are about the same topic: the question of whether you like going to the gym to work out.

The last example shows that the OpenAI embeddings models, just like ChatGPT, have built in a certain “knowledge” of concepts and contexts through training with texts about the real world.

I need to get better slicing skills to make the most of my Voron.

3D printing is a worthwhile hobby.

Can I have a slice of bread?

In order to compare these sentences in a meaningful way, it’s important to know that Voron is the name of a well-known open-source project in the field of 3D printing. It’s also important to note that slicing is a term that plays an important role in 3D printing. The third sentence also mentions slicing, but in a completely different context to sentence 1. Sentence 2 mentions neither slicing nor Voron. However, the trained knowledge enables the OpenAI Embeddings model to recognize that sentences 1 and 2 have a thematic connection, but sentence 3 means something completely different. The similarity of sentence 1 and 2 is 0.333 while the comparison of sentence 1 and 3 is only 0.263.

Similarity values are not percentages

The similarity values from the comparisons shown above are the cosine similarity of the respective embeddings. Although the cosine similarity values range from -1 to 1, with 1 being the maximum similarity and -1 the maximum dissimilarity, they are not to be interpreted directly as percentages of agreement. Instead, these values should be considered in the context of their relative comparisons. In applications such as searching text sections in a knowledge base, the cosine similarity values are used to sort the text sections in terms of their similarity to a given query. It is important to see the values in relation to each other. A higher value indicates a greater similarity, but the exact meaning of the value can only be determined by comparing it with other similarity values. This relative approach makes it possible to effectively identify and prioritize the most relevant and similar text sections.

Embeddings and RAG solutions

Embeddings play a crucial role in Retrieval Augmented Generation (RAG) solutions, an approach in artificial intelligence that combines the capabilities of information retrieval and text generation. Embeddings are used in RAG systems to retrieve relevant information from large data sets or knowledge databases. It is not necessary for these databases to have been included in the original training of the embedding models. They can be internal databases that are not available on the public Internet.

With RAG solutions, queries or input texts are converted into embeddings. The cosine similarity to the existing document embeddings in the database is then calculated to identify the most relevant text sections from the database. This retrieved information is then used by a text generation model such as ChatGPT to generate contextually relevant responses or content.

Vector databases play a central role in the functioning of RAG systems. They are designed to efficiently store, index and query high-dimensional vectors. In the context of RAG solutions and similar systems, vector databases serve as storage for the embeddings of documents or pieces of data that originate from a large amount of information. When a user makes a request, this request is first transformed into an embedding vector. The vector database is then used to quickly find the vectors that correspond most closely to this query vector – i.e. those documents or pieces of information that have the highest similarity. This process of quickly finding similar vectors in large data sets is known as Nearest Neighbor Search.
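Conceptually, the search step can be sketched as a brute-force scan over all stored vectors. The document titles and two-dimensional vectors below are made up and stand in for real, normalized embeddings; a real vector database would use an index rather than comparing the query with every entry.

```python
def top_k(query_vec, documents, k=2):
    """Return the k (score, text) pairs with the highest dot product against the query.

    `documents` is a list of (text, vector) pairs with normalized vectors,
    so the dot product equals the cosine similarity.
    """
    scored = [
        (sum(q * d for q, d in zip(query_vec, vec)), text)
        for text, vec in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]

# Made-up, already normalized 2D vectors standing in for real embeddings
documents = [
    ("Maintenance schedule", (0.6, 0.8)),
    ("Holiday policy", (-1.0, 0.0)),
    ("Lubrication guide", (0.8, 0.6)),
]
query = (1.0, 0.0)

for score, text in top_k(query, documents):
    print(f"{score:.2f}  {text}")
```

The scores are only used to order the candidates, which matches the earlier point that similarity values are meaningful relative to each other rather than as absolute percentages.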

Challenge: Splitting documents

A detailed explanation of how RAG solutions work is beyond the scope of this article. However, the explanations regarding embeddings are hopefully helpful for getting started with further research on the topic of RAG.

One specific point should nevertheless be highlighted at the end of this article: a particular and often underestimated challenge in the development of RAG systems that go beyond Hello World prototypes is the splitting of longer texts. Splitting is necessary because the OpenAI embeddings models are limited to just over 8,000 tokens per input. One token corresponds to approximately 4 characters in the English language.
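The rule of thumb of roughly four characters per token allows a quick plausibility check before sending a text to the API. The sketch below is only an estimate and not a real tokenizer; the constant `MAX_TOKENS` is an assumption that rounds the limit mentioned above down to 8,000, and the function names are hypothetical.

```python
MAX_TOKENS = 8000    # assumption: rounded-down version of the limit mentioned above
CHARS_PER_TOKEN = 4  # rule of thumb for English text

def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count (not a real tokenizer)."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division

def probably_fits(text: str) -> bool:
    """True if the estimated token count stays within the assumed limit."""
    return estimate_tokens(text) <= MAX_TOKENS

print(estimate_tokens("VX-2000 requires regular lubrication."))
print(probably_fits("word " * 10_000))  # 50,000 characters, so the estimate exceeds the limit
```

For texts in other languages or texts with many special characters, the ratio can differ noticeably, so an exact count requires the tokenizer that belongs to the model.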

Finding a good strategy for splitting documents is not easy. Naive approaches such as splitting after a certain number of characters can lead to the context of text sections being lost or distorted. Anaphoric links are a typical example of this, as the following two sentences show:

VX-2000 requires regular lubrication to maintain its smooth operation.

The machine requires the DX97 oil, as specified in the maintenance section of this manual.

The machine in the second sentence is an anaphoric link to the first sentence. If the text were to be split up after the first sentence, the essential context would be lost, namely that the DX97 oil is necessary for the VX-2000 machine.
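The effect is easy to reproduce with a naive splitter. The following sketch cuts the two example sentences every 80 characters, a chunk size chosen arbitrarily for illustration, and reports which chunks still mention the machine by name.

```python
def split_by_characters(text: str, chunk_size: int) -> list[str]:
    """Naive splitting: cut the text every `chunk_size` characters."""
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

text = (
    "VX-2000 requires regular lubrication to maintain its smooth operation. "
    "The machine requires the DX97 oil, as specified in the maintenance "
    "section of this manual."
)

for chunk in split_by_characters(text, chunk_size=80):
    print(repr(chunk), "| mentions VX-2000:", "VX-2000" in chunk)
```

The chunk that contains the DX97 oil no longer contains the name VX-2000, and the cut even lands in the middle of a word. An embedding calculated for that chunk therefore cannot capture which machine the oil belongs to.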

There are various approaches to solving this problem, which will not be discussed here to keep this article concise. However, it is essential for developers of such software systems to be aware of the problem and understand how splitting large texts affects embeddings.

Summary

Embeddings play a fundamental role in the modern AI landscape, especially in the field of natural language processing. By transforming complex, unstructured data into high-dimensional vector spaces, embeddings enable in-depth understanding and efficient processing of information. They form the basis for advanced technologies such as RAG systems and facilitate tasks such as information retrieval, context analysis, and data-driven decision-making.

OpenAI’s latest innovations in the field of embeddings, introduced at the end of January 2024, mark a significant advance in this technology. With the introduction of the new text-embedding-3-small and text-embedding-3-large models, OpenAI now offers more powerful and cost-efficient options for developers. These models not only show improved performance in standardized benchmarks, but also offer the ability to find the right balance between performance and memory requirements on a project-specific basis through customizable embedding sizes.

Embeddings are a key component in the development of intelligent systems that aim to process natural language in a meaningful way.
