OpenAI’s CLIP Model

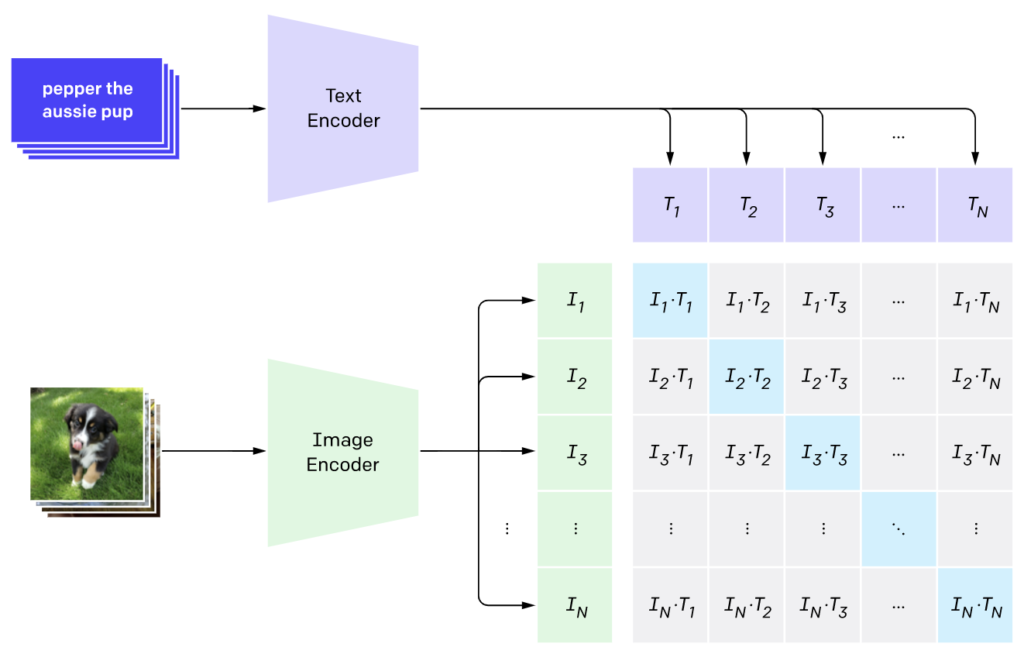

I first encountered the CLIP model in early 2022 while experimenting with AI drawing models. CLIP (Contrastive Language-Image Pre-Training) is a model proposed by OpenAI in 2021. It encodes images and text into vectors in a shared embedding space, where they can be compared directly. CLIP is a building block of many text-to-image models; Stable Diffusion, for example, uses CLIP's text encoder to turn the prompt into the conditioning signal that guides image generation.

As shown above, the CLIP model consists of two components: a Text Encoder and an Image Encoder. Let's take the ViT-B-32 version as an example (other variants produce vectors of different sizes):

- Text Encoder can encode any text (up to 77 tokens, including the start and end tokens) into a 1×512 vector.

- Image Encoder can encode any image into a 1×512 vector.

By computing the cosine similarity (or a distance) between the two vectors, we can measure how closely a piece of text matches an image.
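For reference, cosine similarity is cos(a, b) = (a · b) / (‖a‖ ‖b‖): 1 when two vectors point the same way, 0 when they are orthogonal. The sketch below writes it out in plain Python; the 3-dimensional toy vectors are made up for illustration and stand in for real 1×512 CLIP outputs.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between a and b: (a . b) / (|a| * |b|)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors standing in for one text embedding and two image embeddings.
text_vec = [0.9, 0.1, 0.0]
image_a = [0.8, 0.2, 0.1]  # points in nearly the same direction as text_vec
image_b = [0.0, 0.1, 0.9]  # points in a very different direction
print(cosine_similarity(text_vec, image_a) > cosine_similarity(text_vec, image_b))  # True
```

Because the measure ignores vector length, only the direction of an embedding matters when ranking matches.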

Image Search on a Server

I found this fascinating, as it was the first time I had seen images and text compared this way. Based on this principle, I quickly set up an image search tool on a server. First, I ran every image through CLIP to obtain its image vector, giving a list of 1×512 vectors.

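```python
import glob

import clip
import torch
from PIL import Image

device = "cuda" if torch.cuda.is_available() else "cpu"
model, preprocess = clip.load("ViT-B/32", device=device)

# get the list of all images.
img_lst = glob.glob('imgs/*.jpg')
img_features = []

# calculate a 1x512 vector for every image (no gradients needed for inference).
with torch.no_grad():
    for img_path in img_lst:
        image = preprocess(Image.open(img_path)).unsqueeze(0).to(device)
        image_features = model.encode_image(image)
        img_features.append(image_features)
```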

Then, given a search query, compute its 1×512 text vector and compare it with each image vector in a for-loop.

```python
import torch.nn.functional as F

text_query = 'lonely'

with torch.no_grad():
    # tokenize the query, then encode it with the CLIP text encoder (1x512).
    text = clip.tokenize([text_query]).to(device)
    text_feature = model.encode_text(text)

# compare the text vector with each image vector.
sims_lst = []
for img_feature in img_features:
    sim = F.cosine_similarity(text_feature, img_feature)
    sims_lst.append(sim.item())
```

Finally, rank the scores and take the top K results. Here I return the top 3 image files and display the most relevant one.

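```python
import numpy as np
from IPython.display import Image as IPyImage, display

K = 3

# np.argsort sorts ascending, so the last K indices are the top-K matches.
sims_lst_np = np.array(sims_lst)
idxs = np.argsort(sims_lst_np)[-K:]

# display the most relevant result (the last index has the highest score).
display(IPyImage(filename=img_lst[idxs[-1]]))
```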
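Sorting every score with `np.argsort` costs O(n log n). When only the best K matches are needed, the standard library's `heapq.nlargest` does the same job in O(n log K) and returns the indices already ordered from best to worst. A minimal sketch, assuming `sims_lst` holds one score per image as in the loop above (the scores here are made up):

```python
import heapq
from typing import List, Sequence


def top_k_indices(scores: Sequence[float], k: int) -> List[int]:
    """Return the indices of the k highest scores, best first."""
    # nlargest keeps a heap of at most k items while scanning, so it never sorts all scores.
    return heapq.nlargest(k, range(len(scores)), key=scores.__getitem__)


sims_lst = [0.21, 0.34, 0.18, 0.29, 0.31]  # made-up scores for illustration
print(top_k_indices(sims_lst, 3))  # [1, 4, 3]
```

For a few thousand images either approach is fast; the heap mainly pays off as the library grows while K stays small.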

I found its image search results far superior to Google's. Here are the top 3 results when I search for the keyword “lonely”:

Integrating CLIP into iOS with Swift

After marveling at the results, I wondered: Is there a way to bring CLIP to mobile devices? After all, the place where I store the most photos is neither my MacBook Air nor my server, but rather my iPhone.

To port a large GPU-based model to the iPhone, operator support and execution efficiency are the two most critical factors.

1. Operator Support

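Fortunately, in December 2022, Apple demonstrated that Stable Diffusion could be ported to iOS. Since Stable Diffusion uses a CLIP text encoder (and CLIP's image encoder relies on the same kinds of transformer operations), this showed that the deep learning operators CLIP needs are supported in iOS 16.0.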

2. Execution Efficiency

Even with operator support, if execution were too slow (for example, if computing vectors for 10,000 images took half an hour, or a single search took a minute), porting CLIP to mobile devices would be pointless. This can only be determined through hands-on experimentation.

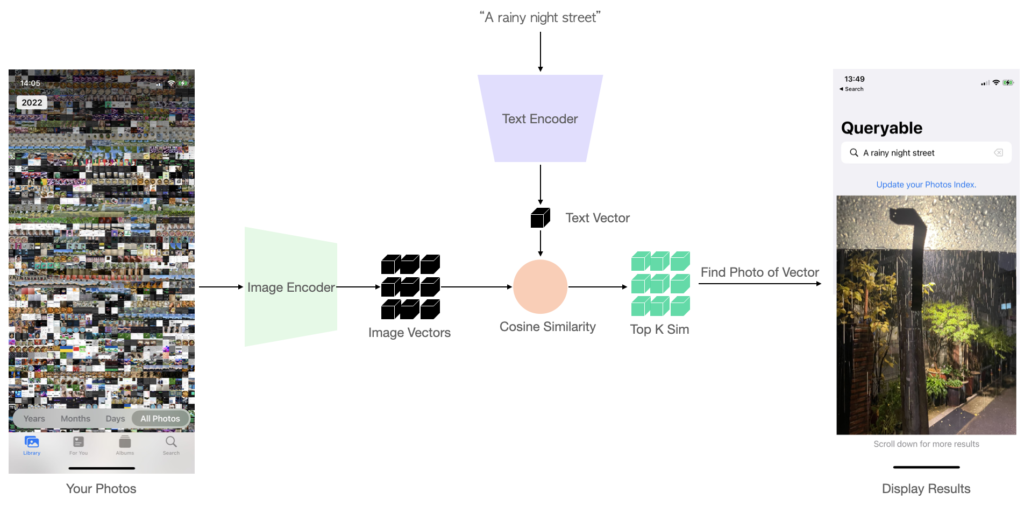

I exported the Text Encoder and Image Encoder to Core ML models using the coremltools library. The two models have a total file size of 300 MB. Then I started writing the Swift code.

In Swift, I load the Text/Image Encoder models and compute all the image vectors. When the user enters a search keyword, the app first computes the text vector and then computes its cosine similarity with each image vector.

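The core code (simplified) is as follows:

```swift
import CoreML

// load the Text/Image Encoder models.
let config = MLModelConfiguration()
let text_encoder = try MLModel(contentsOf: TextEncoderURL, configuration: config)
let image_encoder = try MLModel(contentsOf: ImageEncoderURL, configuration: config)

// given a prompt/photo, calculate its CLIP vector.
// `encode` is shorthand for a helper that prepares the input and calls the
// model's prediction(from:) method; it is not part of Core ML itself.
// `photo` is the image being indexed.
let text_feature = text_encoder.encode("a dog")
let image_feature = image_encoder.encode(photo)

// compute the cosine similarity.
let sim = cosine_similarity(A: image_feature, B: text_feature)
```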

As a SwiftUI beginner, I found that Swift has no built-in cosine similarity function, so I wrote one myself with Accelerate. The code below is a Swift translation of the cosine similarity formula from Wikipedia.

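```swift
import Accelerate
import CoreML

// cosine similarity = (A · B) / (‖A‖ × ‖B‖)
func cosine_similarity(A: MLShapedArray<Float32>, B: MLShapedArray<Float32>) -> Float {
    // Euclidean norms (vDSP.rootMeanSquare would also divide by the vector length).
    let magnitude = sqrt(vDSP.sumOfSquares(A.scalars)) * sqrt(vDSP.sumOfSquares(B.scalars))
    let dotarray = vDSP.dot(A.scalars, B.scalars)
    return dotarray / magnitude
}
```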

I split the Text Encoder and Image Encoder into two models because, when you actually use this Photos search app, the query text changes with every search while the content of the Photos library stays fixed. All image vectors can therefore be computed once and saved in advance; only the text vector needs to be computed for each search.
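The split can be sketched as a small index: image vectors are computed once, L2-normalized, and stored; each search encodes only the query and takes dot products, which equal cosine similarities for unit-length vectors. This is a minimal sketch, not Queryable's code: `encode_image` and `encode_text` are hypothetical stand-ins for the two CLIP encoders, and the in-memory dict stands in for whatever storage the app actually uses.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Vector = List[float]


def normalize(v: Sequence[float]) -> Vector:
    """Scale v to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


class PhotoIndex:
    def __init__(self, encode_image: Callable[[str], Sequence[float]],
                 encode_text: Callable[[str], Sequence[float]]) -> None:
        # Hypothetical stand-ins for the CLIP Image Encoder and Text Encoder.
        self.encode_image = encode_image
        self.encode_text = encode_text
        self.vectors: Dict[str, Vector] = {}

    def build(self, photo_ids: Sequence[str]) -> None:
        # Expensive step, done once: run the Image Encoder on every photo.
        for photo_id in photo_ids:
            self.vectors[photo_id] = normalize(self.encode_image(photo_id))

    def search(self, query: str, k: int = 3) -> List[Tuple[str, float]]:
        # Cheap step, done per query: one Text Encoder call plus one dot product per photo.
        q = normalize(self.encode_text(query))
        scored = [(pid, sum(a * b for a, b in zip(q, v))) for pid, v in self.vectors.items()]
        scored.sort(key=lambda item: item[1], reverse=True)
        return scored[:k]


# Toy encoders for illustration only: the "sea" query should rank the beach photo first.
fake_images = {"beach.jpg": [1.0, 0.0], "forest.jpg": [0.0, 1.0]}
index = PhotoIndex(encode_image=fake_images.__getitem__,
                   encode_text=lambda q: [1.0, 0.1] if q == "sea" else [0.1, 1.0])
index.build(list(fake_images))
print(index.search("sea", k=1))
```

Normalizing once at indexing time means each search skips the per-image norm computation entirely.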

Furthermore, I parallelized the similarity computation across multiple cores, which significantly increased search speed: a single search over fewer than 10,000 images takes less than 1 second. This makes real-time text search over a Photos library of tens of thousands of images possible.
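The general pattern is to split the stored vectors into contiguous chunks, score each chunk concurrently, and concatenate the results in order. The sketch below shows that chunk-and-merge structure with Python's `concurrent.futures`; it assumes unit-length vectors (so a dot product is the cosine similarity) and is not Queryable's implementation. In CPython the GIL prevents threads from running pure-Python arithmetic on several cores at once, so for a real speedup you would swap in `ProcessPoolExecutor` or a vectorized library; on iOS, one option is `DispatchQueue.concurrentPerform` with Accelerate.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def dot_scores(query: Sequence[float], vectors: Sequence[Sequence[float]]) -> List[float]:
    """Dot product of the (unit-length) query with each (unit-length) image vector."""
    return [sum(q * v for q, v in zip(query, vec)) for vec in vectors]


def parallel_scores(query: Sequence[float], vectors: Sequence[Sequence[float]],
                    workers: int = 4) -> List[float]:
    """Split the vectors into one chunk per worker and score the chunks concurrently."""
    if not vectors:
        return []
    chunk = -(-len(vectors) // workers)  # ceiling division
    chunks = [vectors[i:i + chunk] for i in range(0, len(vectors), chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map preserves chunk order, so the flattened scores line up with the input vectors.
        results = list(pool.map(dot_scores, [query] * len(chunks), chunks))
    return [score for part in results for score in part]


vectors = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.8]]
print(parallel_scores([1.0, 0.0], vectors, workers=2))
```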

Below is a flowchart of how Queryable, the resulting Photos search app, works:

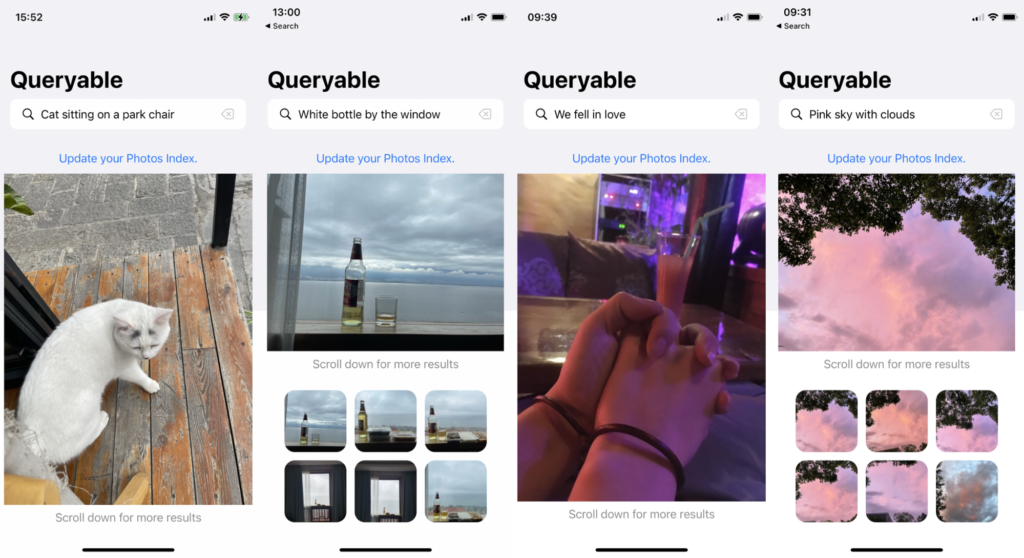

Performance

But how much better is CLIP-based album search than the built-in search in the iPhone Photos app? The answer: overwhelmingly better. With CLIP, you can search for a scene you have in mind, a tone, an object, or even the emotion an image conveys.

To use Queryable, you first need to build the index: the app traverses your album, computes all the image vectors, and stores them. This happens only once. The total time depends on how many photos you have; on the iPhone 12 mini, indexing runs at about 2,000 photos per minute. When you have new photos, you can update the index manually, which is very fast.
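Updating is fast because only new photos have to go through the Image Encoder. A minimal sketch of such an incremental update, assuming the index maps a photo identifier to its stored vector and `encode_image` is a hypothetical wrapper around the Image Encoder (this is not Queryable's actual code):

```python
from typing import Callable, Dict, Iterable, List, Sequence, Tuple


def update_index(index: Dict[str, List[float]], current_ids: Iterable[str],
                 encode_image: Callable[[str], Sequence[float]]) -> Tuple[int, int]:
    """Bring a saved index in line with the album; return (added, removed)."""
    current = set(current_ids)
    # Drop vectors for photos that were deleted from the album.
    removed = [pid for pid in index if pid not in current]
    for pid in removed:
        del index[pid]
    # Encode only photos the index has not seen yet; existing vectors are reused as-is.
    added = [pid for pid in current if pid not in index]
    for pid in added:
        index[pid] = list(encode_image(pid))
    return len(added), len(removed)
```

Running it a second time on an unchanged album encodes nothing and returns `(0, 0)`.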

In the latest version, you can optionally grant the app network access so that it can download photos stored on iCloud. A download happens only when the photo appears in your search results, its original is stored on iCloud, and you have opened its details page and tapped the download icon. Once you grant the permission, you can close and reopen the app, and the photos will be downloaded from iCloud automatically.

Device requirements

- iOS 16.0 or above

- iPhone 11 (A13 chip) or later models

Search time also depends on the number of photos: with fewer than 10,000 photos, a search takes less than 1 s. On my iPhone 12 mini, with 35,000 photos, each search takes about 2.8 s.
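The two timings are consistent with search time growing roughly linearly with the number of photos, as expected when every stored image vector is scored. Assuming linear scaling (an assumption, not a separate measurement), the 35,000-photo timing extrapolates to a 10,000-photo library as:

$$
t_{10{,}000} \approx 2.8\ \text{s} \times \frac{10{,}000}{35{,}000} = 0.8\ \text{s}
$$

which agrees with the under-1-second figure.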

Q&A on Queryable

1. On privacy and security issues

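Queryable is designed as an OFFLINE app: indexing and search run entirely on the device and never require a network connection, which avoids privacy issues. Network access is requested only if you opt in to downloading iCloud originals, as described above.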

2. What if my pictures are stored on iCloud?

Without network access, Queryable can only use the cached low-resolution versions of the photos in your local Photos library. However, CLIP itself resizes every input image to a very small size (224×224 for ViT-B-32), so storing a photo on iCloud does not affect search accuracy; the only drawback is that you cannot view the original image in the search results.
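The reason small cached copies suffice: CLIP's reference preprocessing (the `preprocess` transform returned by `clip.load`) resizes the shorter side of an image to 224 pixels and then center-crops a 224×224 square. The sketch below mirrors only that geometry in plain Python, touching no pixels, to show that a full-size photo and a smaller copy with the same aspect ratio end up at the same resolution, as long as the copy's shorter side is at least 224 pixels. The photo sizes are examples, and rounding details may differ slightly between torchvision versions.

```python
from typing import Tuple


def clip_preprocess_geometry(width: int, height: int,
                             n_px: int = 224) -> Tuple[Tuple[int, int], Tuple[int, int, int, int]]:
    """Return the resized (width, height) and the (left, top, right, bottom) center-crop box."""
    short, long = min(width, height), max(width, height)
    # Resize so the shorter side becomes n_px, keeping the aspect ratio.
    new_long = int(n_px * long / short)
    new_w, new_h = (n_px, new_long) if width <= height else (new_long, n_px)
    # Center-crop an n_px x n_px square out of the resized image.
    left = int(round((new_w - n_px) / 2.0))
    top = int(round((new_h - n_px) / 2.0))
    return (new_w, new_h), (left, top, left + n_px, top + n_px)


for size in [(4032, 3024), (512, 384)]:  # a full-size photo vs. a smaller copy
    resized, box = clip_preprocess_geometry(*size)
    print(size, "->", resized, "crop", box)  # both end in the same 224x224 crop
```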