A microscopic differential blood count includes an analysis of six types of white blood cells: lymphocytes, basophilic granulocytes, eosinophilic granulocytes, monocytes, and two forms of neutrophilic granulocytes, namely band (rod-nucleated) and segmented neutrophils. The number, maturity, and distribution of these white blood cells provide valuable information about possible diseases. Here, however, we focus not on preparing the blood smears but on recognizing the blood cells.

For the tests described here, a Bresser Trino microscope with a MikrOkular eyepiece camera was connected to a computer (HP Z600). The program presented in this article was used for image analysis; it is based on neural networks built with the Java frameworks Neuroph and Deeplearning4j (DL4J). The smears were stained with Löffler solution.

Training data

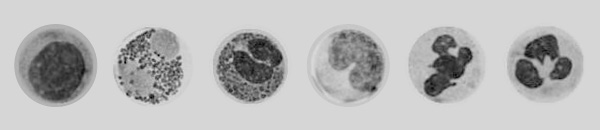

For neural network training, the images of the blood cells were centered, converted to grayscale, and normalized. Figure 1 shows the images after this preparation.
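The grayscale conversion and normalization can be illustrated with a minimal sketch in plain Java (java.awt.image). It is an assumption-laden illustration, not the authors' tool: the ITU-R BT.601 luma weights and the min-max scaling to [0, 1] are our choices, since the article does not specify them, the centering step is omitted, and the class name GrayscaleNormalizer is hypothetical.

```java
import java.awt.image.BufferedImage;

final class GrayscaleNormalizer {

    /** Converts an RGB image to grayscale and min-max normalizes the values to [0, 1] (assumed scheme). */
    static double[] toNormalizedGray(BufferedImage img) {
        int w = img.getWidth();
        int h = img.getHeight();
        double[] gray = new double[w * h];
        double min = Double.MAX_VALUE;
        double max = -Double.MAX_VALUE;
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int rgb = img.getRGB(x, y);
                int r = (rgb >> 16) & 0xFF;
                int g = (rgb >> 8) & 0xFF;
                int b = rgb & 0xFF;
                // ITU-R BT.601 luma weights (assumption: the article does not name a formula)
                double v = 0.299 * r + 0.587 * g + 0.114 * b;
                gray[y * w + x] = v;
                min = Math.min(min, v);
                max = Math.max(max, v);
            }
        }
        double range = max - min;
        for (int i = 0; i < gray.length; i++) {
            // A uniform image (range 0) maps to all zeros instead of dividing by zero
            gray[i] = range == 0 ? 0.0 : (gray[i] - min) / range;
        }
        return gray;
    }

    public static void main(String[] args) {
        BufferedImage img = new BufferedImage(2, 1, BufferedImage.TYPE_INT_RGB);
        img.setRGB(0, 0, 0x000000); // black pixel
        img.setRGB(1, 0, 0xFFFFFF); // white pixel
        double[] v = toNormalizedGray(img);
        System.out.println(v[0] + " " + v[1]); // prints 0.0 1.0
    }
}
```

Min-max scaling per image compensates for differences in illumination between photographs; a fixed division by 255 would be the simpler alternative.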

A dataset of 663 images with six labels (ly, bg, eog, mo, sng, seg) was compiled for training. For Neuroph, the image labels were defined as shown in Listing 1.

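List<String> imageLabels = new ArrayList<>();

imageLabels.add("ly");  // lymphocytes

imageLabels.add("bg");  // basophilic granulocytes

imageLabels.add("eog"); // eosinophilic granulocytes

imageLabels.add("mo");  // monocytes

imageLabels.add("sng"); // band (rod-nucleated) neutrophils

imageLabels.add("seg"); // segmented neutrophils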
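With six labels, each training image needs a target vector with one entry per output neuron. The sketch below shows the usual one-hot encoding for that purpose. We assume Neuroph builds a comparable vector internally when it creates the training set, but the helper oneHot and the class OneHotLabels are hypothetical and not part of Neuroph's API.

```java
import java.util.Arrays;
import java.util.List;

final class OneHotLabels {

    /** Returns a vector with 1.0 at the position of label in labels and 0.0 elsewhere. */
    static double[] oneHot(String label, List<String> labels) {
        int index = labels.indexOf(label);
        if (index < 0) {
            throw new IllegalArgumentException("Unknown label: " + label);
        }
        double[] target = new double[labels.size()];
        target[index] = 1.0;
        return target;
    }

    public static void main(String[] args) {
        List<String> imageLabels = Arrays.asList("ly", "bg", "eog", "mo", "sng", "seg");
        // A segmented neutrophil maps to the sixth output neuron
        System.out.println(Arrays.toString(oneHot("seg", imageLabels)));
        // prints [0.0, 0.0, 0.0, 0.0, 0.0, 1.0]
    }
}
```

The order of the list therefore matters: it fixes which output neuron stands for which cell type.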

The input data directory is then organized as shown in Figure 2.

For DL4J, the input data directory (your data location) is organized differently (Figure 3).
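DL4J's ParentPathLabelGenerator (used in Listing 6) derives each image's label from the name of the directory that contains it, so the data location needs one sub-directory per label (ly, bg, eog, mo, sng, seg). The stdlib sketch below mimics that rule to make the expected layout concrete; it is not DL4J code, and the class name ParentDirLabel and the example paths are hypothetical.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

final class ParentDirLabel {

    /** Returns the name of the directory that directly contains the file, e.g. ".../seg/cell.png" gives "seg". */
    static String labelFor(Path imageFile) {
        Path parent = imageFile.getParent();
        if (parent == null || parent.getFileName() == null) {
            throw new IllegalArgumentException("File has no parent directory: " + imageFile);
        }
        return parent.getFileName().toString();
    }

    public static void main(String[] args) {
        // Hypothetical layout: one sub-directory per cell type below the data location
        Path p = Paths.get("data", "leukos", "seg", "cell_0001.png");
        System.out.println(labelFor(p)); // prints seg
    }
}
```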

Most of the images in the dataset came from our own photographs; the rest were taken from open, freely available internet sources. In addition, every image was also stored rotated by 90, 180, and 270 degrees, so the dataset contains the images several times in different orientations.
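The rotation-based augmentation can be sketched with plain java.awt.image code. The sketch rotates by remapping pixels, which is exact for multiples of 90 degrees (no interpolation). The class and method names are hypothetical, since the article does not describe the authors' own tooling, and saving the results (for example with ImageIO.write) is left out.

```java
import java.awt.image.BufferedImage;

final class RotationAugmenter {

    /** Rotates an image 90 degrees clockwise by remapping pixels; width and height swap. */
    static BufferedImage rotate90(BufferedImage src) {
        int w = src.getWidth();
        int h = src.getHeight();
        BufferedImage dst = new BufferedImage(h, w, BufferedImage.TYPE_INT_ARGB);
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                // Under a clockwise quarter turn, pixel (x, y) moves to (h - 1 - y, x)
                dst.setRGB(h - 1 - y, x, src.getRGB(x, y));
            }
        }
        return dst;
    }

    /** Returns the original image plus its 90, 180 and 270 degree rotations. */
    static BufferedImage[] withRotations(BufferedImage src) {
        BufferedImage r90 = rotate90(src);
        BufferedImage r180 = rotate90(r90);
        BufferedImage r270 = rotate90(r180);
        return new BufferedImage[] {src, r90, r180, r270};
    }
}
```

One caveat: if rotated copies of the same cell end up in both the training and the test split, the measured test accuracy is optimistic, so splitting before augmenting is the safer order.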


Neuroph MLP Network

The main dependencies for the Neuroph project in pom.xml are shown in Listing 2.

<dependency> <groupId>org.neuroph</groupId> <artifactId>neuroph-core</artifactId> <version>2.96</version> </dependency>

<dependency> <groupId>org.neuroph</groupId> <artifactId>neuroph-imgrec</artifactId> <version>2.96</version> </dependency>

<dependency> <groupId>log4j</groupId> <artifactId>log4j</artifactId> <version>1.2.17</version> </dependency>

A multilayer perceptron was configured with the parameters shown in Listing 3.

private static final double LEARNINGRATE = 0.05;

private static final double MAXERROR = 0.05; // training stops once the total network error falls below this value

private static final int HIDDENLAYERS = 13; // number of neurons in the single hidden layer

// Load the training images (imageDir: path to the image directory), sampled at 10 x 10 pixels

Map<String, FractionRgbData> map;

DataSet dataSet;

try {

map = ImageRecognitionHelper.getFractionRgbDataForDirectory(new File(imageDir), new Dimension(10, 10));

dataSet = ImageRecognitionHelper.createRGBTrainingSet(imageLabels, map);

// create neural network with one hidden layer

List<Integer> hiddenLayers = new ArrayList<>();

hiddenLayers.add(HIDDENLAYERS);

NeuralNetwork nnet = ImageRecognitionHelper.createNewNeuralNetwork("leukos", new Dimension(10, 10), ColorMode.COLOR_RGB, imageLabels, hiddenLayers, TransferFunctionType.SIGMOID);

// set learning rule parameters

BackPropagation mb = (BackPropagation) nnet.getLearningRule();

mb.setLearningRate(LEARNINGRATE);

mb.setMaxError(MAXERROR);

// train the network, then save it

nnet.learn(dataSet);

nnet.save("leukos.net");

} catch (IOException ex) {

Logger.getLogger(Neuroph.class.getName()).log(Level.SEVERE, null, ex);

}
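To put the network size in perspective: with a 10 x 10 sampling resolution and ColorMode.COLOR_RGB, we assume the input layer has 10 · 10 · 3 = 300 neurons, followed by one hidden layer of 13 neurons and six output neurons. Assuming one bias per non-input neuron, the number of trainable weights can be counted as in the sketch below (the class name MlpSize is hypothetical).

```java
final class MlpSize {

    /** Counts weights of a fully connected network, including one bias per neuron in each non-input layer. */
    static int countWeights(int... layerSizes) {
        int total = 0;
        for (int i = 1; i < layerSizes.length; i++) {
            // every neuron in layer i receives all outputs of layer i - 1 plus one bias
            total += (layerSizes[i - 1] + 1) * layerSizes[i];
        }
        return total;
    }

    public static void main(String[] args) {
        int inputs = 10 * 10 * 3; // 10 x 10 samples, three RGB values each (assumption)
        System.out.println(countWeights(inputs, 13, 6)); // prints 3997
    }
}
```

Roughly 4,000 weights is a small model, which fits the modest dataset of 663 images.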

Example

A test can be implemented as shown in Listing 4.

HashMap<String, Double> output;

String fileName = "leukos112.seg"; // image file to classify

NeuralNetwork nnetTest = NeuralNetwork.createFromFile("leukos.net");

// get the image recognition plugin from the neural network

ImageRecognitionPlugin imageRecognition = (ImageRecognitionPlugin) nnetTest.getPlugin(ImageRecognitionPlugin.class);

// output maps each label to the value of its output neuron; reading the file may throw an IOException

output = imageRecognition.recognizeImage(new File(fileName));
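recognizeImage returns one output value per label rather than a single decision. A common way to turn that map into a prediction is to pick the label with the highest value. The helper below is a hypothetical sketch of that step, not part of Neuroph, and the output values in main are made up for illustration.

```java
import java.util.HashMap;
import java.util.Map;

final class BestLabel {

    /** Returns the label with the highest output value, or null for an empty map; ties follow iteration order. */
    static String bestLabel(Map<String, Double> output) {
        String best = null;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (Map.Entry<String, Double> e : output.entrySet()) {
            if (e.getValue() > bestScore) {
                bestScore = e.getValue();
                best = e.getKey();
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Hypothetical output values for three of the six labels
        Map<String, Double> output = new HashMap<>();
        output.put("ly", 0.02);
        output.put("seg", 0.91);
        output.put("sng", 0.11);
        System.out.println(bestLabel(output)); // prints seg
    }
}
```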

Client

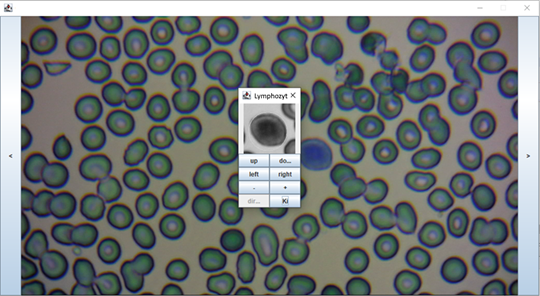

A simple Swing interface was developed for graphical cell recognition. Figure 4 shows an example in which a lymphocyte is recognized.

DL4J convolutional network

The main dependencies for the DL4J project in pom.xml are shown in Listing 5.

<dependency> <groupId>org.deeplearning4j</groupId> <artifactId>deeplearning4j-core</artifactId> <version>1.0.0-beta4</version> </dependency>

<dependency> <groupId>org.nd4j</groupId> <artifactId>nd4j-native-platform</artifactId> <version>1.0.0-beta4</version> </dependency>

This time, a convolutional network based on the LeNet architecture was used instead of a multilayer perceptron; its parameters are shown in Listing 6.

protected static int height = 100; // image height in pixels

protected static int width = 100; // image width in pixels

protected static int channels = 1; // one channel: grayscale images

protected static int batchSize = 20;

protected static long seed = 42;

protected static Random rng = new Random(seed); // seeded for reproducible splits

protected static int epochs = 100;

protected static boolean save = true;

// DataSet: one sub-directory per label below the data location

String dataLocalPath = "your data location";

ParentPathLabelGenerator labelMaker = new ParentPathLabelGenerator(); // label = name of the parent directory

File mainPath = new File(dataLocalPath);

FileSplit fileSplit = new FileSplit(mainPath, NativeImageLoader.ALLOWED_FORMATS, rng);

int numExamples = toIntExact(fileSplit.length()); // static import of Math.toIntExact

numLabels = Objects.requireNonNull(fileSplit.getRootDir().listFiles(File::isDirectory)).length; // field, also used by lenetModel()

int maxPathsPerLabel = 18; // at most 18 images per label are sampled

BalancedPathFilter pathFilter = new BalancedPathFilter(rng, labelMaker, numExamples, numLabels, maxPathsPerLabel);

// split: 80 % training, 20 % test

double splitTrainTest = 0.8;

InputSplit[] inputSplit = fileSplit.sample(pathFilter, splitTrainTest, 1 - splitTrainTest);

InputSplit trainData = inputSplit[0];

InputSplit testData = inputSplit[1];

// create and initialize the network

MultiLayerNetwork network = lenetModel();

network.init();

ImageRecordReader trainRR = new ImageRecordReader(height, width, channels, labelMaker);

trainRR.initialize(trainData, null);

DataSetIterator trainIter = new RecordReaderDataSetIterator(trainRR, batchSize, 1, numLabels); // label is at index 1 of each record

// scale pixel values from 0..255 to 0..1

DataNormalization scaler = new ImagePreProcessingScaler(0, 1);

scaler.fit(trainIter);

trainIter.setPreProcessor(scaler);

network.fit(trainIter, epochs);
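With maxPathsPerLabel = 18, the BalancedPathFilter does not pass all 663 images on to training. As we understand DataVec's implementation (an assumption worth checking against the DataVec source), every label is cut to the same count, namely the smaller of maxPathsPerLabel and the size of the smallest class, so at most 6 · 18 = 108 images are sampled before the 80/20 split. The sketch below performs that arithmetic; the class name and the per-class sizes are hypothetical, because the article does not report the real class sizes.

```java
final class BalancedSplitEstimate {

    /** Images kept per label: the cap, or the size of the smallest class if that is lower (assumed rule). */
    static int perLabel(int[] classSizes, int maxPathsPerLabel) {
        int min = maxPathsPerLabel;
        for (int size : classSizes) {
            min = Math.min(min, size);
        }
        return min;
    }

    public static void main(String[] args) {
        int numLabels = 6;
        int maxPathsPerLabel = 18;
        // Hypothetical class sizes that sum to 663
        int[] classSizes = {120, 100, 104, 112, 108, 119};
        int total = perLabel(classSizes, maxPathsPerLabel) * numLabels;
        long train = Math.round(total * 0.8); // approximate; DataVec's own rounding may differ
        System.out.println(total + " images used, about " + train + " for training");
        // prints 108 images used, about 86 for training
    }
}
```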

LeNet Model

LeNet is a convolutional feed-forward neural network for image classification; the configuration used here is shown in Listing 8.

private MultiLayerNetwork lenetModel() {

/*

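* Revised LeNet model approach developed by ramgo2; achieves slightly above random

* Reference: https://gist.github.com/ramgo2/833f12e92359a2da9e5c2fb6333351c5

* convInit, maxPool and conv5x5 are helper methods (not shown here) that build the individual layers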

*/

MultiLayerConfiguration conf = new NeuralNetConfiguration.Builder()

.seed(seed)

.l2(0.005)

.activation(Activation.RELU)

.weightInit(WeightInit.XAVIER)

.updater(new AdaDelta())

.list()

.layer(0, convInit("cnn1", channels, 50, new int[]{5, 5}, new int[]{1, 1}, new int[]{0, 0}, 0))

.layer(1, maxPool("maxpool1", new int[]{2, 2}))

.layer(2, conv5x5("cnn2", 100, new int[]{5, 5}, new int[]{1, 1}, 0))

.layer(3, maxPool("maxpool2", new int[]{2, 2}))

.layer(4, new DenseLayer.Builder().nOut(500).build())

.layer(5, new OutputLayer.Builder(LossFunctions.LossFunction.NEGATIVELOGLIKELIHOOD)

.nOut(numLabels)

.activation(Activation.SOFTMAX)

.build())

.setInputType(InputType.convolutional(height, width, channels))

.build();

return new MultiLayerNetwork(conf);

}
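Because the convolution and pooling layers shrink the 100 x 100 input step by step, it helps to trace the shapes. The sketch below applies the standard output-size formula out = (in - k + 2p) / s + 1 with integer division (as in DL4J's default truncate mode) to the layers of Listing 8. The helpers convInit, maxPool and conv5x5 are not shown in the article, so a pooling stride of 2 and the reading of conv5x5's two arrays as stride {5, 5} and padding {1, 1} are assumptions modeled on the DL4J AnimalsClassification example that uses the same helper names. The class name ConvShapes is hypothetical.

```java
final class ConvShapes {

    /** Output size of a convolution or pooling layer along one axis (truncating integer division). */
    static int outSize(int in, int kernel, int stride, int pad) {
        return (in - kernel + 2 * pad) / stride + 1;
    }

    public static void main(String[] args) {
        int size = 100;                // input: 100 x 100, one channel
        size = outSize(size, 5, 1, 0); // layer 0, cnn1: 5x5 kernel, stride 1, no padding
        System.out.println("cnn1: " + size + " x " + size + " x 50");
        size = outSize(size, 2, 2, 0); // layer 1, maxpool1: 2x2 window, stride 2 (assumed)
        System.out.println("maxpool1: " + size + " x " + size + " x 50");
        // layer 2, cnn2: assumes a fixed 5x5 kernel with stride {5, 5} and padding {1, 1}
        size = outSize(size, 5, 5, 1);
        System.out.println("cnn2 (assumed): " + size + " x " + size + " x 100");
        size = outSize(size, 2, 2, 0); // layer 3, maxpool2: 2x2 window, stride 2 (assumed)
        System.out.println("maxpool2 (assumed): " + size + " x " + size + " x 100");
        System.out.println("inputs to the dense layer: " + size * size * 100);
    }
}
```

Under these assumptions the dense layer receives 5 · 5 · 100 = 2,500 values; if the helpers are defined differently, the same formula still applies with the actual kernel, stride and padding.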

Example

A test of the LeNet model might look like Listing 9.

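// evaluate on the held-out test split instead of on training data

ImageRecordReader testRR = new ImageRecordReader(height, width, channels, labelMaker);

testRR.initialize(testData, null);

DataSetIterator testIter = new RecordReaderDataSetIterator(testRR, batchSize, 1, numLabels);

testIter.setPreProcessor(scaler);

DataSet testDataSet = testIter.next(); // first batch of up to batchSize images

List<String> allClassLabels = trainRR.getLabels();

INDArray features = testDataSet.getFeatures();

int[] predictedClasses = network.predict(features); // one predicted class index per example

INDArray labelIndices = testDataSet.getLabels().argMax(1); // true class index per example

int batchExamples = testDataSet.numExamples();

System.out.println("examples in batch = " + batchExamples);

for (int i = 0; i < batchExamples; i++) {

String expectedResult = allClassLabels.get(labelIndices.getInt(i));

String modelPrediction = allClassLabels.get(predictedClasses[i]);

System.out.println("For a single example that is labeled " + expectedResult + " the model predicted " + modelPrediction);

}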
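The article does not say how the per-class figures in Table 1 were computed. One plausible approach, sketched here as an assumption, is to collect the expected and predicted labels printed by the loop and count, for each true label, the share of correct predictions. The class PerClassRate and its method are hypothetical.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class PerClassRate {

    /** Percentage of examples of each true label that were predicted correctly. */
    static Map<String, Double> recognitionRates(List<String> expected, List<String> predicted) {
        if (expected.size() != predicted.size()) {
            throw new IllegalArgumentException("Lists must have the same length");
        }
        Map<String, int[]> counts = new LinkedHashMap<>(); // label -> {correct, total}
        for (int i = 0; i < expected.size(); i++) {
            int[] c = counts.computeIfAbsent(expected.get(i), k -> new int[2]);
            if (expected.get(i).equals(predicted.get(i))) {
                c[0]++;
            }
            c[1]++;
        }
        Map<String, Double> rates = new LinkedHashMap<>();
        for (Map.Entry<String, int[]> e : counts.entrySet()) {
            rates.put(e.getKey(), 100.0 * e.getValue()[0] / e.getValue()[1]);
        }
        return rates;
    }

    public static void main(String[] args) {
        // Hypothetical expected/predicted pairs, e.g. collected from the loop in Listing 9
        List<String> expected = Arrays.asList("ly", "ly", "seg", "seg");
        List<String> predicted = Arrays.asList("ly", "mo", "seg", "seg");
        System.out.println(recognitionRates(expected, predicted)); // prints {ly=50.0, seg=100.0}
    }
}
```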

Results

After several test runs, the results shown in Table 1 were obtained.

| Cell type (label) | Neuroph | DL4J |
|---|---|---|
| Lymphocytes (ly) | 87 | 85 |
| Basophils (bg) | 96 | 63 |
| Eosinophils (eog) | 93 | 54 |
| Monocytes (mo) | 86 | 60 |
| Band (rod-nucleated) neutrophils (sng) | 71 | 46 |
| Segmented neutrophils (seg) | 92 | 81 |

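As Table 1 shows, Neuroph achieved higher values than DL4J for every cell type. The gap is small for lymphocytes (87 vs. 85) and moderate for segmented neutrophils (92 vs. 81), but large for basophils, eosinophils, monocytes, and band neutrophils (25 to 39 points). It is important to note, however, that the results depend on the quality of the input data and on the network topology, and the two networks differ in both: an MLP on 10 × 10 samples in RGB mode versus a LeNet-style CNN on 100 × 100 grayscale images. We plan to investigate this issue further in the near future.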
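A single summary number per framework makes the gap in Table 1 easier to see. The sketch computes the unweighted mean of the six per-class values, assuming (as the text implies) that the first numeric column is Neuroph and the second DL4J. Because the class sizes are not reported, a weighted mean cannot be derived; the class name MeanRate is hypothetical.

```java
import java.util.Locale;

final class MeanRate {

    /** Unweighted mean of per-class values; ignores how many images each class contains. */
    static double mean(int[] values) {
        double sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum / values.length;
    }

    public static void main(String[] args) {
        // Values from Table 1 in row order: ly, bg, eog, mo, sng, seg
        int[] neuroph = {87, 96, 93, 86, 71, 92};
        int[] dl4j = {85, 63, 54, 60, 46, 81};
        System.out.printf(Locale.ROOT, "Neuroph: %.1f, DL4J: %.1f%n", mean(neuroph), mean(dl4j));
        // prints Neuroph: 87.5, DL4J: 64.8
    }
}
```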

Even so, with these results we have already been able to show that image recognition can be used for a medical task such as white blood cell classification with not one but two sound and potentially complementary Java frameworks.

Acknowledgments

At this point, we would like to thank Mr. A. Klinger (Management Devoteam GmbH Germany) and Ms. M. Steinhauer (Bioinformatician) for their support.