devmio: Thanks for the interview! Let me just get right into it and ask about the Stable Diffusion model and other diffusion models: How do they work?

Pieter Buteneers: I propose we stay high level, because nitty-gritty details will take us too far. These diffusion models combine language models with image models. The language models try to represent the meaning of a text in a specific way. You basically translate human language into something that holds meaning for a machine. First, researchers and developers make language models based on available texts on the internet.

Next, you do the same for images. You take an image and try to create a representation of it that holds meaning to a machine. Then, you scrape images that come with short summaries of what’s in the image. For example, you can take a specific Van Gogh painting name or something like “self-portrait by Van Gogh”. Pictures like these come with a description, similar to stock photos captioned “woman talking on the phone with an angry expression”.

Images created by DreamStudio.ai with the prompt: “Woman talking on the phone with an angry expression.”

Once you have the text representation and image representation linked up, you can simulate one or the other to get a result.
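To make the idea of "linked" representations a little more concrete, here is a minimal sketch. It assumes that text and images have already been turned into vectors in a shared space; the three-number vectors and file names below are invented for illustration and do not come from any real model. Cosine similarity is one common way to measure how well two such vectors match.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical representation of the prompt
# "woman talking on the phone with an angry expression".
text_vector = [0.9, 0.1, 0.3]

# Hypothetical representations of two images in the same vector space.
image_vectors = {
    "angry_phone_call.png": [0.8, 0.2, 0.4],
    "sunflowers.png": [0.1, 0.9, 0.2],
}

# Pick the image whose representation lies closest to the text representation.
best_match = max(
    image_vectors,
    key=lambda name: cosine_similarity(text_vector, image_vectors[name]),
)
print(best_match)
```

Real models use vectors with hundreds of dimensions that are learned from data, but the matching step works on the same principle.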

Stable Diffusion is the most interesting model right now because it’s fully open source. Everybody can play with it. You start off with a blank image, then you just put in a bit of text and it generates a representation of what the text means.

devmio: You mentioned a blank image. What is the starting point for image generation?

Pieter Buteneers: Actually, what you are doing is creating a random image from noise. The type of noise is rather irrelevant. Some noise works better than others, so that’s why Gaussian noise is used more often, but in practice it doesn’t really matter.

The random image you created is just speckles of different colors. You start changing the image content to come closer to the meaning of the sentence you entered. Since you randomly initialized it, the result can be different every time, just as there can be many stock images of a woman on the phone talking angrily. Any random initialization is going to generate a different image. The AI is just trying to change the random noise you start with and match it closely with an image that fits the representation in the least number of steps.
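The loop described here can be sketched as a toy. This is not how Stable Diffusion is implemented: a real model uses a trained neural network to decide how to change the image at each step, whereas this sketch simply assumes the desired result (`target`) is known and nudges the noise toward it. The function names, the four-pixel "image", and the `rate` parameter are all hypothetical.

```python
import random

def denoise_from_noise(target, steps=10, rate=0.3, seed=0):
    """Start from Gaussian noise and move each pixel a fraction closer to target per step."""
    rng = random.Random(seed)
    # Random initialization: every pixel is drawn from a Gaussian distribution.
    image = [rng.gauss(0.0, 1.0) for _ in target]
    for _ in range(steps):
        # Toy "denoising" step: close 30% of the remaining gap to the target.
        image = [pixel + rate * (goal - pixel) for pixel, goal in zip(image, target)]
    return image

def distance(a, b):
    """Euclidean distance between two images of the same size."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5

# Stand-in for "an image that fits the representation of the prompt".
target = [0.2, 0.8, 0.5, 0.1]

start = denoise_from_noise(target, steps=0, seed=1)   # pure noise
end_a = denoise_from_noise(target, steps=10, seed=1)  # same noise after 10 steps
end_b = denoise_from_noise(target, steps=10, seed=2)  # a different random start

print(distance(start, target), distance(end_a, target))
print(end_a == end_b)
```

Each step brings the image closer to the target, and two different seeds end up with slightly different results, which mirrors the point that every random initialization produces a different image.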

devmio: Is the image always unique? Does the AI try to find an existing picture or anything in the training data matching the description?

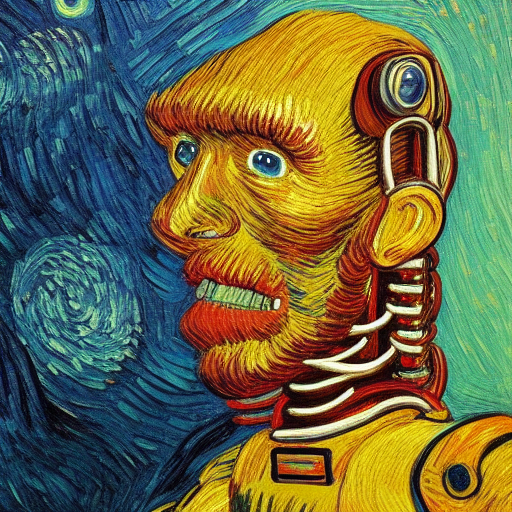

Pieter Buteneers: Even though these models are huge, at this point, they cannot store all of the images they were trained on. The AI only stores characteristics of these images, for example, Van Gogh’s style. The same is true for other styles and artists, like Monet or Picasso. It has some “understanding”, not in the human sense of the word, but it has a representation of what it means to be a Van Gogh or a Picasso painting.

Image created by DreamStudio.ai with the prompt: “A painting of a robot in the style of Van Gogh.”

devmio: What does this representation look like?

Pieter Buteneers: It looks like ones and zeros, the language your computer understands. Typically, it’s like a vector, which is just a list of numbers. This representation doesn’t really have any meaning to us. Those representations are different for different kinds of models. If you train the algorithm again on a slightly different data set, the representation will be different, since these algorithms also start from a random initialization.

Our representation of a picture is natural language: “a woman on the phone with an angry expression”. For us, that sentence means something. But just like with different natural languages, the representation in ones and zeros only makes sense to this one algorithm. That’s how it was trained and so, it created a representation that made sense to it. It didn’t make a representation by speaking to other algorithms. It’s unique to this model.

The importance of language models

devmio: Natural language inputs seem to be very difficult for an AI to understand. In an article you called it one of the next frontiers in AI. Could you elaborate on that?

Pieter Buteneers: Image recognition is something we’ve been very good at for many years. If you post a photo on Facebook and friends tag you in other photos, it will recognize you. It works much better than what you see the FBI use in movies. It’s insanely good and has been since 2016.

What’s happening in recent years is that we’re doing the same steps for language understanding, and in some cases, even language generation. These language models become much better at getting a good representation of what text means.

There have been two different approaches. There’s the approach that started with the BERT model. There were other models before this, but BERT was a big breakthrough where developers trained the model to fill in missing pieces of text. If you want to fill in missing pieces of text, you need to really understand what the text is about, or you’ll end up simply putting in random characters on a page like a monkey. That’s one stretch of models.
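The fill-in-the-blank setup can be illustrated with a small sketch of how a training example might be prepared. It only shows the data preparation, not the model, and it is a simplification: the helper name `mask_words`, the `[MASK]` placeholder, and the masking rate are assumptions chosen for illustration, and real systems work on sub-word tokens rather than whole words.

```python
import random

def mask_words(sentence, mask_rate=0.3, seed=0, mask_token="[MASK]"):
    """Hide a random subset of words; return the masked text and the hidden words by position."""
    rng = random.Random(seed)
    masked, answers = [], {}
    for position, word in enumerate(sentence.split()):
        if rng.random() < mask_rate:
            masked.append(mask_token)
            answers[position] = word  # what the model would be asked to recover
        else:
            masked.append(word)
    return " ".join(masked), answers

sentence = "a woman talking on the phone with an angry expression"
masked_text, answers = mask_words(sentence, mask_rate=0.3, seed=0)
print(masked_text)
print(answers)
```

During training, the model sees only the masked text and is scored on how well it recovers the hidden words, which is what pushes it to learn what the surrounding text is about.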

The other approach is actually very similar, but instead of filling in missing pieces of text, you let it produce the next character or the next word in a sentence. This second model lets you generate and produce text. The first model can also be used to generate text, but it’s not really designed for it and doesn’t work as easily.
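As a rough illustration of next-word prediction, the sketch below counts which word follows which in a tiny made-up corpus and then generates text by repeatedly picking the most frequent follower. This is a deliberately simple stand-in: modern language models use large neural networks rather than word-pair counts, and the corpus and function names here are invented for the example.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for every word, how often each other word follows it."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def generate(model, start, length=5):
    """Extend `start` by repeatedly appending the most frequent next word."""
    words = [start]
    for _ in range(length):
        followers = model.get(words[-1])
        if not followers:
            break  # no known continuation for this word
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

corpus = "the woman talks on the phone and the woman looks angry"
model = train_bigram_model(corpus)
print(generate(model, "the", length=3))
```

The generation loop is the part that carries over to real models: predict one next word, append it, and repeat.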

What you see happening in this area is our models are coming closer to human performance for specific tasks. There are more and more tasks that these language models can handle at the human performance level, or even exceed it. However, most of these tasks are fairly academic. The models don’t perform as well in other tasks, but we see steady progress.

devmio: Natural language input is so complicated because it’s more than just representation. It’s dependent on context and it’s meaningful. Is that why it’s hard to grasp for AI and computers?

Pieter Buteneers: Yes, you need a lot of context in order to understand what’s happening in a text. That’s why we only recently started seeing these breakthroughs, because now we have enough compute power for training. Some of these models can cost millions, so training them is also extremely expensive.

These models are now possible because, at least on supercomputer scale, we’ve reached a point where we can train with the massive amounts of available text on the internet. The only thing you need to do is say, “OK, here’s a bit of text. I removed some words, try to figure out what those words are”. In doing so, you force the algorithms to figure out what that bit of text is about. These models start to understand how human language is structured.

You also have language models that you can train to fill out missing pieces of a text in hundreds of languages. It can do this almost as well as if you trained it on English-language text only. Since the AI can understand a few different languages, additional languages become very easy for it to understand.

It even works for what we call low-resource languages. Low-resource languages are languages where there isn’t much text available on the Internet. Even here, we receive very similar performance, close to the level of performance for English.

These models are capable of making an abstraction of human language. They are very good at picking up language without you having to tell them: “This is what grammar looks like”. They become a universal language approximator and can understand human language because of its own structure. It doesn’t matter which language it is; the model doesn’t care whether it’s English, German, Arabic, or Mandarin.

devmio: So, the AI understands the underlying principle of language itself, not necessarily specific languages?

Pieter Buteneers: Yes, indeed. Language researchers have tried to come up with universal grammar, but they haven’t found it yet. There are always exceptions and there’s always something missing. But it seems these AI models have been able to pick it up.

Major breakthroughs in the last few years

devmio: You said one big thing in AI development currently is computing power. Is that why image generation AIs are popping up now? Or was there any recent technological advancement?

Pieter Buteneers: The largest breakthroughs come from these language models. Without these language models, you wouldn’t have a proper representation of what something means. Since these language models have become so much better, you can start giving the AI commands to create a representation and start matching an image representation to it.

These language models go back many, many years. But it’s only recently that we’ve figured out how to implement them on a GPU in a computing-friendly way. The major breakthrough that BERT brought was to transform these old models, which work well on a CPU, into a new architecture that computes fast on a GPU.

This is how we’ve been able to make these massive jumps forward. Since 2018, these models have become bigger and more intelligent over time, so you can do more interesting things with them.

devmio: Are the current developments that impressive, or are they simply what was expected?

Pieter Buteneers: It’s a massive leap forward compared to where we were four years ago. Back then, a task might give us 10% or 20% accuracy compared to human performance. Where we are now is at or above human performance.

The same happened around 2013 and 2014 with image recognition. Before then, these AI algorithms improved by a few percent in accuracy per year. Then, someone figured out how to run things on a GPU and we made a jump of 10 to 15% a year, which got the ball rolling.

What we had with language, now we have with images. At the time, image recognition models were based on our understanding of how our visual cortex and eyes process images. We made assumptions and turned them into a neural network. I think the first design of that happened around 1999, which is still the Stone Age in terms of AI. This structure was then optimized to run on a GPU, and suddenly there was a massive breakthrough.

devmio: What’s next? What are the biggest hurdles to overcome and what are you most excited about in AI development?

Pieter Buteneers: As far as hurdles go, let me give a little bit of context first. It might be interesting to know that the amount of language that a language model has to process is several orders of magnitude, maybe 10,000 to 100,000 times larger, than the language input a child needs to pick up a language.

There are two reasons for this. One is that these models are still very inefficient at learning. The other thing is much harder to overcome. Our brain is hardwired to a form of universal grammar. It is preprogrammed in our brains, and we’re pre-wired to grasp the concept of grammar. That pre-wiring is very hard to recreate and requires a lot of engineering. We’ve been working on it for years and it seems almost impossible.

Coming back to the idea of AI being inefficient at learning, if AI wants to cross the bridge further, being able to learn faster with less data would be a massive breakthrough. That’s where GPT3 has been the most impressive to me. You can simply show it a few examples, such as “translate this word to French”, and fill in the next word. The AI figures out “I need to translate this” and will translate it correctly.
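The "show it a few examples" approach can be sketched as plain prompt construction. The code below only builds the text that would be handed to a language model; it does not call any model or API. The prompt layout, the function name, and the example word pairs are assumptions made for illustration.

```python
def build_few_shot_prompt(examples, query, instruction="Translate English to French."):
    """Build a prompt that shows a few solved examples and leaves the last answer open."""
    blocks = [instruction]
    for english, french in examples:
        blocks.append(f"English: {english}\nFrench: {french}")
    # The final block has no answer, so the model's "next words" are the translation.
    blocks.append(f"English: {query}\nFrench:")
    return "\n\n".join(blocks)

examples = [("sea otter", "loutre de mer"), ("cheese", "fromage")]
prompt = build_few_shot_prompt(examples, "bread")
print(prompt)
```

Because the model is trained to continue text, a prompt shaped like this makes the translation the most natural continuation, with no task-specific retraining involved.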

These models get better and better at understanding what a sentence means and continuing what you’ve asked them. Sometimes you need examples, but the number of examples needed for good results has become much, much smaller. We’ve gotten closer to embedding human common sense into these models.

There’s another problem with these models. For example, they can be misogynistic. Even if there is only a small hint of misogyny in every other sentence these models use, it becomes a standard pattern. These models have this issue because it’s simply a pattern they detect and recreate. Overcoming these kinds of simple patterns in language is another hurdle.

I’m excited for these models to get closer towards grasping a basic form of human common sense. Does that mean artificial intelligence will think about a topic on its own? That’s definitely not the case. The algorithms are good at reproducing what they’ve read in a similar context, but they aren’t as good outside this context or when trying to assemble new insights. It will take a bit longer before we get there, but we are going to get incredibly close to human common sense with these language models. So much of how we reason as humans is embedded into language and therefore, into the training data.

On language, reason and art

devmio: Language is one of the mediums that humans reason in. Almost everything that has to do with reason and our human understanding of the world happens with language, in some way. Are the language models actually getting closer to human reasoning?

Pieter Buteneers: I’m not convinced it’s entirely language that’s the medium of reasoning. I think the voice we hear inside our head is also an emergent phenomenon of other reasoning happening below the line. But the nice thing about language is it allows us to express our reasoning, which many people do.

Even in Reddit posts, which are used to train these language models, there’s a kind of reasoning with language that we can rebuild, since it’s written down and accessible.

It will bring us scarily close to human intelligence, but there are still gaps in this solution. I don’t think these gaps are necessarily super complex, and I won’t argue that human intelligence is massively better than that of a monkey or a mouse. It is higher, but the difference is smaller than you would think.

We have a pre-programmed, hardwired brain for processing language. That makes it really easy for us to pick up a new language, especially when we’re born with a blank slate.

The same is true for image and face recognition. Human brains — mine is a clear exception, because I’m awful at recognizing faces — are pre-wired to recognize faces and are very good at it. Most people can look at a crowd of over 100 people and pick out who they know with just a glance. Parts of our brain may be pre-wired for certain forms of reasoning that are hard to learn, and we haven’t figured out a way to get training for it yet.

devmio: Let’s return to the topic of image generating AI. When they become more publicly available, will there be a shift in art and culture, how we perceive art, what constitutes art, and so on? Does this concern you? Do you think this is a valid concern?

Pieter Buteneers: Well, from a personal perspective, I like to go to museums and I appreciate art. But give me a pen or pencil and let me draw something and my 7-year-old son gets better results than I do. I’m not good at drawing, I’m not good at creating art. But tools like Photoshop allow me, as someone who isn’t very handy, to still build something I can visually appreciate. From my personal perspective, this technology will allow us to democratize art even more. It will let more and more people generate aesthetically pleasing images.

Does that mean that groundbreaking artists’ controversial, outspoken art is going away? Definitely not. New artists will use these tools to create nice images, but there will always be artists who really push the boundaries and do something special. It’s like what the Impressionists did. Instead of being photorealistic, they painted seemingly random strokes on a canvas that were just dots or blurs. But if you take a step back, it becomes an image and comes to life. They figured out a new way of expressing art. Later, people began generating aesthetically pleasing things by basically throwing paint on a canvas. Think about Jackson Pollock.

But these tools, and that’s how I see DALL-E or Stable Diffusion, are just tools that let you create art. There will be a massive shift because now it’s much easier to generate a stock image, so I don’t have to buy it. For art itself, it won’t change much but it democratizes it, so more people will be able to do it.