Two positions dominate the AI discourse. The first: “We’ll worry about that later, when the time comes.” The second: “This is a problem for nerds who have no ethical values anyway.” Both positions are misguided: the problem has existed for a long time, and there are certainly ways of setting boundaries for AI. What is lacking is a consensus on what those boundaries should be.

AI Alignment [1] is concerned with aligning AI to desired goals. The first challenge here is to agree on these goals in the first place. The next difficulty is that it is not (yet?) possible to give these goals directly and explicitly to an AI system.

For example, several years ago Amazon developed a system to help select suitable applicants for open positions ([2], [3]). To train it, resumes of accepted and rejected applicants were used. Although the resumes contained no explicit information about gender, male applicants were systematically preferred. We will discuss how this came about in more detail later. But first, this raises several questions: Is this desirable, or at least acceptable? And if not, how do you align the AI system so that it behaves as you want it to? In other words, how do you successfully engage in AI alignment?

For some people, AI Alignment is an issue that will only become important in the future, when machines are so intelligent and powerful that they might conclude the world would be better off without humans [4]. Nuclear war provoked by supervillains is mentioned as another way in which AI could prove fatal. Whether these fears could ever become realistic remains speculation.

The concerns addressed by the EU’s emerging AI regulation are more realistic. Depending on the risk an AI system realistically poses, different rules may apply. This is shown in Figure 1, which is based on a presentation for the EU [5]. Four levels are distinguished, ranging from “no risk” to “unacceptable risk”. A system with no significant risk is only subject to a recommended “Code of Conduct”, while a social credit system, as applied in China [6], is simply not allowed. However, this scheme only comes into effect if there is no specific law.

Fig. 1: Regulation based on the risk a system poses, adapted from [5]

Alignment in Machine Learning Systems

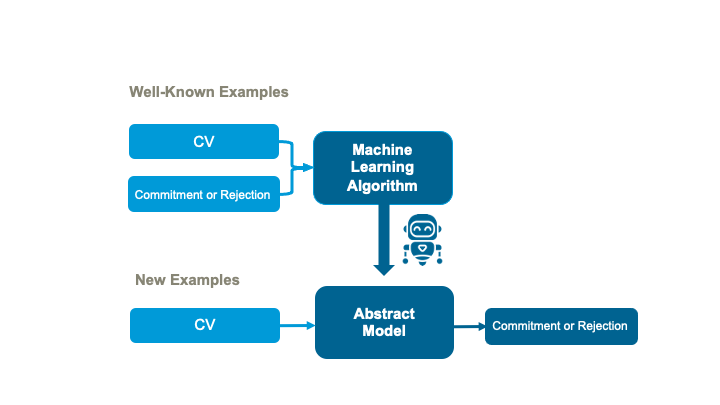

A machine learning system is trained using sample data. It learns to mimic this sample data. In the best and most desirable case, the system can generalize beyond this sample data and recognize an abstract pattern behind it. If this succeeds, the system can also react meaningfully to data that it has never seen before. Only then can we speak of learning or even a kind of understanding that goes beyond memorization.

This also happened in the example of Amazon’s applicant selection, as shown in a simplified form in Figure 2.

Fig. 2: How to learn from examples, also known as supervised learning

Here is another example. We use images of dogs and cats as sample data for a system, training it to distinguish between them. In the best case, after training, the system also recognizes cats that are not contained in the training data set. It has learned an abstract pattern of cats, which is still based on the given training data, however.

Therefore, this system can only reproduce what already exists. It is descriptive or representative, but hardly normative. In the Amazon example, it replicates past decisions. In those decisions, men apparently had a better chance of being accepted. If so, the abstract model would at least be accurate. Alternatively, perhaps there were simply more examples of male applicants, or some other unfortunate circumstance caused the abstract model not to be a good generalization of the example data.

At its best, however, such an approach is analytical in nature. It reveals the patterns in our sample data and their background, in this case that men fared better in job applications. If that matches our desired alignment, there is no further problem. But what if it doesn’t? That is what we are assuming, and Amazon was of the same opinion, since it scrapped the system.
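How a system can replicate a preference without ever seeing gender can be illustrated with a small sketch. Everything in it is invented for illustration: the records, the proxy feature (whether a resume mentions a women’s club), and the frequency-based “model” are assumptions and not Amazon’s data or method.

```python
from collections import defaultdict

# Synthetic, invented history: (resume mentions a women's club, accepted in the past).
# Gender itself is NOT a feature; the club mention merely correlates with it.
history = (
    [(False, True)] * 60 + [(False, False)] * 40   # 60 % accepted
    + [(True, True)] * 6 + [(True, False)] * 14    # 30 % accepted
)


def train(records):
    """Learn the historical acceptance rate for each feature value."""
    counts = defaultdict(lambda: [0, 0])  # feature value -> [accepted, total]
    for feature, accepted in records:
        counts[feature][0] += int(accepted)
        counts[feature][1] += 1
    return {feature: acc / total for feature, (acc, total) in counts.items()}


model = train(history)
for feature, score in sorted(model.items()):
    print(f"mentions women's club = {feature}: predicted acceptance {score:.2f}")
```

The model is purely descriptive: it scores resumes with the proxy feature lower because that is what the past decisions looked like.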

Prior assumptions, aka priors

It has long been understood how to give a machine learning system information about our desired alignment in addition to the sample data. This is used to provide world or domain knowledge to the system in order to guide and potentially simplify or accelerate training. You support the learning process by specifying in which domain to look for abstract patterns in the data. As a result, a good abstract pattern can be learned even if the sample data describes it inadequately. In machine learning, data that inadequately describes the desired abstract model is the rule rather than the exception. Yann LeCun, a celebrity on the scene, vividly elaborates on this in a Twitter thread [7].

This kind of prior assumption is also called a prior. An illustrative example of a prior is linearity. To explain it, let’s take another application example. For car insurance, estimating accident risk is crucial. For this estimate, characteristics of the drivers and vehicles to be insured are collected. A machine learning model then relates these characteristics to existing data on accident frequency. The method used for this is called supervised learning, and it is the same as described above.

For this purpose, let us assume that the accident frequency increases linearly with the distance driven. The more one drives, the more accidents occur. This domain knowledge can be incorporated into the training process. This way, you can hope for a simpler model and potentially even less complex training. In the simplest case, linear regression [8] can be used here, which produces a reasonable model even with little training data or effort. Essentially, training consists of choosing the two parameters of a straight line, slope and intercept, so that the line best fits the training data. Because of its simplicity, this model has the advantages of good explainability and low resource requirements: a linear relationship is intuitively easy to grasp, and a straight-line equation can be calculated on a modern computer with extremely little effort.
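As a minimal sketch of this training step, the following fits slope and intercept with ordinary least squares in plain Python. The data points are made up for illustration, and the function names `fit_line` and `predict` are assumptions of this example.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Synthetic data: distance driven (1,000 km per year) and accidents per year.
distance = [5, 10, 15, 20, 25, 30]
accidents = [0.12, 0.19, 0.31, 0.42, 0.48, 0.61]

slope, intercept = fit_line(distance, accidents)


def predict(km_thousands):
    """Predicted accident frequency for a given yearly distance."""
    return slope * km_thousands + intercept


print(f"slope={slope:.4f}, intercept={intercept:.4f}")
print(f"predicted accidents per year at 40,000 km: {predict(40):.3f}")
```

The whole model consists of two numbers, which is what makes it cheap to compute and easy to explain.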

However, it is also possible to take the pattern contained in the training data and correct it normatively. For this, let us assume that the relationship between age and driving ability is clearly superlinear. Driving ability does not decline in proportion to age, but at a much faster rate. Or, to put it another way, the risk of accidents increases disproportionately with age. That’s how it is in the world, and that’s what the data reflects. Let’s assume that we don’t want to give up this important influence completely. However, we equally want to avoid excessive age discrimination. Therefore, we decide to allow a linear dependence at most. In this way, we can support the model and align it with our needs. This relationship is illustrated in Figure 3. The simplest way to implement this is the aforementioned linear regression.
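The effect of this normative correction can be sketched as follows. The observations are synthetic and chosen to grow faster than linearly with age; forcing a straight line through them is the assumed alignment step, and the names `fit_line` and `aligned_risk` are hypothetical.

```python
# Synthetic observations: accident risk grows faster than linearly with age.
ages = [30, 40, 50, 60, 70, 80]
observed_risk = [0.10, 0.12, 0.18, 0.28, 0.42, 0.60]


def fit_line(xs, ys):
    """Least-squares straight line; the linearity is our normative prior."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


slope, intercept = fit_line(ages, observed_risk)


def aligned_risk(age):
    """Risk estimate of the aligned (linear) model."""
    return slope * age + intercept


# Increase in risk over ten years, for young and for old drivers.
observed_step_young = observed_risk[1] - observed_risk[0]  # age 30 -> 40
observed_step_old = observed_risk[5] - observed_risk[4]    # age 70 -> 80
aligned_step_young = aligned_risk(40) - aligned_risk(30)
aligned_step_old = aligned_risk(80) - aligned_risk(70)

print("observed:", round(observed_step_young, 2), "vs", round(observed_step_old, 2))
print("aligned: ", round(aligned_step_young, 2), "vs", round(aligned_step_old, 2))
```

In the data, ten additional years weigh far more heavily for older drivers than for younger ones. The linear model still lets age matter, but charges every decade the same increase.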

Fig. 3: Normative alignment of training outcomes

Now, you could also argue that models usually have not just one input, but many, which act in combination on the prediction. Moreover, in our example, the linear relationship between distance driven and accident frequency is not necessarily plausible at first glance. Don’t drivers with little driving experience have a higher risk? In that case, you could imagine a piecewise linear relationship. In the beginning, the risk decreases with the distance driven, but after a certain point it increases again and remains linear. There are also tools for these kinds of complex relationships. In the deep learning field, TensorFlow Lattice [9] offers the possibility of specifying a separate set of constraints for each individual influencing factor. This is also possible in a nonlinear or only piecewise linear way.
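What such a piecewise linear relationship looks like can be sketched in plain Python. This does not use the TensorFlow Lattice API; the keypoints and the function name `piecewise_linear` are assumptions chosen only to mirror the shape described above.

```python
from bisect import bisect_right

# Hypothetical keypoints: distance driven (1,000 km per year) -> relative risk.
# Risk falls with growing experience up to 10,000 km, then rises linearly.
KEY_DISTANCE = [0, 10, 40]
KEY_RISK = [0.30, 0.10, 0.40]


def piecewise_linear(x, xs=KEY_DISTANCE, ys=KEY_RISK):
    """Interpolate linearly between keypoints; continue the last segment beyond them."""
    if x <= xs[0]:
        return ys[0]
    # Index of the segment containing x, capped at the last segment.
    i = min(bisect_right(xs, x) - 1, len(xs) - 2)
    t = (x - xs[i]) / (xs[i + 1] - xs[i])
    return ys[i] + t * (ys[i + 1] - ys[i])


for km in (5, 10, 25, 50):
    print(f"{km:>2},000 km -> risk {piecewise_linear(km):.2f}")
```

In a trainable model, the risk values at the keypoints would be learned from data, while the shape itself (first decreasing, then increasing linearly) is the prior we impose.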

In addition to these relatively simple methods, there are other ways to exert influence. These include the choice of learning algorithm, the selection of sample data, and, especially in deep learning, the neural network’s architecture and learning approach. These interventions in the training process are technically challenging and must be applied sparingly and under supervision. Otherwise, depending on the training data, it may become impossible to train a good model with the desired priors.

Is all this not enough? Causal Inference

The field of classical machine learning is often accused of falling short. Critics say that these techniques are suitable for fitting straight lines and curves to sample data, but not for producing intelligent systems that behave as we want them to. In a Twitter thread by Pedro Domingos [10], typical representatives of a more radical course, such as Gary Marcus and Judea Pearl, also weigh in. They agree that without modeling causality (Causal Inference), there will be no truly intelligent system and no AI Alignment.

In general, this movement can be accused of criticizing existing approaches without having any working systems of its own to show. Nevertheless, Causal Inference has been a hyped topic for a while now, and you should at least be aware of this critical position.

ChatGPT, or why 2023 is a special year for AI and AI Alignment

Regardless of whether someone welcomes current developments in AI or is more fearful or dismissive of them, one thing seems certain: 2023 will be a special year in the history of AI. For the first time, an AI-based system, ChatGPT [11], managed to create a veritable boom of enthusiasm among a broad section of the population. ChatGPT is a kind of chatbot with which you can converse about any topic, and not just in English. There are further articles for a general introduction to ChatGPT.

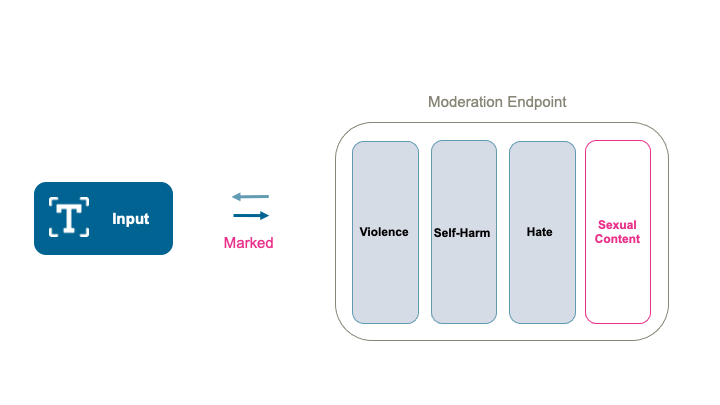

ChatGPT is simply the most prominent example of a variety of systems already in use in many places. They all share the same challenge: how do we ensure that the system does not issue inappropriate responses? One obvious approach is to check each response from the system for appropriateness. To do this, we can train a second system using sample data. This data consists of pairs of texts and a categorization of whether they match our alignment or not. How this kind of system operates is shown in Figure 4. OpenAI, the producer of ChatGPT, offers this functionality already trained and directly usable as an API [12].

This approach can be applied to any AI setting. The system’s output is not directly returned, but first checked for your desired alignment. When in doubt, a new output can be generated by the same system, another system can be consulted, or the output can be denied completely. ChatGPT is a system that works with probabilities and is able to give any number of different answers to the same input. Most AI systems cannot do this and must choose one of the other options.
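The control flow of such a check can be sketched as follows. The keyword blocklist is only a stand-in for a trained moderation classifier, and all names (`is_acceptable`, `moderated_reply`, `make_toy_generator`) are hypothetical; no real moderation API is called here.

```python
# Stand-in for a trained moderation model: a real system would classify the
# text with a learned model instead of looking up keywords.
BLOCKLIST = {"insult", "threat"}


def is_acceptable(text):
    """Return True if the text matches our desired alignment."""
    return not (set(text.lower().split()) & BLOCKLIST)


def moderated_reply(generate, prompt, max_attempts=3,
                    fallback="Sorry, I cannot answer that."):
    """Ask the generator again until an output passes the check, else deny."""
    for _ in range(max_attempts):
        candidate = generate(prompt)
        if is_acceptable(candidate):
            return candidate
    return fallback


def make_toy_generator(answers):
    """Toy generator that returns the given answers one after another."""
    remaining = iter(answers)
    return lambda prompt: next(remaining)


generate = make_toy_generator(["this is a threat", "a friendly answer"])
print(moderated_reply(generate, "Hello"))
```

A system that always produces the same output for the same input gains nothing from retrying; for it, only the fallback branch or a second system remains.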

As mentioned at the beginning, we as a society still need to clarify which systems we consider risky. Where do we want to demand transparency or even regulation? Technically, transparency is already possible for a system like ChatGPT by inserting a kind of watermark [13] into generated text. This works by having the system select words from a restricted list; the probability that a human would produce this specific combination of words is extremely low. This can be used to establish the machine as the author. Additionally, the risk of plagiarism is greatly reduced because the machine, imperceptibly to us, does not write exactly like a human. In fact, OpenAI is considering using these watermarks in ChatGPT [14]. There are other methods that work without watermarks to find out whether a text comes from a particular language model [15]. These only require access to the model under suspicion. The obvious weakness is that you have to know or guess which model is under suspicion.
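The watermark idea can be sketched in a few lines. This is a simplified illustration in the spirit of [13], not the implementation from that paper or from any product: the hash-based “green list”, the share `GAMMA`, the toy vocabulary, and all function names are assumptions made for this example.

```python
import hashlib
import math
import random

GAMMA = 0.5  # assumed share of the vocabulary that is "green" at each position
VOCAB = [f"w{i}" for i in range(100)]  # toy vocabulary, purely illustrative


def is_green(prev_token, token):
    """Pseudo-randomly put `token` on the green list, seeded by the previous token."""
    digest = hashlib.sha256(f"{prev_token}|{token}".encode("utf-8")).digest()
    return digest[0] / 256 < GAMMA


def generate_watermarked(length, seed=0):
    """Toy 'language model': random words, but only from the current green list."""
    rng = random.Random(seed)
    tokens = ["<start>"]
    for _ in range(length):
        green = [w for w in VOCAB if is_green(tokens[-1], w)]
        tokens.append(rng.choice(green or VOCAB))  # fall back if no word is green
    return tokens


def watermark_z_score(tokens):
    """Standard deviations by which the green-token count exceeds chance."""
    n = len(tokens) - 1  # number of (previous token, token) pairs
    hits = sum(is_green(prev, tok) for prev, tok in zip(tokens, tokens[1:]))
    return (hits - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))


rng = random.Random(1)
unmarked = ["<start>"] + [rng.choice(VOCAB) for _ in range(50)]
print("watermarked z-score:", round(watermark_z_score(generate_watermarked(50)), 2))
print("unmarked z-score:   ", round(watermark_z_score(unmarked), 2))
```

A writer who does not know the green list hits it only by chance, so the score stays near zero on average. If every token is green and `GAMMA` is 0.5, the score equals the square root of the number of tokens, which quickly becomes implausible for a human author.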

Fig. 4: A moderation system filters out undesirable categories

Conclusion

As AI systems become more intelligent, the areas where they can be used become more important and therefore, riskier. On the one hand, this is an issue that affects us directly today. On the other hand, an AI that wipes out humanity is just material for a science fiction movie.

However, aligning these systems with specific goals can only be achieved indirectly. This is done by selecting the sample data and the priors that are introduced into these systems. It may also be useful to subject the system’s results to further scrutiny. These are issues that are already being discussed at both the policy and technical levels. Neither group is correct: not those who see AI as a huge problem, nor those who think no one cares.

Links & References

[1] https://en.wikipedia.org/wiki/AI_alignment

[2] https://www.bbc.com/news/technology-45809919

[3] https://www.reuters.com/article/us-amazon-com-jobs-automation-insight-idUSKCN1MK08G

[5] https://www.ceps.eu/wp-content/uploads/2021/04/AI-Presentation-CEPS-Webinar-L.-Sioli-23.4.21.pdf

[6] https://en.wikipedia.org/wiki/Social_Credit_System

[7] https://twitter.com/ylecun/status/1591463668612730880?t=eyUG-2osacHHE3fDMDgO3g

[8] https://en.wikipedia.org/wiki/Linear_regression

[9] https://www.tensorflow.org/lattice/overview

[10] https://twitter.com/pmddomingos/status/1576665689326116864

[11] https://openai.com/blog/chatgpt/

[12] https://openai.com/blog/new-and-improved-content-moderation-tooling/

[13] https://arxiv.org/abs/2301.10226 and https://twitter.com/tomgoldsteincs/status/1618287665006403585