We have been living in crazy times since the beginning of 2020: a pandemic has turned public life upside down. News sites run live tickers with the latest figures on infections, recoveries and deaths, and hardly any outlet gets by without charts to visualize them. Institutions such as the Robert Koch Institute (RKI) and Johns Hopkins University provide dashboards. We live in a world dominated by data, even during a pandemic.

The good news is that most data on the pandemic is publicly available. Johns Hopkins University, for example, publishes its data in an open GitHub repository. So what could be more obvious than building your own dashboard from this freely accessible data? This article uses coronavirus data to show the path from data cleansing, through enriching the data with other sources, to building a dashboard with Plotly’s Dash. First, an important note: the data is not interpreted or analyzed in any way here. That must be left to experts such as virologists, otherwise false conclusions may be drawn. Even though data is available for almost all countries, it is not necessarily comparable: each country tests for infections differently, and some countries run so few tests that no uniform picture emerges. The data set serves only as an example.

First, the work

Before we can use the data, we need to bring it into a standardized form that suits our purposes. The Johns Hopkins University data is stored in a GitHub repository and comes in two forms: as continuous time-series data, and as daily reports, each in a separate CSV file. For the dashboard we need both sources. From the time-series data we will later generate line charts that show how the case numbers develop over time. We can also calculate and display growth rates from it.

Set up environment

To prepare the data we use Python and the pandas library. pandas is the Swiss army knife of data analysis and cleanup in Python. Since we need to install several Python modules, I recommend working in an isolated environment. Personally I use Anaconda, but there are alternatives such as virtualenv. An isolated environment leaves the system-wide Python installation untouched, which is why I strongly recommend it. It also lets you work with different Python versions and makes it easier to export the dependencies for deployment. Regardless of the tool used, the first step is to create the environment. With Anaconda and its command-line tool conda this works as follows:

$ conda create -n corona-dashboard python=3.8.2

The environment called corona-dashboard is created, and Python 3.8.2 and the necessary modules are installed. To use the environment, we activate it with

$ conda activate corona-dashboard

We do not want to go deeper into Anaconda here, but for more information you can refer to the official documentation.

Once we have activated our environment, we install the necessary modules. In the first step these are pandas and Jupyter notebook. A Jupyter notebook is, simply put, a digital notebook. The notebooks contain markdown and code snippets that can be executed immediately. They are perfectly suited for iterative data cleansing and for developing the necessary steps. The notebooks can also be used to develop diagrams before they are transferred into the final scripts.

$ conda install pandas

$ conda install -c conda-forge notebook

In the following we will perform all steps in Jupyter notebooks. The notebooks can be found in the repository for this article on GitHub. To use them, the server must be started:

$ jupyter notebook

After it’s up and running, the browser will display the overview in the current directory. Click on new and choose your desired environment to open a new Jupyter notebook. Now we can start on cleaning up the data.

Cleaning time-based data

In the first step, we import pandas, which provides methods for reading and manipulating data. For CSV files it offers the function read_csv, which expects at least a file path or a buffer as its argument. If you pass the URL of a CSV file instead, pandas downloads and parses it just as well. To keep the data consistent and reproducible, we use a downloaded file that is available in the repository.

import pandas as pd

df = pd.read_csv("time_series_covid19_confirmed_global.csv")

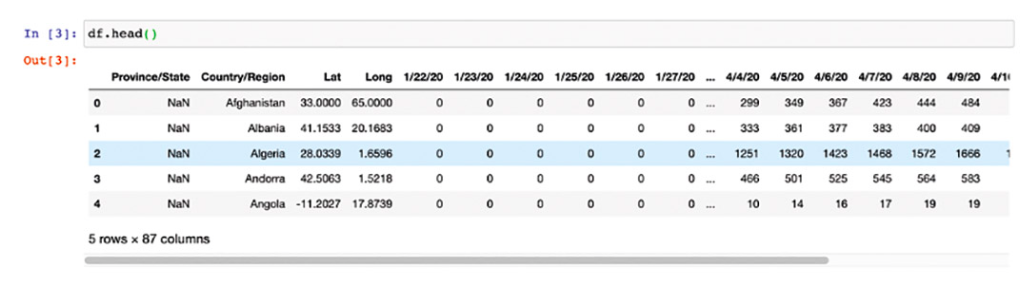

To check this, we use the instruction df.head() to output the first five lines of the DataFrame (fig. 1).
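Because read_csv accepts a buffer as well as a path, its behavior can be tried out without any file at all. The following is a minimal sketch, assuming the column layout of the time-series file; the two data rows and the name df_sample are invented for illustration and are not taken from the Johns Hopkins data.

```python
import io

import pandas as pd

# Made-up sample in the column layout of the time-series CSV (illustrative values only)
sample_csv = io.StringIO(
    "Province/State,Country/Region,Lat,Long,1/22/20,1/23/20\n"
    ",Germany,51.0,9.0,0,1\n"
    "Hubei,China,30.9,112.2,444,444\n"
)

# read_csv treats the in-memory buffer exactly like a file on disk
df_sample = pd.read_csv(sample_csv)

print(df_sample.head())
print(list(df_sample.columns))
```

The empty Province/State field of the first row is read as a missing value, which is also what happens for countries without provinces in the real file.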

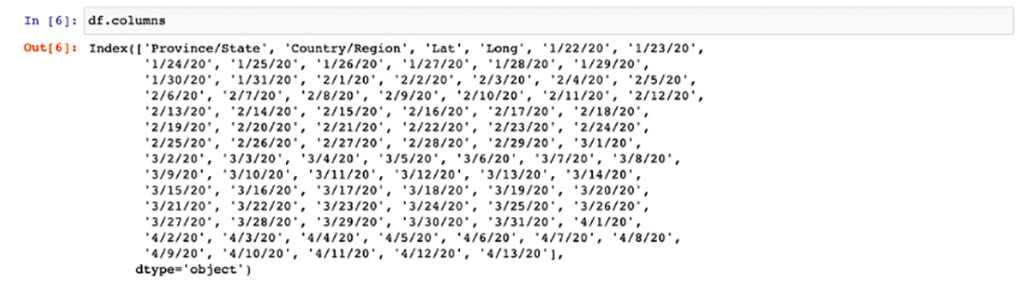

To become aware of the structure, we can have the column names printed. This is done with the statement df.columns. You can see in figure 2 that there is one column for each day in the table. Furthermore there are geo coordinates for the respective countries. The countries are partly divided into provinces and federal states. For the time-based data, we do not need geo-coordinates, and we can also remove the column with the states. We achieve this in pandas with the following methods on the data frame:

df.drop(columns=['Lat', 'Long', 'Province/State'], inplace = True)

The method drop expects as parameter the data we want to get rid of. In this case, there are three columns: Lat, Long and Province/State. It is important that the names with upper/lower case and any spaces are specified exactly. The second parameter inplace is used to apply the operation directly to our DataFrame. Without this parameter, pandas returns the modified DataFrame to us without changing the original. If you look at the frame with df.head(), you will see that the desired columns have been discarded.
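The difference between the two ways of calling drop is easy to see on a small frame. This is only a sketch with made-up values; df_demo, df_trimmed and result are names chosen for the illustration.

```python
import pandas as pd

# Small made-up frame with the same column names as the time-series file
df_demo = pd.DataFrame({
    "Province/State": [None, "Hubei"],
    "Country/Region": ["Germany", "China"],
    "Lat": [51.0, 30.9],
    "Long": [9.0, 112.2],
    "1/22/20": [0, 444],
})

# Without inplace, drop returns a new DataFrame and leaves df_demo untouched
df_trimmed = df_demo.drop(columns=["Lat", "Long", "Province/State"])
print(list(df_demo.columns))     # still all five columns
print(list(df_trimmed.columns))  # only the two remaining columns

# With inplace=True, df_demo itself is modified and the call returns None
result = df_demo.drop(columns=["Lat", "Long", "Province/State"], inplace=True)
print(result, list(df_demo.columns))
```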

The division of some countries into provinces or states results in multiple entries for some. An example is China. Therefore, it makes sense to group the data by country. For this, pandas provides a powerful grouping function.

df_grouped = df.groupby(['Country/Region'], as_index=False).sum()

The groupby function combines all rows that share the same value in the given column, and the chained .sum() adds up the values within each group. The result is a new DataFrame with the grouped and summed data, which gives us the time-series data for every country. Next we swap rows and columns so that we get one row per day and one column per country.

df_grouped = df_grouped.set_index('Country/Region').transpose()

Before transposing, we set the index to Country/Region to get a clean frame. The disadvantage of this is that the new index is then called Country/Region.

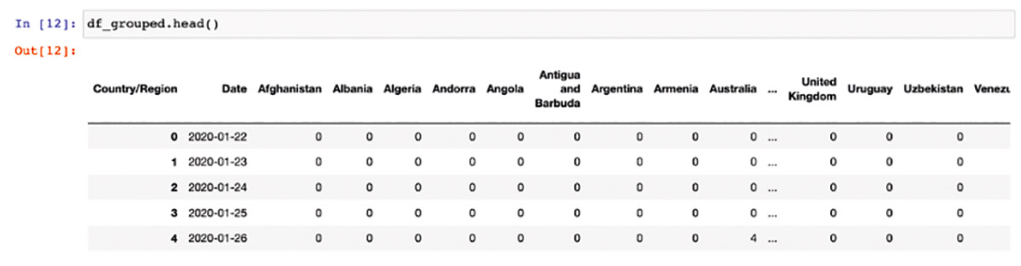

The next adjustment is to move the date into a column of its own. To do so, we reset the index. This turns the old index, which holds the dates, into a column named index. This column must be renamed and converted to the correct data type. With that, the cleanup is complete (Fig. 3).

df_grouped.reset_index(level=0, inplace=True)

df_grouped.rename(columns={'index': 'Date'}, inplace=True)

df_grouped['Date'] = pd.to_datetime(df_grouped['Date'])

The fact that the index continues to be called Country/Region won’t bother us from now on because the final CSV file is saved without any index.

df_grouped.to_csv('../data/worldwide_timeseries.csv', index=False)
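The whole sequence of grouping, transposing, resetting the index and converting the dates can be followed on a miniature frame. This is a sketch with made-up numbers, assuming the date columns use the month/day/year format of the original file; df_demo is a name chosen for the illustration.

```python
import pandas as pd

# Made-up sample: China appears twice because it is split into provinces
df_demo = pd.DataFrame({
    "Country/Region": ["China", "China", "Germany"],
    "1/22/20": [444, 14, 0],
    "1/23/20": [444, 22, 1],
})

# 1. One row per country, with the province rows summed up
df_grouped = df_demo.groupby(["Country/Region"], as_index=False).sum()

# 2. Swap rows and columns: one row per day, one column per country
df_grouped = df_grouped.set_index("Country/Region").transpose()

# 3. Turn the date index into a regular column and name it 'Date'
df_grouped.reset_index(level=0, inplace=True)
df_grouped.rename(columns={"index": "Date"}, inplace=True)

# 4. Parse the date strings into real timestamps
df_grouped["Date"] = pd.to_datetime(df_grouped["Date"])

print(df_grouped)
```

Printing the frame after each step in a notebook cell is a quick way to see what the individual calls do.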

The time-series data is now cleaned up and can be used for each country. If, for example, we only want to use the data for Germany, we can create a new DataFrame as a copy by selecting the desired columns.

df_germany = df_grouped[['Date', 'Germany']].copy()
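With a single-country frame like this, the growth rates mentioned at the beginning can be derived in two lines. The following is one possible sketch, not the calculation used in the article's repository; the numbers are made up and the column names new_cases and growth_pct are hypothetical.

```python
import pandas as pd

# Made-up cumulative case numbers in the layout of the cleaned time series
df_demo = pd.DataFrame({
    "Date": pd.to_datetime(["2020-03-01", "2020-03-02", "2020-03-03", "2020-03-04"]),
    "Germany": [100, 150, 225, 270],
})

df_germany = df_demo[["Date", "Germany"]].copy()

# New cases per day: difference to the previous day's cumulative total
df_germany["new_cases"] = df_germany["Germany"].diff()

# Day-over-day growth of the cumulative total, in percent
df_germany["growth_pct"] = df_germany["Germany"].pct_change() * 100

print(df_germany)
```

The first row has no predecessor, so both derived columns contain a missing value there.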

Packed into a function we get the source code from Listing 1 for the cleanup of the time-series data.

def clean_and_save_timeseries(df):
    drop_columns = ['Lat',
                    'Long',
                    'Province/State']
    df.drop(columns=drop_columns, inplace=True)
    df_grouped = df.groupby(['Country/Region'], as_index=False).sum()
    df_grouped = df_grouped.set_index('Country/Region').transpose()
    df_grouped.reset_index(level=0, inplace=True)
    df_grouped.rename(columns={'index': 'Date'}, inplace=True)
    df_grouped['Date'] = pd.to_datetime(df_grouped['Date'])
    df_grouped.to_csv('../data/worldwide_timeseries.csv', index=False)

The function expects to receive the DataFrame to be cleaned as a parameter. Furthermore, all steps described above are applied and the CSV file is saved accordingly.

Clean up case numbers per country

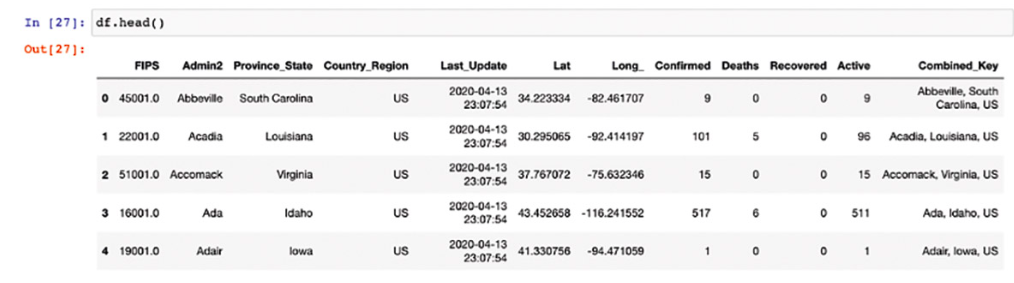

In the Johns Hopkins University repository you can find further CSV files with case numbers per country, broken down by province/state. They also list the administrative divisions for North America and the geographic coordinates. With this data, we can generate an overview on a world map. The cleanup is less involved this time. As with the time-series data, we read the CSV into a pandas DataFrame and look at the first lines (Fig. 4).

import pandas as pd

df = pd.read_csv('04-13-2020.csv')

df.head()

In addition to the actual case numbers, the file contains information on provinces/states, geo-coordinates and other metadata that we do not need in the dashboard. We therefore remove these columns from our DataFrame.

df.drop(columns=['FIPS','Lat', 'Long_', 'Combined_Key', 'Admin2', 'Province_State'], inplace=True)

To get the summed up figures for the respective countries, we group the data by Country_Region, assign it to a new DataFrame and sum it up.

df_cases = df.groupby(['Country_Region'], as_index=False).sum()

We have thus completed the clean-up operation. We will save the cleaned CSV for later use. Packaged into a function, the steps look like those shown in Listing 2.

def clean_and_save_worldwide(df):
    drop_columns = ['FIPS',
                    'Lat',
                    'Long_',
                    'Combined_Key',
                    'Admin2',
                    'Province_State']
    df.drop(columns=drop_columns, inplace=True)
    df_cases = df.groupby(['Country_Region'], as_index=False).sum()
    # Saved next to the time series so that the later read_csv calls find the file
    df_cases.to_csv('../data/Total_cases_wordlwide.csv', index=False)

The function receives the DataFrame and applies the steps described above. At the end a cleaned CSV file is saved.

Clean up case numbers for Germany

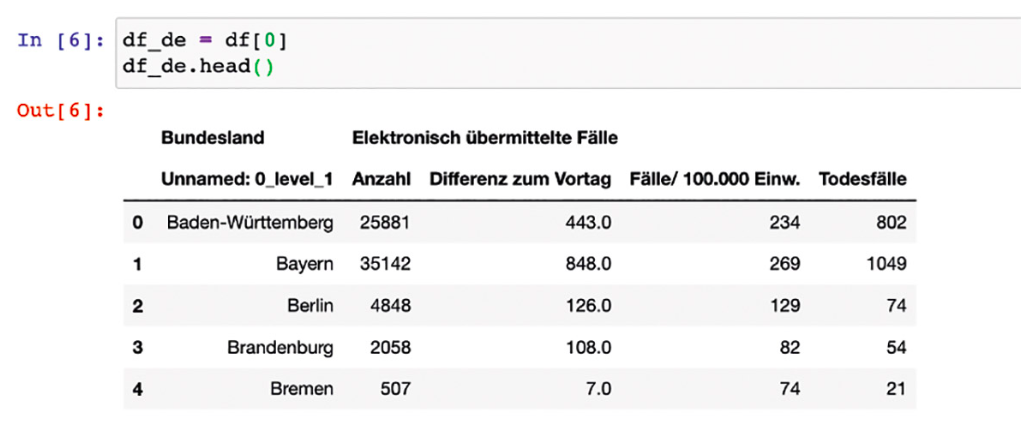

In addition to the data from Johns Hopkins University, we want to use the RKI data with case numbers for Germany, broken down by federal state. This allows us to create a detailed view of the German case numbers. The RKI does not provide this data as CSV in a repository; instead, the figures are displayed in an HTML table on a web page that is updated daily. pandas provides the function read_html() for such cases. If you pass it the URL of a web page, the page is loaded and parsed, and all tables found are returned as a list of DataFrames. I do not recommend reading the tables directly from the live web page, though. For one thing, some sites (including the RKI's) prevent this; for another, the number of requests should be kept low, especially during development. For our purposes we therefore store the page locally with wget https://www.rki.de/DE/Content/InfAZ/N/Neuartiges_Coronavirus/Fallzahlen.html. To ensure that the examples work consistently, this page is part of the article’s repository. pandas doesn’t care whether we pass it a URL or a file path.

import pandas as pd

df = pd.read_html('../Fallzahlen.html', decimal=',', thousands='.')

Since the numbers on the web page are formatted, we pass read_html some information about the formatting: commas serve as decimal separators and dots as thousands separators. This lets pandas infer the correct data types when reading the data. To see how many tables were found, we check the length of the returned list with a simple len(df). In this case the result is 1, which means that exactly one table could be parsed. We store this DataFrame in a new variable for further cleanup:

df_de = df[0]

Since the table (Fig. 5) has column headings that are difficult to process, we rename them. The number of columns is known, so we do this step pragmatically:

df_de.columns = ['Bundesland', 'Anzahl', 'diff', 'Pro_Tsd', 'Gestorben']

Note: The number of columns must match exactly. Should the format of the table change, this must be adjusted. This makes the DataFrame look much more usable. We don’t need the column of the previous day’s increments in the dashboard, so it will be removed.

Furthermore, the last line of the table contains the grand total. It is also not necessary and will be removed. We directly access the index (16) of the line and remove it. This gives us our final dataframe with the numbers for the individual states of Germany.

df_de.drop(columns=['diff'], index=[16], inplace=True)
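The hard-coded index 16 and the fixed list of five column names both break silently or loudly if the RKI changes the table. A slightly more defensive variant could look like the sketch below. It assumes that the grand total is always the last row; the function name clean_states_table, the constant EXPECTED_COLUMNS and the miniature table are made up for the illustration.

```python
import pandas as pd

EXPECTED_COLUMNS = ["Bundesland", "Anzahl", "diff", "Pro_Tsd", "Gestorben"]


def clean_states_table(df_raw):
    """Rename the columns and drop the total row without hard-coding its index."""
    if len(df_raw.columns) != len(EXPECTED_COLUMNS):
        raise ValueError(
            f"expected {len(EXPECTED_COLUMNS)} columns, got {len(df_raw.columns)}"
        )
    df_clean = df_raw.copy()
    df_clean.columns = EXPECTED_COLUMNS
    # Assumption: the grand total is the last row of the table
    return df_clean.drop(columns=["diff"], index=[df_clean.index[-1]])


# Made-up miniature table: two states plus a total row
df_raw = pd.DataFrame([
    ["Bayern", 100, 5, 0.8, 3],
    ["Berlin", 40, 2, 1.1, 1],
    ["Gesamt", 140, 7, 0.9, 4],
])

df_states = clean_states_table(df_raw)
print(df_states)
```

The explicit check turns a changed table format into a clear error message instead of mislabeled columns.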

We will now save this data in a new CSV for further use.

df_de.to_csv('../data/cases_germany_states.csv', index=False)

A resulting function looks as follows:

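def clean_and_save_german_states(df):
    df.columns = ['Bundesland', 'Anzahl', 'diff', 'Pro_Tsd', 'Gestorben']
    df.drop(columns=['diff'], index=[16], inplace=True)
    # Saved next to the other cleaned files so that the later read_csv calls find it
    df.to_csv('../data/cases_germany_states.csv', index=False)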

As before, the function expects the DataFrame as an argument. With that, we have cleaned up all the data and saved it in new CSV files. In the next step, we start developing the diagrams with Plotly.

Creating the visualizations

Since we will later use Dash from Plotly to build the dashboard, we create the desired visualizations in advance in a Jupyter notebook. Plotly is a library for creating interactive diagrams and maps for Python, R and JavaScript. The diagrams are rendered with Plotly.js, which gives them additional functions (zoom, save as PNG, etc.) without any extra work on our part. First, though, we have to install the required module.

# Anaconda users

conda install -c plotly plotly

# Pip

pip install plotly

In order to create diagrams with Plotly as quickly and easily as possible, we will use Plotly Express, which is now part of Plotly. Plotly Express is the easy-to-use high-level interface to Plotly, which works with “tidy” data and considerably simplifies the creation of diagrams. Since we are working with pandas DataFrames again, we import pandas and Plotly Express at the beginning.

import pandas as pd

import plotly.express as px

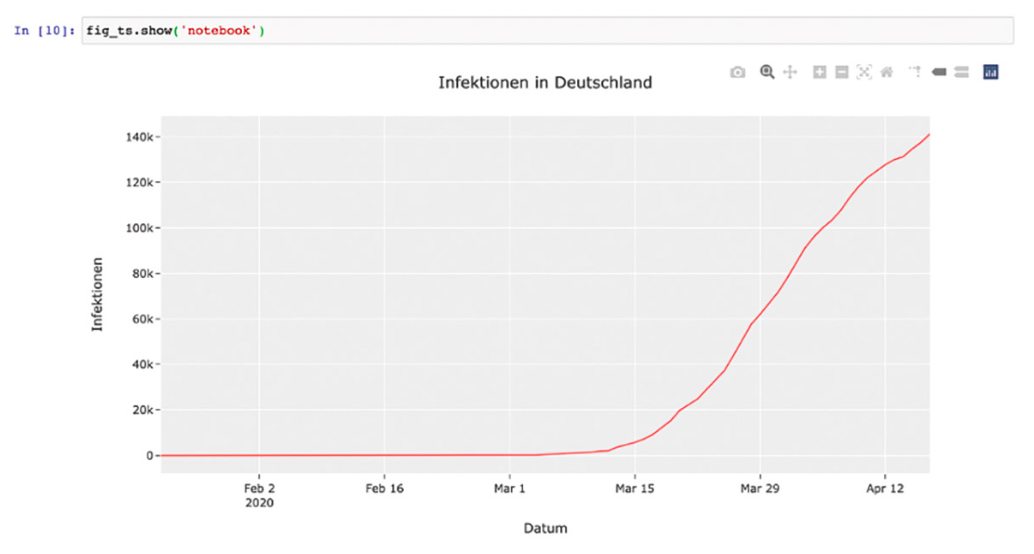

Starting with the presentation of the development of infections over time in a line chart, we will import the previously cleaned and saved dataframe.

df_ts = pd.read_csv('../data/worldwide_timeseries.csv')

Thanks to the data cleanup, creating a line chart with Plotly is very easy:

fig_ts = px.line(df_ts,
                 x="Date",
                 y="Germany")

The parameters are mostly self-explanatory. The first parameter specifies which DataFrame is used. With x='Date' and y='Germany' we select the columns to plot: the date on the horizontal axis and the case numbers of the country on the vertical axis. To make the diagram easier to understand, we set further parameters for the title and the axis labels. For the y-axis we choose a linear scale. If we want a logarithmic scale, we can set 'log' instead of 'linear'.

fig_ts.update_layout(xaxis_type='date',
                     xaxis={
                         'title': 'Datum'
                     },
                     yaxis={
                         'title': 'Infektionen',
                         'type': 'linear',
                     },
                     title_text='Infektionen in Deutschland')

To display diagrams in Jupyter notebooks, we need to tell the show() method that we are working in a notebook (Fig. 6).

fig_ts.show('notebook')

That’s all there is to it. The visualizations can be configured in many ways. For this I refer to Plotly’s extensive documentation.

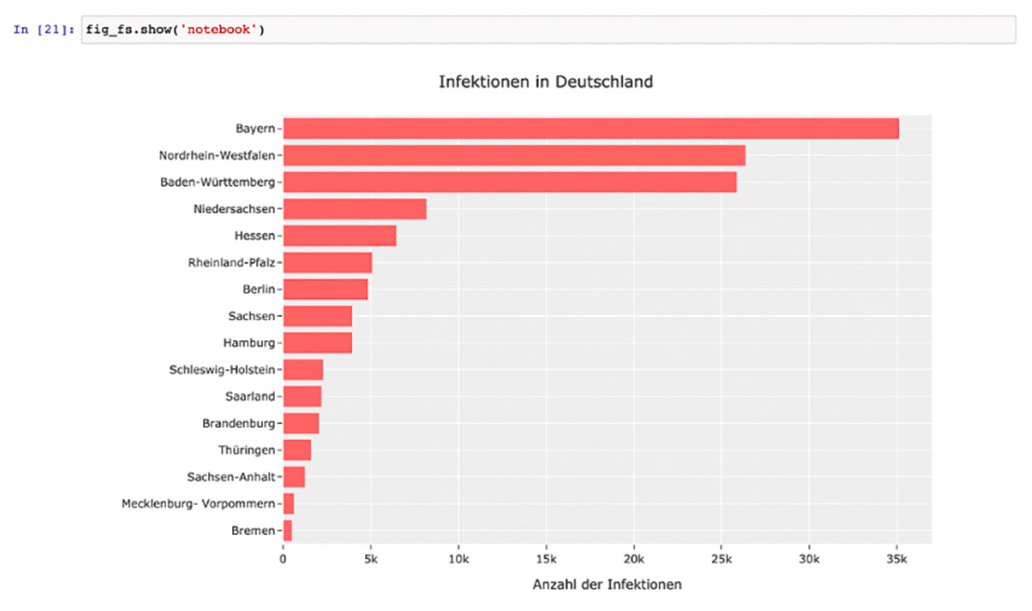

Let us continue with the creation of further visualizations. For the dashboard, cases from Germany are to be presented. For this purpose, the likewise cleaned DataFrame is read in and then sorted in ascending order.

df_fs = pd.read_csv('../data/cases_germany_states.csv')

df_fs.sort_values(by=['Anzahl'], ascending=True, inplace=True)

The first representation is a simple horizontal bar chart (Fig. 7). The code can be seen in Listing 3.

fig_fs = px.bar(df_fs, x='Anzahl',
                y='Bundesland',
                hover_data=['Gestorben'],
                height=600,
                orientation='h',
                labels={'Gestorben': 'Bereits verstorben'},
                template='ggplot2')

fig_fs.update_layout(xaxis={
                         'title': 'Anzahl der Infektionen'
                     },
                     yaxis={
                         'title': '',
                     },
                     title_text='Infektionen in Deutschland')

The DataFrame is expected as the first parameter. Then we specify which columns represent the x and y axes. With hover_data we determine which additional data is displayed when hovering over a bar. The labels parameter controls how column names are labeled in the frontend. Since “Gestorben” (“died”) sounds a bit strange as a label, we set it to “Bereits verstorben” (“already deceased”). The parameter orientation specifies whether we want a vertical (v) or horizontal (h) bar chart. With height we set the height to 600 pixels. Finally, we update the layout parameters, as we did for the line chart.

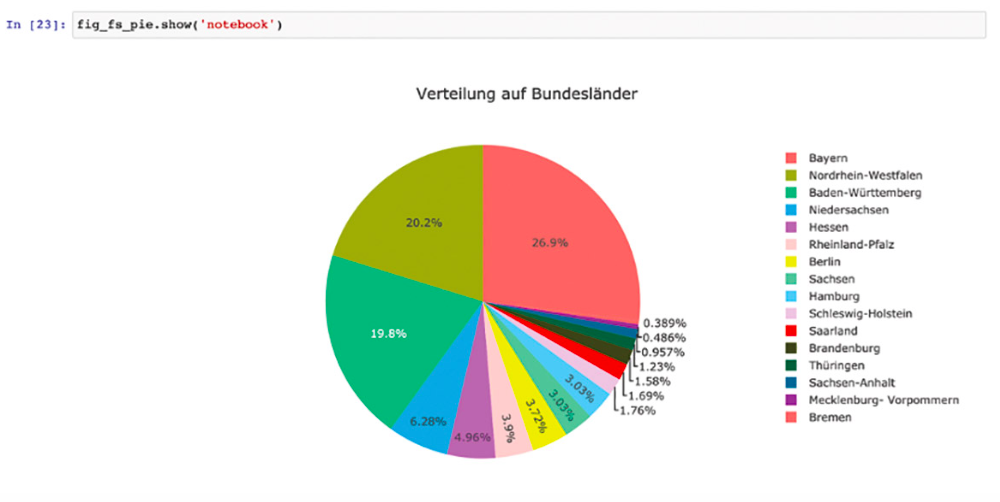

To make the distribution easier to see, we create a pie chart.

fig_fs_pie = px.pie(df_fs,
                    values='Anzahl',
                    names='Bundesland',
                    title='Verteilung auf Bundesländer',
                    template='ggplot2')

The parameters are mostly self-explanatory. values defines which column of the DataFrame is used, and names, which column contains the labels. In our case these are the names of the federal states (Fig. 8).

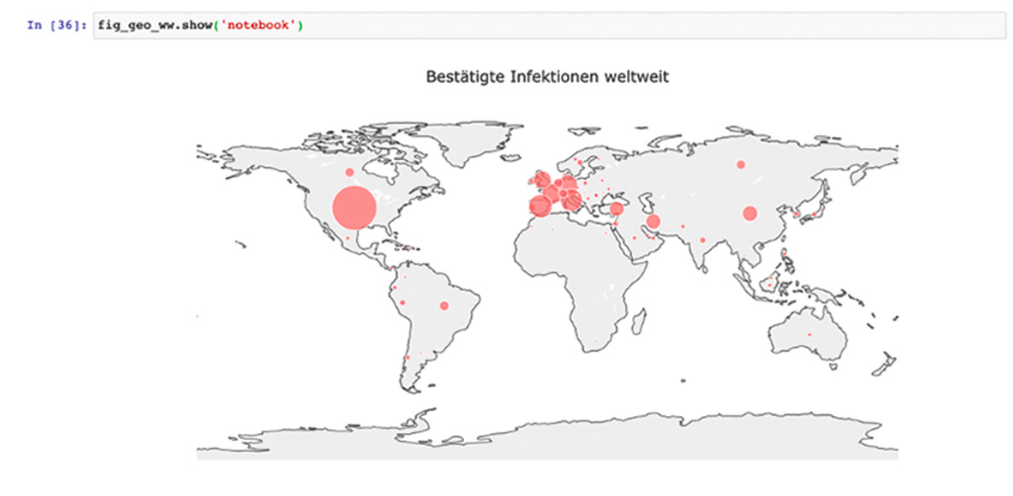

Finally, we generate a world map with the distribution of cases per country. We import the adjusted data of the worldwide infections.

df_ww_cases = pd.read_csv('../data/Total_cases_wordlwide.csv')

Then we create a scatter-geo-plot. Simply put, this will draw a bubble for each country, the size of which corresponds to the number of cases. The code can be seen in Listing 4.

fig_geo_ww = px.scatter_geo(df_ww_cases,
                            locations="Country_Region",
                            hover_name="Country_Region",
                            hover_data=['Confirmed', 'Recovered', 'Deaths'],
                            size="Confirmed",
                            locationmode='country names',
                            text='Country_Region',
                            scope='world',
                            labels={
                                'Country_Region': 'Land',
                                'Confirmed': 'Bestätigte Fälle',
                                'Recovered': 'Wieder geheilt',
                                'Deaths': 'Verstorbene',
                            },
                            projection="equirectangular",
                            size_max=35,
                            template='ggplot2')

The parameters are somewhat more extensive, but no harder to understand. In essence, they map fields of the DataFrame to properties of the plot. The parameters labels and hover_data have the same functions as before. With locations we specify which column of our DataFrame contains the locations, in our case the countries. So that Plotly Express knows how to place them on a world map, we set locationmode to country names. Plotly can then match the rows to countries without us having to supply exact geo-coordinates. hover_name determines the heading of the hover tooltips, and text the label drawn at each bubble. The size of the bubbles is calculated from the confirmed cases in the DataFrame, which we pass to size. With size_max we define the maximum size of the bubbles (in this case 35 pixels). With scope we control which part of the world map is shown. Besides 'world', the possible values are 'usa', 'europe', 'asia', 'africa', 'north america' and 'south america'. Choosing one of them not only centers the map on that region but also limits it to that region. The appropriate labels and other display settings are applied when the layout is updated:

fig_geo_ww.update_layout(
    title_text='Bestätigte Infektionen weltweit',
    title_x=0.5,
    geo=dict(
        showframe=False,
        showcoastlines=True,
        projection_type='equirectangular'
    )
)

geo configures how the world map is drawn. The parameter projection_type deserves a mention, since it controls the map projection. For the dashboard we use equirectangular, also known as the equidistant cylindrical (plate carrée) projection. The finished map is shown in Figure 9.

With this we have created all the charts we need for our dashboard. In the next step we turn to the dashboard itself. Since Dash is developed by Plotly, we can reuse our diagrams, configure them and make them interactive with relatively little effort.

Creating the dashboard with Dash from Plotly

Dash is a Python framework for building web applications. It is based on Flask, Plotly.js and React.js. Dash is very well suited for creating data visualization applications with highly customized user interfaces in Python. It is especially interesting for anyone working with data in Python. Since we have already created all diagrams with Plotly in advance, the next step to a web-based dashboard is straightforward.

We create an interactive dashboard from the data in our cleaned up CSV files – without a single line of HTML and JavaScript. We want to limit ourselves to basic functions and not go deeper into special features, as this would go beyond the scope of this article. For this I refer to the excellent documentation of Dash and the tutorial contained therein. Dash creates the website from a declarative description of the Python structure. To work with Dash, we need the Python module dash. It is installed with conda or pip:

$ conda install -c conda-forge dash

# or

$ pip install dash

For the dashboard we create a Python script called app.py. The first step is to import the required modules. Since we are importing the data with pandas in addition to Dash, we need to import the following packages:

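import pandas as pd
import dash
# From Dash 2.0 on, these two can also be imported with: from dash import dcc, html
import dash_core_components as dcc
import dash_html_components as html
# Needed for the callback further below
from dash.dependencies import Input, Output

# Module with helper functions
import dashboard_figures as mf

# Application object referenced by app.layout and @app.callback
app = dash.Dash(__name__)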

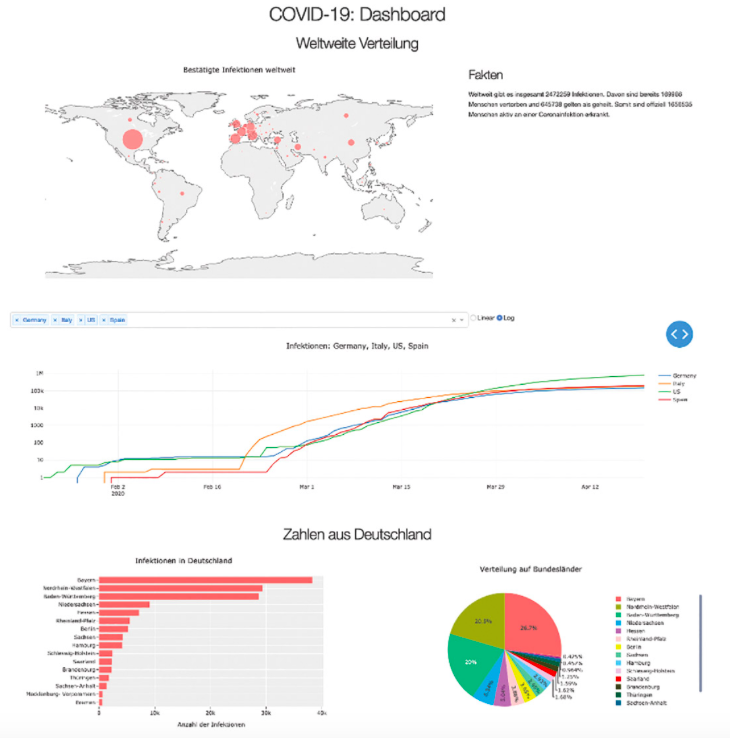

Besides the actual module for Dash, we need the core components as well as the HTML components. The former contain all important components we need for the diagrams. HTML components contain the HTML parts. Once the modules are installed, the dashboard can be developed (Figure 10).

Let’s start with the global data. The structure is relatively simple: a heading, with two rows below it, each with two columns. We place the world map on the left, with the facts as text to its right. Then we want a combo box to select one or more countries for display in the line chart below. Next to it, we can choose between a linear and a logarithmic representation. The section on infections in Germany comprises the bar chart and the distribution as a pie chart.

In Dash the layout is described in code. Since we have already generated all diagrams with Plotly Express, we outsource them to an external module with helper functions. I won’t go into this code in detail, because it contains the diagrams we have already created. The code is stored in the GitHub repository. Before the diagrams can be displayed, we need to import the CSV files and have the diagrams created (Listing 5).

df_ww = pd.read_csv('../data/worldwide_timeseries.csv')
df_total = pd.read_csv('../data/Total_cases_wordlwide.csv')
df_germany = pd.read_csv('../data/cases_germany_states.csv')

fig_geo_ww = mf.get_wordlwide_cases(df_total)
fig_germany_bar = mf.get_german_barchart(df_germany)
fig_germany_pie = mf.get_german_piechart(df_germany)
fig_line = mf.get_lineplot('Germany', df_ww)

ww_facts = mf.get_worldwide_facts(df_total)
fact_string = '''Weltweit gibt es insgesamt {} Infektionen.
Davon sind bereits {} Menschen verstorben und {} gelten als geheilt.
Somit sind offiziell {} Menschen aktiv an einer Coronainfektion erkrankt.'''
fact_string = fact_string.format(ww_facts['total'],
                                 ww_facts['deaths'],
                                 ww_facts['recovered'],
                                 ww_facts['active'])

countries = mf.get_country_names(df_ww)
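The helper module itself is not reproduced in this article, so the following is only a guess at what two of its functions might look like, not the code from the repository. It assumes that the facts are plain column sums, that the number of active cases is the confirmed cases minus deaths and recoveries, and that every column except Date in the time series is a country. The sample numbers are made up.

```python
import pandas as pd


def get_country_names(df_timeseries):
    """Return all country columns, i.e. every column except 'Date'."""
    return [col for col in df_timeseries.columns if col != "Date"]


def get_worldwide_facts(df_total):
    """Sum the case columns over all countries."""
    total = int(df_total["Confirmed"].sum())
    deaths = int(df_total["Deaths"].sum())
    recovered = int(df_total["Recovered"].sum())
    return {
        "total": total,
        "deaths": deaths,
        "recovered": recovered,
        # Assumption: active = confirmed - deaths - recovered
        "active": total - deaths - recovered,
    }


# Made-up sample data in the layout of the cleaned CSV files
df_total_demo = pd.DataFrame({
    "Country_Region": ["Germany", "Italy"],
    "Confirmed": [100, 200],
    "Deaths": [4, 6],
    "Recovered": [30, 60],
})
df_ww_demo = pd.DataFrame({
    "Date": pd.to_datetime(["2020-03-01"]),
    "Germany": [100],
    "Italy": [200],
})

facts = get_worldwide_facts(df_total_demo)
names = get_country_names(df_ww_demo)
print(facts, names)
```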

Further helper functions return a list of all countries and the aggregated data. For the combo box, we need a list of options that contains all countries.

dd_options = []
for key in countries:
    dd_options.append({
        'label': key,
        'value': key
    })
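The same list of options can also be written as a list comprehension, which many Python developers find easier to read. In this sketch the hard-coded country list is only a stand-in for the result of the helper function.

```python
# Stand-in for the list returned by mf.get_country_names(df_ww)
countries = ["France", "Germany", "Italy"]

# One dict per country; label is what the user sees, value is what the callback receives
dd_options = [{"label": name, "value": name} for name in countries]

print(dd_options)
```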

That was all the preparation that was necessary. The layout of the web application consists of the components provided by Dash. The complete description of the layout can be seen in Listing 6.

app.layout = html.Div(children=[
    html.H1(children='COVID-19: Dashboard', style={'textAlign': 'center'}),
    html.H2(children='Weltweite Verteilung', style={'textAlign': 'center'}),
    # World and Facts
    html.Div(children=[
        html.Div(children=[
            dcc.Graph(figure=fig_geo_ww),
        ], style={'display': 'flex',
                  'flexDirection': 'column',
                  'width': '66%'}),
        html.Div(children=[
            html.H3(children='Fakten'),
            html.P(children=fact_string)
        ], style={'display': 'flex',
                  'flexDirection': 'column',
                  'width': '33%'})
    ], style={'display': 'flex',
              'flexDirection': 'row',
              'flexWrap': 'wrap',
              'width': '100%'}),
    # Combobox and Checkbox
    html.Div(children=[
        html.Div(children=[
            # combobox
            dcc.Dropdown(
                id='country-dropdown',
                options=dd_options,
                value=['Germany'],
                multi=True
            ),
        ], style={'display': 'flex',
                  'flexDirection': 'column',
                  'width': '66%'}),
        html.Div(children=[
            # Radio-Buttons
            dcc.RadioItems(
                id='yaxis-type',
                options=[{'label': i, 'value': i} for i in ['Linear', 'Log']],
                value='Linear',
                labelStyle={'display': 'inline-block'}
            ),
        ], style={'display': 'flex',
                  'flexDirection': 'column',
                  'width': '33%'})
    ], style={'display': 'flex',
              'flexDirection': 'row',
              'flexWrap': 'wrap',
              'width': '100%'}),
    # Lineplot and Facts
    html.Div(children=[
        html.Div(children=[
            # Line plot: Infections
            dcc.Graph(figure=fig_line, id='infections_line'),
        ], style={'display': 'flex',
                  'flexDirection': 'column',
                  'width': '100%'}),
    ], style={'display': 'flex',
              'flexDirection': 'row',
              'flexWrap': 'wrap',
              'width': '100%'}),
    # Germany
    html.H2(children='Zahlen aus Deutschland', style={'textAlign': 'center'}),
    html.Div(children=[
        html.Div(children=[
            # Barchart Germany
            dcc.Graph(figure=fig_germany_bar),
        ], style={'display': 'flex',
                  'flexDirection': 'column',
                  'width': '50%'}),
        html.Div(children=[
            # Pie Chart Germany
            dcc.Graph(figure=fig_germany_pie),
        ], style={'display': 'flex',
                  'flexDirection': 'column',
                  'width': '50%'})
    ], style={'display': 'flex',
              'flexDirection': 'row',
              'flexWrap': 'wrap',
              'width': '100%'})
])

The layout is described by Divs, or the respective wrappers for Divs, as code. There is a wrapper function for each HTML element. These functions can be nested however you like to create the desired layout. Instead of using inline styles, you can also work with your own classes and with a stylesheet. For our purposes, however, the inline styles and the external stylesheet read in at the beginning are sufficient. The approach of a declarative description for layouts has the advantage that we do not have to leave our code and do not have to be an expert in JavaScript or HTML. The focus is on dashboard development. If you look closely, you will find core components for the visualizations in addition to HTML components.

...

# Bar chart Germany

dcc.Graph(figure=fig_germany_bar),

....

The respective diagrams are inserted at these positions. For the line chart, we assign an ID so that we can access the chart later.

dcc.Graph(figure=fig_line, id='infections_line'),

Using the selected values from the combo box and radio buttons, we can adjust the display of the lines. In the combo box we want to provide a selection of countries. Multiple selection is also possible if you want to be able to see several countries in one diagram (see section # combo box in Listing 6). Furthermore, the radio buttons control the division of the y-axis according to linear or logarithmic display (see section # Radio-Buttons in Listing 6). In order to apply the selected options to the chart, we need to create an update function. We annotate this function with an @app.callback decorator (Listing 7).

# Interactive Line Chart
@app.callback(
    Output('infections_line', 'figure'),
    [Input('country-dropdown', 'value'), Input('yaxis-type', 'value')])
def update_graph(countries, axis_type):
    # Fall back to Germany if nothing is selected
    countries = countries if len(countries) > 0 else ['Germany']
    data_value = []
    for country in countries:
        data_value.append(dict(
            x=df_ww['Date'],
            y=df_ww[country],
            type='scatter',  # 'lines' is a drawing mode, not a trace type
            mode='lines',
            name=str(country)
        ))
    title = ', '.join(countries)
    title = 'Infektionen: ' + title
    return {
        'data': data_value,
        'layout': dict(
            yaxis={
                'type': 'linear' if axis_type == 'Linear' else 'log'
            },
            hovermode='closest',
            title=title
        )
    }

The decorator takes outputs and inputs. Output defines which component receives the return value and which of its properties is updated. In our case that is the figure property of the line chart with the ID infections_line. Input describes which input values the function needs. In our case, these are the value of the combo box and the value selected via the radio buttons. The decorated function receives these values as arguments, and we can work with them.
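Because the body of such a callback is ordinary Python, its logic can be tried out without a running server. The sketch below pulls the figure construction into a plain function; the name build_line_figure and the sample numbers are made up, and the fallback to Germany for an empty or missing selection mirrors the behavior described in this article.

```python
import pandas as pd


def build_line_figure(df, countries, axis_type):
    """Return a figure dict with one line per selected country."""
    # An empty list or None falls back to Germany
    countries = countries if countries else ["Germany"]
    traces = [
        dict(x=df["Date"], y=df[country], type="scatter", mode="lines", name=str(country))
        for country in countries
    ]
    return {
        "data": traces,
        "layout": dict(
            yaxis={"type": "linear" if axis_type == "Linear" else "log"},
            hovermode="closest",
            title="Infektionen: " + ", ".join(countries),
        ),
    }


# Made-up numbers in the layout of the cleaned time series
df_demo = pd.DataFrame({
    "Date": pd.to_datetime(["2020-03-01", "2020-03-02"]),
    "Germany": [100, 150],
    "Italy": [1000, 1500],
})

figure = build_line_figure(df_demo, ["Germany", "Italy"], "Log")
print(figure["layout"]["yaxis"]["type"], figure["layout"]["title"])
```

A function like this could then be called from the decorated callback, which keeps the Dash wiring and the chart logic separate.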

Since the countries are returned as a list, the line to be drawn must be configured in each case. In our example this is implemented with a simple for-in loop. For each country it is determined which columns from the dataframe are necessary. Finally, we return the new configuration of the diagram. When we return, we still define the division of the y-axis, depending on the selection, whether linear or logarithmic. Finally, we start a debug server:

if __name__ == '__main__':
    app.run_server(debug=True)

If we start the Python script, a server is started and the application can be called in the browser. If one or more countries are selected, they are displayed as a line chart. The development server supports hot reloading: If we change something in the code and save it, the page is automatically reloaded. With this we have created our own coronavirus/Covid-19 dashboard with interactive diagrams. All without having to write HTML, JavaScript and CSS. Congratulations!

Summary

Together we have worked through a simple data project, from cleaning up the data and creating visualizations to providing a dashboard. Even in small projects, you can see that cleanup and visualization take up most of the time. If the data is poor, the end product cannot be any better. Once that groundwork is done, the dashboard itself is created quickly.

Charts can be created very quickly with Plotly and are interactive out of the box, so they are not just static images. The ability to create dashboards quickly is especially helpful when prototyping larger data visualization projects. There is no need for a large team of data scientists and developers; ideas can be tried out quickly and discarded if necessary. Plotly and Dash are more than sufficient for many purposes. If the data is cleaned up right from the start, the next steps are much easier. If you work with data and its visualization, you should take a look at Plotly and not ignore Dash.

One final remark: In this example I have not used predictions and predictive algorithms and models. On the one hand, the amount of data is too small. On the other hand, a prediction with exponential data is always difficult and should be treated with great care, otherwise wrong conclusions will be drawn.