As a programming language, R is strongly but dynamically typed, functional and interpreted (therefore not compiled). Among other things, it is popular amongst data scientists, because there are (free) packages with which statistical calculations (such as matrix calculations or descriptive statistics) can be performed. In addition, machine learning (such as linear regression, clustering, neural networks, etc.) can be implemented. The results of the calculations can be visualized efficiently and effectively.

R is the open source successor to the S programming language. A very active community is driving the development of the language and the packages available. At the time this article was submitted, over 14,000 (in words: fourteen thousand!) packages were available on the Comprehensive R Archive Network (CRAN). This is both a blessing (because, for example, new machine-learning models developed in the academic world or by companies are being made available very quickly), as well as a curse (because, of course, no human being can possess even a roughly complete view of all the packages). In the course of this article, you will become acquainted with a handful of packages.

One thing is certain at any rate: If you work with data, you will appreciate R. It gives you a number of possibilities to access, transform, and clean your data, which you can ultimately analyze. And starting with SQL Server 2016, Microsoft has integrated the R programming language into their Data Platform.

R: Create and run scripts

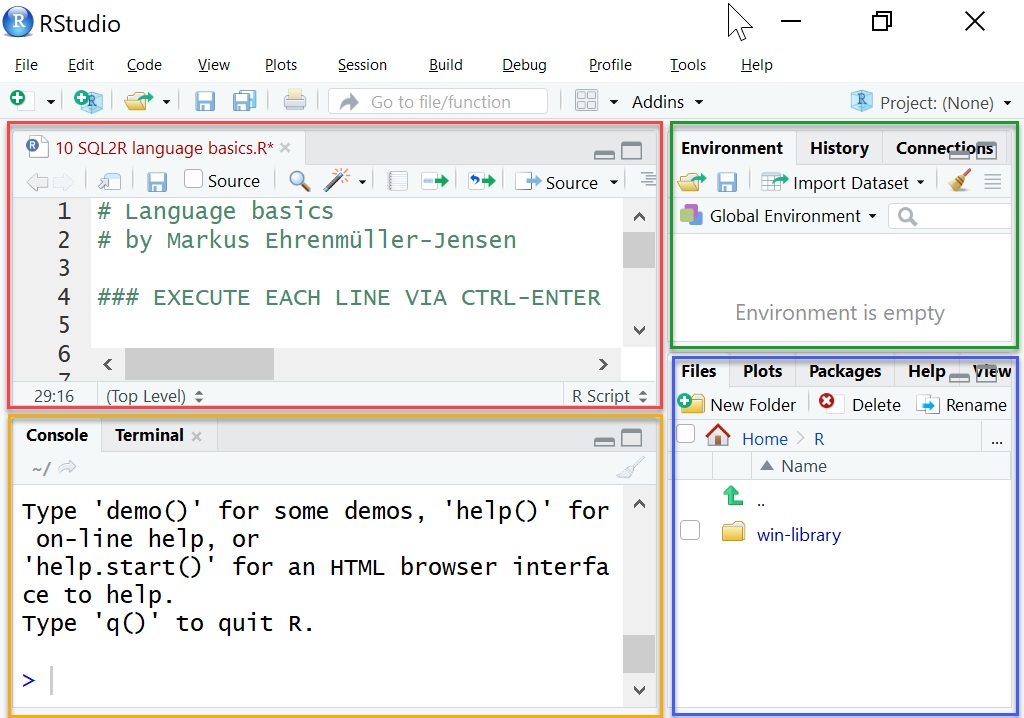

You can play around with the examples yourself once you install R and an Integrated Development Environment (IDE) such as RStudio or R Tools for Visual Studio. Figure 1 shows the basic structure of the IDE. On the top left of the screen (framed in red in the figure), you can create a script and fully execute it or run it step by step. The input console is on the bottom left (orange). Here you can enter individual commands, execute them and see the results. When you run the script, it is automatically copied to the console line by line. In the top right corner (green), you can switch between an overview of the existing variables, execution history and existing connections. The lower right corner (blue) provides you access to the script files, graphical output (plots) or a help function, among others.

A “Hello World” with R

Enough theory – now it’s time for concrete code! Of course, the most important part of the code is the comments, as from experience we know that they are the only reliable documentation of code. After the # character the rest of the line is ignored as a comment. (Listing 1).

### COMMENTS # A comment line starts with a # character # Multi-line comments are not possible (each line must ... # ... begin with a # sign)

Strings can be set using either single or double quotes (Listing 2). You could get used to it quickly in other languages as well.

### CHARACTER STRINGS "Hello World" 'Hello World'

Calculations (Listing 3) can simply be entered – the result will be displayed immediately in the console.

### CALCULATIONS 1 + 2 2 * 3 2^16

As I had already indicated above, open source is both a blessing and a curse. In the case of R, you sometimes feel that it lacks a clear policy or standard. There are four ways to assign a value to a variable – you will find all of them Listing 4. The most common solution you see is the left assignment (<-).

When using the assign function, note that the variable name must be entered in quotation marks. If the variable name is not specified in quotation marks, the content of the variable is evaluated and the value is assigned to a variable which is called the same as the content. In our case, then, a new variable “SQL2Rx” will be created (because variable a held this content at that time) and the value “SQ2Ry” assigned (because that is the second parameter of the assign function). Listing 4 shows all of this.

Learn more about ML Conference:

### VALUE ASSIGNMENT

a = 'SQL2R' # assignment

a <- 'SQL2R' # left assignment

'SQL2R' -> a # right assignment

assign('a', 'SQL2Rx') # Assignment function on variable "a"

assign(a, 'SQL2Ry') # Assignment function on variable "SQL2Rx" (!)

R is, as mentioned, strongly but dynamically typed. This can be nicely illustrated with the example in Listing 5. First, we assign the numeric value 1 to the variable a . Dynamic typing allows us to assign a value of a different data type to the same variable at any time. In this example, this is a character string (“xyz”).

R is strongly typed though. The calculation a + b is acknowledged with an error if the data types of the two variables are not compatible. In the example, a is numeric and b is a character string. That is not allowed. The calculation will work as soon as b has a compatible data type (Listing 5).

### DYNAMICALLY TYPED a <- 1 a <- 'xyz' ### STRONGLY TYPED a <- 1 b <- 'xyz' a + b # Error in a + b : non-numeric argument to binary operator ### STRONGLY, DYNAMICALLY TYPED a <- 1 b <- 2 a + b

Attention! R is case-sensitive, so it distinguishes between uppercase and lowercase. Personally, I am not too happy about this (and I have to fight again and again with error messages, because I use the wrong case for package or function names). But we could discuss all night about the pros and cons of case sensitivity – and sometimes we indeed will. Anyway, we can’t do anything about it in R, so we have to live with it. So the two variables a and A in Listing 6 are different objects and can therefore have different values or types.

a <- 'SQL2R' A <- 'sql2r' a A

Data types in R

In addition to the usual data types, which we also see in relational databases, there are also complex numbers (i) as a data type (Listing 7).

### DATA TYPES a <- 1 # numeric mode(a) a <- "1" # character mode(a) a <- '1' # character mode(a) a <- TRUE # logical mode(a) a <- 1i # complex mode(a)

Unknown or missing values are presented as NULL in relational databases. This affects all data types, including BOOLEAN (TRUE, FALSE, NULL). Errors in calculations can also lead to NULL. Instead, the R programming language distinguishes between unavailable or undefined values (NULL) and a logical value that is neither TRUE nor FALSE: NA (Not Available).

Calculation errors are signaled as: Inf (Infinity), -Inf (negative Infinity) or NaN (Not a Number). In parallel to constants with these names, we also have the functions is.null, is.na, is.infinite and is.nan to check for the corresponding value, because a direct comparison with == sometimes does not produce the expected result. Listing 8 shows the possibilities in detail.

### MORE THAN JUST NULL 3/0 # Inf = Infinity Inf is.infinite(4) # FALSE is.infinite(3/0) # TRUE is.infinite(Inf) # TRUE -3/0 # -Inf = negative Infinity -Inf # -Inf = negative Infinity is.infinite(-3/0) # TRUE is.infinite(-Inf) # TRUE 1 < Inf # TRUE 1 < -Inf # FALSE 0/0 # NaN = Not A Number is.nan(4) # FALSE is.nan(0/0) # TRUE is.nan(NaN) # TRUE 1/0 - 1/0 # NaN Inf - Inf # NaN NA # Not Available is.na(4) # FALSE is.na(NA) # TRUE Inf == Inf # TRUE NaN == NaN # NA NA == NaN # NA NA == NA # NA is.finite(Inf) # FALSE is.finite(-Inf) # FALSE is.finite(NaN) # FALSE is.finite(NA) # FALSE NULL # NULL is.null(NULL) # TRUE is.null(NA) # FALSE NULL == NULL # logical(0) NULL == NaN # logical(0) NULL == NA # logical(0)

Data Frame

The most important data type for someone familiar with the world of relational databases is the data frame. A data frame consists of variables (in the relational world we would say columns) and observations (also obs.; relational: rows). I will be using the relational terms. All rows of a column have the same data type. The columns can be named. Typically, results of a database query or the contents of CSV files are loaded into a data frame. Even though this object is called a data.frame and not a table, it behaves in a very similar manner. In Listing 9, we create a data frame with the very creative name df from the three vectors col1, col2 and col3. The three vectors were created using the combine function (c). A vector is comparable to a column from a table (all elements must have the same data type). The result is a data frame with three columns (variables) and four rows (observations).

### DATA FRAMES # 3 vectors col1 <- c(11, 21, 31, 41) col2 <- c(12, 22, 32, 42) col3 <- c(13, 23, 33, 43) df <- data.frame(col1, col2, col3) df

Accessing the contents of a data frame is again similar to accessing a matrix (which is also a valid data type in R – but in which the entire content, not just all rows of a column, must have the same type of data). Listing 10 executes the following options: To access a particular row of a given column, the row and column numbers are enclosed in square brackets. If you leave the column number empty, the entire row is returned. And if you leave the row number empty, the whole column is returned.

A column can also be accessed using the column name. One way is to enter the name of the data frame and the name of the column, separated by the dollar sign ($). This is similar to accessing a column of a table in the relational world, where we use the period (.) as a separator. To save on typing, a data frame can also be attached and detached with the help of functions. The with function limits this attachment to the expression in the second parameter.

# Select a cell df[3, 2] # Select a row df[3,] # Select a column df[,2] df$col2 attach(df) col2 detach(df) with(df, col2)

An existing data frame can be extended using the functions cbind (column bind) and rbind (row bind), as documented in Listing 11.

# Insert a column col4 <- c(99, 98, 97, 96) df <- cbind(df, col4) df # Insert a row df <- rbind(df, c(51, 52, 53, 54)) df

Import data

You will very rarely be creating a data frame “by hand”. Much more often you will want to load existing data into a data frame. A common exchange format is the CSV file. In many cases, it will be more practical to have direct access to the data source (for example SQL Server). For this purpose, we have standard functions like read.csv as well as many useful functions from the ODBC package available (Listing 12). You can install the latest version of the ODBC package by using install.packages. To be able to use the functionalities of the package, you need to load it using the library function. With odbcConnect, we can open a connection to a database via an (existing) ODBC connection. The sqlQuery function returns the result of a query practically as a data frame. Close closes the connection again.

### LOAD DATA FROM CSV

setwd("C:\\SQL2R\\Data") # set working directory

FactResellerSales <- read.csv("FactResellerSales.csv")

### ACCESS TO SQL SERVER VIA ODBC

install.packages("RODBC") # quotes needed!

library(RODBC) # without quotes!

MyConnection <- odbcConnect("AdventureWorksDW2014", uid="readonly", pwd="sTr4nGg3h31m")

DimProductCategory <- sqlQuery(MyConnection, 'SELECT * FROM dbo.DimProductCategory')

close(MyConnection)

Querying data

Having the data stored in a data frame is all nice and good, but it is not an end in itself. In the following paragraphs, we will take a look at some typical queries. The SQL keyword TOP is available in R as the head function. If the number of rows is not specified, the first six rows are displayed. Similarly, tail is used to filter the last rows of a data frame (Listing 13).

### TOP head(FactResellerSalesByDateSubCat) # Shows the first 6 lines of a vector, matrix, table, data frame or function head(FactResellerSalesByDateSubCat, 1) tail(FactResellerSalesByDateSubCat, 1)

We can filter the columns by name by creating a vector with the names of the desired columns and entering it as a second parameter in square brackets (Listing 14). Alternatively, the dplyr package offers us functions that may make the code more readable. Typical for dplyr (and not for R) is the use of %>%. This function (even though it may not be recognizable as such at first glance) forwards the output of one expression as the input for the next expression. In our example, the entire content of the data frame FactResellerSalesByDateSubCat is passed to the select function. The latter does exactly what we would expect in SQL: It filters on the specified columns.

### PROJECTION

FactResellerSalesByDateSubCat[,c("OrderDate", "EnglishProductSubcategoryName", "SalesAmount")]

install.packages("dplyr")

library(dplyr)

FactResellerSalesByDateSubCat %>%

select(OrderDate, EnglishProductSubcategoryName, SalesAmount)

We can sort the output list of a data frame using either the order function (and put it as row parameter in square brackets) or the arrange function from the dplyr package (Listing 15).

### ORDER BY FactResellerSalesByDateSubCat[order(FactResellerSalesByDateSubCat$SalesAmount),] FactResellerSalesByDateSubCat %>% select(OrderDate, EnglishProductSubcategoryName, SalesAmount) %>% arrange((SalesAmount))

We create a filter on the query result in SQL using WHERE. In R we again use square brackets and write the condition in the first parameter. In Listing 16, this is on the one hand a comparison to exactly one value with a double = character and on the other through %in% and a vector with a list of product categories. The base package of R (which is automatically installed and loaded) also provides the subset function, used to filter a data frame. The dplyr provides us with the filter function. In SQL, we prevent duplicates by using DISTINCT, in R we use the unique function (Listing 16).

### WHERE

FactResellerSalesByDateSubCat[FactResellerSalesByDateSubCat$EnglishProductSubcategoryName=="Road Bikes",]

FactResellerSalesByDateSubCat[FactResellerSalesByDateSubCat$EnglishProductSubcategoryName %in% c("Road Bikes", "Mountain Bikes"),]

subset(FactResellerSalesByDateSubCat, EnglishProductSubcategoryName=="Road Bikes")

FactResellerSalesByDateSubCat %>%

select(OrderDate, EnglishProductSubcategoryName, SalesAmount) %>%

arrange(SalesAmount)%>%

filter(EnglishProductSubcategoryName=="Road Bikes")

### DISTINCT

unique(FactResellerSalesByDateSubCat)

For calculations, whether it’s as an aggregation over several records, or as an additional column, I like to reach for the dplyr package, which we’re already familiar with at this point. By using group_by, we define a grouping (identical to GROUP BY in SQL), while summarize is the actual aggregate function (which is written in SQL in the projection after SELECT). Totals (i.e. over the entire data frame) are obtained if you enter not just the column name, but add the data frame name before it. This may not be immediately obvious when you browse through Listing 17: sum (FactResellerSalesByDateSubCat$SalesAmount) calculates sales (SalesAmount) over the entire data frame, while sum(SalesAmount) contains only the sales of the current grouping (the respective product category in this case (EnglishProductCategoryName)). In SQL, we would have to fall back on sub-selects or window functions (OVER ()). Finally, in Listing 17, I supplement the output of a data frame with an extra column using the mutate function. The Margin column is part of the output in the example, but is not stored in the data frame.

### AGGREGATION

df <- data.frame(A = c(1, 1, 2, 3, 3), B = c(2, 3, 3, 5, 6))

df

df %>% group_by(A) %>% summarise(B = sum(B))

# Total & Percentage

FactResellerSalesByDateSubCat %>%

group_by(EnglishProductCategoryName) %>%

summarise(SalesAmountSum = sum(SalesAmount),

SalesAmountTotal = sum(FactResellerSalesByDateSubCat$SalesAmount),

SalesAmountPerc = sum(SalesAmount) / sum(FactResellerSalesByDateSubCat$SalesAmount) * 100

)

### CALCULATED COLUMN

# Total & Percentage

FactResellerSalesByDateSubCat %>%

mutate(Margin = SalesAmount - TotalProductCost)

To combine two data frames so that their columns mutually complement each other, we use the merge function. In SQL we would do a JOIN. The example shows two simple data frames df1 and df2, both of which contain a CustomerID column, which serves as a JOIN predicate. Depending on whether we set the all, all.x or all.y parameter to TRUE, we can achieve a full outer join, a left outer join or a right outer join. If we do not set any of these parameters and instead set the by parameter to NULL, we get a cross join (or also: Cartesian product), that is an output wherein each record from df1 is combined with each record from df2 (Listing 18).

### JOINS

df1 <- data.frame(CustomerID = c(1, 1, 2, 3, 3),

SalesAmount = c(20, 30, 30, 50, 60))

df1

df2 <- data.frame(CustomerID = c(1, 2, 4),

Name = c("x","y","z"))

df2

# Full outer join

merge(x = df1, y = df2, by = "CustomerID", all = TRUE)

# Left outer:

merge(x = df1, y = df2, by = "CustomerID", all.x = TRUE)

# Right outer:

merge(x = df1, y = df2, by = "CustomerID", all.y = TRUE)

# Cross join:

merge(x = df1, y = df2, by = NULL)

Predictions

The real strength of R (or of the packages available) is certainly machine learning. These range from simple (linear) regressions, through clustering to neural networks. In Listing 19, we take a look at the prophet package. The name accurately reflects its purpose: The package enables so-called time-series predictions, or forecasts for the future and has been developed and made available by the Data Science group of Facebook.

Once we install and load the package, we create a data frame MyProphetDF from the existing FactResellerSales data frame. This has a dual purpose: On the one hand, the rest of the script can remain the same, even if we want to make predictions for other data. On the other hand, we feed prophet with a data frame which contains only the things we need (date and value).

In the next step, we change the column names to ds and y, because prophet needs to have it done that way. Using the as.POSIXct function, we make sure that the date (which originally came from a SQL Server query) is really compatible with prophet.

The next three rows are needed only because the demo data used is distributed irregularly to only a few days per month. A daily forecast is therefore not possible. Therefore, we change all values of the date column (ds) to the first of the month, and then aggregate all values to one record per month.

Then we let prophet create a model (MyProhpetModel) based on our values from the past. Using make_future_dataframe, we get an empty shell for our forecast values (in our case, for the next six months), which is then filled by predict. Using merge, we merge the values from the past and the forecast values into a common data frame MyProphetForecast to make it easier to carry out the assessment. Using tail we display the last seven lines, i.e. the last available actual value and the six forecast values calculated by prophet.

### prophet

install.packages("prophet")

library(prophet);

MyProphetDF <- FactResellerSales[, c("OrderDate", "SalesAmount")]

colnames(MyProphetDF) <- c("ds", "y")

MyProphetDF$ds <- as.POSIXct(MyProphetDF$ds)

MyProphetDF$ds = as.Date(format(MyProphetDF$ds, "%Y-%m-01"))

library(dplyr)

MyProphetDF <- MyProphetDF %>%

group_by(ds) %>%

summarise(y = sum(y))

MyProphetModel <- prophet(df = MyProphetDF);

MyProphetFuture <- make_future_dataframe(MyProphetModel, periods = 6, freq = "m");

MyProphetForecast <- predict(MyProphetModel, MyProphetFuture);

MyProphetForecast <- merge(x = MyProphetForecast, y = MyProphetDF, by = "ds", all = TRUE)

MyProphetForecast <- merge(x = MyProphetForecast, y = MyProphetForecast[is.na(MyProphetForecast$y) == TRUE, c("ds", "yhat")], by = "ds", all = TRUE)

tail(MyProphetForecast, 7)

Visualizing

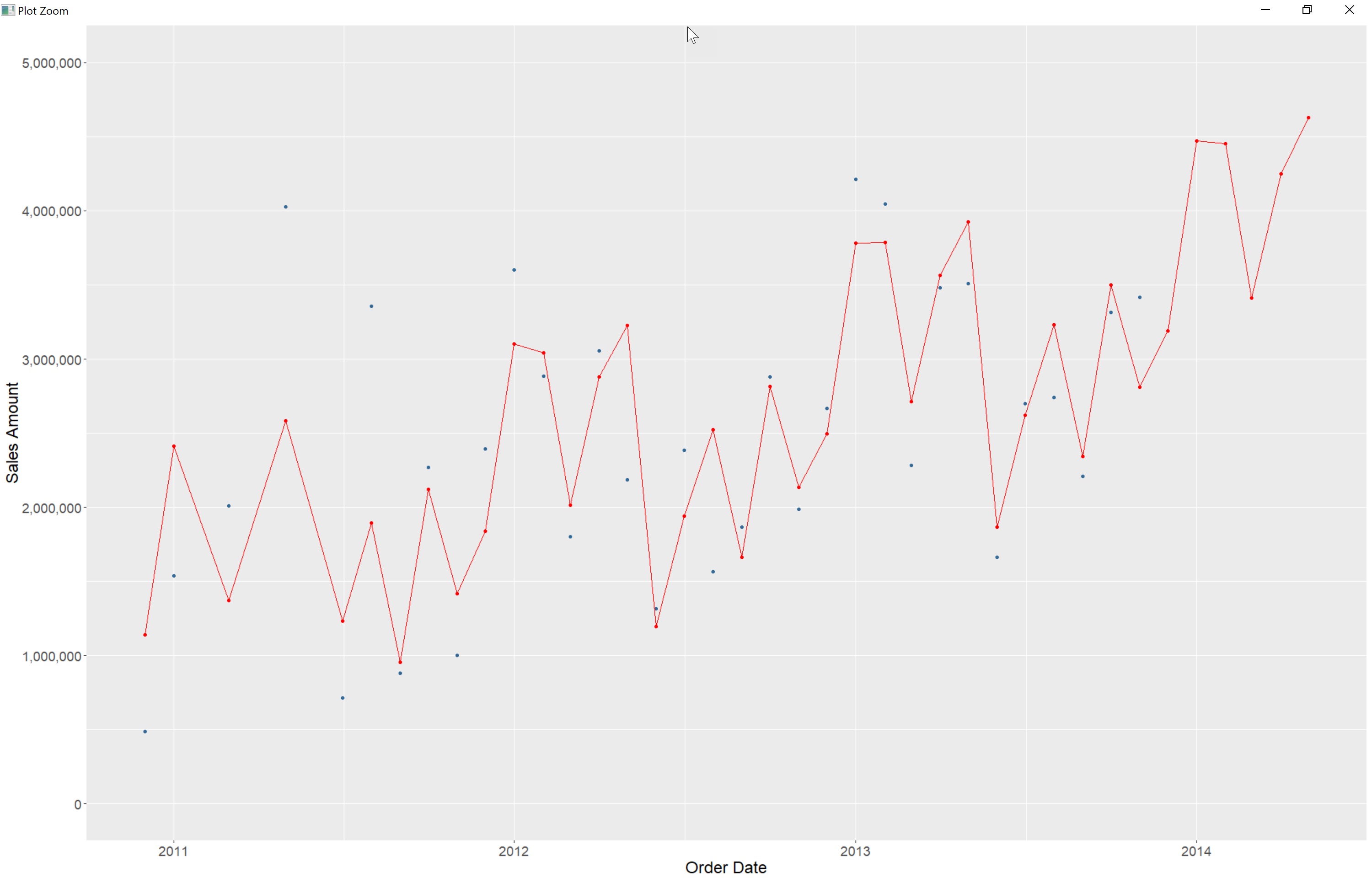

The actual sales figures and the forecast can be displayed together in one chart (Fig. 2). We use the ggplot2 package to do this. The package is characterized above all by its implementation of the “Grammar of Graphics”. So a chart is created layer by layer in a very flexible manner. As a result, a scatter diagram can for example be combined with a line chart as shown in Figure 2. The scales package helps you configure the number format as needed. In Listing 20, we call the ggplot function and pass the following parameters: first the data frame with the data (MyProphetForcast). By using aes, we determine the aesthetics, i.e. the axis values we want to apply (in our case, it is the date ds and the current sales (y), displayed in the first default color). The function geom_point() specifies that we only want to see these values as points. By calling theme() and scale_y_continuous, we set the text size and the value range of the y-axis. Then we supplement the created chart with another line (geom_line()) and points (geom_point()) with the forecast values (yhat.y) in a second color. Finally, we turn off the legend and provide a reasonable axis label.

### DISPLAY CURRENT AND FORECAST SALES VALUES AS A LINE CHART

install.packages("ggplot2")

library(ggplot2)

library(scales)

ggplot(MyProphetForecast,

aes(x = ds,

y = y,

color = 1)) +

geom_point() +

theme(text = element_text(size = 18)) +

scale_y_continuous(limits = c(0, 5000000), labels = comma) +

geom_line(y = MyProphetForecast$yhat.y, color = 2) +

geom_point(y = MyProphetForecast$yhat.y, color = 2) +

theme(legend.position = "none") +

labs (x = "Order Date",

y = "Sales Amount")

R Tutorial – Conclusion

I hope that with this article, I was able to help you get started with learning the R programming language. In any case, the big database vendors have already recognized the potential of R. For example, Microsoft has integrated an R interpreter into both the database engine (so you can run an R script on data in the SQL server and in an Azure SQL database without the need for data transfer) as well as in Power BI Desktop, which is especially interesting for visualizations.

The group of users of the R programming language (to which you may also belong from now on) and the volume of available use cases has been rapidly growing in recent years. An active community is making sure that this will continue in the future.

Get to know the ML Conference better?

Here are more sessions

→ debugging and visualizing tensorflow programs with images

→ Some Things I wish I had known about scaling Machine Learning Solutions

→ Deep Learning mit Small Data

→ Evolution 3.0: Solve your everyday Problems with genetic Algorithms