Exploiting Deep Learning: the most important bits and pieces

Machine learning in general refers to data-based methods of artificial intelligence. A computer learns a model based on sample data. Artificial intelligence plays a significant role in human-machine interaction. An example of this is the Zeno robot shown in Figure 1. It is a therapy tool for autistic children to help them express and understand their emotions better. Zeno recognizes the emotion of its counterpart based on language and facial expression and reacts accordingly. For this purpose, the recorded sensor data must be analyzed in real time through the machine learning process.

Deep learning is based on networks of artificial neurons that have input and output neurons as well as multiple layers of intermediate neurons (hidden layers). Each neuron processes an input vector based on a method similar to that of human nerve cells: A weighted sum of all input values is calculated and the result is transformed with a non-linear function, the so-called activation function. The input neurons record the data, such as unprocessed audio signals, and feed it into the neural network. The audio data passes through the intermediate neurons of all the hidden layers and is thereby processed. Then the processed signals and the calculated results are issued via the output neurons, which then deliver the final result. The parameters of the individual neurons are calculated during the training of the network using the training data. The greater the number of neurons and layers you have, the more complex the problems that you can deal with.
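The computation inside a single neuron can be sketched in a few lines of plain Java. This is a minimal illustration rather than library code: the class and method names are made up for this example, the bias term is an addition not mentioned above, and tanh is assumed as one common choice of activation function.

```java
// Minimal sketch of a single artificial neuron (hypothetical names, no library involved).
class NeuronSketch {

    // Weighted sum of all input values plus a bias term (the bias is an assumption of this sketch).
    static double weightedSum(double[] inputs, double[] weights, double bias) {
        double sum = bias;
        for (int i = 0; i < inputs.length; i++) {
            sum += inputs[i] * weights[i];
        }
        return sum;
    }

    // Non-linear activation function; tanh is assumed here as one common choice.
    static double activate(double z) {
        return Math.tanh(z);
    }

    // Output of the neuron for one input vector.
    static double forward(double[] inputs, double[] weights, double bias) {
        return activate(weightedSum(inputs, weights, bias));
    }

    public static void main(String[] args) {
        double[] x = {0.5, -1.0, 2.0};
        double[] w = {0.4, 0.3, 0.1};
        System.out.println(forward(x, w, 0.0));
    }
}
```

During training, it is the values in the weight array (and the bias) that are adjusted; the structure of the computation stays the same.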

In principle, a greater amount of data also leads to more robust models (as long as the data is not unbalanced). If there is not enough data and the selected network architecture is too complex, there is a risk of overfitting. This means that the model parameters are fitted too closely to the given training data, so that the model no longer generalizes sufficiently and performs poorly on independent test data. Learning tasks can be addressed with three learning methods: (1) supervised learning, (2) semi-supervised learning, and (3) unsupervised learning.

In supervised learning, a model is trained on a set of annotated data to approximate one or more target variables. If the target variable is continuous, we speak of regression; if the target values are discrete, we speak of classification. In classification problems with more than two classes, neural networks normally use as many neurons in the output layer as there are classes. The neuron that shows the highest activation for the given input values then represents the class that the network considers most probable.
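The decision rule described above, picking the output neuron with the highest activation, can be sketched as follows. The class name is hypothetical, and the softmax step is an assumption added for illustration: it is a common way to turn raw activations into values that sum to one, but the text above does not prescribe it.

```java
// Sketch: choosing the predicted class from the output layer activations.
class ClassPickerSketch {

    // Softmax (assumed here) maps raw activations to positive values that sum to 1.
    static double[] softmax(double[] activations) {
        double max = Double.NEGATIVE_INFINITY;
        for (double a : activations) {
            max = Math.max(max, a);
        }
        double[] result = new double[activations.length];
        double sum = 0.0;
        for (int i = 0; i < activations.length; i++) {
            result[i] = Math.exp(activations[i] - max); // subtracting max avoids overflow
            sum += result[i];
        }
        for (int i = 0; i < result.length; i++) {
            result[i] /= sum;
        }
        return result;
    }

    // Index of the largest value, i.e. the class the network considers most probable.
    static int argMax(double[] values) {
        int best = 0;
        for (int i = 1; i < values.length; i++) {
            if (values[i] > values[best]) {
                best = i;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        double[] outputLayer = {0.2, 2.5, -1.0}; // one activation per class
        System.out.println("Predicted class index: " + argMax(softmax(outputLayer)));
    }
}
```

Because softmax preserves the ordering of the activations, the predicted class is the same with or without it; it only makes the outputs easier to read as probabilities.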

Semi-supervised learning is a variant of supervised learning that uses both annotated and unannotated training data. Combining the two kinds of data can greatly improve the learning accuracy when the learning process is monitored by an expert. This learning method is also referred to as cooperative learning because the artificial neural network and the human work together. If the neural network cannot classify specific data with high confidence, it needs the help of an expert for annotation.

Unlike the other two learning methods, unsupervised learning only has input data and no associated output variables. Since there are no right or wrong answers and no one supervises the behaviour of the system, the algorithms rely on themselves to discover and present relevant structures in the data. The most commonly used unsupervised learning method is clustering. The goal of clustering algorithms is to find patterns or groupings in the dataset. The data points within one cluster are then more similar to each other than to the data in other clusters.
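To make the idea of clustering more concrete, here is a small sketch of k-means on one-dimensional data. k-means is chosen here only as one well-known clustering algorithm; the article does not name a specific one. The class name, the fixed number of iterations and the hand-picked initial centroids are assumptions of this example.

```java
// Sketch: k-means clustering on one-dimensional data (hypothetical helper class).
class KMeansSketch {

    // Index of the centroid closest to the given point.
    static int nearest(double point, double[] centroids) {
        int best = 0;
        for (int k = 1; k < centroids.length; k++) {
            if (Math.abs(point - centroids[k]) < Math.abs(point - centroids[best])) {
                best = k;
            }
        }
        return best;
    }

    // Runs a fixed number of k-means iterations and returns the final centroids.
    static double[] fit(double[] points, double[] initialCentroids, int iterations) {
        double[] centroids = initialCentroids.clone();
        for (int it = 0; it < iterations; it++) {
            double[] sums = new double[centroids.length];
            int[] counts = new int[centroids.length];
            // Assignment step: every point joins the cluster of its nearest centroid.
            for (double p : points) {
                int k = nearest(p, centroids);
                sums[k] += p;
                counts[k]++;
            }
            // Update step: every centroid moves to the mean of its assigned points.
            for (int k = 0; k < centroids.length; k++) {
                if (counts[k] > 0) { // an empty cluster keeps its previous centroid
                    centroids[k] = sums[k] / counts[k];
                }
            }
        }
        return centroids;
    }

    public static void main(String[] args) {
        double[] data = {1.0, 1.2, 0.8, 5.0, 5.2, 4.8};
        double[] centroids = fit(data, new double[] {0.0, 6.0}, 5);
        System.out.println(centroids[0] + " " + centroids[1]);
    }
}
```

No labels are involved at any point: the two groups emerge purely from the distances between the data points.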

Deep Learning with Java

Deep learning approaches are considered state of the art in various areas of machine learning, such as audio processing (speech or emotion recognition), image processing (object classification or facial recognition) and text processing (sentiment analysis or natural language processing). Neural networks are usually implemented with the help of machine learning libraries. Most established libraries, such as TensorFlow, Caffe, or Theano, were written in the Python and C++ programming languages. With Deeplearning4j [1], however, there is also a Java-based deep learning platform that can bridge the gap between the aforementioned Python-based libraries and Java.

Deeplearning4j is mostly implemented in C and C++ and uses CUDA to offload the calculations to a compatible NVIDIA graphics processor. Various architectures are available to the programmer, including CNNs, RNNs and autoencoders. Models that have been created with the libraries mentioned above can also be imported.

Essentially, this article addresses the use of deep learning for pattern recognition, such as in computer perception, using the example of learning audio feature representations using Convolutional Neural Networks (CNNs) and Long Short-Term Memory Recurrent Neural Networks (LSTM RNNs).

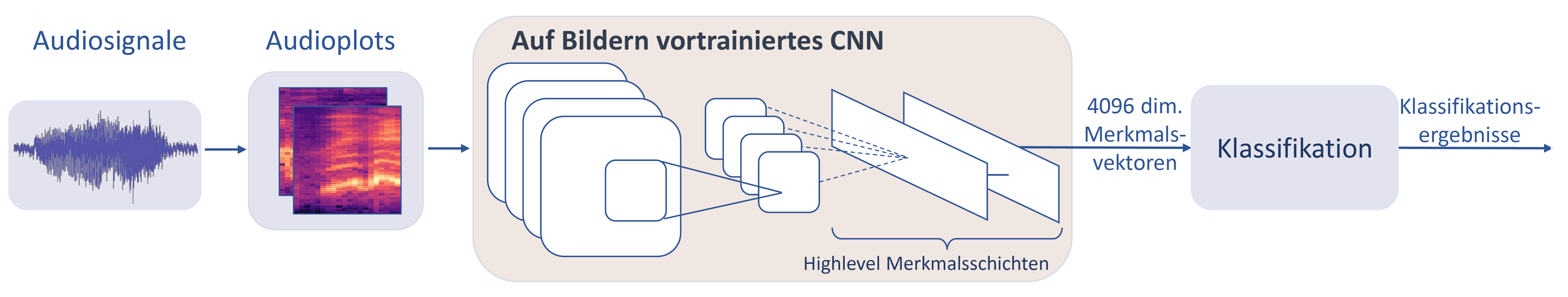

Audio plots (spectrograms) are generated from the audio signals. They are then used as input to the pre-trained CNN, and the activations of the last fully connected layer with 4096 neurons are extracted as deep spectrum features. This leads to a large feature vector that is eventually used for classification (Figure 2).

Convolutional Neural Networks

The representation of the data that is fed into the neural network is crucial. Signals, including two-dimensional signals such as image data, can be fed directly into the neural network. In the case of image data, this means the colour values of the individual pixels. However, the processing of the data is then not shift-invariant: shifting an object in an image by the width of a single pixel results in the image information taking a completely different path through the neural network. Some degree of shift invariance can be achieved by CNNs.

CNNs perform a convolution operation, weighting the vicinity of a signal value with a convolution kernel and adding the products together. The weights of the convolution kernel are learned during training and are constant over all areas of an image. For each pixel, multiple convolution operations are normally performed, creating so-called feature maps. Each feature map contains information about specific edge types or shapes in the input image, so each convolution kernel specializes in a specific local image pattern. In order to improve the shift invariance and to compress the image information that is initially blown up by a CNN layer, the described layers are normally used in combination with a subsequent max pooling layer. This layer selects only the largest activation from a (mostly) 2 x 2 vicinity and propagates it to the subsequent network layer.
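The two operations described above can be sketched in plain Java. This is an illustrative sketch with hypothetical names, not how a deep learning library implements them: it handles a single channel, uses no padding, and assumes a pooling stride of 2. As is usual in CNNs, the kernel is applied without flipping.

```java
// Sketch: one convolution and one 2 x 2 max pooling step on a single-channel image.
class ConvPoolSketch {

    // Slides the kernel over the image and sums the element-wise products at each position.
    // No padding is used, so the output is smaller than the input.
    static double[][] convolve(double[][] image, double[][] kernel) {
        int outRows = image.length - kernel.length + 1;
        int outCols = image[0].length - kernel[0].length + 1;
        double[][] featureMap = new double[outRows][outCols];
        for (int r = 0; r < outRows; r++) {
            for (int c = 0; c < outCols; c++) {
                double sum = 0.0;
                for (int i = 0; i < kernel.length; i++) {
                    for (int j = 0; j < kernel[0].length; j++) {
                        sum += image[r + i][c + j] * kernel[i][j];
                    }
                }
                featureMap[r][c] = sum;
            }
        }
        return featureMap;
    }

    // Keeps only the largest activation of every 2 x 2 block (stride 2 assumed).
    // A trailing odd row or column is dropped.
    static double[][] maxPool2x2(double[][] featureMap) {
        int outRows = featureMap.length / 2;
        int outCols = featureMap[0].length / 2;
        double[][] pooled = new double[outRows][outCols];
        for (int r = 0; r < outRows; r++) {
            for (int c = 0; c < outCols; c++) {
                double top = Math.max(featureMap[2 * r][2 * c], featureMap[2 * r][2 * c + 1]);
                double bottom = Math.max(featureMap[2 * r + 1][2 * c], featureMap[2 * r + 1][2 * c + 1]);
                pooled[r][c] = Math.max(top, bottom);
            }
        }
        return pooled;
    }

    public static void main(String[] args) {
        double[][] image = {{1, 2, 3}, {4, 5, 6}, {7, 8, 9}};
        double[][] kernel = {{1, 0}, {0, -1}}; // responds to diagonal intensity changes
        System.out.println(convolve(image, kernel)[0][0]);
    }
}
```

Because the same kernel weights are reused at every position, a pattern produces the same response wherever it appears in the image, and pooling makes that response less sensitive to small shifts.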

CNNs typically consist of a series of several convolutional and max pooling layers and are completed by one or more fully connected layers. Although CNNs are also applied to one-dimensional signals, they are most commonly found in the classification of images and have greatly improved the state of the art in this area. A standard problem is the recognition of handwritten digits, for which the error rate on the test data of the MNIST standard data set was successfully reduced to below 0.3 percent [2].

Since very large amounts of data are required for the training of complex neural networks and long computation times are associated with it, pre-trained networks have been enjoying great popularity in recent years. An example of such a network is AlexNet, which was trained on the ImageNet image database. This database consists of more than one million images in a thousand categories. The network has eight layers, of which the first five are convolutional layers. Such a neural network can be used not only for the classification of the thousand pre-trained categories but also for the classification of further objects or image classes by re-training the last layer (or last layers) with image examples from the desired categories while leaving the weights in the previous layers constant. The advantage here is that robust classifiers can be generated even with a much smaller amount of training data. Such a procedure, in which we make use of models from another domain or problem definition, is referred to as transfer learning. At the Interspeech Conference 2017, a prestigious international conference, we presented an approach that uses a CNN pre-trained for image recognition for audio classification [3]. Figure 2 shows the structure of the presented approach.

Recurrent Neural Networks

Recurrent Neural Networks (RNNs) are suitable for modeling sequential data. These are series of data points, mostly over time, such as audio and video signals, but also physiological measurements (such as electrocardiograms) or stock prices. An RNN, in contrast to feedforward networks such as CNNs, also has feedback connections from neurons to themselves or to other neurons. Each passing of the activation into another neuron is understood as a time step, so that an RNN can implicitly store data over any given period of time.

During the training of RNNs, i.e. when optimizing the weights of the neurons, the error gradients have to be propagated back through the different layers as well as over a large number of time steps. Because the gradients are multiplied in each step, they gradually vanish (vanishing gradient problem), and the respective weights are not sufficiently optimized. LSTMs solve this problem by introducing so-called LSTM cells. They were presented at the Technical University of Munich in 1997 by Sepp Hochreiter and Jürgen Schmidhuber [4]. LSTMs are able to store activations over a larger number of time steps. This is achieved through a set of multiplicative gates: input gate, output gate and forget gate, which in turn consist of neurons whose weights are trained. The gates determine which activations are passed to the cell and when (input gate), when and to what extent they are output (output gate), and whether and when the activation is cleared (forget gate). Gated Recurrent Units (GRUs) are a further development of LSTMs. They do without an output gate and are thus faster to train, yet still offer similar accuracy.
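The interplay of the three gates can be illustrated with a single LSTM cell that has a scalar input and a scalar state. This sketch follows the commonly used LSTM formulation without peephole connections, which is an assumption; the class name and the layout of the parameter arrays are made up for this example.

```java
// Sketch: one time step of a single LSTM cell with scalar input and state.
class LstmCellSketch {

    static double sigmoid(double z) {
        return 1.0 / (1.0 + Math.exp(-z));
    }

    // Weighted input of one gate; params = {input weight, recurrent weight, bias}.
    static double preActivation(double[] params, double x, double hPrev) {
        return params[0] * x + params[1] * hPrev + params[2];
    }

    // Returns {new hidden state, new cell state}.
    static double[] step(double x, double hPrev, double cPrev,
                         double[] inputGate, double[] forgetGate,
                         double[] outputGate, double[] candidate) {
        double i = sigmoid(preActivation(inputGate, x, hPrev));   // how much new content enters the cell
        double f = sigmoid(preActivation(forgetGate, x, hPrev));  // how much of the old cell state is kept
        double o = sigmoid(preActivation(outputGate, x, hPrev));  // how much of the cell state is output
        double g = Math.tanh(preActivation(candidate, x, hPrev)); // proposed new content
        double c = f * cPrev + i * g;
        double h = o * Math.tanh(c);
        return new double[] {h, c};
    }

    public static void main(String[] args) {
        // Input gate closed, forget gate open: the cell keeps its stored value.
        double[] result = step(0.7, 0.0, 0.5,
                new double[] {0, 0, -100}, new double[] {0, 0, 100},
                new double[] {0, 0, 0}, new double[] {1, 0, 0});
        System.out.println("cell state: " + result[1]);
    }
}
```

With the forget gate close to one, the cell state is carried over almost unchanged from step to step, which is what allows information to survive over many time steps.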

LSTMs and GRUs can work with different input data. Classically, in the case of audio signals, time-dependent acoustic feature vectors have been extracted. Typical features include the short-term energy in certain spectral bands or, in particular for speech signals, so-called Mel-frequency cepstral coefficients, which represent information from linguistic units in a compressed manner. In addition, the fundamental frequency of the voice or rhythmic features may be relevant for certain tasks. Alternatively, however, so-called end-to-end (E2E) learning has been increasingly in use recently. Here, the step of feature extraction is replaced by several convolutional layers of a CNN. For audio signals, the convolution kernels are one-dimensional and may also be considered as bandpass filters. The CNN layers are then preferably followed by LSTM or GRU layers to account for the temporal nature of the signal. E2E learning has been used successfully for emotion recognition in human speech and is currently the most important subject of research in automatic speech recognition (speech-to-text).
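As a small illustration of a classical time-dependent feature, the following sketch computes the short-term energy of a signal frame by frame. It is simplified on purpose: it measures the energy of the full signal rather than of individual spectral bands, and the class name, frame length and hop size are assumptions of this example.

```java
// Sketch: frame-wise short-term energy of an audio signal (full band, no windowing).
class ShortTermEnergySketch {

    // Splits the signal into frames of frameLength samples, advancing by hop samples,
    // and returns the mean squared amplitude of every complete frame.
    static double[] frameEnergies(double[] signal, int frameLength, int hop) {
        if (signal.length < frameLength) {
            return new double[0];
        }
        int frames = 1 + (signal.length - frameLength) / hop;
        double[] energies = new double[frames];
        for (int f = 0; f < frames; f++) {
            int start = f * hop;
            double sum = 0.0;
            for (int n = 0; n < frameLength; n++) {
                sum += signal[start + n] * signal[start + n];
            }
            energies[f] = sum / frameLength;
        }
        return energies;
    }

    public static void main(String[] args) {
        double[] signal = {1, 1, 1, 1, 0, 0, 0, 0};
        double[] energies = frameEnergies(signal, 4, 2);
        for (double e : energies) {
            System.out.println(e);
        }
    }
}
```

The result is one value per frame, i.e. a short sequence of feature values over time that a recurrent network such as an LSTM or GRU can process step by step.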

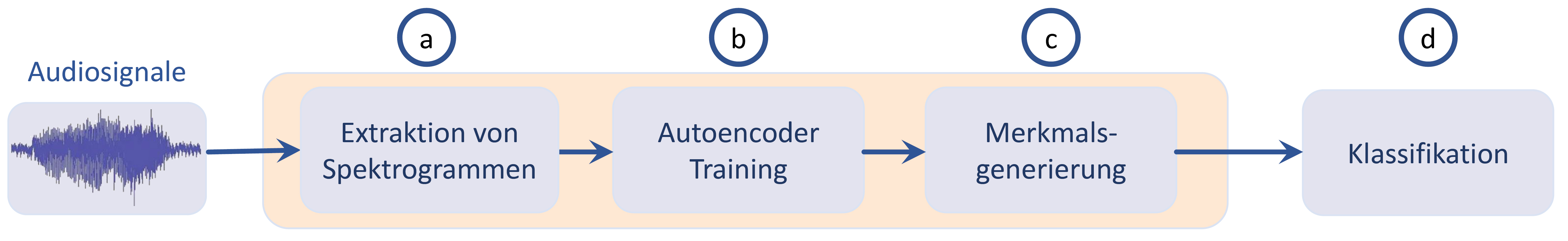

First, Mel-spectrograms are extracted from the audio files (a). Subsequently, a recurrent sequence-to-sequence autoencoder is trained on these spectrograms, which are considered to be time-dependent sequences of frequency vectors (b). After the autoencoder training, the learned representations are generated from the Mel-spectrograms for use as feature vectors for the corresponding instances (c). Finally, a classifier (d) is trained on the feature vectors.

Emotion recognition from voice recordings, as in the robot example mentioned above, is a complex task. First of all, it has to be considered that the robot has to work in a wide variety of acoustic environments, including environments that it does not yet know from the training data. As shown in Figure 3, audio features vary greatly depending on the acoustic recording conditions and the respective speakers, possibly more than they vary between different emotions. Therefore, as a first step, normally either the audio features or the audio signal itself are enhanced, that is, freed from interference. Again, artificial neural networks, mostly RNNs, can be used for this purpose. Furthermore, ambient noise detection is often necessary, that is to say, a determination of the acoustic environment, so that the system can select a model which is optimal for the respective situation or adapt the model parameters accordingly. Finally, the actual emotion recognition is performed on the preprocessed speech.

One of the latest RNN-based developments for unsupervised learning is auDeep [5], [6]. The system is a sequence-to-sequence autoencoder that learns audio representations in an unsupervised manner from extracted Mel-spectrograms. Figure 2 shows an illustration of the structure of auDeep. Mel-spectrograms are considered as a time-dependent sequence of frequency vectors in the interval $[-1; 1]^{N_{mel}}$, each of which describes the amplitudes of the $N_{mel}$ Mel-frequency bands within an audio portion. This sequence is applied to a multilayer RNN encoder which updates its hidden state in each time step based on the input frequency vector. Therefore, the last hidden state of the RNN encoder contains information about the entire input sequence. This last hidden state is transformed using a fully connected layer, and another multilayer RNN decoder is used to reconstruct the original input sequence from the transformed representation.

The encoder RNN consists of $N_{layer}$ layers, each containing $N_{unit}$ GRUs. The hidden states of the encoder GRUs are initialized to zero for each input sequence, and their last hidden states in each layer are concatenated into a one-dimensional vector. This vector can be viewed as a fixed-length representation of a variable-length input sequence, with dimensionality $N_{layer} \cdot N_{unit}$ when the encoder RNN is unidirectional and dimensionality $2 \cdot N_{layer} \cdot N_{unit}$ when it is bidirectional.
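The size of this representation follows directly from the encoder configuration, as the following sketch shows. It is not taken from the auDeep code base; the class and method names are hypothetical, and the example values (2 layers with 256 units) are chosen purely for illustration.

```java
// Sketch: size and assembly of the fixed-length representation vector.
class RepresentationSizeSketch {

    // Length of the representation for the given encoder configuration.
    static int representationSize(int numLayers, int unitsPerLayer, boolean bidirectional) {
        int directions = bidirectional ? 2 : 1;
        return directions * numLayers * unitsPerLayer;
    }

    // Concatenates the last hidden state of every layer into one flat vector.
    static double[] concatenate(double[][] lastHiddenStates) {
        int total = 0;
        for (double[] state : lastHiddenStates) {
            total += state.length;
        }
        double[] representation = new double[total];
        int offset = 0;
        for (double[] state : lastHiddenStates) {
            System.arraycopy(state, 0, representation, offset, state.length);
            offset += state.length;
        }
        return representation;
    }

    public static void main(String[] args) {
        System.out.println(representationSize(2, 256, false)); // unidirectional encoder
        System.out.println(representationSize(2, 256, true));  // bidirectional encoder
    }
}
```

The length of the input sequence does not appear in the calculation, which is exactly why recordings of different durations end up with feature vectors of the same size.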

The representation vector is then passed through a fully connected layer with hyperbolic tangent activation. The output dimension of this layer is chosen so that the hidden states of the RNN decoder can be initialized.

The RNN decoder contains the same number of layers and units as the RNN encoder. Its task is the partial reconstruction of the input Mel-spectrogram based on the representation with which the hidden states of the RNN decoder were initialized. At the first time step, a zero input is fed to the RNN decoder. During the subsequent time steps t, the expected decoder output at time t-1 is passed as an input to the RNN decoder. Stronger representations could possibly be obtained by using the actual decoder output rather than the expected output, as this reduces the amount of information available to the decoder.

The outputs of the decoder RNN are passed through a single linear projection layer with hyperbolic tangent activation at each time step in order to map the dimensionality of the decoder output to the target dimensionality $N_{mel}$. The weights of this output projection are shared across all time steps. To introduce stronger short-term dependencies between the encoder and the decoder, the RNN decoder reconstructs the reversed input sequence.
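How the decoder inputs and the reconstruction target relate to the original spectrogram can be sketched as follows. This is an illustrative reading of the description above, not code from auDeep: the spectrogram is assumed to be stored as an array of time steps, each holding one frequency vector, and the class and method names are hypothetical.

```java
// Sketch: building the reversed target sequence and the decoder inputs during training.
class DecoderSequencesSketch {

    // Target sequence: the input spectrogram with its time steps in reverse order.
    static double[][] reversedTarget(double[][] spectrogram) {
        int steps = spectrogram.length;
        double[][] target = new double[steps][];
        for (int t = 0; t < steps; t++) {
            target[t] = spectrogram[steps - 1 - t].clone();
        }
        return target;
    }

    // Decoder inputs: a zero vector at the first time step,
    // then the expected decoder output of the previous time step.
    static double[][] decoderInputs(double[][] target) {
        int steps = target.length;
        double[][] inputs = new double[steps][];
        for (int t = 0; t < steps; t++) {
            inputs[t] = (t == 0) ? new double[target[0].length] : target[t - 1].clone();
        }
        return inputs;
    }

    public static void main(String[] args) {
        double[][] spectrogram = {{1, 2}, {3, 4}, {5, 6}}; // 3 time steps, 2 Mel bands
        double[][] target = reversedTarget(spectrogram);
        double[][] inputs = decoderInputs(target);
        System.out.println(target[0][0] + " " + inputs[1][0]);
    }
}
```

With the reversed target, the first vector the decoder has to reproduce is the last one the encoder has seen, so the two ends of the sequence that are closest in time are also closest in the computation.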

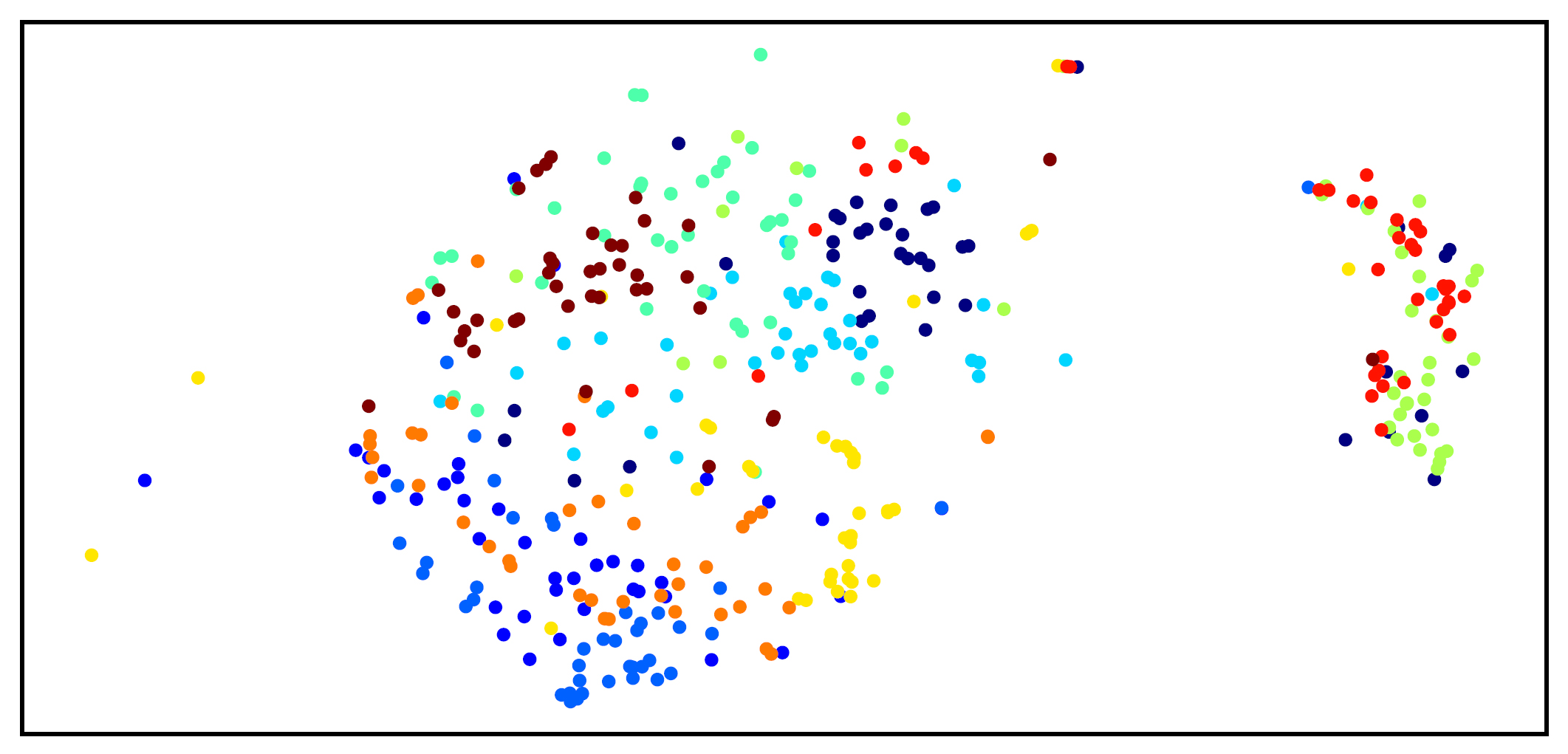

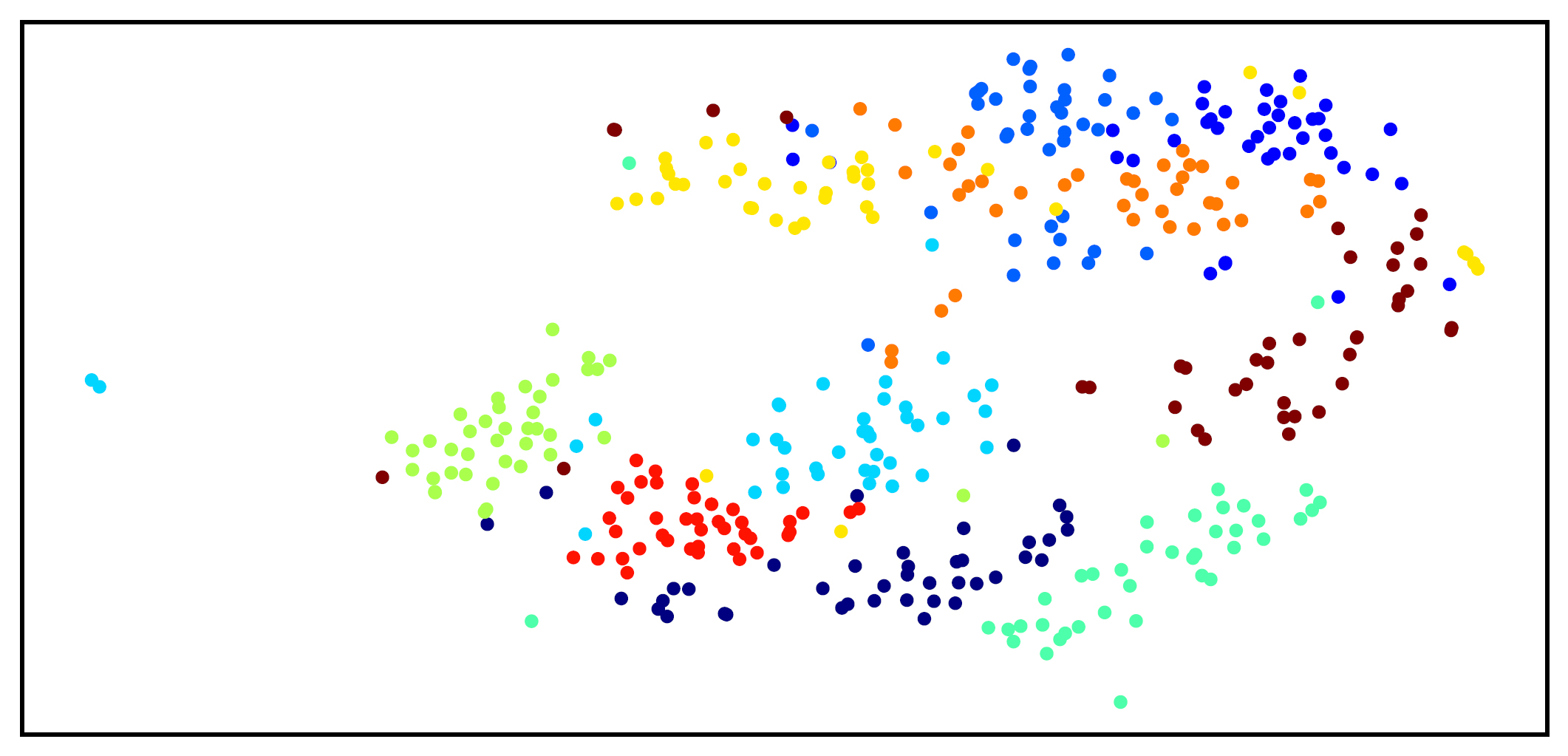

Autoencoder training is performed using the root mean square error (RMSE) between the decoder output and the target sequence as the objective function. Dropout is applied to the inputs and outputs of the recurrent layers, but not to the hidden states. Dropout means that neurons are randomly switched off during the learning iterations. This acts as a form of regularization, which should allow individual neurons to learn more independently of their neighbours. Once training is completed, the activations of the fully connected layer are extracted as the learned spectrogram representations and passed on for a decision, such as classification. Figure 4 illustrates how the autoencoder has learned new representations in an unsupervised manner from the mixed spectrograms. Finally, the learned audio representations can be classified by means of an RNN. This is illustrated below using Deeplearning4j. Deeplearning4j offers numerous libraries for the modelling of diverse neural networks.
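Before turning to Deeplearning4j, the objective function and the dropout mechanism described above can be sketched in plain Java. The names are hypothetical, and the rescaling of the surviving values (often called inverted dropout) is an assumption of this sketch rather than something stated above.

```java
import java.util.Random;

// Sketch: RMSE objective and dropout on a vector of activations.
class AutoencoderLossSketch {

    // Root mean square error over all time steps and frequency bands.
    static double rmse(double[][] output, double[][] target) {
        double sumSquares = 0.0;
        int count = 0;
        for (int t = 0; t < output.length; t++) {
            for (int k = 0; k < output[t].length; k++) {
                double diff = output[t][k] - target[t][k];
                sumSquares += diff * diff;
                count++;
            }
        }
        return Math.sqrt(sumSquares / count);
    }

    // Sets each value to zero with probability dropProbability.
    // Surviving values are divided by the keep probability (assumed "inverted dropout").
    static double[] dropout(double[] values, double dropProbability, Random rng) {
        double keep = 1.0 - dropProbability;
        double[] result = new double[values.length];
        for (int i = 0; i < values.length; i++) {
            result[i] = rng.nextDouble() < keep ? values[i] / keep : 0.0;
        }
        return result;
    }

    public static void main(String[] args) {
        double[][] output = {{1, 2}, {3, 4}};
        double[][] target = {{1, 2}, {3, 6}};
        System.out.println("RMSE: " + rmse(output, target));
    }
}
```

Dropout is only active during training; when the learned representations are extracted afterwards, all neurons are used.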

Finally, in Listing 1 we show the implementation of an RNN with Graves LSTM cells for the classification of feature vectors, which we extracted using the unsupervised method (Figure 2). To train the LSTM network, a number of hyperparameters must be set. These include, for example, the learning rate of the network, the number of input and output neurons, which correspond to the number of extracted features and the number of classes, and a number of other parameters.

import org.deeplearning4j.datasets.datavec.RecordReaderDataSetIterator;
import org.deeplearning4j.eval.Evaluation;
import org.deeplearning4j.nn.api.OptimizationAlgorithm;
import org.deeplearning4j.nn.conf.*;
import org.deeplearning4j.nn.conf.layers.GravesLSTM;
import org.deeplearning4j.nn.conf.layers.RnnOutputLayer;
import org.deeplearning4j.nn.multilayer.MultiLayerNetwork;
import org.deeplearning4j.nn.weights.WeightInit;
import org.datavec.api.records.reader.RecordReader;
import org.datavec.api.records.reader.impl.csv.CSVRecordReader;
import org.datavec.api.split.FileSplit;
import org.nd4j.linalg.dataset.api.iterator.DataSetIterator;
import org.nd4j.linalg.lossfunctions.LossFunctions.LossFunction;
import org.nd4j.linalg.activations.Activation;
import org.nd4j.linalg.api.ndarray.INDArray;
import org.nd4j.linalg.dataset.DataSet;
import java.io.File;

// Note: this listing targets the builder API of older Deeplearning4j releases (0.9.x);
// later releases changed or removed several of the calls used here.
public class Main {

    public static void main(String[] args) throws Exception {
        int batchSize = 128; // number of examples per mini-batch

        // Load training data (skip one header line, comma-separated values)
        RecordReader csvTrain = new CSVRecordReader(1, ",");
        csvTrain.initialize(new FileSplit(new File("src/main/resources/train.csv")));
        // The label is in column 4096 (after the 4096 features); there are 2 classes
        DataSetIterator iteratorTrain = new RecordReaderDataSetIterator(csvTrain, batchSize, 4096, 2);

        // Load evaluation data
        RecordReader csvTest = new CSVRecordReader(1, ",");
        csvTest.initialize(new FileSplit(new File("src/main/resources/eval.csv")));
        DataSetIterator iteratorTest = new RecordReaderDataSetIterator(csvTest, batchSize, 4096, 2);

        //****LSTM hyperparameters****
        int anzInputs = 4096;      // number of extracted features
        int anzOutputs = 2;        // number of classes
        int anzHiddenUnits = 200;  // number of hidden units in each LSTM layer
        int backPropLaenge = 128;  // length for truncated backpropagation through time
        int anzEpochen = 32;       // number of training epochs
        double lrDecayRate = 10;   // decay rate of the learning rate

        //****Network configuration****
        MultiLayerConfiguration netzKonfig = new NeuralNetConfiguration.Builder()
                .optimizationAlgo(OptimizationAlgorithm.STOCHASTIC_GRADIENT_DESCENT).iterations(1)
                .learningRate(0.001)
                .seed(234)
                .l1(0.01) // L1 regularization coefficient
                .l2(0.01) // L2 regularization coefficient
                .regularization(true)
                .dropOut(0.1)
                .weightInit(WeightInit.RELU)
                .updater(Updater.ADAM)
                .learningRateDecayPolicy(LearningRatePolicy.Exponential).lrPolicyDecayRate(lrDecayRate)
                .list()
                .layer(0, new GravesLSTM.Builder().nIn(anzInputs).nOut(anzHiddenUnits)
                        .activation(Activation.TANH).build())
                .layer(1, new GravesLSTM.Builder().nIn(anzHiddenUnits).nOut(anzHiddenUnits)
                        .activation(Activation.TANH).build())
                .layer(2, new GravesLSTM.Builder().nIn(anzHiddenUnits).nOut(anzHiddenUnits)
                        .activation(Activation.TANH).build())
                .layer(3, new RnnOutputLayer.Builder(LossFunction.MEAN_ABSOLUTE_ERROR)
                        .activation(Activation.RELU)
                        .nIn(anzHiddenUnits).nOut(anzOutputs).build())
                .backpropType(BackpropType.TruncatedBPTT)
                .tBPTTForwardLength(backPropLaenge).tBPTTBackwardLength(backPropLaenge)
                .pretrain(true).backprop(true)
                .build();

        MultiLayerNetwork modell = new MultiLayerNetwork(netzKonfig);
        modell.init(); // initialization of the model

        // Train the model for the configured number of epochs
        for (int n = 0; n < anzEpochen; n++) {
            System.out.println("Epoch number: " + (n + 1));
            modell.fit(iteratorTrain);
        }

        // Evaluation of the model
        System.out.println("Evaluation of the trained model ...");
        Evaluation eval = new Evaluation(anzOutputs);
        while (iteratorTest.hasNext()) {
            DataSet data = iteratorTest.next();
            INDArray features = data.getFeatureMatrix();
            INDArray labels = data.getLabels();
            INDArray predicted = modell.output(features, false); // false: inference mode
            eval.eval(labels, predicted);
        }

        //****Show evaluation results****
        System.out.println("Accuracy:" + eval.accuracy());
        System.out.println(eval.confusionToString()); // confusion matrix
    }
}

Exploiting Deep Learning – Conclusion

Because of their special capabilities, deep learning methods will continue to increasingly dominate machine learning research and practice in the years to come. In recent years, a large number of companies specializing in deep learning have been founded, while large IT companies such as Google and Apple are hiring experienced experts on a large scale. In research, deep learning has meanwhile displaced a large part of classical signal processing and now dominates the field of data analysis.

Developers will increasingly have to deal with the integration of deep learning models. In the area of Java development, the toolkit Deeplearning4j presented earlier is a promising framework. This article showed the application of deep learning to audio analysis as one example. Following the same principle and code, a multitude of related problems can be solved elegantly and efficiently. Artificial intelligence has again become the focus of general interest thanks to deep learning. It remains to be seen what kind of new solutions and applications we will experience in the near future.

Links & literature

[1] Deeplearning4j: https://deeplearning4j.org/

[2] http://yann.lecun.com/exdb/mnist/

[3] Amiriparian, Shahin; Gerczuk, Maurice; Ottl, Sandra; Cummins, Nicholas; Freitag, Michael; Pugachevskiy, Sergey; Baird, Alice; Schuller, Björn: "Snore Sound Classification Using Image-based Deep Spectrum Features", in: Proceedings INTERSPEECH 2017, 18th Annual Conference of the International Speech Communication Association, Stockholm, pp. 3512–3516, ISCA, August 2017

[4] Hochreiter, Sepp; Schmidhuber, Jürgen: "Long Short-Term Memory", Neural Computation, 9 (8), pp. 1735–1780, 1997

[5] Amiriparian, Shahin; Freitag, Michael; Cummins, Nicholas; Schuller, Björn: "Sequence to Sequence Autoencoders for Unsupervised Representation Learning from Audio", in: Proceedings of the Detection and Classification of Acoustic Scenes and Events 2017 Workshop (DCASE2017), Munich, pp. 17–21, IEEE, November 2017

[6] auDeep: https://github.com/auDeep/auDeep/
