Machine Learning and the Apache Kafka Ecosystem are an excellent combination for training and deploying scalable analytical models. Here, Kafka becomes the central nervous system in the ML architecture, feeding analytical models with data, training them, using them for forecasting and monitoring them. This yields enormous advantages:

- Data pipelines are simplified

- The implementation of analytical models is separated from their maintenance

- Real time or batch can be used as needed

- Analytical models can be applied in a high-performance, scalable and business-critical environment

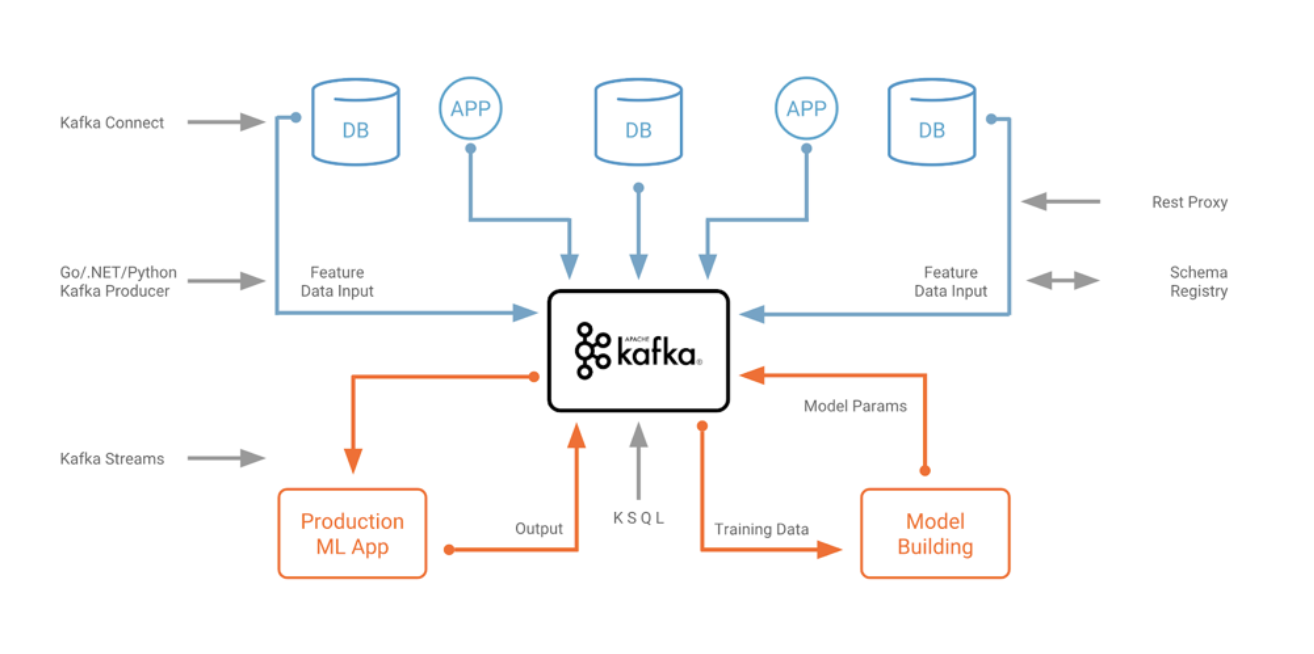

Since customers expect information in real time today, the challenge for companies is to respond to customer inquiries and react to critical moments before it’s too late. Batch processing is no longer sufficient here; the reaction must be immediate, or better yet – proactive. This is the only way you can stand out from the competition. Machine learning can improve existing business processes and automate data-driven decisions. Examples of such use cases include fraud detection, cross-selling or the predictive maintenance of IoT devices. The architecture of such business-critical real-time applications which use the Apache Kafka Ecosystem as a scalable and reliable central nervous system for your data can be presented as shown in Figure 1.

Deployment of analytical models

Generally speaking, a machine learning life cycle consists of two parts:

- Training the model: In this step, we load historic data into an algorithm to learn patterns from the past. The result is an analytical model.

- Creating forecasts/predictions: In this step, we use the analytical model to prepare forecasts about new events based on the learned pattern.
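
The two steps can be sketched in a few lines of plain Python. This is only an illustration under simple assumptions: the "algorithm" is a mean-and-standard-deviation threshold rather than a real ML framework, and the sensor readings and the function names `train` and `predict` are made up for this example.

```python
from statistics import mean, stdev


def train(historic_values, k=3.0):
    """Training: learn a simple pattern (mean and spread) from historic data."""
    mu = mean(historic_values)
    sigma = stdev(historic_values)
    # The returned dictionary plays the role of the analytical model.
    return {"mean": mu, "threshold": k * sigma}


def predict(model, value):
    """Prediction: flag a new event that deviates too far from the learned mean."""
    return abs(value - model["mean"]) > model["threshold"]


historic = [20.1, 19.8, 20.3, 20.0, 19.9, 20.2]  # made-up historic readings
model = train(historic)

print(predict(model, 20.1))  # a typical reading
print(predict(model, 35.0))  # a reading far outside the learned range
```

The model is created once from historic data and then applied to each new event, which is the same split that the deployment options below build on.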

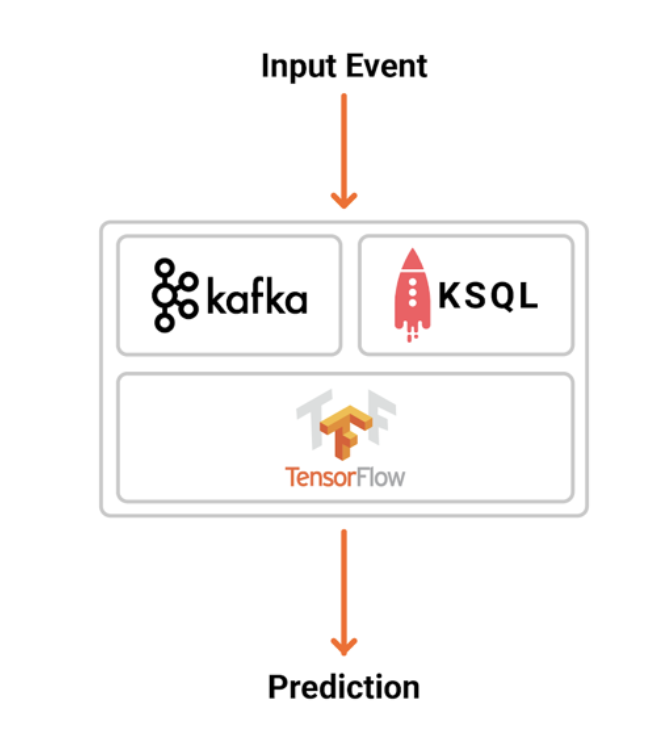

Machine learning is a continuous process in which the analytical model is constantly improved and redeployed over time. Forecasts can be deployed within an application or microservice in various ways. One possibility is to embed an analytical model directly into a stream processing application, such as an application that uses Kafka Streams. For example, you can use the TensorFlow Java API to load models and apply them in real time (Fig. 2).

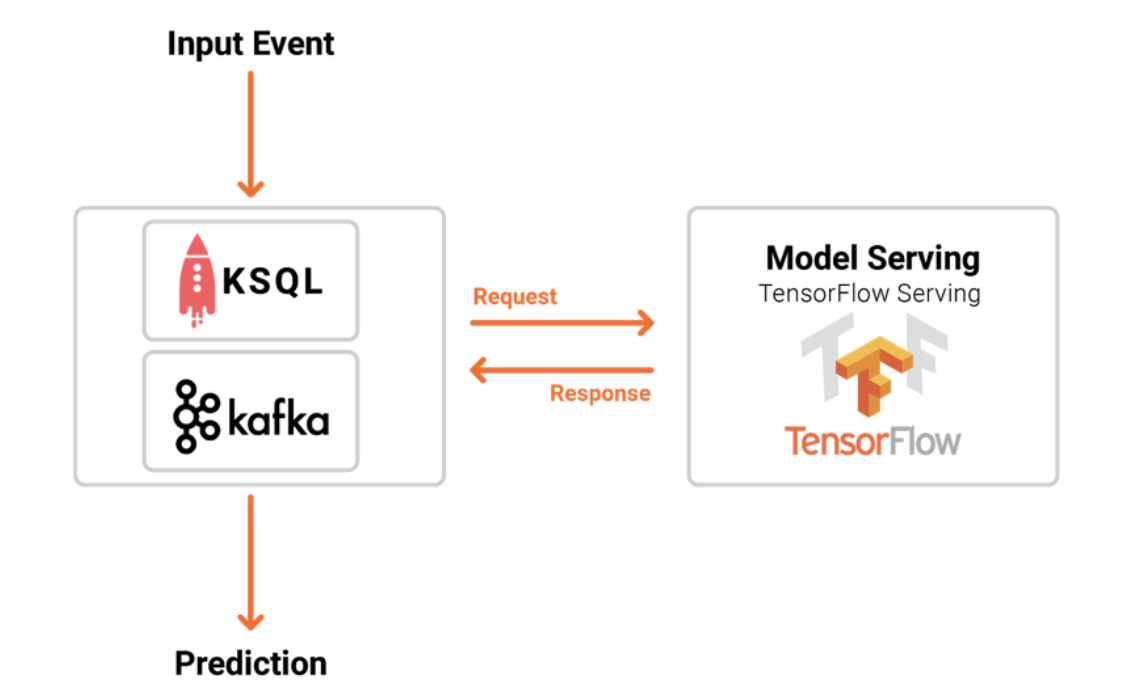

Alternatively, you can deploy the analytical models to a dedicated model server (such as TensorFlow Serving) and use remote procedure calls (RPC) from the streaming application for the service (for example, with HTTP or gRPC) (Fig. 3).

Both options have their advantages and disadvantages. The advantages of a dedicated model server include:

- Easy integration into existing technologies and business processes

- The concept is easier to understand if you’re coming from the “non-streaming world”

- Later migration to “real” streaming is possible

- Model management incorporates the use of various models, including versioning, A/B testing, etc.

On the other hand, here are some disadvantages of a dedicated model server:

- Often linked to specific ML technologies

- Often linked to a specific cloud provider (vendor lock-in)

- Higher latency

- More complex security concepts (remote communication via firewalls and authorization management)

- No offline inference (devices, edge processing, etc.)

- Couples the availability, scalability, and latency/throughput of your stream processing application to the SLAs of the RPC interface

- Side effects (e.g. when a request fails because of network issues) that are not covered by Kafka's processing guarantees (such as exactly-once processing)
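
The difference between the two deployment options can be sketched as follows. This is a plain-Python illustration without a Kafka client or a real model server: `embedded_model`, `remote_model`, the `server_up` flag and the fraud rule are hypothetical stand-ins chosen for this example.

```python
class ModelServerUnavailable(Exception):
    """Raised by the stand-in RPC client when the remote call fails."""


def embedded_model(event):
    """Stand-in for a model loaded inside the streaming application."""
    return event["amount"] > 1000  # hypothetical fraud rule


def remote_model(event, server_up=True):
    """Stand-in for an HTTP/gRPC request to a dedicated model server."""
    if not server_up:
        raise ModelServerUnavailable("model server did not respond")
    return event["amount"] > 1000


def process(events, score):
    """Score every event; record None when no prediction could be obtained."""
    results = []
    for event in events:
        try:
            results.append((event["id"], score(event)))
        except ModelServerUnavailable:
            # The application has to decide what to do here: retry, skip or fall back.
            results.append((event["id"], None))
    return results


events = [{"id": 1, "amount": 50}, {"id": 2, "amount": 5000}]

embedded = process(events, embedded_model)
degraded = process(events, lambda e: remote_model(e, server_up=False))

print(embedded)
print(degraded)
```

With the embedded model, every event gets a prediction as long as the application itself is running. With the remote call, the application additionally depends on the availability of the model server and needs its own failure handling.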


How analytical models are deployed has to be decided for each scenario individually, taking latency and security considerations into account. Some of the trends discussed below, such as hybrid architectures or low-latency requirements, also affect model deployment: for example, do you use models locally in edge components such as sensors or mobile devices to process personal information, or do you integrate external AutoML services to take advantage of the benefits and scalability of cloud services? To make the best choice for your use case and your architecture, it is very important to understand both options and the associated trade-offs.

Model deployment in Kafka applications

Kafka applications are event-based and use event streams to continuously process incoming data. If you use Kafka, you can natively embed an analytical model in a Kafka Streams or KSQL application. There are several examples of Kafka Streams microservices that incorporate models natively created with TensorFlow, H2O, or Deeplearning4j.

For architectural, security or organizational reasons, it is not always possible or feasible to embed analytical models directly. In that case, you can use RPC to perform model inference from your Kafka application (while considering the pros and cons described above).

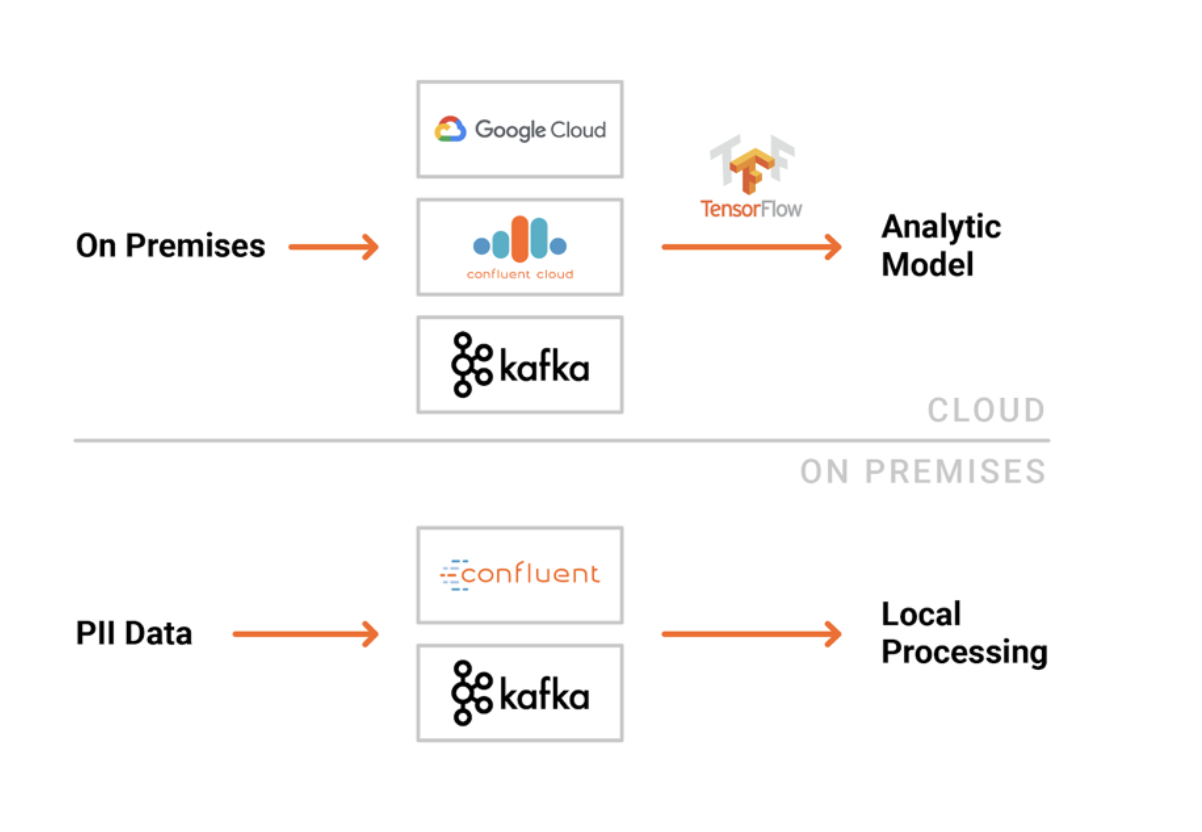

Hybrid cloud and on-premise architectures

Hybrid cloud architectures are often the technology of choice for machine learning infrastructure. Training can be conducted with large amounts of historic data in the public cloud or in a central data lake within an in-house data center. The model deployment needed to prepare forecasts can be done anywhere.

In many scenarios it makes perfect sense to use the scalability and adaptability of public clouds. For example, large compute instances can be created to train a neural network for a few days and then simply shut down again. Pay-as-you-go is a perfect model, especially for deep learning.

In the cloud, you can use specialized processing units that would be costly to run in your own data center and would often sit unused. For example, Google’s TPU (Tensor Processing Unit) is an application-specific integrated circuit (ASIC) engineered from the ground up for machine learning. TPUs do one thing well: matrix multiplication – the heart of deep learning – for the training of neural networks.

If you need to keep data out of the public cloud, or if you want to build your own ML infrastructure for larger teams or departments in your own data center, you can buy specially designed hardware/software combinations for deep learning, such as the Nvidia DGX platforms.

Regardless of where the model was trained, it can be used to make forecasts wherever you need them: in the public cloud, on-site in your data centers or on edge devices such as Internet of Things (IoT) or mobile devices. However, edge devices often have higher latency, limited bandwidth, or poor connectivity.

Building hybrid cloud architectures with Kafka

Apache Kafka allows you to build a cloud-independent infrastructure, including both multi-cloud and hybrid architectures, without being tied to specific cloud APIs or proprietary products. Figure 4 shows an example of a hybrid Kafka infrastructure used to train and deploy analytical models.

Apache Kafka components such as Kafka Connect can be used for data ingestion, while Kafka Streams or KSQL are good for preprocessing data. Model deployment can also be done within a Kafka client application written in Java, .NET or Python, or within Kafka Streams or KSQL. Quite often, the entire monitoring of the ML infrastructure is carried out with Apache Kafka. This includes technical metrics such as latency and project-related information such as model accuracy.
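
Monitoring model accuracy can be sketched as a rolling window over recent predictions. This is a minimal stand-alone example: the class name `AccuracyMonitor`, the window size and the sample values are assumptions made for illustration. In a Kafka setup, the resulting values would typically be written to a topic, which this sketch leaves out.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks the share of correct predictions over the most recent events."""

    def __init__(self, window=100):
        # deque with maxlen drops the oldest outcome automatically.
        self.outcomes = deque(maxlen=window)

    def record(self, predicted, actual):
        """Store whether a prediction matched the actual outcome."""
        self.outcomes.append(predicted == actual)

    def accuracy(self):
        """Return the accuracy over the window, or None if nothing was recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)


monitor = AccuracyMonitor(window=3)
for predicted, actual in [(1, 1), (0, 1), (1, 1), (0, 0)]:
    monitor.record(predicted, actual)

print(monitor.accuracy())  # accuracy over the last three predictions only
```

A falling value in such a window is one possible signal that a model needs to be retrained and redeployed.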

Data streams frequently come from other data centers or clouds. A common scenario is to use a Kafka replication tool, such as MirrorMaker or Confluent Replicator, to replicate the data from the source Kafka clusters in a reliable and scalable manner to the analysis environment.

The development, configuration and operation of a reliable and scalable Kafka cluster always requires a solid understanding of distributed systems and experience in dealing with them. Therefore, you can alternatively use a cloud service like Confluent Cloud, which offers Kafka-as-a-Service. Here, only the Kafka client (such as Kafka’s Java API, Kafka Streams or KSQL) is created by the developer and the Kafka server side is used as an API. In in-house data centers, tools such as Confluent Operator for operating on Kubernetes distributions and Confluent Control Center for the monitoring, control and management of Kafka clusters are helpful.

Streaming analysis in real time

A primary requirement in many use cases is to process information while it is still current and relevant. This applies to all areas of an ML infrastructure:

- Training and optimization of analytical models with up-to-date information

- Forecasting new events in real time (often within milliseconds or seconds)

- Monitoring of the entire infrastructure for model accuracy, infrastructure errors, etc.

- Security-relevant tracking information such as access control, auditing or origin

The simplest and most reliable way to process data in a timely manner is to build real-time streaming analytics natively on Apache Kafka, using Kafka clients for languages such as Java, .NET, Go, or Python. In addition to using a Kafka client API (i.e. Kafka Producer and Consumer), you should also consider Kafka Streams, the stream processing framework for Apache Kafka. This is a Java library that enables simple and complex stream processing within Java applications.

For those of you who do not want to write Java or Scala or cannot do so, there is KSQL, the streaming SQL engine for Apache Kafka. It can be used to create stream-processing applications that are expressed in SQL. KSQL is available as an open source download at https://hub.docker.com/r/confluentinc/cp-ksql-cli/.

KSQL and Machine Learning for preprocessing and model deployment

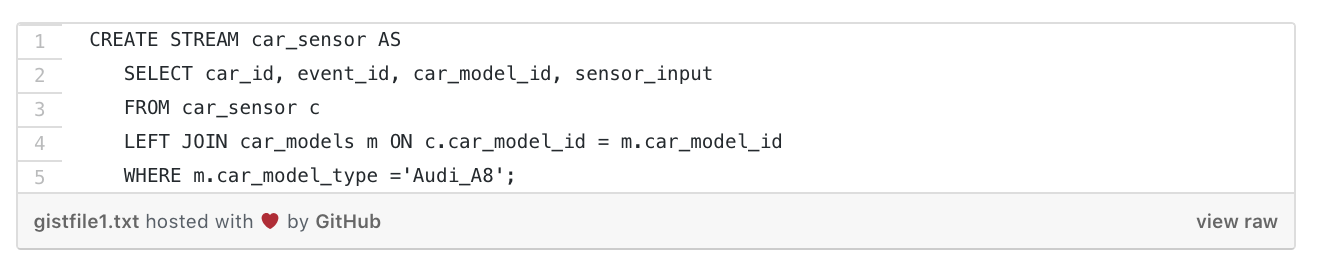

Processing streaming data with KSQL makes data preparation for machine learning easy and scalable. SQL statements can be used to perform filtering, enrichment, transformation, feature engineering or other tasks. Figure 5 shows one example of how to filter sensor data from multiple vehicle types for a particular vehicle model, so that it can be used for further processing or analysis in real time.
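
The KSQL statement itself is only available in the figure. As a rough stand-in, the same kind of filtering and a simple derived feature can be sketched in plain Python. The field names (`vehicle_id`, `model`, `engine_temp`, `ambient_temp`) and the sample events are hypothetical and not taken from the original example.

```python
def filter_by_model(events, vehicle_model):
    """Keep only the sensor events that belong to one vehicle model."""
    for event in events:
        if event.get("model") == vehicle_model:
            yield event


def add_temp_delta(events):
    """Feature engineering: add the difference between engine and ambient temperature."""
    for event in events:
        enriched = dict(event)  # copy, so the incoming event is not modified
        enriched["temp_delta"] = event["engine_temp"] - event["ambient_temp"]
        yield enriched


sensor_events = [
    {"vehicle_id": "v1", "model": "A", "engine_temp": 90.0, "ambient_temp": 20.0},
    {"vehicle_id": "v2", "model": "B", "engine_temp": 85.0, "ambient_temp": 25.0},
    {"vehicle_id": "v3", "model": "A", "engine_temp": 110.0, "ambient_temp": 30.0},
]

selected = list(add_temp_delta(filter_by_model(sensor_events, "A")))
for event in selected:
    print(event["vehicle_id"], event["temp_delta"])
```

Both functions are generators, so they process one event at a time, which mirrors how a continuous query handles a stream rather than a finished table.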

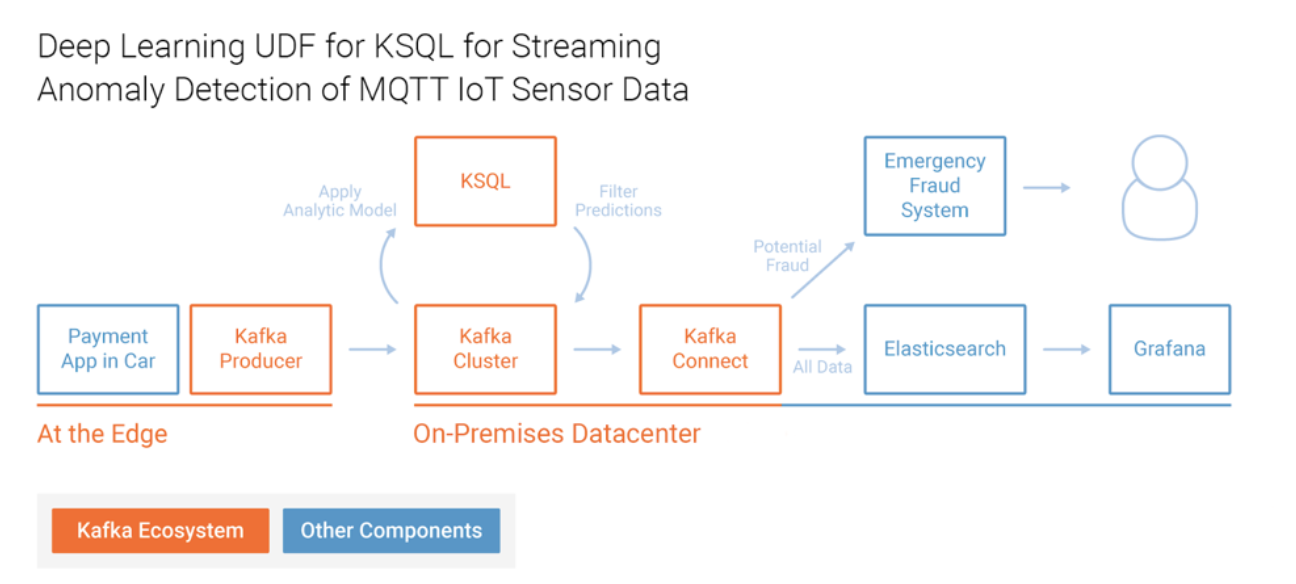

ML models can easily be embedded in KSQL by creating a user-defined function (UDF). In the detailed example “Deep Learning UDF for KSQL for Streaming Anomaly Detection of MQTT IoT Sensor Data”, a neural network is used for sensor analysis, or more specifically as an auto-encoder, to detect anomalies (Fig. 6).

In this example, KSQL continuously processes millions of events from networked vehicles through MQTT integration to the Kafka cluster. MQTT is a publish/subscribe messaging protocol which was developed for constrained devices and unreliable networks. It is often used in combination with Apache Kafka to integrate IoT devices with the rest of the enterprise. The auto-encoder is used for predictive maintenance.

Real-time analysis of this vehicle sensor data makes it possible to send anomalies to a warning or emergency system in order to react before the engine fails. Other use cases for intelligent networked cars include optimized route guidance and logistics planning, the sale of new features and functions for a better digital driving experience, and loyalty programs that correspond directly with restaurants and roadside businesses.

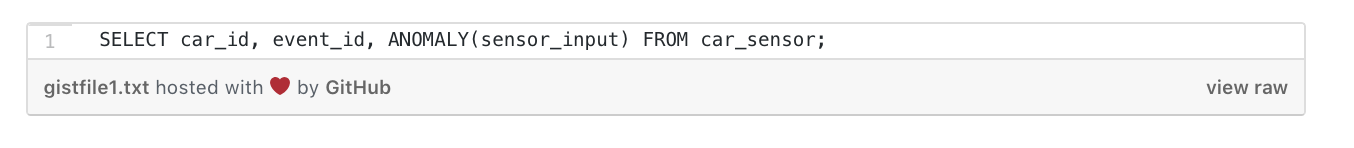

Even if a KSQL UDF requires a bit of code, it only needs to be written by the developer once. After that, the end user can easily use the UDF within their KSQL statements like any other integrated function. In Figure 7, you can see the KSQL query from our example using the ANOMALY UDF, which uses the TensorFlow model in the background.
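
The idea behind such an anomaly function can be sketched in plain Python: an auto-encoder compresses its input and reconstructs it, and a large reconstruction error marks the input as unusual. This is not the UDF or the TensorFlow model from the example. The fixed weights `W`, the two-value input and the threshold are assumptions chosen so that the sketch stays small and can be checked by hand.

```python
import math

# Hypothetical fixed weights of a tiny linear auto-encoder for two sensor
# readings that normally move together: project onto the direction (1, 1)/sqrt(2).
W = (1 / math.sqrt(2), 1 / math.sqrt(2))


def reconstruct(x):
    """Encode two readings into one value, then decode back to two values."""
    code = W[0] * x[0] + W[1] * x[1]   # encoder: 2 values -> 1 value
    return (W[0] * code, W[1] * code)  # decoder: 1 value -> 2 values


def reconstruction_error(x):
    """Euclidean distance between the input and its reconstruction."""
    r = reconstruct(x)
    return math.sqrt((x[0] - r[0]) ** 2 + (x[1] - r[1]) ** 2)


def anomaly(x, threshold=0.5):
    """Return True if the input cannot be reconstructed well enough."""
    return reconstruction_error(x) > threshold


print(anomaly((1.0, 1.1)))  # readings that follow the usual pattern
print(anomaly((1.0, 3.0)))  # readings that break the usual pattern
```

A real auto-encoder learns its weights from historic sensor data instead of using fixed ones, but the decision rule on the reconstruction error works the same way.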

Depending on preferences and requirements, both KSQL and Kafka Streams are perfect for ML infrastructures when it comes to preprocessing streaming data and performing model inference. KSQL lowers the barrier to entry and allows you to implement streaming applications with simple SQL statements, rather than having to write source code.

Scalable and flexible platforms for Machine Learning

Technology giants are typically several years ahead of traditional companies. They have already built what others (have to) build today or tomorrow. Machine learning platforms are no exception here.

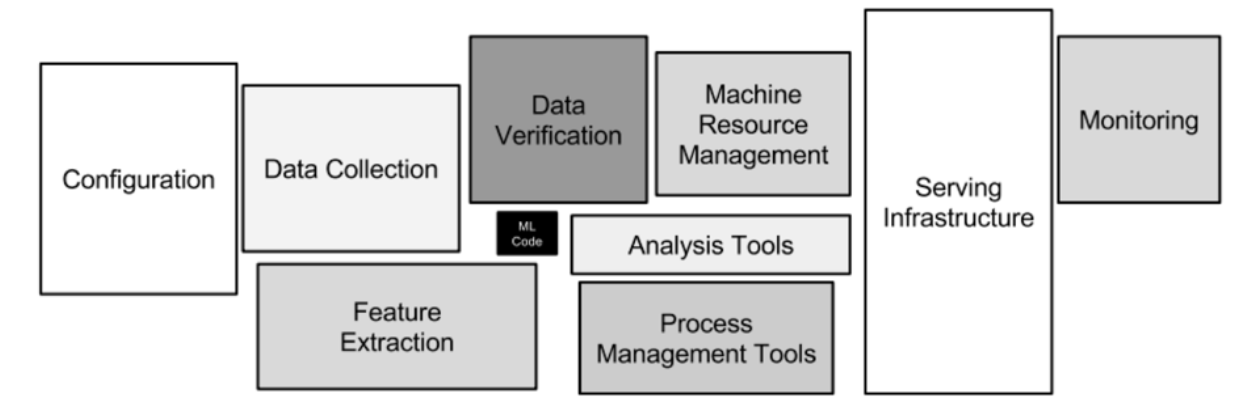

The “Hidden Technical Debt in Machine Learning Systems” paper explains why creating and deploying an analytical model is far more complicated than just writing some machine learning code using technologies like Python and TensorFlow. You also have to take care of things like data collection, feature extraction, infrastructure deployment, monitoring and other tasks – all this with the help of a scalable and reliable infrastructure (Fig. 8).

In addition, technology giants are showing that a single machine learning/deep learning framework such as TensorFlow is not sufficient for their use cases, and that machine learning is a fast-growing field. A flexible ML architecture must support different technologies and frameworks. It also needs to be fully scalable and reliable when used for essential business processes. That’s why many technology giants have developed their own machine learning platforms, such as Michelangelo by Uber, Meson by Netflix, and the fraud detection platform by PayPal. These platforms enable businesses to build and monitor powerful, scalable analytical models, while remaining flexible in choosing the right technology for each application.

Apache Kafka as a central nervous system

One of the reasons for Apache Kafka’s success is its rapid adoption and acceptance by many technology companies. Almost all major Silicon Valley companies such as LinkedIn, Netflix, Uber or eBay blog and speak of their use of Kafka as an event-driven central nervous system for their business-critical applications. Many of them concentrate on the distributed streaming platform for messaging, but components such as Kafka Connect, Kafka Streams, REST Proxy, Schema Registry and KSQL are being used more and more.

As explained above, Kafka is a logical addition to an ML platform for training, monitoring, deployment, inference, configuration, A/B testing, etc. That’s probably why Uber, Netflix, and many others already use Kafka as a key component in their machine learning infrastructure.

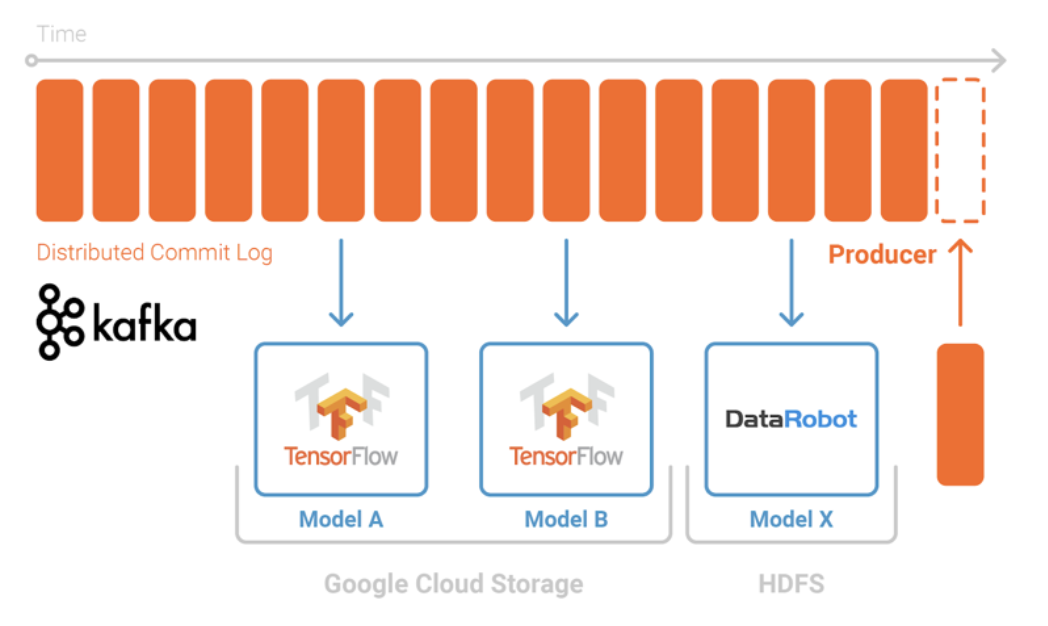

Kafka makes deploying machine learning tasks easy and uncomplicated, without the need for another large data cluster. And yet it is flexible in terms of integration with other systems. If you rely on Hadoop for your batch data processing or want to do your ML processing using Spark or AWS SageMaker, for example, all you need to do is connect them to Kafka via Kafka Connect. With the Kafka ecosystem as the basis of an ML infrastructure, nobody is forced to use only one specific technology (Fig. 9).

You can use different technologies for analytical model training, deploy models for any Kafka native or external client application, develop a central monitoring and auditing system, and finally be prepared and open for future innovations in machine learning. Everything is scalable, reliable and fault-tolerant, since the decoupled systems are loosely linked to Apache Kafka as the nervous system.

In addition to TensorFlow, the above example also uses the DataRobot AutoML framework to automatically train different analytical models and deploy the model with optimal accuracy. AutoML is an emerging field, as it automates many complex steps in model training such as hyperparameter tuning or algorithm selection. In terms of integration with the Kafka ecosystem, AutoML does not differ from other ML frameworks; both work well together.

Conclusion

Apache Kafka can be viewed as the key to a flexible and sustainable infrastructure for modern machine learning. The ecosystem surrounding Apache Kafka is the perfect complement to an ML architecture. Choose the components you need to build a scalable, reliable platform that is independent of any specific on-premise/cloud infrastructure or ML technology.

You can build an integration pipeline for model training and use the analytical models for real-time forecasting and monitoring within Kafka applications. This does not change with new trends such as hybrid architectures or AutoML. Quite the contrary: with Kafka as the central but distributed and scalable layer, you remain flexible and ready for new technologies and concepts of the future.
